What is the role of kafka consumer group

The role of kafka consumer group: 1. Load balancing; 2. Fault tolerance; 3. Broadcast mode; 4. Flexibility; 5. Automatic failover and leader election; 6. Dynamic scalability; 7 , Sequence guarantee; 8. Data compression; 9. Transaction support. Detailed introduction: 1. Load balancing. Consumer groups are the core mechanism for realizing Kafka load balancing. By organizing consumers into groups, the partitions of the topic can be assigned to multiple consumers in the group, thereby achieving load balancing; 2. Fault tolerance, the design of consumer groups allows for fault tolerance and more.

The operating system for this tutorial: Windows 10 system, DELL G3 computer.

A Kafka consumer group is a group of consumer instances that share the same group.id. The role of the consumer group is mainly reflected in the following aspects:

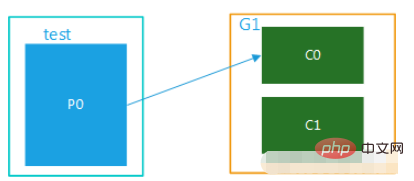

1. Load balancing: The consumer group is the core mechanism for realizing Kafka load balancing. By organizing consumers into groups, partitions of a topic can be assigned to multiple consumers within the group, thereby achieving load balancing. In this way, each consumer instance only needs to process messages from a part of the partitions, improving overall consumption performance.

2. Fault tolerance: The design of the consumer group allows for fault tolerance. If a consumer in the group fails, other consumers can take over its partition, ensuring that messages are not missed and preventing the failure of one consumer from affecting the normal operation of the entire system.

3. Broadcast mode: By creating multiple consumer groups, the broadcast mode of messages can be implemented. In this mode, each consumer group receives all messages from the topic, thus achieving one-to-many messaging.

4. Flexibility: By adjusting the configuration of the consumer group, different consumption models can be implemented, such as publish-subscribe model and queue model. In the publish-subscribe mode, a message can be consumed by multiple consumers at the same time; in the queue mode, a message can only be consumed by one consumer. This flexibility allows Kafka to adapt to different business needs and data processing scenarios.

5. Automatic failover and leader election: Kafka provides automatic failover and leader election mechanisms to ensure the stability and availability of the system when a failure occurs.

6. Dynamic scalability: As the business scale expands or shrinks, the members of the consumer group can be dynamically increased or decreased. Newly joining consumers will automatically pull data from existing copies and start consuming; while leaving consumers will automatically sense and stop consuming. This dynamic scalability allows Kafka to flexibly expand processing capabilities as the business develops.

7. Sequence guarantee: Within a single consumer group, the order of message consumption is in accordance with the order of messages in the partition. This allows Kafka to guarantee the ordering of messages within a single consumer group. If global ordering is required, all related messages can be sent to the same partition and consumed by a single consumer.

8. Data compression: Kafka supports message compression function, which can reduce the disk space required for storage when storage space is limited. By compressing multiple consecutive messages together and writing them in only one disk I/O operation, throughput and efficiency can be significantly improved.

9. Transactional support: Kafka supports transactional message processing, which can ensure the atomicity and consistency of operations during message writing and reading. This helps achieve reliable data transfer and consistent data state in distributed systems.

In actual applications, to use Kafka consumer groups, you need to set the same consumer group ID for consumer instances. In addition, parameters such as performance and fault tolerance can be optimized by adjusting the configuration of consumers. For example, you can adjust parameters such as the consumer's consumption offset, the consumer's pull timeout, and the consumer's maximum consumption rate to meet specific business needs.

In short, the Kafka consumer group is the core mechanism to achieve Kafka's load balancing, fault tolerance, flexibility and other features. By properly configuring and using consumer groups, the overall performance and reliability of Kafka can be improved to meet various business needs and data processing scenarios.

The above is the detailed content of What is the role of kafka consumer group. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1384

1384

52

52

How to implement real-time stock analysis using PHP and Kafka

Jun 28, 2023 am 10:04 AM

How to implement real-time stock analysis using PHP and Kafka

Jun 28, 2023 am 10:04 AM

With the development of the Internet and technology, digital investment has become a topic of increasing concern. Many investors continue to explore and study investment strategies, hoping to obtain a higher return on investment. In stock trading, real-time stock analysis is very important for decision-making, and the use of Kafka real-time message queue and PHP technology is an efficient and practical means. 1. Introduction to Kafka Kafka is a high-throughput distributed publish and subscribe messaging system developed by LinkedIn. The main features of Kafka are

How to dynamically specify multiple topics with @KafkaListener in springboot+kafka

May 20, 2023 pm 08:58 PM

How to dynamically specify multiple topics with @KafkaListener in springboot+kafka

May 20, 2023 pm 08:58 PM

Explain that this project is a springboot+kafak integration project, so it uses the kafak consumption annotation @KafkaListener in springboot. First, configure multiple topics separated by commas in application.properties. Method: Use Spring’s SpEl expression to configure topics as: @KafkaListener(topics="#{’${topics}’.split(’,’)}") to run the program. The console printing effect is as follows

How SpringBoot integrates Kafka configuration tool class

May 12, 2023 pm 09:58 PM

How SpringBoot integrates Kafka configuration tool class

May 12, 2023 pm 09:58 PM

spring-kafka is based on the integration of the java version of kafkaclient and spring. It provides KafkaTemplate, which encapsulates various methods for easy operation. It encapsulates apache's kafka-client, and there is no need to import the client to depend on the org.springframework.kafkaspring-kafkaYML configuration. kafka:#bootstrap-servers:server1:9092,server2:9093#kafka development address,#producer configuration producer:#serialization and deserialization class key provided by Kafka

How to build real-time data processing applications using React and Apache Kafka

Sep 27, 2023 pm 02:25 PM

How to build real-time data processing applications using React and Apache Kafka

Sep 27, 2023 pm 02:25 PM

How to use React and Apache Kafka to build real-time data processing applications Introduction: With the rise of big data and real-time data processing, building real-time data processing applications has become the pursuit of many developers. The combination of React, a popular front-end framework, and Apache Kafka, a high-performance distributed messaging system, can help us build real-time data processing applications. This article will introduce how to use React and Apache Kafka to build real-time data processing applications, and

Five selections of visualization tools for exploring Kafka

Feb 01, 2024 am 08:03 AM

Five selections of visualization tools for exploring Kafka

Feb 01, 2024 am 08:03 AM

Five options for Kafka visualization tools ApacheKafka is a distributed stream processing platform capable of processing large amounts of real-time data. It is widely used to build real-time data pipelines, message queues, and event-driven applications. Kafka's visualization tools can help users monitor and manage Kafka clusters and better understand Kafka data flows. The following is an introduction to five popular Kafka visualization tools: ConfluentControlCenterConfluent

Comparative analysis of kafka visualization tools: How to choose the most appropriate tool?

Jan 05, 2024 pm 12:15 PM

Comparative analysis of kafka visualization tools: How to choose the most appropriate tool?

Jan 05, 2024 pm 12:15 PM

How to choose the right Kafka visualization tool? Comparative analysis of five tools Introduction: Kafka is a high-performance, high-throughput distributed message queue system that is widely used in the field of big data. With the popularity of Kafka, more and more enterprises and developers need a visual tool to easily monitor and manage Kafka clusters. This article will introduce five commonly used Kafka visualization tools and compare their features and functions to help readers choose the tool that suits their needs. 1. KafkaManager

Sample code for springboot project to configure multiple kafka

May 14, 2023 pm 12:28 PM

Sample code for springboot project to configure multiple kafka

May 14, 2023 pm 12:28 PM

1.spring-kafkaorg.springframework.kafkaspring-kafka1.3.5.RELEASE2. Configuration file related information kafka.bootstrap-servers=localhost:9092kafka.consumer.group.id=20230321#The number of threads that can be consumed concurrently (usually consistent with the number of partitions )kafka.consumer.concurrency=10kafka.consumer.enable.auto.commit=falsekafka.boo

The practice of go-zero and Kafka+Avro: building a high-performance interactive data processing system

Jun 23, 2023 am 09:04 AM

The practice of go-zero and Kafka+Avro: building a high-performance interactive data processing system

Jun 23, 2023 am 09:04 AM

In recent years, with the rise of big data and active open source communities, more and more enterprises have begun to look for high-performance interactive data processing systems to meet the growing data needs. In this wave of technology upgrades, go-zero and Kafka+Avro are being paid attention to and adopted by more and more enterprises. go-zero is a microservice framework developed based on the Golang language. It has the characteristics of high performance, ease of use, easy expansion, and easy maintenance. It is designed to help enterprises quickly build efficient microservice application systems. its rapid growth