Google DeepMind has released three robotics results in a row! Two core capabilities have improved, and its data collection system can manage 20 robots at the same time.

Almost at the same time as Stanford's shrimp-frying, dishwashing robot, Google DeepMind also released its latest embodied intelligence results.

And it came as three releases at once:

First, a new model focused on decision-making speed, which makes the robot run 14% faster than the original Robotics Transformer. The speed-up does not cost quality: accuracy also rose by 10.6%.

Then there is a new framework specializing in generalization, which creates motion trajectory prompts for the robot. Faced with 41 never-before-seen tasks, it achieved a 63% success rate.

Don't underestimate this number: compared with the previous 29%, it is a big improvement.

Finally, a robot data collection system that can manage 20 robots at a time. It has so far collected 77,000 trials from their activity, which will help Google with subsequent training.

So, what are these three results specifically? Let’s look at them one by one.

Step one toward everyday robots: handling unseen tasks directly

Google points out that a robot that can truly enter the real world must overcome two basic challenges:

1. Generalizing to new tasks

2. Improving decision-making speed

The first two results in this series address these two areas, and both are built on Google's foundation robot model, Robotics Transformer (RT for short).

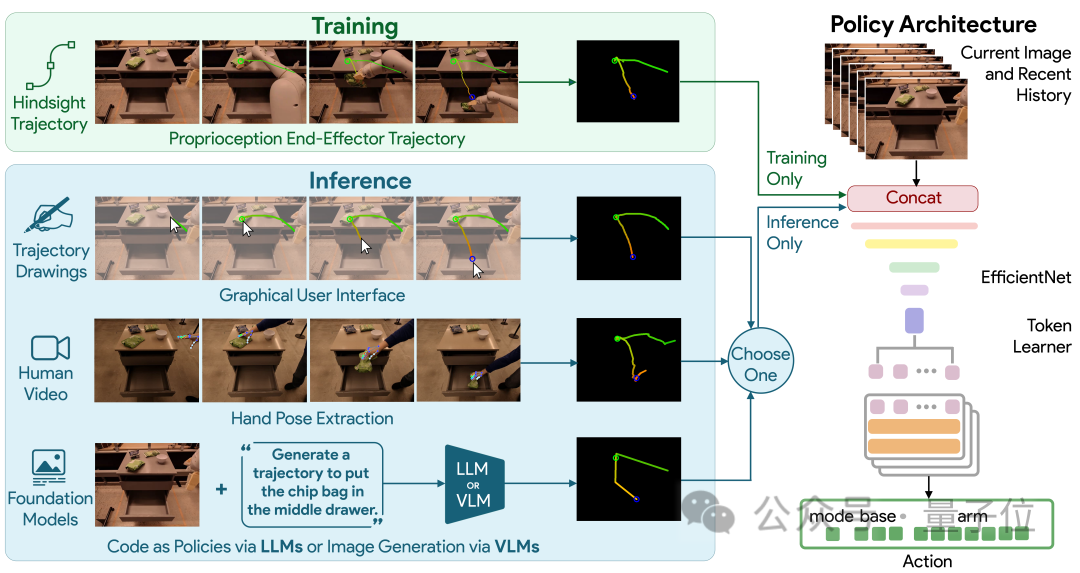

Let's start with the first one: RT-Trajectory, which helps robots generalize.

For humans, a task like cleaning a table is easy to understand, but robots struggle with it.

Fortunately, we can convey such an instruction to a robot in many different ways so that it can turn it into actual physical actions.

Traditionally, the task is mapped to specific actions that the robot arm then carries out. For example, wiping a table might be broken down into "close the gripper, move left, move right".

Clearly, this approach generalizes poorly.

Here, Google’s newly proposed RT-Trajectory teaches the robot to complete tasks by providing visual cues.

Specifically, RT-Trajectory augments its training data with 2D trajectories.

These trajectories are presented as RGB images, including routes and key points, providing low-level but very useful hints as the robot learns to perform tasks.
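To make "a trajectory presented as an RGB image" more concrete, here is a minimal sketch. It assumes a trajectory is a list of (x, y) pixel waypoints, that the order of motion is encoded by fading the line from green (start) to red (end), and that key points are marked in blue. The function name `render_trajectory` and this color scheme are hypothetical illustrations, not RT-Trajectory's actual encoding.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Image = List[List[Color]]


def render_trajectory(
    points: List[Tuple[int, int]],
    key_points: List[int],
    width: int,
    height: int,
) -> Image:
    """Draw a 2D waypoint path as an RGB image (hypothetical encoding).

    The path fades from green at the start to red at the end, so the image
    also encodes the order of motion; waypoints listed in `key_points`
    (by index) are overdrawn in blue.
    """
    image: Image = [[(0, 0, 0) for _ in range(width)] for _ in range(height)]

    def put(x: int, y: int, color: Color) -> None:
        if 0 <= x < width and 0 <= y < height:  # ignore off-frame pixels
            image[y][x] = color

    n = len(points)
    if n == 1:
        put(points[0][0], points[0][1], (0, 255, 0))
    for i in range(n - 1):
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        steps = max(abs(x1 - x0), abs(y1 - y0), 1)
        for s in range(steps + 1):  # linearly interpolate between waypoints
            frac = s / steps
            x = round(x0 + frac * (x1 - x0))
            y = round(y0 + frac * (y1 - y0))
            red = round(255 * (i + frac) / (n - 1))  # normalized time -> color
            put(x, y, (red, 255 - red, 0))
    for idx in key_points:
        put(points[idx][0], points[idx][1], (0, 0, 255))  # e.g. a grasp point
    return image
```

For example, `render_trajectory([(0, 0), (4, 0)], [], 5, 2)` draws a horizontal line on the top row that is pure green at `x = 0` and pure red at `x = 4`, leaving the second row black.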

With this model, the robot's success rate on never-before-seen tasks more than doubled (compared with Google's foundation robot model RT-2, it rose from 29% to 63%).
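The 29% and 63% success rates quoted here can be checked with a quick calculation: the new rate is a little over twice the old one, an absolute gain of 34 percentage points. Only the two rates come from the article; the variable names are illustrative.

```python
rt2_success = 0.29            # previous success rate on unseen tasks (from the article)
rt_trajectory_success = 0.63  # RT-Trajectory success rate (from the article)

ratio = rt_trajectory_success / rt2_success
gain_points = (rt_trajectory_success - rt2_success) * 100

print(f"{ratio:.2f}x")               # 2.17x
print(f"+{gain_points:.0f} points")  # +34 points
```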

Also worth noting, RT-Trajectory can obtain these trajectories in several ways:

by watching human demonstrations, by accepting hand-drawn sketches, or by having a VLM (visual language model) generate them.

Step two toward everyday robots: decisions must be fast

With generalization improved, the next focus is decision-making speed.

Google's RT models use the Transformer architecture. Although the Transformer is powerful, it relies heavily on attention modules whose cost grows quadratically with the length of the input.

Therefore, if the input to an RT model doubles (for example, because the robot is equipped with a higher-resolution sensor), the compute required to process it quadruples. This severely slows down decision-making.
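The "double the input, four times the compute" claim follows from full self-attention scoring every input token against every other token. A minimal sketch comparing that with a cost that grows linearly; the token count of 1024 is a hypothetical example, not a figure from the article.

```python
def quadratic_cost(n: int) -> int:
    # Full self-attention scores every query against every key: n * n pairs.
    return n * n


def linear_cost(n: int) -> int:
    # A linear-complexity layer does a fixed amount of work per token.
    return n


n = 1024  # hypothetical number of input tokens
print(quadratic_cost(2 * n) / quadratic_cost(n))  # 4.0
print(linear_cost(2 * n) / linear_cost(n))        # 2.0
```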

To speed robots up, Google developed SARA-RT on top of its foundation model, Robotics Transformer.

SARA-RT uses a new model fine-tuning method to make the original RT model more efficient.

Google calls this method "up-training". It converts the original quadratic complexity into linear complexity while maintaining processing quality.
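The article does not say which linear-complexity attention SARA-RT uses, so the sketch below swaps in a common kernelized form as an assumption: each query and key goes through a positive feature map phi (here elu(x) + 1), and because the weights are then plain dot products, the sum over keys can be computed once and reused for every query. Both functions give the same result; only the cost differs. How up-training adapts a pretrained model to this form is not shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def phi(x: List[float]) -> List[float]:
    """Positive feature map elu(x) + 1, a common stand-in for linear attention."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def quadratic_attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """O(n^2): score every query against every key explicitly."""
    fk = [phi(k) for k in K]
    out = []
    for q in Q:
        fq = phi(q)
        weights = [sum(x * y for x, y in zip(fq, k)) for k in fk]  # n weights per query
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / total
                    for c in range(len(V[0]))])
    return out


def linear_attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """O(n): summarize all keys and values once, then reuse the summary per query."""
    fk = [phi(k) for k in K]
    d, dv = len(fk[0]), len(V[0])
    # kv[a][c] = sum_j phi(k_j)[a] * v_j[c]; its size is d x dv, independent of n.
    kv = [[sum(fk[j][a] * V[j][c] for j in range(len(K))) for c in range(dv)]
          for a in range(d)]
    # z[a] = sum_j phi(k_j)[a]; used for the normalizer.
    z = [sum(f[a] for f in fk) for a in range(d)]
    out = []
    for q in Q:
        fq = phi(q)
        denom = sum(fq[a] * z[a] for a in range(d))
        out.append([sum(fq[a] * kv[a][c] for a in range(d)) / denom for c in range(dv)])
    return out


Q = [[0.1, -0.2], [0.3, 0.5], [-0.4, 0.2]]
K = [[0.2, 0.1], [-0.3, 0.4], [0.5, -0.1]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out_quad = quadratic_attention(Q, K, V)
out_lin = linear_attention(Q, K, V)
print(all(abs(x - y) < 1e-9 for rq, rl in zip(out_quad, out_lin) for x, y in zip(rq, rl)))  # True
```

The design point is associativity: grouping the product as phi(Q) times (phi(K)ᵀV) instead of (phi(Q)phi(K)ᵀ) times V replaces an n-by-n weight table with a fixed-size summary. Note that this is not softmax attention, which is why a model has to be adapted to it.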

When SARA-RT is applied to an RT-2 model with billions of parameters, the resulting model runs faster and achieves higher accuracy on a variety of tasks.

Notably, SARA-RT provides a general way to accelerate Transformers without expensive pre-training, so it could be widely adopted.

Not enough data? Create your own

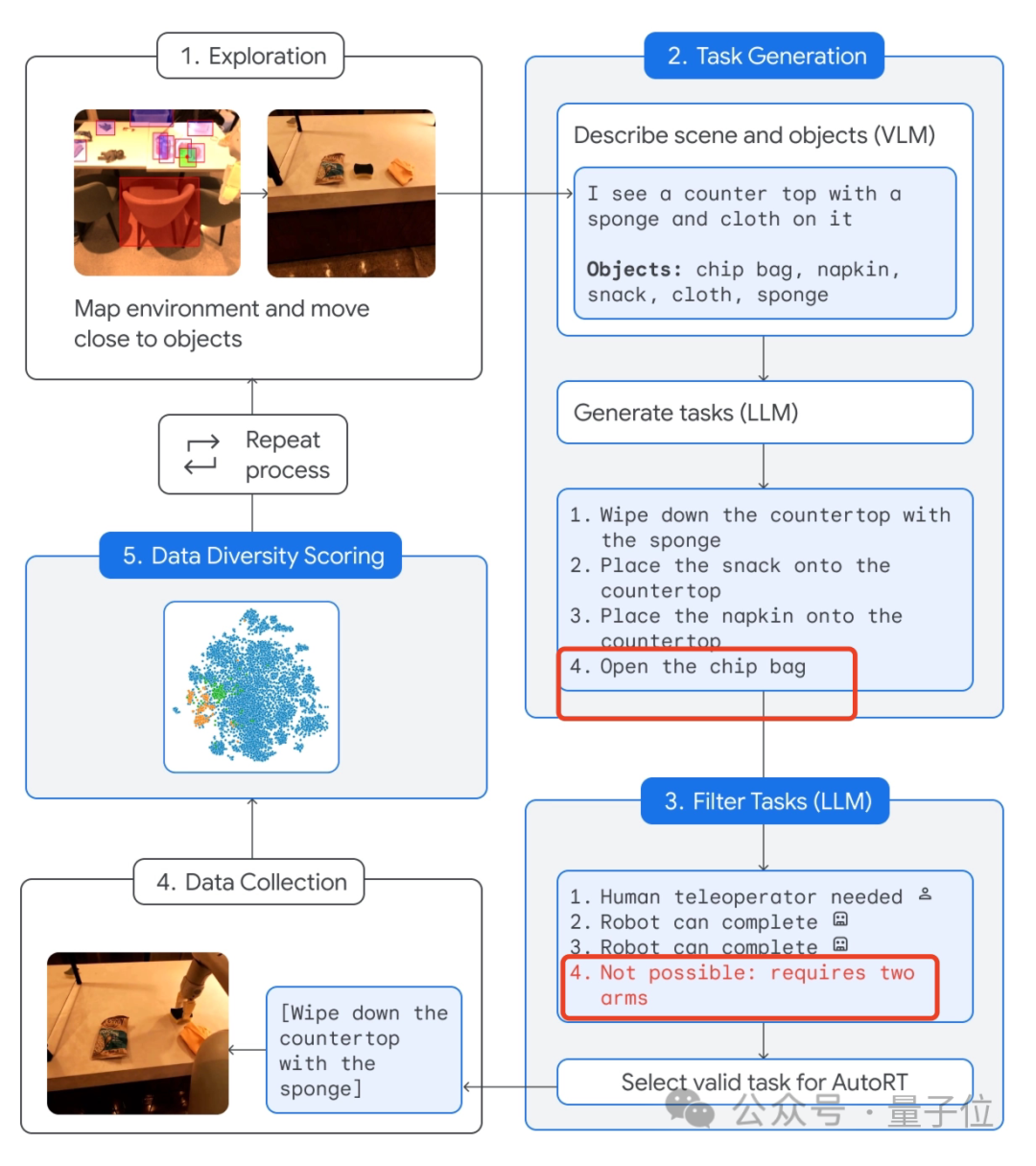

Finally, to help robots better understand the tasks humans assign them, Google started from the data side and built a collection system: AutoRT.

This system combines large models (an LLM and a VLM) with a robot control model (RT) to continuously direct robots to perform various tasks in the real world, generating and collecting data as they go.

The specific process is as follows:

The robot moves "freely" through its environment and approaches a target.

A camera and the VLM then describe the scene in front of it, including the specific objects present.

Next, the LLM uses this description to generate several different candidate tasks.

Note that the robot does not execute these tasks right away. Instead, the LLM filters them, sorting out which ones the robot can complete on its own, which require human teleoperation, and which simply cannot be completed.

For example, "open the bag of potato chips" cannot be completed, because it requires two robotic arms (the robot has only one by default).

Only after this screening step does the robot actually execute the tasks.

Finally, AutoRT completes the data collection and assesses the diversity of what was collected.
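The describe, propose, screen, execute, and log steps above can be sketched as one loop. This is a minimal illustration under stated assumptions: the VLM, LLM, and robot policy are replaced by plain callables, the navigation step is omitted, and every name here (`collect_once`, `Verdict`, `diversity`) is hypothetical. The diversity measure is a crude stand-in, since the article does not say how AutoRT assesses diversity.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Verdict(Enum):
    AUTONOMOUS = "autonomous"  # the robot policy can attempt it alone
    TELEOP = "teleop"          # needs a human remote operator
    INFEASIBLE = "infeasible"  # cannot be done, e.g. it needs two arms


@dataclass
class Episode:
    task: str
    mode: Verdict
    success: bool


def diversity(episodes: List[Episode]) -> float:
    """Crude proxy: fraction of logged episodes whose task text is distinct."""
    if not episodes:
        return 0.0
    return len({e.task for e in episodes}) / len(episodes)


def collect_once(
    describe_scene: Callable[[], str],          # stand-in for the VLM
    propose_tasks: Callable[[str], List[str]],  # stand-in for the LLM
    screen_task: Callable[[str], Verdict],      # stand-in for the LLM filter
    execute: Callable[[str, Verdict], bool],    # robot policy or teleoperator
    log: List[Episode],
) -> None:
    """One pass: describe scene -> propose tasks -> screen -> execute -> log."""
    scene = describe_scene()
    for task in propose_tasks(scene):
        verdict = screen_task(task)
        if verdict is Verdict.INFEASIBLE:
            continue  # screened out, never sent to the robot
        log.append(Episode(task, verdict, execute(task, verdict)))


log: List[Episode] = []
collect_once(
    describe_scene=lambda: "a table with a sponge and a bag of potato chips",
    propose_tasks=lambda scene: ["wipe the table with the sponge",
                                 "open the bag of potato chips"],
    screen_task=lambda t: Verdict.INFEASIBLE if t.startswith("open the bag") else Verdict.AUTONOMOUS,
    execute=lambda t, v: True,
    log=log,
)
print(len(log), diversity(log))  # 1 1.0
```

Passing the models in as callables keeps the loop testable without any real VLM, LLM, or robot attached.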

According to the report, AutoRT can coordinate up to 20 robots at a time. Over 7 months, it collected a total of 77,000 trials spanning 6,650 unique tasks.

Google also emphasizes safety for this system.

After all, AutoRT's data collection acts on the real world, so "safety guardrails" are indispensable.

Specifically, the LLM that screens tasks for the robots is given a basic safety code, partly inspired by Isaac Asimov's Three Laws of Robotics. The first and foremost rule is that a robot must not harm humans.

The second requirement is that the robot must not attempt tasks involving humans, animals, sharp objects or electrical appliances.
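In AutoRT these rules are applied by the screening LLM itself. As a much cruder illustration of the same idea, the sketch below is a keyword pre-screen that rejects task descriptions mentioning the four forbidden categories. The keyword lists and function names are hypothetical, and whole-word matching will miss plurals and paraphrases, which is exactly why a language model is used for the real screening.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical keyword lists standing in for the four categories the article names.
FORBIDDEN: Dict[str, Tuple[str, ...]] = {
    "human": ("person", "people", "human", "child"),
    "animal": ("animal", "dog", "cat", "pet"),
    "sharp object": ("knife", "scissors", "blade", "needle"),
    "electrical appliance": ("toaster", "kettle", "microwave", "outlet"),
}


def rule_violations(task: str) -> List[str]:
    """Return the rule categories whose keywords appear as whole words in `task`."""
    words = set(re.findall(r"[a-z]+", task.lower()))
    return [rule for rule, keywords in FORBIDDEN.items() if words & set(keywords)]


def is_allowed(task: str) -> bool:
    """A task passes this pre-screen only if it touches none of the categories."""
    return not rule_violations(task)


print(is_allowed("put the sponge in the sink"))         # True
print(rule_violations("hand the knife to the person"))  # ['human', 'sharp object']
```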

But this is not enough.

So AutoRT is also equipped with multiple layers of practical safety measures from conventional robotics.

For example, the robot stops automatically when the force on its joints exceeds a given threshold, and all behavior can be halted with a physical switch kept within a human's line of sight.
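A minimal sketch of the force-threshold stop and physical kill switch described here. The limit value is hypothetical, and the "latched" behavior (once stopped, the robot stays stopped until a human resets it) is an assumed design choice, since the article does not say how a stop is cleared.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SafetyMonitor:
    max_joint_force: float  # hypothetical per-joint limit; units depend on the robot
    stopped: bool = False

    def check(self, joint_forces: Sequence[float], kill_switch_pressed: bool) -> bool:
        """Return True if motion may continue; otherwise latch into the stopped state."""
        over_limit = any(abs(f) > self.max_joint_force for f in joint_forces)
        if kill_switch_pressed or over_limit:
            self.stopped = True  # stays stopped until reset() is called
        return not self.stopped

    def reset(self) -> None:
        """Assumed to be triggered by a human after inspecting the robot."""
        self.stopped = False


monitor = SafetyMonitor(max_joint_force=40.0)
print(monitor.check([10.0, -12.0, 5.0], kill_switch_pressed=False))  # True
print(monitor.check([10.0, 55.0, 5.0], kill_switch_pressed=False))   # False
print(monitor.check([1.0, 1.0, 1.0], kill_switch_pressed=False))     # False (latched)
```

Latching matters because a transient spike should not let the arm resume on its own once the force drops back below the limit.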

Want to know more about these latest results from Google?

Good news: apart from RT-Trajectory, which only has a paper online so far, the other two have released both code and paper, so everyone is welcome to check them out~

One More Thing

Speaking of Google robots, we have to mention RT-2 (which all the results in this article also build on).

This model was built by 54 Google researchers over 7 months and came out at the end of July this year.

With an embedded vision-language multimodal large model (VLM), it can not only understand "human speech" but also reason about it and carry out tasks that cannot be accomplished in one step. For example, asked to pick up the "extinct animal" from three plastic toys (a lion, a whale, and a dinosaur), it accurately picks up the dinosaur, which is impressive.

Now, in just over 5 months, robots have made rapid gains in both generalization and decision-making speed, which makes us marvel: it is hard to imagine how quickly robots will truly make their way into ordinary households.
