Using Docker to build an ELK+Filebeat log centralized management platform

1. System: CentOS 7

2. Docker 1.12.1

Introduction

Elasticsearch

Elasticsearch is a real-time distributed search and analytics engine that can be used for full-text search, structured search, and analysis. It is built on the full-text search library Apache Lucene and written in Java.

Logstash

Logstash is a data collection engine with real-time pipelining capabilities. It is mainly used to collect and parse logs and store them in Elasticsearch.

Kibana

Kibana is a web platform, released under the Apache open source license and written in JavaScript, that provides analysis and visualization for Elasticsearch. It can search Elasticsearch indices, interact with the data, and generate tables and charts along various dimensions.

Filebeat

Filebeat is introduced as the log collector mainly to address the high resource overhead of Logstash. Compared with Logstash, Filebeat's CPU and memory usage is almost negligible.

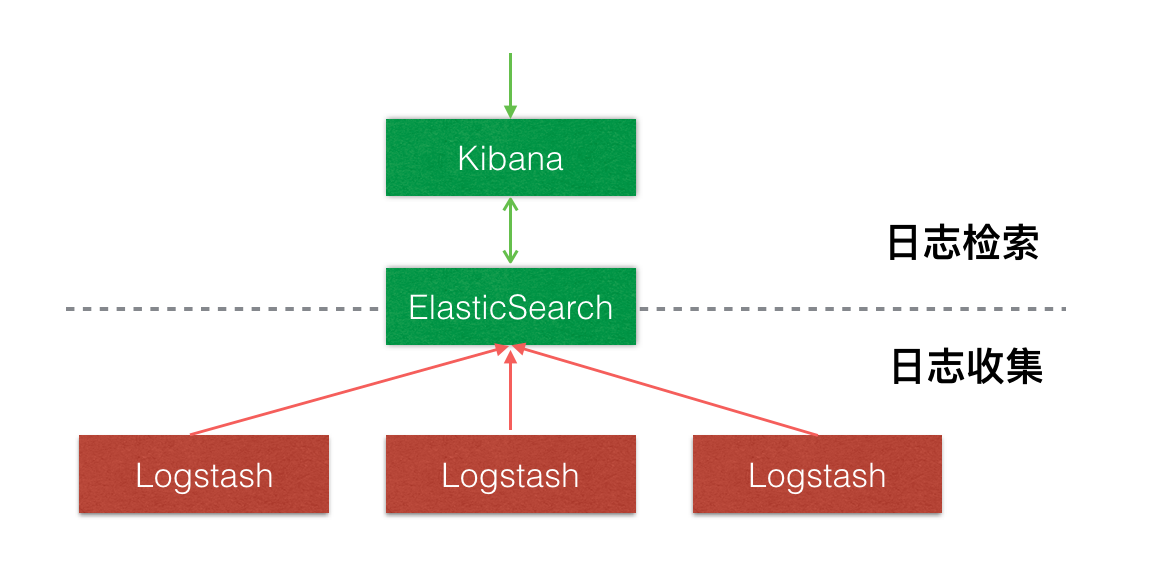

ArchitectureDo not introduce Filebeat

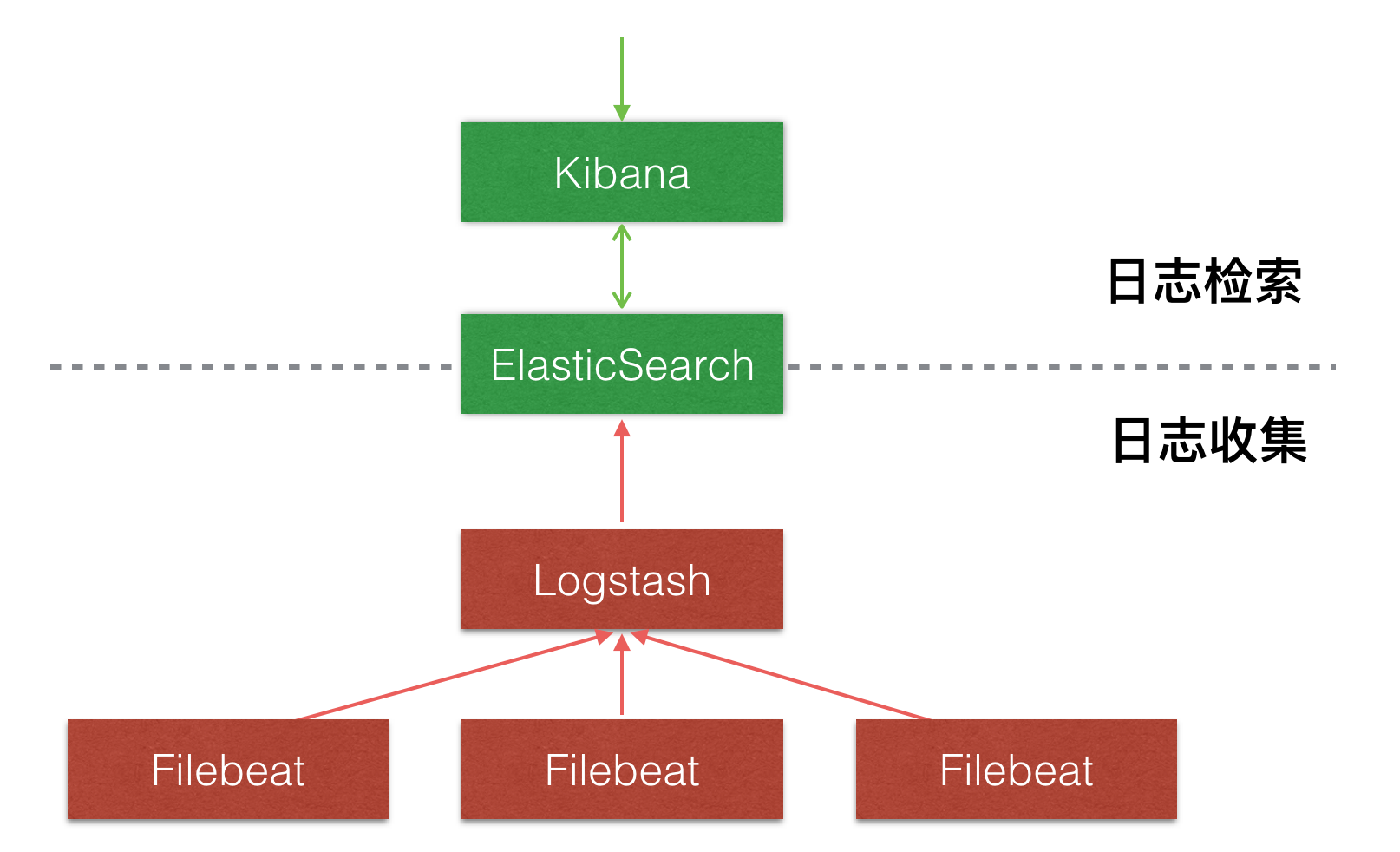

Introducing Filebeat

Start Elasticsearch

docker run -d -p 9200:9200 --name elasticsearch elasticsearch
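The container can take a little while before Elasticsearch accepts requests. A minimal sketch of a readiness check follows; it assumes `curl` is installed on the host and that Elasticsearch is reachable at `http://127.0.0.1:9200` from where the script runs. The helper name `wait_for_es` and the variables `ES_URL` and `WAIT_SECONDS` are hypothetical and not part of the original setup.

```shell
# Assumption: Elasticsearch is published on the Docker host at port 9200.
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

# Hypothetical helper: poll ES_URL until it returns a successful HTTP status.
# $1 is the maximum number of attempts (default 30).
wait_for_es() {
  tries="${1:-30}"
  while [ "$tries" -gt 0 ]; do
    # -f makes curl fail on HTTP errors, -sS keeps it quiet except for errors
    if curl -fsS "$ES_URL" >/dev/null 2>&1; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "${WAIT_SECONDS:-2}"
  done
  return 1
}
```

For example, `wait_for_es 30 && echo "Elasticsearch is up"` could be run before starting Logstash.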

Start Logstash

# 1. Create the configuration file logstash.conf

input {
  beats {
    port => 5044
  }
}

output {
  stdout {
    codec => rubydebug
  }
  elasticsearch {
    # Set this to the actual IP at which Elasticsearch is reachable. Because the
    # request crosses containers, use an intranet or public IP, not 127.0.0.1 or localhost.
    hosts => ["{$ELASTIC_IP}:9200"]
  }
}

# 2. Start the container, expose and map the port, and mount the configuration file

docker run -d --expose 5044 -p 5044:5044 --name logstash -v "$PWD":/config-dir logstash -f /config-dir/logstash.conf
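As an alternative to filling in an intranet or public IP, both containers can be attached to a user-defined Docker network, where containers can resolve each other by name. The sketch below is not the setup used in this article: the network name `elk` and the function name `start_on_shared_network` are hypothetical, and it assumes no containers named `elasticsearch` or `logstash` exist yet. With this layout, `hosts` in logstash.conf would be `["elasticsearch:9200"]`.

```shell
# Assumption: a user-defined bridge network called "elk" (hypothetical name).
NET="${NET:-elk}"

# Hypothetical helper: create the network and start both containers on it.
# Older Docker releases spell the --network flag as --net.
start_on_shared_network() {
  docker network create "$NET" &&
  docker run -d --network "$NET" -p 9200:9200 \
    --name elasticsearch elasticsearch &&
  docker run -d --network "$NET" -p 5044:5044 \
    --name logstash -v "$PWD":/config-dir \
    logstash -f /config-dir/logstash.conf
}
```

Filebeat runs outside Docker in this article, so port 5044 is still published to the host.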

Start Filebeat

Download address: https://www.elastic.co/downloads/beats/filebeat

# 1. Download the Filebeat archive

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.2.2-linux-x86_64.tar.gz

# 2. Extract the archive

tar -xvf filebeat-5.2.2-linux-x86_64.tar.gz

# 3. Create the configuration file filebeat.yml

filebeat:
  prospectors:
    - paths:
        - /tmp/test.log  # path of the log file
      input_type: log    # read from a file
      tail_files: true   # start reading at the end of the file
output:
  logstash:
    hosts: ["{$LOGSTASH_IP}:5044"]  # set to the IP at which Logstash is reachable

# 4. Run Filebeat

./filebeat-5.2.2-linux-x86_64/filebeat -e -c filebeat.yml
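If Filebeat reports connection errors, it can help to confirm that the Logstash port is reachable from the Filebeat host. The following is a rough sketch, assuming `bash` and the coreutils `timeout` command are available; `port_open` is a hypothetical helper name, and the check only proves that a TCP connection can be opened, not that Logstash is processing events.

```shell
# Hypothetical helper: succeed if a TCP connection to host ($1) and port ($2)
# can be opened within 3 seconds. Uses bash's /dev/tcp pseudo-device.
port_open() {
  # Inside `bash -c`, $0 is the host and $1 is the port passed after the script.
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

A possible call is `port_open "$LOGSTASH_IP" 5044 && echo "Logstash port reachable"`, where `LOGSTASH_IP` stands for the address written into filebeat.yml.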

Start Kibana

docker run -d --name kibana -e ELASTICSEARCH_URL=http://{$ELASTIC_IP}:9200 -p 5601:5601 kibana

Simulate log data

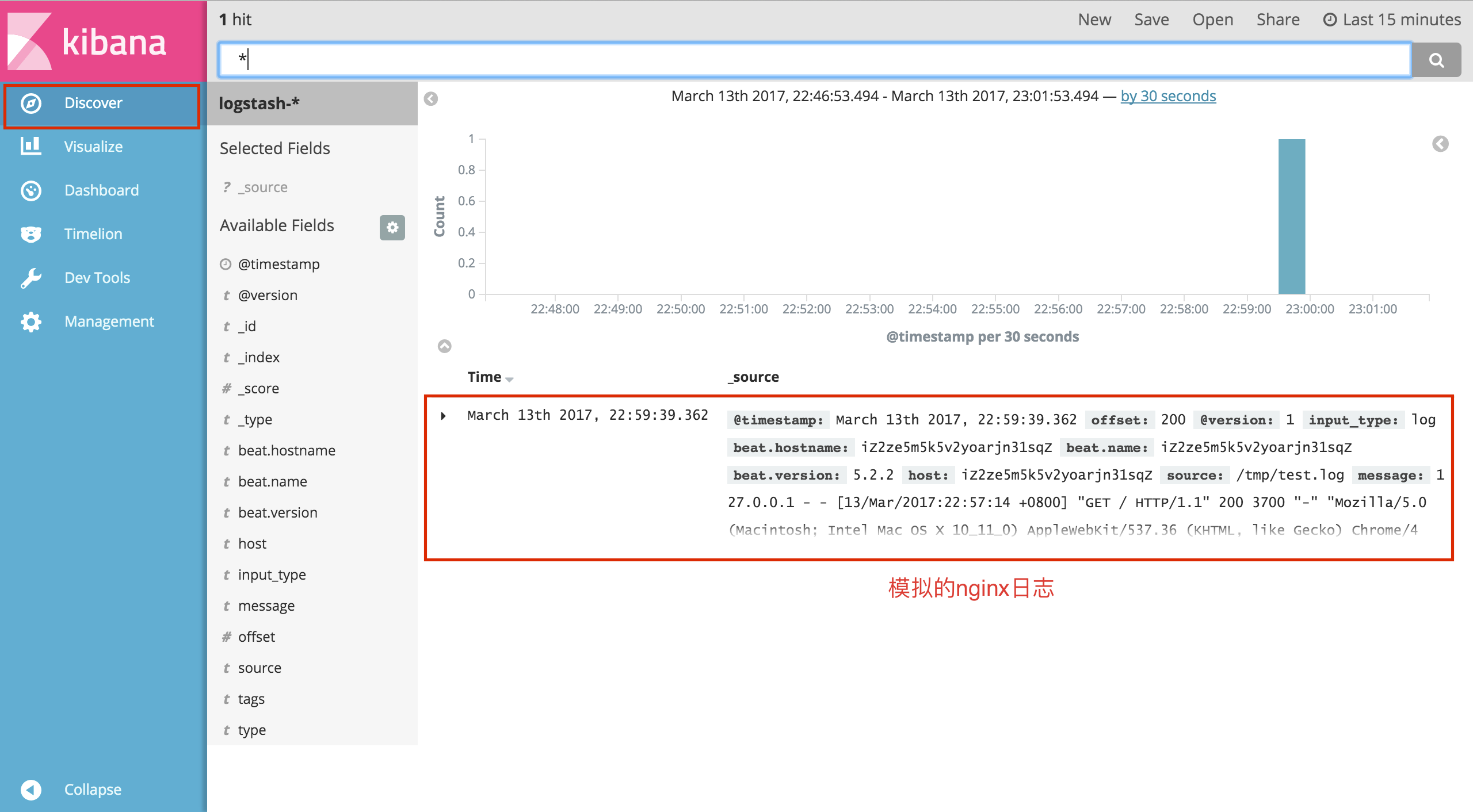

# 1. Create the log file

touch /tmp/test.log

# 2. Append one nginx access log entry to the log file

echo '127.0.0.1 - - [13/Mar/2017:22:57:14 +0800] "GET / HTTP/1.1" 200 3700 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.86 Safari/537.36" "-"' >> /tmp/test.log
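To see more than a single entry arrive, several lines can be appended in a loop. This is a small sketch: the function names `make_log_line` and `append_log_lines`, the `LOG_FILE` variable, and the shortened user agent string are illustrative assumptions; only the path `/tmp/test.log` and the overall line format come from the example above.

```shell
# Assumption: the same file that filebeat.yml watches.
LOG_FILE="${LOG_FILE:-/tmp/test.log}"

# Print one nginx-style access log line stamped with the current local time.
# LC_ALL=C keeps the month abbreviation in English, as nginx writes it.
make_log_line() {
  ts="$(LC_ALL=C date '+%d/%b/%Y:%H:%M:%S %z')"
  printf '127.0.0.1 - - [%s] "GET / HTTP/1.1" 200 3700 "-" "curl/7.29.0" "-"\n' "$ts"
}

# Append $1 lines (default 5) to LOG_FILE, one per second.
append_log_lines() {
  n="${1:-5}"
  while [ "$n" -gt 0 ]; do
    make_log_line >> "$LOG_FILE"
    n=$((n - 1))
    sleep 1
  done
}
```

Because filebeat.yml sets `tail_files: true`, only lines appended after Filebeat has started are shipped, so `append_log_lines 5` should be run while Filebeat is already running.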

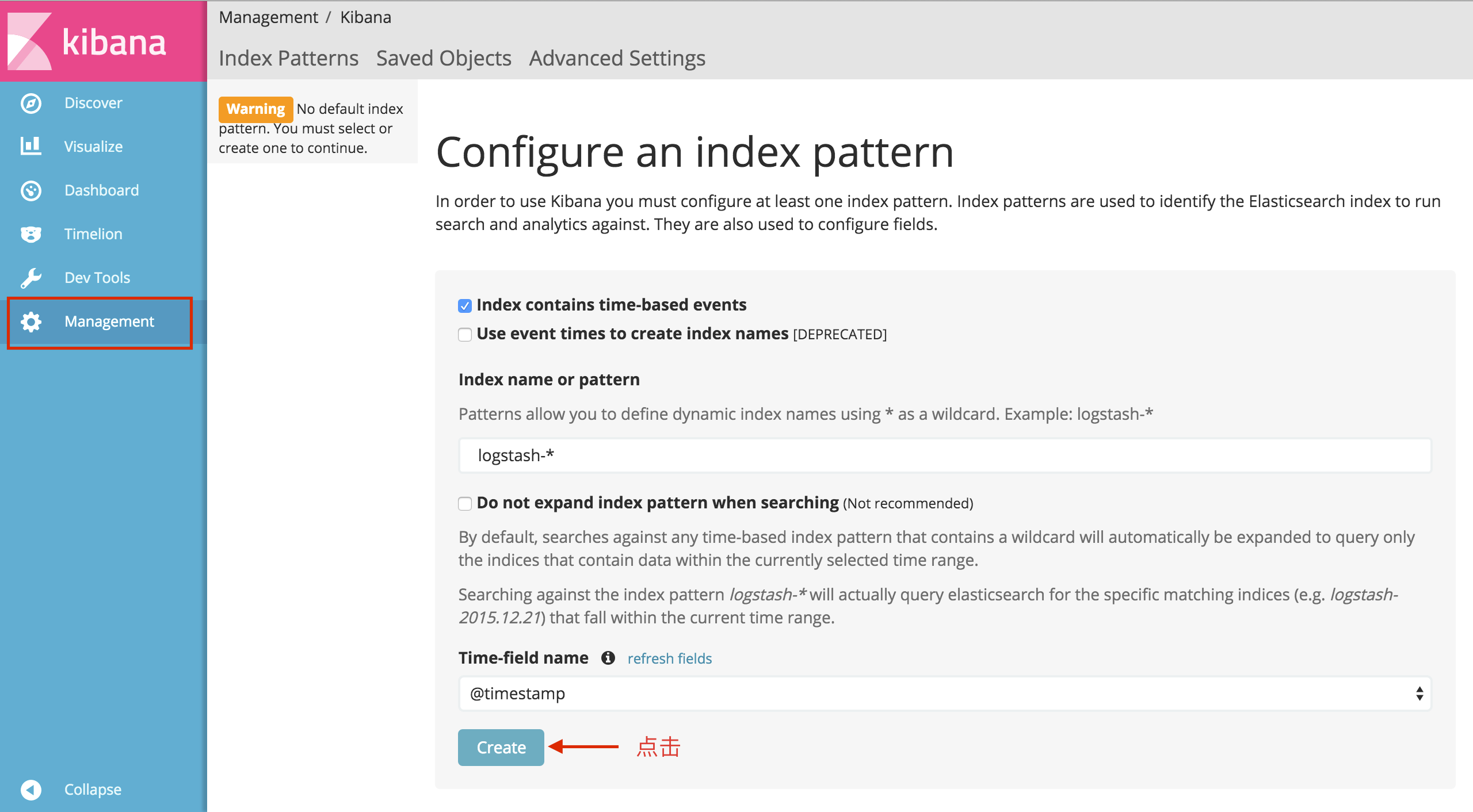

Visit http://{$KIBANA_IP}:5601
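If nothing shows up in Kibana, it may be worth checking whether the events reached Elasticsearch at all. The sketch below assumes `curl` is installed, that Elasticsearch answers at `http://127.0.0.1:9200`, and that the Logstash output above is left on its default daily `logstash-*` index naming; the function names and the `ES_URL` variable are hypothetical.

```shell
# Assumption: Elasticsearch is published on the Docker host at port 9200.
ES_URL="${ES_URL:-http://127.0.0.1:9200}"

# List the indices created by Logstash (default naming: logstash-YYYY.MM.dd).
list_logstash_indices() {
  curl -fsS "$ES_URL/_cat/indices/logstash-*?v"
}

# Return the number of documents stored across those indices.
count_logstash_docs() {
  curl -fsS "$ES_URL/logstash-*/_count?pretty"
}
```

A non-zero count from `count_logstash_docs` suggests the Filebeat to Logstash to Elasticsearch path works and that any remaining problem is on the Kibana side, for example the index pattern.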

This article walks through building ELK step by step and explains the role Filebeat plays in it.

This is only a demonstration. When deploying to a production environment, use data volumes for data persistence. Container memory also needs to be considered: Elasticsearch and Logstash are relatively memory-intensive, and if they are not limited they may bring down your entire server.
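One way to apply both points is sketched below. It is an illustration under assumptions, not a tuned production configuration: the 1g container limit, the 512m heap, the volume name `esdata`, and the function name are all hypothetical choices, and `ES_JAVA_OPTS` plus the data path `/usr/share/elasticsearch/data` assume a 5.x `elasticsearch` image.

```shell
# Hypothetical helper: start Elasticsearch with a memory cap and a named volume.
start_elasticsearch_limited() {
  # --memory caps the container; ES_JAVA_OPTS keeps the JVM heap below that cap.
  # The named volume keeps index data across container removal.
  docker run -d --name elasticsearch \
    -p 9200:9200 \
    --memory 1g \
    -e ES_JAVA_OPTS="-Xms512m -Xmx512m" \
    -v esdata:/usr/share/elasticsearch/data \
    elasticsearch
}
```

The same `--memory` flag can be added to the Logstash `docker run` command; suitable values depend on the log volume and the host.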

Security cannot be ignored either: transport security, minimizing exposed ports and permissions, firewall settings, and so on.

Follow-up

Parsing log formats with Logstash, such as Java, nginx, and Node.js logs;

Common search syntax for Elasticsearch;

Creating visual charts with Kibana.
