Technology peripherals

Technology peripherals

AI

AI

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models

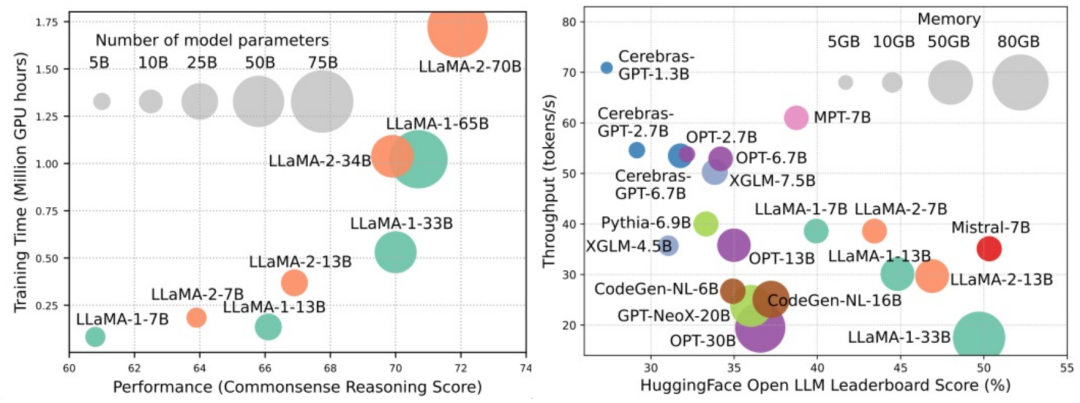

Large-scale language models (LLMs) have demonstrated compelling capabilities in many important tasks, including natural language understanding, language generation, and complex reasoning, and have had a profound impact on society. However, these outstanding capabilities require significant training resources (shown in the left image) and long inference times (shown in the right image). Therefore, researchers need to develop effective technical means to solve their efficiency problems.

In addition, as can be seen from the right side of the figure, some efficient LLMs (Language Models) such as Mistral-7B have been successfully used in the design and deployment of LLMs. These efficient LLMs can greatly reduce inference memory usage and reduce inference latency while maintaining accuracy similar to LLaMA1-33B. This shows that there are already some feasible and efficient methods that have been successfully applied to the design and use of LLMs.

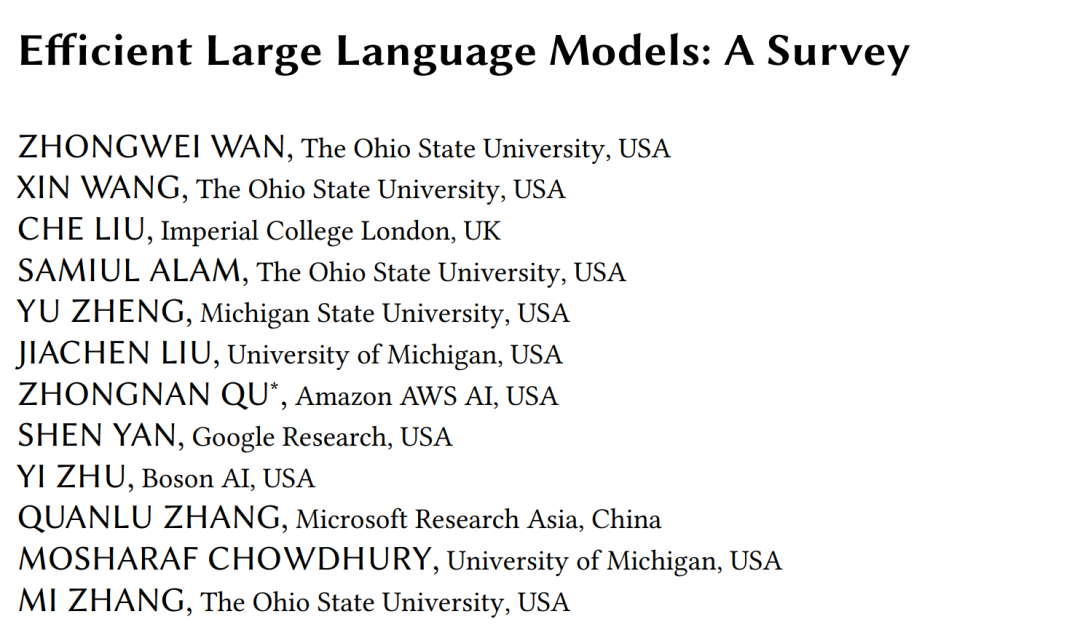

In this review, experts from Ohio State University, Imperial College, Michigan State University, University of Michigan, Amazon, Google, Boson AI, Researchers at Microsoft Asia Research provide a systematic and comprehensive survey of research into efficient LLMs. They divided existing technologies for optimizing the efficiency of LLMs into three categories, including model-centric, data-centric and framework-centric, and summarized and discussed the most cutting-edge related technologies.

- Paper: https://arxiv.org/abs/2312.03863

- GitHub: https://github.com/AIoT-MLSys-Lab/Efficient-LLMs-Survey

In order to conveniently organize the papers involved in the review and keep them updated, the researcher created a GitHub repository and actively maintains it. They hope that this repository will help researchers and practitioners systematically understand the research and development of efficient LLMs and inspire them to contribute to this important and exciting field.

The URL of the warehouse is https://github.com/AIoT-MLSys-Lab/Efficient-LLMs-Survey. In this repository you can find content related to a survey of efficient and low-power machine learning systems. This repository provides research papers, code, and documentation to help people better understand and explore efficient and low-power machine learning systems. If you are interested in this area, you can get more information by visiting this repository.

Model Centric

A model-centric approach focuses on efficient techniques at the algorithm level and system level, where the model itself is the focus. Since LLMs have billions or even trillions of parameters and have unique characteristics such as emergence compared to smaller-scale models, new techniques need to be developed to optimize the efficiency of LLMs. This article discusses five categories of model-centric methods in detail, including model compression, efficient pre-training, efficient fine-tuning, efficient inference and efficient model architecture design.

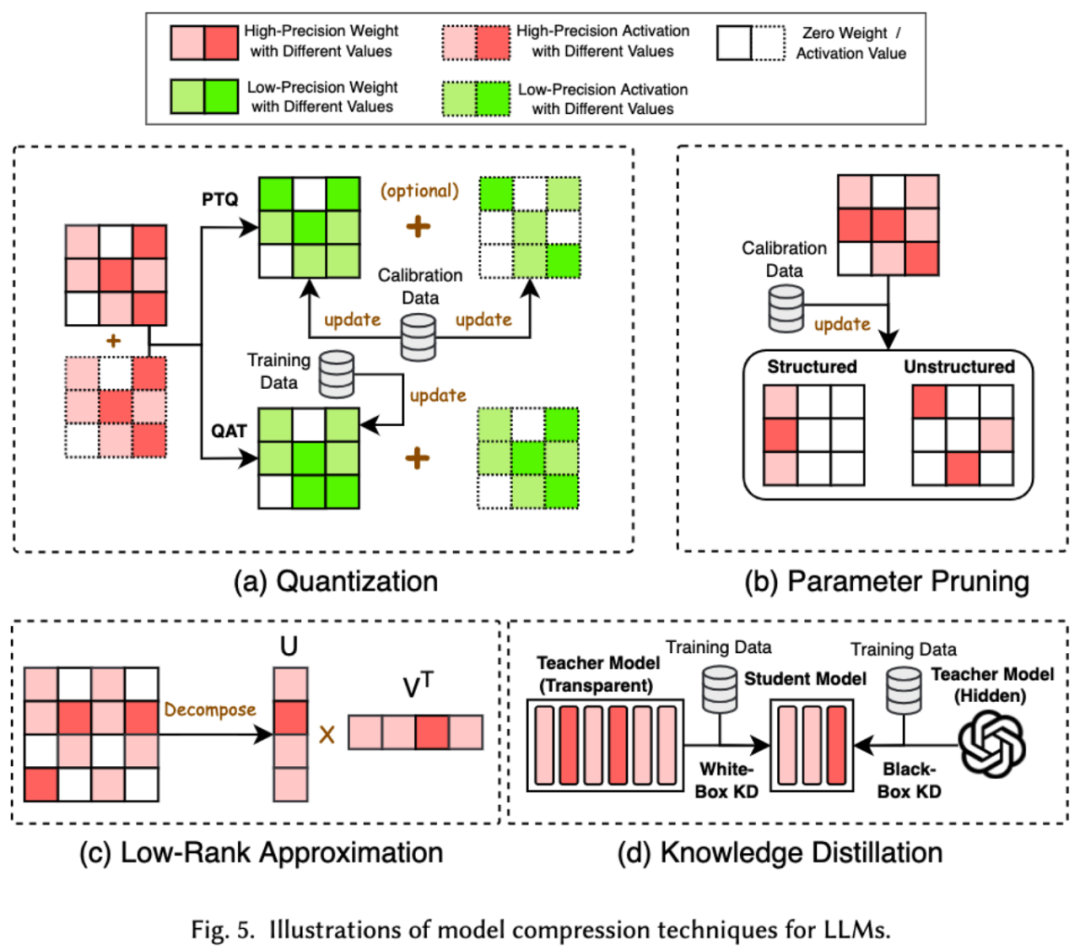

1. Compression model In the field of machine learning, model size is often an important consideration. Larger models often require more storage space and computing resources, and may encounter limitations when running on mobile devices. Therefore, model compression is a commonly used technology that can reduce the size of the model

Model compression technology is mainly divided into four categories: quantization, parameter pruning, and low-rank estimation and knowledge distillation (see the figure below), in which quantization will compress the weights or activation values of the model from high precision to low precision, parameter pruning will search and delete the more redundant parts of the model weights, and low-rank estimation will reduce the model's weights. The weight matrix is converted into the product of several low-rank small matrices, and knowledge distillation directly uses the large model to train the small model, so that the small model has the ability to replace the large model when doing certain tasks.

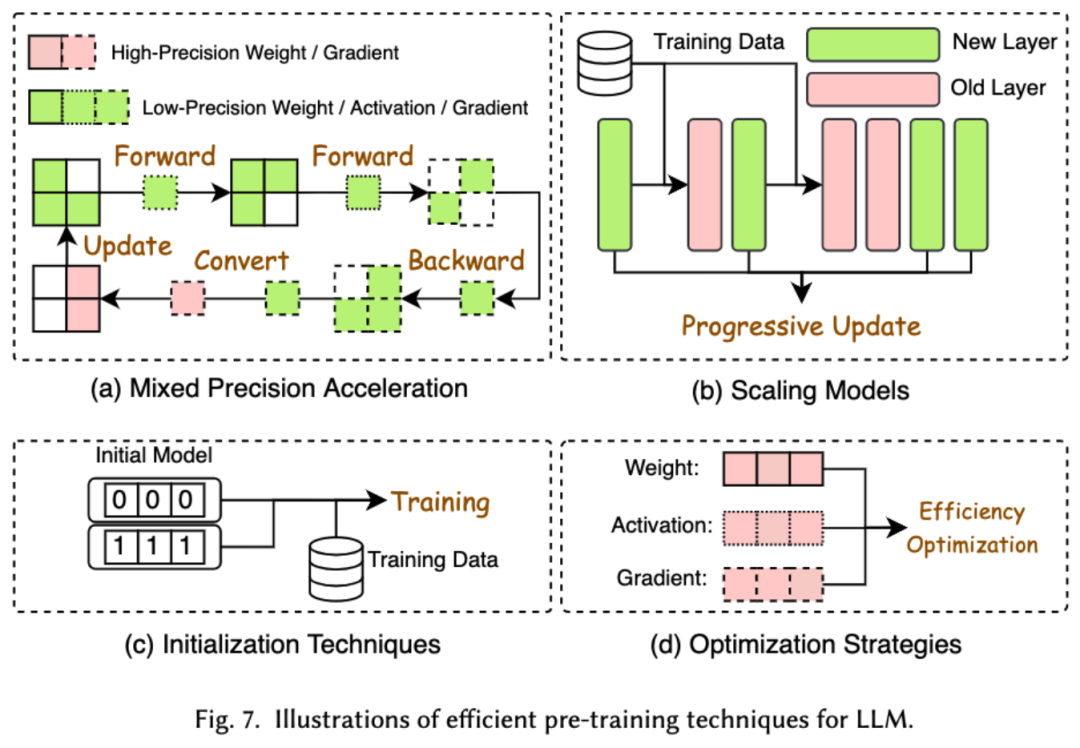

2. Efficient pre-training

The cost of pre-training LLMs is very expensive. Efficient pre-training aims to improve efficiency and reduce the cost of the LLMs pre-training process. Efficient pre-training can be divided into mixed precision acceleration, model scaling, initialization technology, optimization strategy and system-level acceleration.

Mixed-precision acceleration improves the efficiency of pre-training by calculating gradients, weights, and activations using low-precision weights, which are then converted back to high-precision and applied to update the original weights. Model scaling accelerates pre-training convergence and reduces training costs by using the parameters of small models to scale to large models. Initialization technology speeds up the convergence of the model by designing the initialization value of the model. The optimization strategy focuses on designing lightweight optimizers to reduce memory consumption during model training. System-level acceleration uses distributed and other technologies to accelerate model pre-training from the system level.

3. Efficient fine-tuning

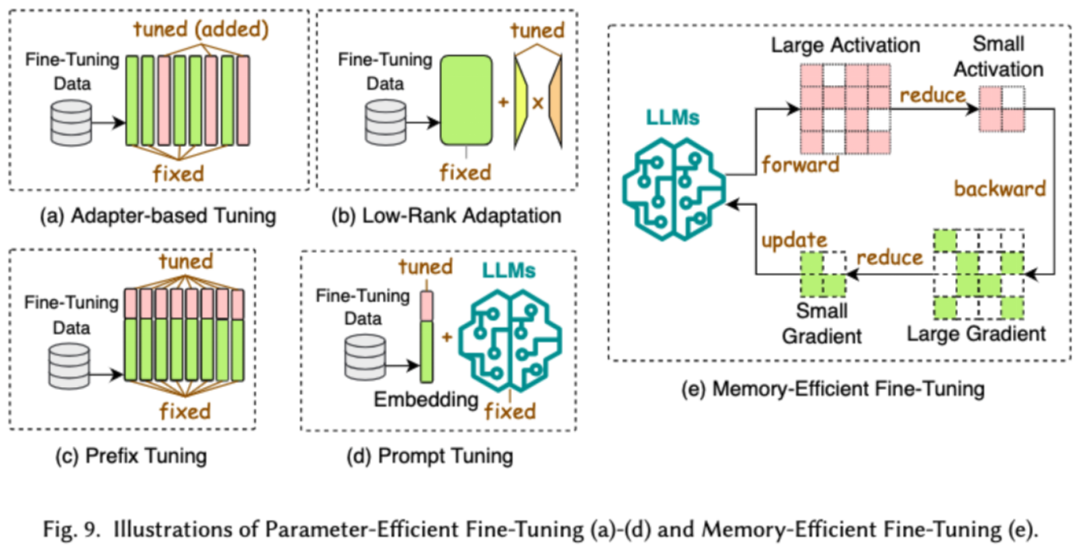

Efficient fine-tuning aims to improve LLMs Fine-tuning process efficiency. Common efficient fine-tuning technologies are divided into two categories, one is parameter-based efficient fine-tuning, and the other is memory-efficient fine-tuning.

The goal of parameter-based efficient fine-tuning (PEFT) is to tune LLM to downstream tasks by freezing the entire LLM backbone and updating only a small set of additional parameters. In the paper, we further divided PEFT into adapter-based fine-tuning, low-rank adaptation, prefix fine-tuning and prompt word fine-tuning.

Efficient memory-based fine-tuning focuses on reducing memory consumption during the entire LLM fine-tuning process, such as reducing the memory consumed by optimizer status and activation values.

4. Efficient reasoning

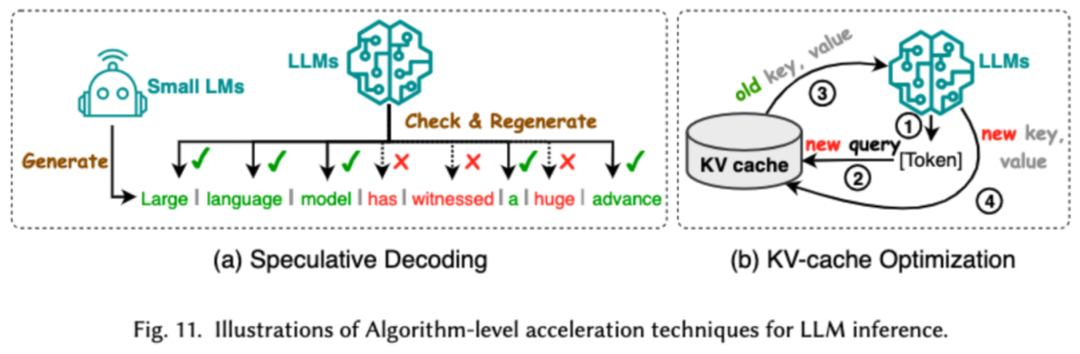

Efficient reasoning aims to improve LLMs reasoning process efficiency. Researchers divide common high-efficiency reasoning technologies into two categories, one is algorithm-level reasoning acceleration, and the other is system-level reasoning acceleration.

Inference acceleration at the algorithm level can be divided into two categories: speculative decoding and KV - cache optimization. Speculative decoding speeds up the sampling process by computing tokens in parallel using a smaller draft model to create speculative prefixes for the larger target model. KV - Cache optimization refers to optimizing the repeated calculation of Key-Value (KV) pairs during the inference process of LLMs.

System-level inference acceleration is to optimize the number of memory accesses on specified hardware, increase the amount of algorithm parallelism, etc. to accelerate LLM inference.

5. Efficient model architecture design

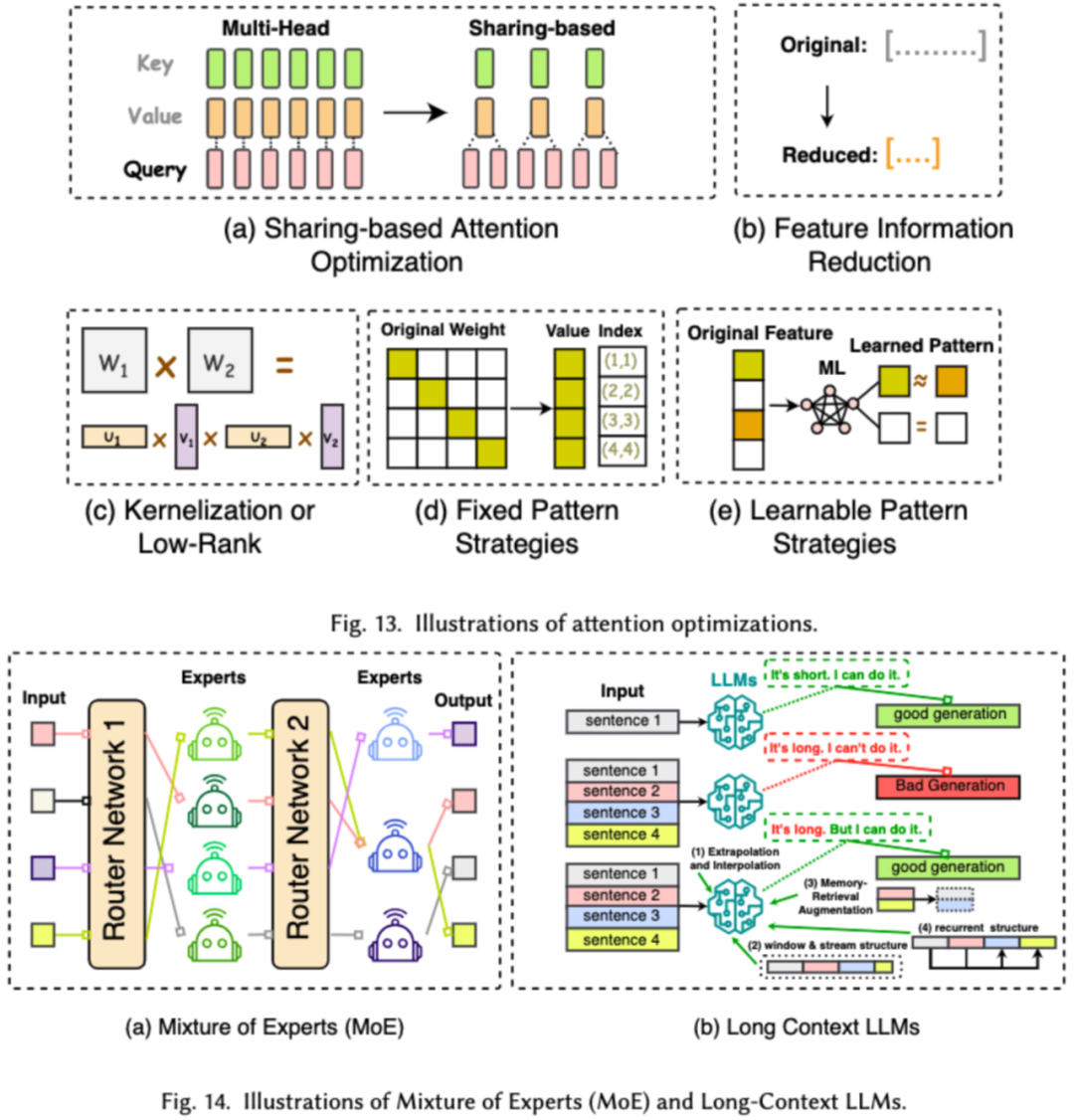

Efficient architecture design for LLMs refers to strategically optimizing the model structure and calculation process to improve performance and scalability while minimizing resource consumption. We divide efficient model architecture design into four major categories based on model types: efficient attention modules, hybrid expert models, long text large models, and architectures that can replace transformers.

The efficient attention module aims to optimize the complex calculations and memory usage in the attention module, while the mixed expert model (MoE) uses multiple reasoning decisions in some modules of LLMs. A small expert model is used as a replacement to achieve overall sparsity. The long text large model is an LLMs specially designed to efficiently process ultra-long text. The architecture that can replace the transformer is to reduce the complexity of the model and reduce the complexity of the model by redesigning the model architecture. Achieving comparable reasoning capabilities for post-transformer architectures.

Data-centric

A data-centric approach focuses on the quality and structure of data in role in improving the efficiency of LLMs. In this article, researchers discuss two types of data-centric methods in detail, includingdata selection and prompt word engineering.

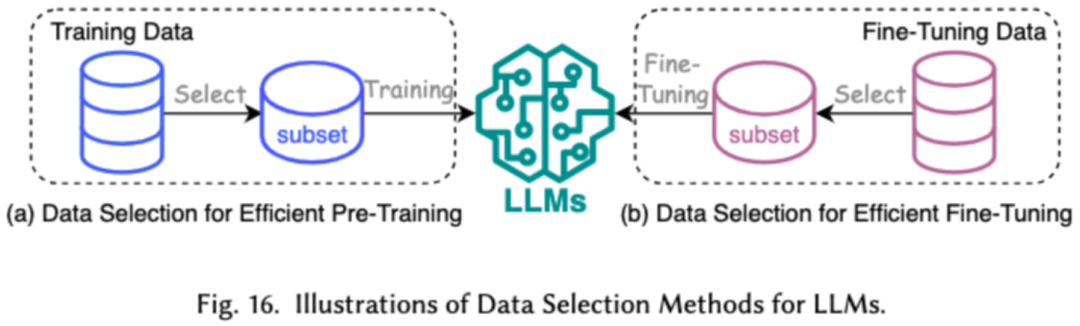

1. Data selection

The data selection of LLMs is aimed at pre-training/ Fine-tune data for cleaning and selection, such as removing redundant and invalid data, to speed up the training process.

2. Prompt Word Project

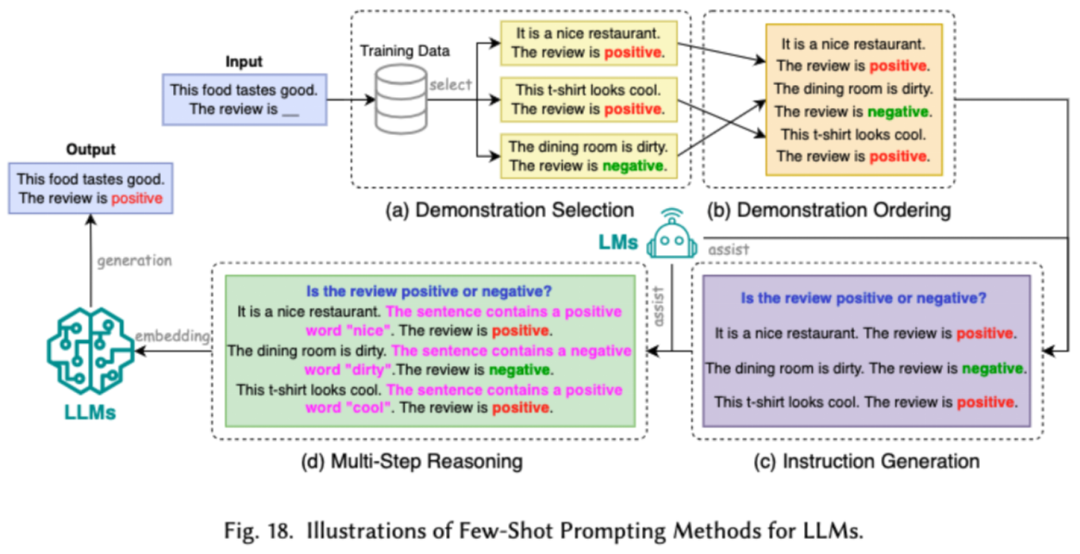

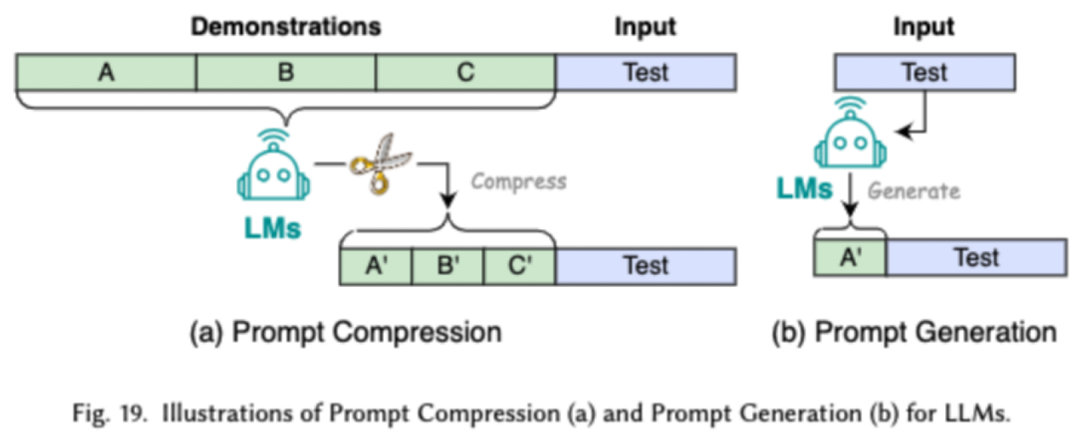

Prompt Word Project guides LLMs by designing effective inputs (prompt words) The efficiency of generating the desired output is that the prompt words can be designed to achieve model performance equivalent to that of tedious fine-tuning. Researchers divide common prompt word engineering technologies into three major categories: few-sample prompt word engineering, prompt word compression, and prompt word generation.

The few-sample prompt word project provides LLM with a limited set of examples to guide its understanding of the tasks that need to be performed. Prompt word compression accelerates LLMs' processing of input by compressing lengthy prompt input or learning and using prompt representations. Prompt word generation aims to automatically create effective prompts that guide the model to generate specific and relevant responses, rather than using manually annotated data.

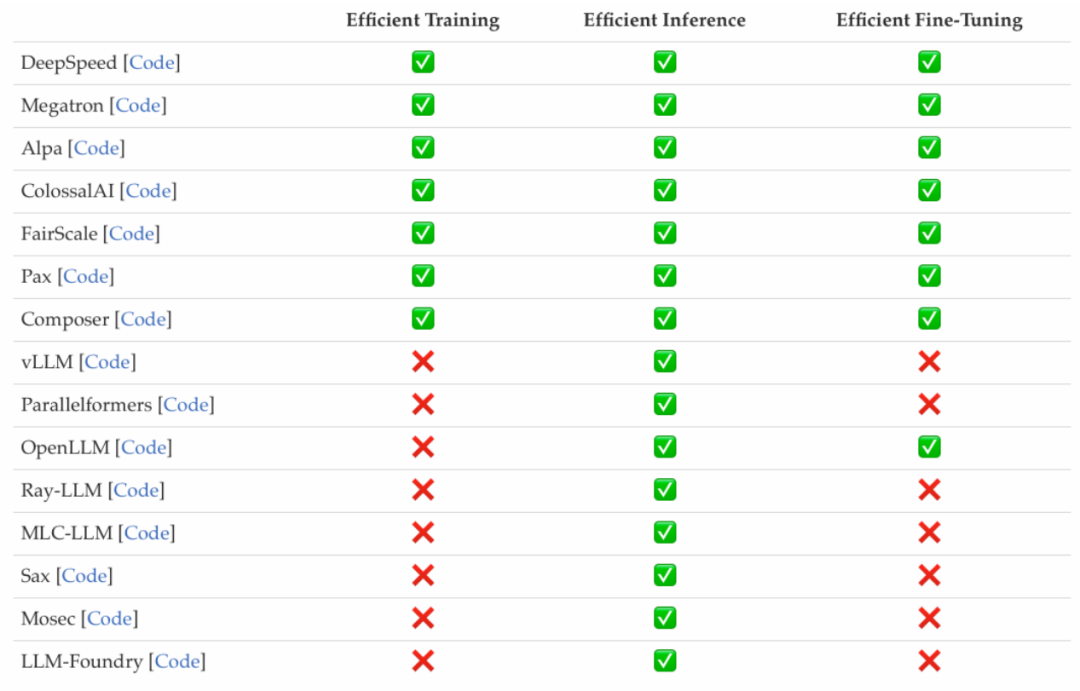

Frame-centered

The researcher investigated The recently popular efficient LLMs framework lists the efficient tasks they can optimize, including pre-training, fine-tuning and inference (as shown in the figure below).

Summary

In this survey, the researcher provides everyone with a A systematic review of LLMs, an important research area dedicated to making LLMs more democratized. They begin by explaining why efficient LLMs are needed. Under an orderly framework, this paper investigates efficient technologies at the algorithmic level and system level of LLMs from the model-centered, data-centered, and framework-centered perspectives respectively.

Researchers believe that efficiency will play an increasingly important role in LLMs and LLMs-oriented systems. They hope that this survey will help researchers and practitioners quickly enter this field and serve as a catalyst to stimulate new research on efficient LLMs.

The above is the detailed content of A deep dive into models, data, and frameworks: an exhaustive 54-page review of efficient large language models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

How to implement file sorting by debian readdir

Apr 13, 2025 am 09:06 AM

How to implement file sorting by debian readdir

Apr 13, 2025 am 09:06 AM

In Debian systems, the readdir function is used to read directory contents, but the order in which it returns is not predefined. To sort files in a directory, you need to read all files first, and then sort them using the qsort function. The following code demonstrates how to sort directory files using readdir and qsort in Debian system: #include#include#include#include#include//Custom comparison function, used for qsortintcompare(constvoid*a,constvoid*b){returnstrcmp(*(

How to optimize the performance of debian readdir

Apr 13, 2025 am 08:48 AM

How to optimize the performance of debian readdir

Apr 13, 2025 am 08:48 AM

In Debian systems, readdir system calls are used to read directory contents. If its performance is not good, try the following optimization strategy: Simplify the number of directory files: Split large directories into multiple small directories as much as possible, reducing the number of items processed per readdir call. Enable directory content caching: build a cache mechanism, update the cache regularly or when directory content changes, and reduce frequent calls to readdir. Memory caches (such as Memcached or Redis) or local caches (such as files or databases) can be considered. Adopt efficient data structure: If you implement directory traversal by yourself, select more efficient data structures (such as hash tables instead of linear search) to store and access directory information

How debian readdir integrates with other tools

Apr 13, 2025 am 09:42 AM

How debian readdir integrates with other tools

Apr 13, 2025 am 09:42 AM

The readdir function in the Debian system is a system call used to read directory contents and is often used in C programming. This article will explain how to integrate readdir with other tools to enhance its functionality. Method 1: Combining C language program and pipeline First, write a C program to call the readdir function and output the result: #include#include#include#includeintmain(intargc,char*argv[]){DIR*dir;structdirent*entry;if(argc!=2){

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Debian mail server firewall configuration tips

Apr 13, 2025 am 11:42 AM

Configuring a Debian mail server's firewall is an important step in ensuring server security. The following are several commonly used firewall configuration methods, including the use of iptables and firewalld. Use iptables to configure firewall to install iptables (if not already installed): sudoapt-getupdatesudoapt-getinstalliptablesView current iptables rules: sudoiptables-L configuration

How to configure firewall rules for Debian syslog

Apr 13, 2025 am 06:51 AM

How to configure firewall rules for Debian syslog

Apr 13, 2025 am 06:51 AM

This article describes how to configure firewall rules using iptables or ufw in Debian systems and use Syslog to record firewall activities. Method 1: Use iptablesiptables is a powerful command line firewall tool in Debian system. View existing rules: Use the following command to view the current iptables rules: sudoiptables-L-n-v allows specific IP access: For example, allow IP address 192.168.1.100 to access port 80: sudoiptables-AINPUT-ptcp--dport80-s192.16

How to set the Debian Apache log level

Apr 13, 2025 am 08:33 AM

How to set the Debian Apache log level

Apr 13, 2025 am 08:33 AM

This article describes how to adjust the logging level of the ApacheWeb server in the Debian system. By modifying the configuration file, you can control the verbose level of log information recorded by Apache. Method 1: Modify the main configuration file to locate the configuration file: The configuration file of Apache2.x is usually located in the /etc/apache2/ directory. The file name may be apache2.conf or httpd.conf, depending on your installation method. Edit configuration file: Open configuration file with root permissions using a text editor (such as nano): sudonano/etc/apache2/apache2.conf

How to learn Debian syslog

Apr 13, 2025 am 11:51 AM

How to learn Debian syslog

Apr 13, 2025 am 11:51 AM

This guide will guide you to learn how to use Syslog in Debian systems. Syslog is a key service in Linux systems for logging system and application log messages. It helps administrators monitor and analyze system activity to quickly identify and resolve problems. 1. Basic knowledge of Syslog The core functions of Syslog include: centrally collecting and managing log messages; supporting multiple log output formats and target locations (such as files or networks); providing real-time log viewing and filtering functions. 2. Install and configure Syslog (using Rsyslog) The Debian system uses Rsyslog by default. You can install it with the following command: sudoaptupdatesud

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

How Debian OpenSSL prevents man-in-the-middle attacks

Apr 13, 2025 am 10:30 AM

In Debian systems, OpenSSL is an important library for encryption, decryption and certificate management. To prevent a man-in-the-middle attack (MITM), the following measures can be taken: Use HTTPS: Ensure that all network requests use the HTTPS protocol instead of HTTP. HTTPS uses TLS (Transport Layer Security Protocol) to encrypt communication data to ensure that the data is not stolen or tampered during transmission. Verify server certificate: Manually verify the server certificate on the client to ensure it is trustworthy. The server can be manually verified through the delegate method of URLSession