GPT-4 has demonstrated its extraordinary ability to generate detailed and accurate image descriptions, marking the arrival of a new era of language and visual processing. Therefore, multimodal large language models (MLLM) similar to GPT-4 have recently emerged and become a hot emerging research field. The core of its research is to combine powerful The LLM is used as a cognitive framework to perform multimodal tasks. The unexpected and outstanding performance of MLLM not only surpasses traditional methods, but also makes it one of the potential ways to achieve general artificial intelligence. To create useful MLLMs, one needs to use large-scale paired image-text data and visual-linguistic fine-tuning data to train frozen LLMs (such as LLaMA and Vicuna ) and visual representations (such as CLIP and BLIP-2). #The training of MLLM is usually divided into two stages: pre-training stage and fine-tuning stage. The purpose of pre-training is to allow the MLLM to acquire a large amount of knowledge, while fine-tuning is to teach the model to better understand human intentions and generate accurate responses. In order to enhance MLLM's ability to understand visual-language and follow instructions, a powerful fine-tuning technology called instruction tuning has recently emerged. This technology helps align models with human preferences so that the model produces human-desired results under a variety of different instructions. In terms of developing instruction fine-tuning technology, a quite constructive direction is to introduce image annotation, visual question answering (VQA) and visual reasoning data sets in the fine-tuning stage. Previous techniques such as InstructBLIP and Otter have used a series of visual-linguistic data sets to fine-tune visual instructions, and have also achieved promising results. However, it has been observed that commonly used multi-modal instruction fine-tuning datasets contain a large number of low-quality instances, where responses are incorrect or irrelevant. Such data is misleading and can negatively impact model performance. This question prompted researchers to explore the possibility of achieving robust performance using small amounts of high-quality follow-instruction data. Some recent studies have obtained encouraging results, indicating that this direction has potential. For example, Zhou et al. proposed LIMA, which is a language model fine-tuned using high-quality data carefully selected by human experts. This study shows that large language models can achieve satisfactory results even with limited amounts of high-quality follow-instruction data. So, the researchers concluded: Less is more when it comes to alignment. However, there has not been a clear guideline on how to select suitable high-quality datasets for fine-tuning multi-modal language models. A research team from Qingyuan Research Institute of Shanghai Jiao Tong University and Lehigh University has filled this gap and proposed a robust and effective data selector. This data selector automatically identifies and filters low-quality visual-verbal data, ensuring that the most relevant and informative samples are used for model training. ##Paper address: https://arxiv.org/abs/2308.12067

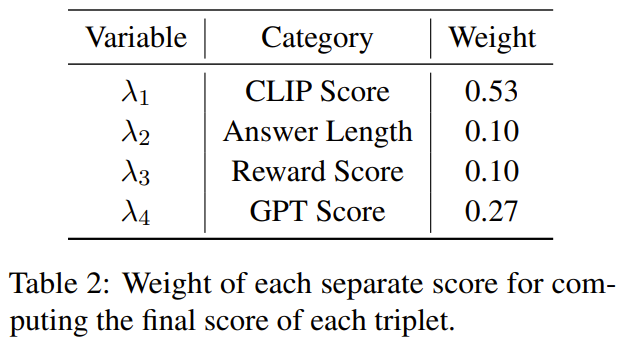

The researchers said that the focus of this study is to explore the effectiveness of a small but high-quality instruction fine-tuning data in fine-tuning multi-modal large-scale language models. In addition to this, this paper introduces several new metrics specifically designed to evaluate the quality of multimodal instruction data. After performing spectral clustering on the image, the data selector calculates a weighted score that combines the CLIP score, GPT score, bonus score, and answer length for each piece of visual-verbal data.

By using this selector on 3400 raw data used to fine-tune MiniGPT-4, the researchers found that most of the data had low quality issues. Using this data selector, the researcher got a much smaller subset of the curated data - just 200 data, only 6% of the original data set. Then they used the same training configuration as MiniGPT-4 and fine-tuned it to get a new model: InstructionGPT-4.

#The researchers said this is an exciting finding because it shows that the quality of data is more important than the quantity in fine-tuning visual-verbal instructions. Furthermore, this change with greater emphasis on data quality provides a new and more effective paradigm that can improve MLLM fine-tuning.

The researchers conducted rigorous experiments and the experimental evaluation of the fine-tuned MLLM focused on seven diverse and complex open-domain multi-modal datasets, including Flick- 30k, ScienceQA, VSR, etc. They compared the inference performance of models fine-tuned using different data set selection methods (using data selectors, randomly sampling the data set, using the complete data set) on different multi-modal tasks. The results showed the performance of InstructionGPT-4 superiority. In addition, it should be noted: The evaluator used by the researcher for evaluation is GPT-4. Specifically, the researchers used prompt to turn GPT-4 into an evaluator, which can use the test set in LLaVA-Bench to compare the response results of InstructionGPT-4 and the original MiniGPT-4. It was found that although the fine-tuning data used by InstructionGPT-4 is only a little bit 6% compared to the original instruction compliance data used by MiniGPT-4, the latter The response was the same or better 73% of the time. The main contributions of this paper include:

- By selecting 200 (approx. 6%) high-quality instruction follow data to train InstructionGPT-4, researchers show that it is possible to use less instruction data for multi-modal large language models to achieve better alignment.

- This paper proposes a data selector that uses a simple and interpretable principle to select high-quality multi-modal instruction compliance data for fine-tuning. This approach strives to achieve validity and portability in the evaluation and adjustment of subsets of data.

- Researchers have shown through experiments that this simple technology can handle different tasks well. Compared with the original MiniGPT-4, InstructionGPT-4, which is fine-tuned using only 6% filtered data, achieves better performance on a variety of tasks.

This The goal of the research is to propose a simple and portable data selector that can automatically select a subset from the original fine-tuned dataset. To this end, the researchers defined a selection principle that focuses on the diversity and quality of multimodal data sets. A brief introduction will be given below. ##In order to effectively train MLLM, Selecting useful multimodal instruction data is critical. In order to select the optimal instruction data, researchers proposed two key principles: diversity and quality. For diversity, the approach taken by researchers is to cluster image embeddings to separate the data into different groups. To assess quality, the researchers adopted some key metrics for efficient evaluation of multimodal data.

Given a visual - Linguistic instruction data set and a pre-trained MLLM (such as MiniGPT-4 and LLaVA), the ultimate goal of the data selector is to identify a subset for fine-tuning and make this subset bring improvements to the pre-trained MLLM.

To select this subset and ensure its diversity, the researchers first used a clustering algorithm to divide the original data set into multiple categories.

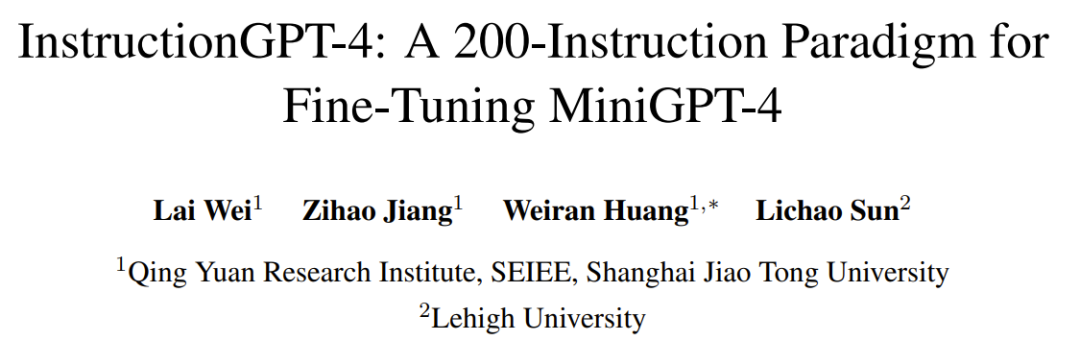

In order to ensure the quality of the selected multi-modal instruction data, the researchers developed a set of indicators for evaluation, as shown in Table 1 below.

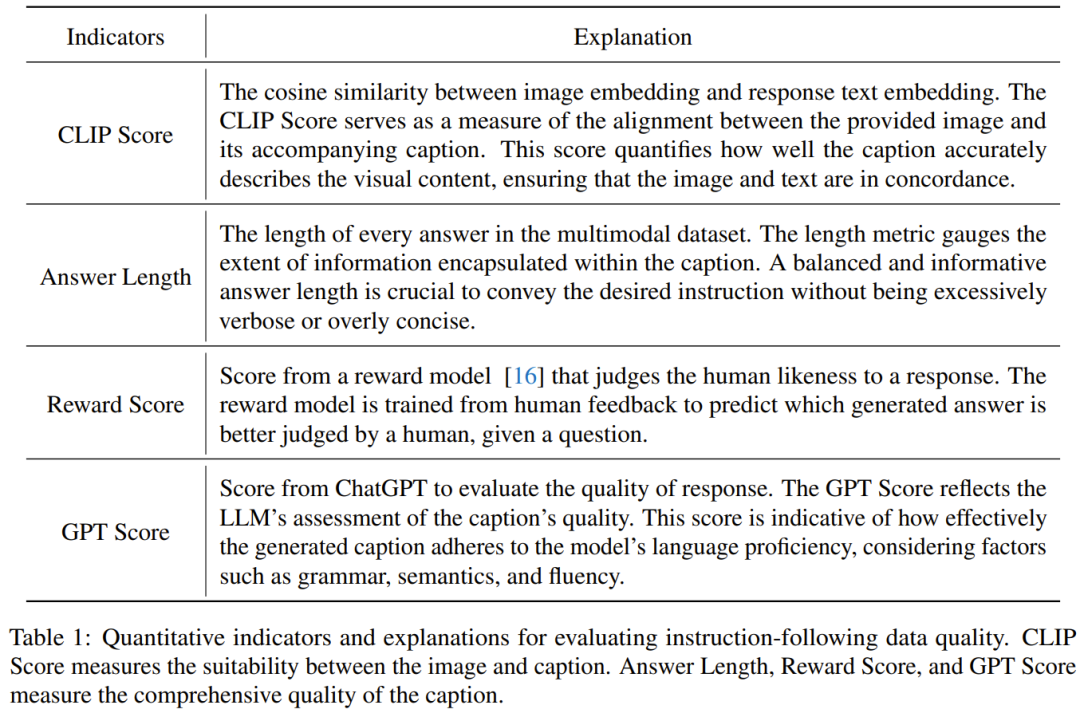

Table 2 shows the weight of each different score when calculating the final score.

Algorithm 1 shows the entire workflow of the data selector.

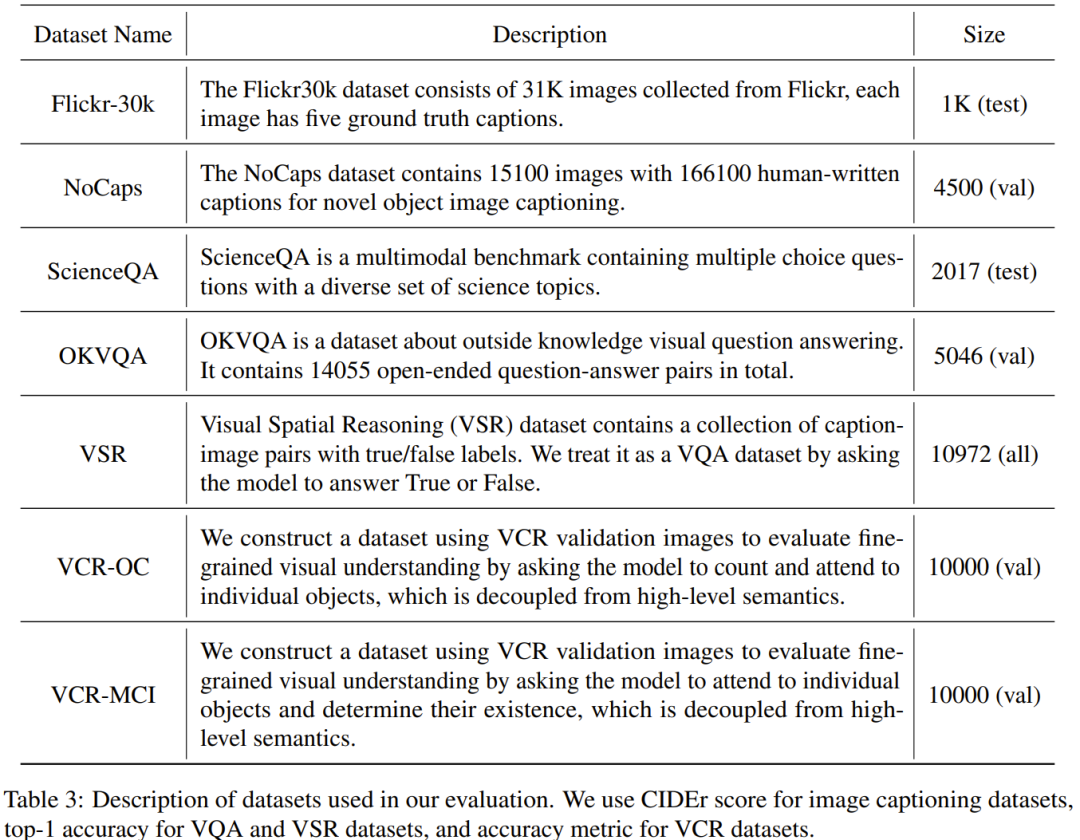

Data used in experimental evaluation The set is shown in Table 3 below.

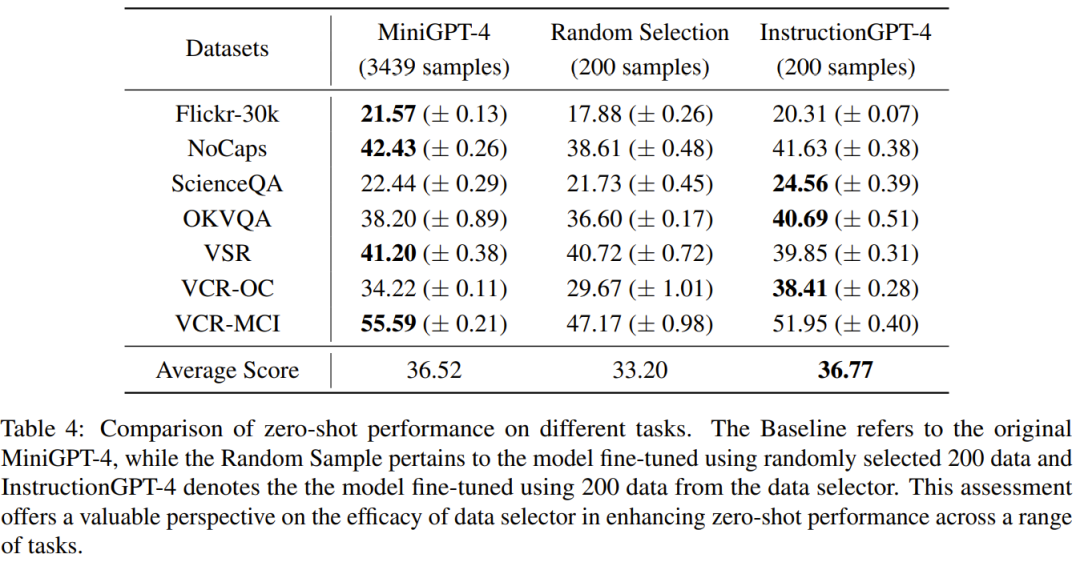

Table 4 compares MiniGPT- 4 Performance of the baseline model, MiniGPT-4 fine-tuned using randomly sampled data, and InstructionGPT-4 fine-tuned using data selectors.

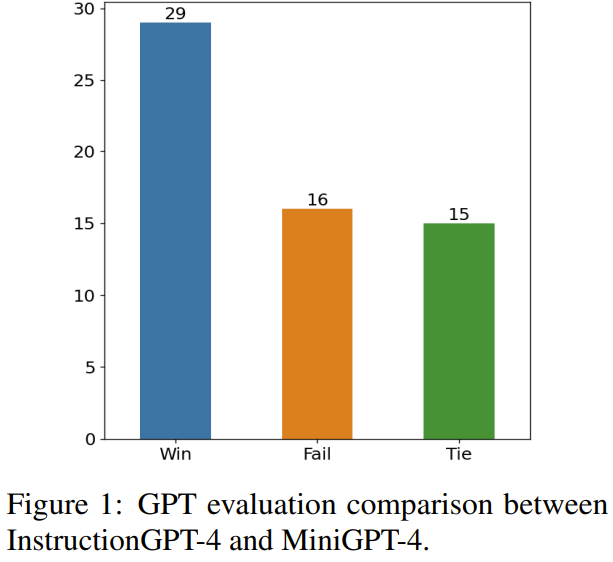

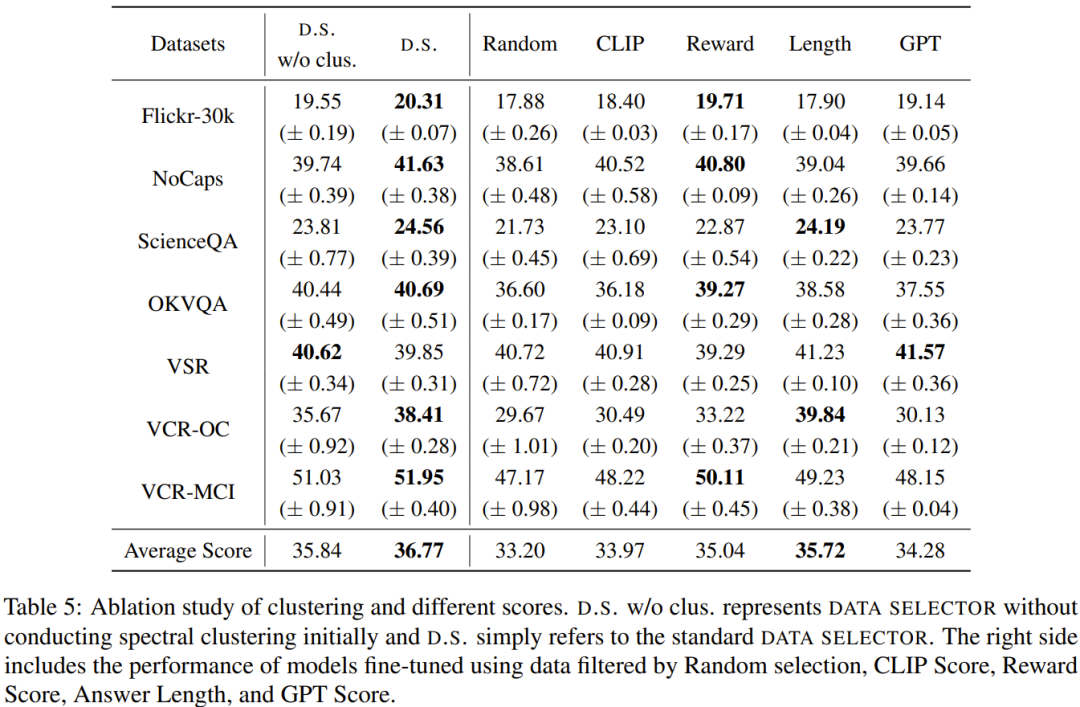

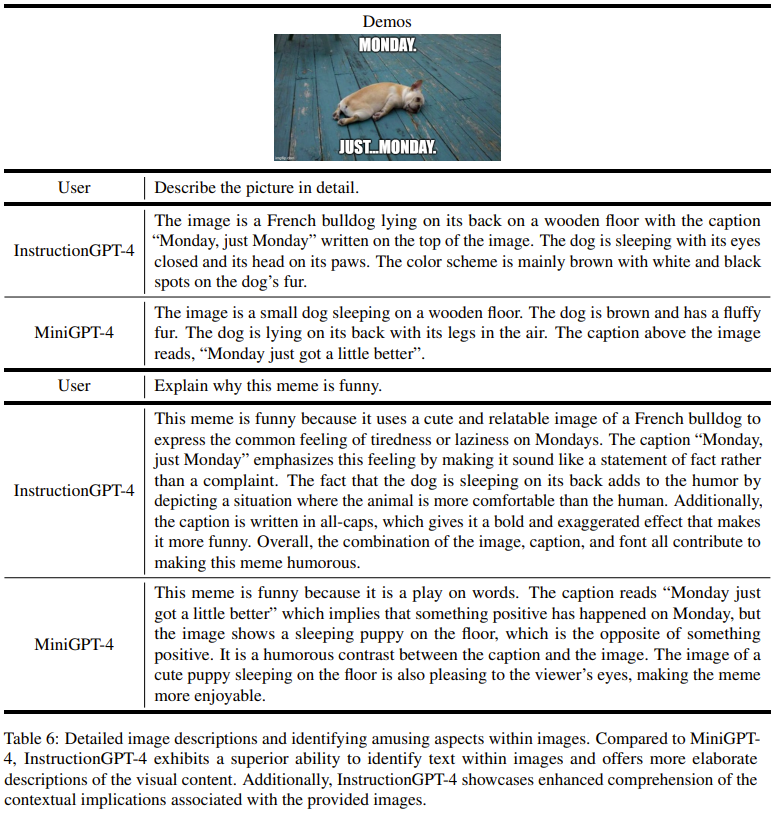

It can be observed that the average performance of InstructionGPT-4 is the best. Specifically, InstructionGPT-4 outperforms the baseline model by 2.12% on ScienceQA, and outperforms the baseline model by 2.49% and 4.19% on OKVQA and VCR-OC respectively. Furthermore, InstructionGPT-4 outperforms models trained with random samples on all other tasks except VSR. By evaluating and comparing these models on a range of tasks, it is possible to discern their respective capabilities and determine the efficacy of newly proposed data selectors that effectively identify high-quality data. # Such a comprehensive analysis shows that wise data selection can improve the zero-shot performance of the model on a variety of different tasks. 1) Win: InstructionGPT-4 in two In either case, win or win once and draw once; 2) Draw: InstructionGPT-4 and MiniGPT-4 Draw twice or win once and lose once; 3) Lose: InstructionGPT-4 Lose twice or lose once and draw once. Figure 1 shows the results of this evaluation method. Out of 60 problems, InstructionGPT-4 won 29 games, lost 16 games, and tied in the remaining 15 games. This is enough to prove that InstructionGPT-4 is significantly better than MiniGPT-4 in terms of response quality. Ablation is given in Table 5 The analysis results of the experiment, from which the importance of the clustering algorithm and various evaluation scores can be seen. In order to gain a deeper understanding of InstructionGPT-4’s ability to understand visual input and generate reasonable responses, the researchers also conducted a comparative evaluation of the image understanding and dialogue capabilities of InstructionGPT-4 and MiniGPT-4. The analysis is based on a striking example involving description and further understanding of the image, and the results are shown in Table 6 . InstructionGPT-4 is better at providing comprehensive image descriptions and identifying interesting aspects of images. Compared to MiniGPT-4, InstructionGPT-4 is more capable of recognizing text present in images. Here, InstructionGPT-4 is able to correctly point out that there is a phrase in the image: Monday, just Monday.See the original paper for more details. The above is the detailed content of After selecting 200 pieces of data, MiniGPT-4 was surpassed by matching the same model.. For more information, please follow other related articles on the PHP Chinese website!