Will end-to-end technology in the field of autonomous driving replace frameworks such as Apollo and Autoware?


Rethinking the Open-Loop Evaluation of End-to-End Autonomous Driving in nuScenes

- Affiliation: Baidu

- Authors: Jiang-Tian Zhai, Ze Feng, et al. (Jingdong Wang's group at Baidu)

- Published: arXiv

- Paper link: https://arxiv.org/abs/2305.10430

- Code link: https://github.com/E2E-AD/AD-MLP

Keywords: end-to-end autonomous driving, nuScenes open-loop evaluation

1. Summary

Autonomous driving systems are usually divided into three main tasks: perception, prediction and planning. The planning task predicts the ego vehicle's trajectory from its internal intentions and the external environment, and controls the vehicle accordingly. Most existing methods are evaluated on the nuScenes dataset, using L2 error and collision rate as the metrics.

This paper re-examines these metrics to see whether they can accurately measure the relative merits of different methods. It also designs an MLP-based method that takes raw sensor data (historical trajectory, velocity, etc.) as input and directly outputs the vehicle's future trajectory, without using any perception or prediction information such as camera images or LiDAR. Surprisingly, this simple method achieves SOTA planning performance on the nuScenes dataset, reducing the L2 error by 30%. The authors' further in-depth analysis offers new insights into the factors that matter for planning on nuScenes, and their observations suggest that the open-loop evaluation scheme for end-to-end autonomous driving on nuScenes needs to be rethought.

2. The purpose, contribution and conclusion of the paper

The paper sets out to examine the open-loop evaluation scheme for end-to-end autonomous driving on nuScenes. Without using vision or LiDAR, it achieves SOTA planning on nuScenes using only the vehicle state and high-level commands (a 21-dimensional vector in total) as input. The authors conclude that open-loop evaluation on nuScenes is unreliable and give two analyses: (1) vehicle trajectories in the nuScenes dataset tend to be straight or have very small curvature; (2) the measured collision rate depends on the occupancy-grid resolution, and the dataset's collision annotations are noisy, so the current way of evaluating collision rate is neither robust nor accurate enough.

3. Method

3.1 Introduction and related work

Existing autonomous driving models consist of several independent tasks, such as perception, prediction and planning. This modular design eases collaboration across teams, but because each task is optimized and trained independently, it also causes information loss and error accumulation across the whole system. End-to-end methods have been proposed to address this; they benefit from jointly learning spatio-temporal features of the ego vehicle and its surroundings.

Related work: ST-P3[1] proposes an interpretable, vision-based end-to-end system that unifies feature learning for perception, prediction and planning. UniAD[2] is designed systematically around the planning task: it uses query-based interfaces to connect multiple intermediate tasks and can model and encode the relationships among them. VAD[3] represents the scene in a fully vectorized manner, so it does not require dense feature representations and is computationally more efficient.

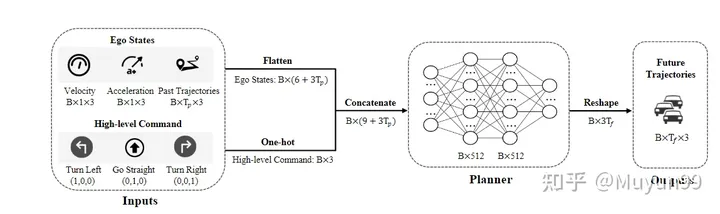

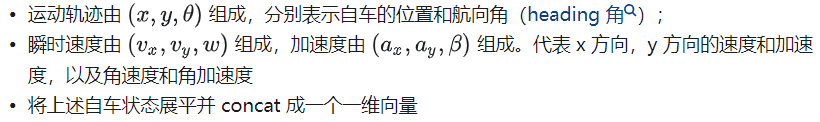

This paper asks whether the existing metrics can accurately measure the strengths and weaknesses of different methods. Instead of the perception and prediction information provided by cameras and LiDAR, its experiments use only the vehicle's physical driving state (a subset of the information used by existing methods). In short, the model has no image or point-cloud feature encoder: it encodes the vehicle's physical state directly into one-dimensional vectors, concatenates them, and feeds the result to an MLP. Training is supervised with GT trajectories, and the model directly predicts the vehicle's trajectory points over a fixed future horizon. Following previous work, evaluation on nuScenes uses L2 error and collision rate. Despite its simple design, the model obtains the best planning results, which the authors attribute to the inadequacy of the current evaluation metrics. Indeed, the ego vehicle's past trajectory, velocity and acceleration, together with the temporal continuity of motion, already reflect its future motion to a certain extent.

3.2 Model structure

Ego state: the ego vehicle's motion trajectory over the past 4 frames, together with its instantaneous velocity and acceleration.

High-level commands: since the model does not use HD maps, high-level commands are needed for navigation. Following common practice, three commands are defined: turn left, go straight and turn right. Specifically, if the ego vehicle will move more than 2 m to the left or right within the next 3 seconds, the command is set to turn left or turn right accordingly; otherwise it is go straight. The command is represented as a 1x3 one-hot vector.
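The command rule can be sketched as follows. This is our own reading, not the authors' code: it assumes ego-frame coordinates with x pointing forward and y pointing left, treats the lateral offset of the last (3 s) waypoint as "moving left or right", and uses a hypothetical slot order of [left, straight, right] for the one-hot vector.

```python
from typing import List, Sequence, Tuple

# Hypothetical slot order for the one-hot vector; the source does not fix one.
COMMANDS = ("turn_left", "go_straight", "turn_right")


def high_level_command(future_xy: Sequence[Tuple[float, float]],
                       threshold_m: float = 2.0) -> str:
    """Label a sample from its future ego trajectory.

    Assumes x forward / y left, and that the last waypoint is 3 s ahead.
    """
    lateral = future_xy[-1][1]  # lateral offset after 3 s
    if lateral > threshold_m:
        return "turn_left"
    if lateral < -threshold_m:
        return "turn_right"
    return "go_straight"


def one_hot(command: str) -> List[float]:
    """Encode a command as a 1x3 one-hot vector."""
    return [1.0 if name == command else 0.0 for name in COMMANDS]


if __name__ == "__main__":
    future = [(5.0, 0.1), (10.0, 0.4), (15.0, 0.9), (20.0, 1.5), (25.0, 2.1), (30.0, 2.6)]
    cmd = high_level_command(future)
    print(cmd, one_hot(cmd))
```

Using the maximum lateral offset over the whole horizon instead of the final one would be an equally plausible reading of "within the next 3 seconds".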

Network structure: a simple three-layer MLP whose layer dimensions from input to output are 21-512-512-18. The model outputs 6 future frames, and for each frame it predicts the ego vehicle's position (x, y coordinates) and heading angle, giving 6 × 3 = 18 outputs.
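A minimal NumPy sketch of the forward pass, assuming the 21 inputs are an 18-dimensional ego-state vector (21 minus the 3-dimensional command; its internal breakdown is not given here) concatenated with the one-hot command. The ReLU activations, He-style initialization and the class name `PlannerMLP` are our assumptions; the authors used PaddlePaddle and PyTorch, not this code.

```python
import numpy as np

EGO_STATE_DIM = 18   # assumed: 21 inputs minus the 3-dim command
COMMAND_DIM = 3
NUM_FRAMES = 6
OUT_PER_FRAME = 3    # x, y, heading


class PlannerMLP:
    """Hypothetical 21-512-512-18 MLP; ReLU between layers is an assumption."""

    def __init__(self, hidden: int = 512, seed: int = 0):
        rng = np.random.default_rng(seed)
        dims = [EGO_STATE_DIM + COMMAND_DIM, hidden, hidden, NUM_FRAMES * OUT_PER_FRAME]
        # One (weight, bias) pair per linear layer, He-style initialization.
        self.layers = [
            (rng.normal(0.0, np.sqrt(2.0 / d_in), size=(d_in, d_out)), np.zeros(d_out))
            for d_in, d_out in zip(dims[:-1], dims[1:])
        ]

    def __call__(self, ego_state: np.ndarray, command: np.ndarray) -> np.ndarray:
        # Concatenate the flat ego-state vector with the one-hot command.
        h = np.concatenate([ego_state, command], axis=-1)
        for i, (w, b) in enumerate(self.layers):
            h = h @ w + b
            if i < len(self.layers) - 1:  # no activation on the output layer
                h = np.maximum(h, 0.0)
        # Reshape the 18 outputs into 6 frames of (x, y, heading).
        return h.reshape(*h.shape[:-1], NUM_FRAMES, OUT_PER_FRAME)


if __name__ == "__main__":
    model = PlannerMLP()
    plan = model(np.zeros(EGO_STATE_DIM), np.array([0.0, 1.0, 0.0]))
    print(plan.shape)
```

The same call works on a batch of shape (B, 18) plus (B, 3), returning (B, 6, 3).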

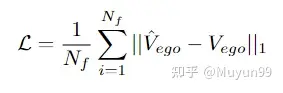

Loss function

Loss function: an L1 loss between the predicted and GT trajectories is used as the training penalty.
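As a sketch of that penalty, assuming mean reduction and equal weighting of the x, y and heading components (neither is specified here), the loss and its subgradient look like this; the function names are hypothetical.

```python
import numpy as np


def l1_trajectory_loss(pred: np.ndarray, gt: np.ndarray) -> float:
    """Mean absolute error over all frames and (x, y, heading) components."""
    if pred.shape != gt.shape:
        raise ValueError(f"shape mismatch: {pred.shape} vs {gt.shape}")
    return float(np.mean(np.abs(pred - gt)))


def l1_trajectory_loss_grad(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Subgradient of the mean L1 loss w.r.t. pred (taken as 0 where pred == gt)."""
    return np.sign(pred - gt) / pred.size
```

Compared with an L2 loss, the L1 penalty grows linearly with the error, so a few badly predicted waypoints dominate training less.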

4. Experiments

4.1 Experimental setting

Dataset: experiments are conducted on the nuScenes dataset, which consists of 1K scenes and about 40K keyframes collected mainly in Boston and Singapore with vehicles equipped with LiDAR and surround-view cameras. The data for each frame include multi-view camera images, LiDAR, velocity, acceleration and more.

Evaluation metrics: the evaluation code of the ST-P3 paper (https://github.com/OpenPerceptionX/ST-P3/blob/main/stp3/metrics.py) is used. Output trajectories are evaluated over 1 s, 2 s and 3 s horizons with two common metrics for the quality of the predicted ego trajectory:

L2 error (in meters): the average L2 distance between the predicted and ground-truth ego trajectories over the next 1 s, 2 s and 3 s, respectively.

Collision rate (in percent): to measure how often the ego vehicle collides with other objects, a box representing the ego vehicle is placed at each waypoint of the predicted trajectory and checked for overlap with the bounding boxes of vehicles and pedestrians in the current scene; the frequency of such overlaps gives the collision rate.
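Both metrics can be sketched as below. This is not the ST-P3 code linked above. It assumes 6 waypoints at 2 Hz (nuScenes keyframes are sampled at 2 Hz), so the 1 s / 2 s / 3 s horizons cover the first 2 / 4 / 6 waypoints; it averages L2 over those waypoints, models boxes as axis-aligned (ignoring yaw), uses an assumed 4.0 m × 1.8 m ego footprint, and counts a sample as colliding if any waypoint within the horizon overlaps an obstacle. `Box`, `collides` and `collision_rate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

import numpy as np

# Assumption: 6 waypoints at 2 Hz, so 1 s / 2 s / 3 s cover the first 2 / 4 / 6 points.
HORIZON_TO_POINTS = {1: 2, 2: 4, 3: 6}


def l2_errors(pred_xy: np.ndarray, gt_xy: np.ndarray) -> Dict[int, float]:
    """Average L2 distance (m) over all waypoints up to each horizon."""
    dist = np.linalg.norm(pred_xy - gt_xy, axis=-1)  # per-waypoint error
    return {h: float(dist[:n].mean()) for h, n in HORIZON_TO_POINTS.items()}


@dataclass
class Box:
    """Axis-aligned box; ignoring yaw is a simplification."""
    cx: float
    cy: float
    length: float  # extent along x
    width: float   # extent along y

    def overlaps(self, other: "Box") -> bool:
        return (abs(self.cx - other.cx) * 2 < self.length + other.length
                and abs(self.cy - other.cy) * 2 < self.width + other.width)


def collides(traj_xy: Sequence[Tuple[float, float]],
             obstacles_per_step: Sequence[List[Box]],
             n_points: int,
             ego_length: float = 4.0, ego_width: float = 1.8) -> bool:
    """Place an ego box at each of the first n_points waypoints and test overlap."""
    for (x, y), obstacles in zip(traj_xy[:n_points], obstacles_per_step):
        ego = Box(x, y, ego_length, ego_width)
        if any(ego.overlaps(o) for o in obstacles):
            return True
    return False


def collision_rate(samples, horizon: int) -> float:
    """Percentage of (trajectory, obstacles_per_step) samples colliding within the horizon."""
    n = HORIZON_TO_POINTS[horizon]
    hits = sum(collides(traj, obs, n) for traj, obs in samples)
    return 100.0 * hits / len(samples)
```

The reference implementation may aggregate collisions differently (for example per timestep rather than per sample), which is one reason reported numbers are hard to compare across papers.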

Hyperparameters and hardware: PaddlePaddle and PyTorch frameworks; AdamW optimizer (learning rate 4e-6, weight decay 1e-2) with a cosine scheduler; 6 training epochs with batch size 4 on a single V100 GPU.
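The optimizer settings can be sketched in NumPy so the update rule is visible. In PyTorch the equivalent would be `torch.optim.AdamW(params, lr=4e-6, weight_decay=1e-2)` combined with `torch.optim.lr_scheduler.CosineAnnealingLR`. The betas and eps below are PyTorch's defaults and, like annealing per epoch down to zero with no warmup, are assumptions: the summary gives only the learning rate, weight decay, scheduler type and epoch count.

```python
import math

import numpy as np


def cosine_lr(step: int, total_steps: int, base_lr: float = 4e-6, min_lr: float = 0.0) -> float:
    """Cosine annealing from base_lr to min_lr over total_steps (no warmup assumed)."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def adamw_step(param, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8, weight_decay=1e-2):
    """One AdamW update with decoupled weight decay; returns (param, m, v)."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)  # bias correction; t starts at 1
    v_hat = v / (1.0 - beta2 ** t)
    # Decay is applied to the parameter directly instead of being added to grad.
    param = param - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * param)
    return param, m, v


if __name__ == "__main__":
    for epoch in range(6):
        print(epoch, cosine_lr(epoch, 6))
```

Decoupling the decay from the gradient is what distinguishes AdamW from Adam with an L2 penalty: the decay is not rescaled by the adaptive denominator.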

4.2 Experimental results

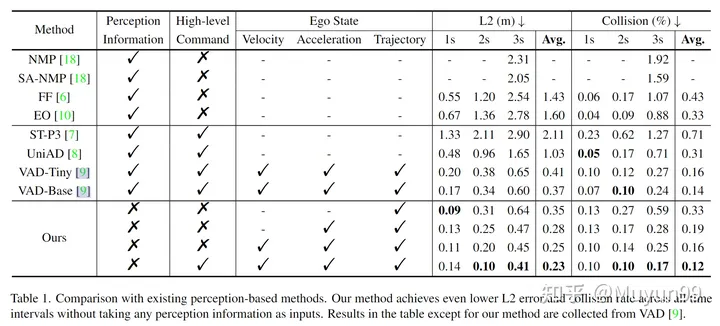

Table 1: Comparison with existing perception-based methods

Table 1 also contains ablation experiments analyzing how velocity, acceleration, trajectory and the high-level command affect the model's performance. Surprisingly, with only the trajectory as input and no perception information, the baseline model already achieves a lower average L2 error than all existing methods.

As acceleration, velocity and the high-level command are gradually added to the input, the average L2 error decreases from 0.35 m to 0.23 m and the collision rate from 0.33% to 0.12%. The model that takes both the ego state and the high-level command as input achieves the lowest L2 error and collision rate, surpassing all previous state-of-the-art perception-based methods, as shown in the last row.

4.3 Experimental Analysis

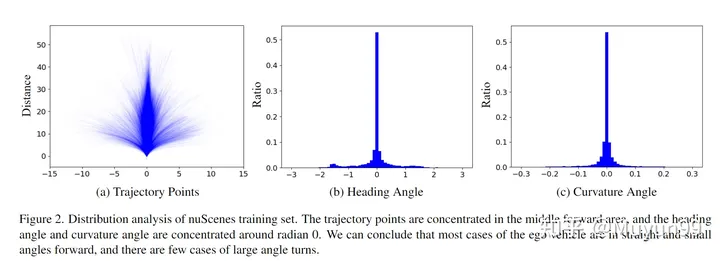

The authors analyze the distribution of the ego vehicle's state in the nuScenes training set from two perspectives: the trajectory points over the next 3 s, and the heading (yaw) angles and curvature angles.

Figure 2: Distribution analysis of the nuScenes training set.

Figure 2(a) plots all future 3 s trajectory points in the training set. The points are concentrated in the middle of the plot (straight ahead): the trajectories are mostly straight lines or curves with very small curvature.

The heading angle represents the future direction of travel relative to the current time, while the curvature angle reflects the vehicle's turning speed. As shown in Figure 2 (b) and (c), nearly 70% of the heading and curvature angles lie within the range of -0.2 to 0.2 and -0.02 to 0.02 radians, respectively. This finding is consistent with the conclusions drawn from the trajectory point distribution.
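A statistic like "nearly 70% within ±0.2 rad" can be computed with a helper like the one below. The heading definition here (the angle of the final 3 s waypoint, with x forward and y left) is our assumption, since the exact definitions of heading and curvature angle are not given; the same `fraction_within` helper applies to curvature angles with a bound of 0.02.

```python
import math
from typing import Iterable, Sequence, Tuple


def heading_of(future_xy: Sequence[Tuple[float, float]]) -> float:
    """Heading (rad) of the final waypoint relative to the current pose.

    Assumes x forward / y left; the paper's exact definition may differ.
    """
    x, y = future_xy[-1]
    return math.atan2(y, x)


def fraction_within(angles: Iterable[float], bound: float) -> float:
    """Share of angles with |angle| <= bound."""
    angles = list(angles)
    return sum(abs(a) <= bound for a in angles) / len(angles)
```

Applied to all training samples as `fraction_within((heading_of(t) for t in trajs), 0.2)`, this would give the kind of share reported in Figure 2(b).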

Based on the distributions of trajectory points, heading angles and curvature angles, the authors conclude that in the nuScenes training set the ego vehicle mostly drives straight ahead or turns only at small angles over short time horizons.
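To make concrete why ego-state inputs go so far on such data, consider a naive kinematic extrapolation. This is not something the paper evaluates; it is our own illustration, assuming ego-frame velocity (vx, vy) and acceleration (ax, ay) and 6 waypoints at 0.5 s spacing. When most future motion is nearly straight, even this hand-written rule lands close to the GT trajectory.

```python
from typing import List, Tuple


def kinematic_plan(vx: float, vy: float, ax: float = 0.0, ay: float = 0.0,
                   num_frames: int = 6, dt: float = 0.5) -> List[Tuple[float, float]]:
    """Extrapolate ego-frame waypoints assuming constant acceleration.

    With ax = ay = 0 this reduces to a constant-velocity model.
    """
    plan = []
    for k in range(1, num_frames + 1):
        t = k * dt
        plan.append((vx * t + 0.5 * ax * t * t, vy * t + 0.5 * ay * t * t))
    return plan
```

An MLP fed the same ego state can learn this relationship, plus the small corrections suggested by the high-level command.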

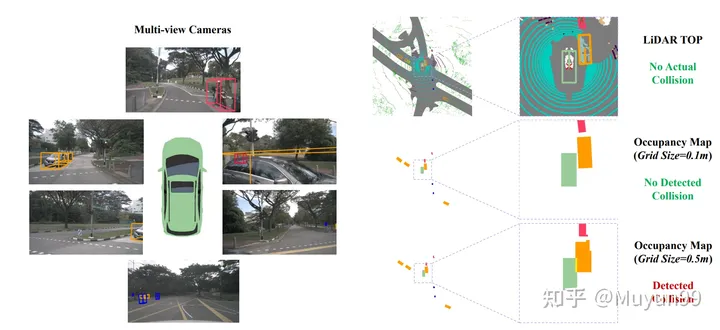

Figure 3: Different occupancy-map grid sizes can cause false collisions on GT trajectories

When computing the collision rate, existing methods commonly project objects such as vehicles and pedestrians into bird's-eye-view (BEV) space and convert them into occupied cells of an occupancy map. This is where precision is lost. The authors found that a small fraction of GT trajectory samples (about 2%) also overlap obstacles in the occupancy grid, even though the ego vehicle did not actually collide with anything when the data were collected, so collisions are detected incorrectly. Such false collisions arise when the ego vehicle is very close to an object, e.g. closer than the size of a single occupancy-map pixel.

Figure 3 shows an example of this phenomenon, together with collision-detection results for the GT trajectory at two different grid sizes. Vehicles shown in orange are those that may be falsely detected as collisions. With the smaller grid size (0.1 m), the evaluation correctly identifies the GT trajectory as collision-free, whereas with the larger grid size (0.5 m) a false collision is detected.
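The effect can be reproduced with a toy rasterization. The scheme below is hypothetical (each axis-aligned box marks every grid cell it touches, and a collision is any shared cell) and is not the ST-P3 metric code; the box coordinates, in meters, are illustrative, with the obstacle starting 0.2 m ahead of the ego box.

```python
import math
from typing import Set, Tuple

Cell = Tuple[int, int]


def rasterize(x_min: float, x_max: float, y_min: float, y_max: float,
              res: float) -> Set[Cell]:
    """Mark every grid cell that an axis-aligned box touches (hypothetical scheme)."""
    xs = range(math.floor(x_min / res), math.ceil(x_max / res))
    ys = range(math.floor(y_min / res), math.ceil(y_max / res))
    return {(i, j) for i in xs for j in ys}


def grid_collision(ego, obstacle, res: float) -> bool:
    """Report a collision if the two rasterized boxes share any cell."""
    return bool(rasterize(*ego, res) & rasterize(*obstacle, res))


if __name__ == "__main__":
    ego = (0.13, 4.13, -0.92, 0.92)        # (x_min, x_max, y_min, y_max)
    obstacle = (4.33, 8.33, -0.92, 0.92)   # 0.2 m gap in x: no real contact
    for res in (0.1, 0.5):
        print(res, grid_collision(ego, obstacle, res))
```

With 0.1 m cells the two boxes stay apart, but with 0.5 m cells both touch the same cell, so a collision-free GT trajectory is counted as a collision, mirroring Figure 3.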

Having observed how grid size affects collision detection, the authors also tested a grid size of 0.6 m: 4.8% of the samples in the nuScenes training set and 3.0% in the validation set were then flagged as collisions. By comparison, with the 0.5 m grid used previously, only 2.0% of the validation samples were misclassified as collisions. This again shows that the current way of estimating collision rate is not robust or accurate enough.

Authors' summary: the main purpose of this paper is to present observations rather than to propose a new model. Although the model performs well on the nuScenes dataset, the authors acknowledge that it is an impractical toy that cannot be used in the real world: driving with no information beyond the ego vehicle's own state is an insurmountable challenge. Nonetheless, they hope their insights will stimulate further research in this area and prompt a re-evaluation of progress in end-to-end autonomous driving.

5. Article evaluation

This paper is a thorough re-examination of recent end-to-end autonomous driving evaluations on the nuScenes dataset. Whether they are implicit end-to-end methods that output planning signals directly or explicit end-to-end methods with intermediate modules, many of them report planning metrics on nuScenes, and this Baidu paper points out that such evaluation is unreliable. Papers like this are quite interesting: publishing one is something of a slap in the face for many colleagues, but it also actively pushes the field forward. Perhaps planning does not need to be end-to-end (only perception and prediction); perhaps doing more closed-loop testing (in the CARLA simulator, etc.) when evaluating performance would better advance the autonomous driving community and help bring research papers to real vehicles. Autonomous driving still has a long way to go~

Reference

- [1] ST-P3: End-to-end Vision-based Autonomous Driving via Spatial-Temporal Feature Learning

- [2] Planning-oriented Autonomous Driving

- [3] VAD: Vectorized Scene Representation for Efficient Autonomous Driving

Original link: https://mp.weixin.qq.com/s/skNDMk4B1rtvJ_o2CM9f8w
