A comprehensive introduction to autonomous driving positions—the most complete chapter in history


1. Background

Recently, while I was sharing how people in tech can transition into the field of autonomous driving, several friends asked me the same questions: "What positions exist in L2~L4 autonomous driving? What job content does each one correspond to? What skills are required?" Today I will cover this topic that everyone wants to know about.

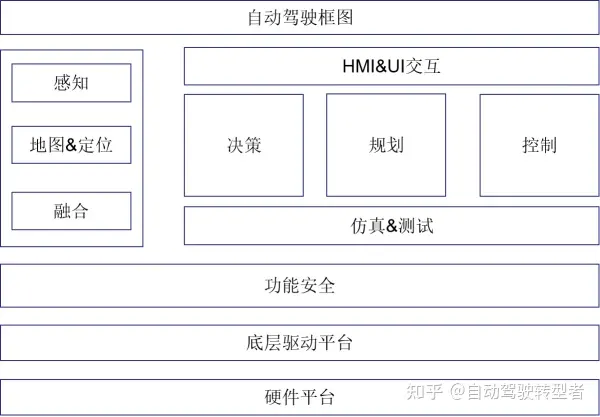

First, here is a simplified system block diagram of autonomous driving, to give everyone an overall picture of the field.

Next, the positions are introduced in two categories: algorithm engineers and non-algorithm engineers.

- Algorithm Engineer

  - Laser SLAM Algorithm Engineer

  - Visual SLAM Algorithm Engineer

  - Multi-sensor Fusion Algorithm Engineer

  - Machine Learning Algorithm Engineer

  - Computer Vision Algorithm Engineer

  - Natural Language Processing Algorithm Engineer

  - Decision Algorithm Engineer

  - Planning Algorithm Engineer

  - Control Algorithm Engineer

- Non-algorithm Engineer

  - Software Platform Development Engineer

  - System Engineer

  - Functional Safety Engineer

  - Calibration Engineer

  - Simulation Environment Engineer

  - Test Engineer

  - Data Engineer

  - UI Development Engineer

The following sections describe the job responsibilities of each position and what you need to learn for it.

2. Algorithm Engineer

2.1. Laser SLAM algorithm engineer

- Position introduction: Collect laser sensor data and build a map of the autonomous vehicle's surroundings from the point cloud data.

- Responsible for designing and developing SLAM algorithms based on laser sensors that can update and produce high-precision maps covering a variety of complex scenes.

- Skill requirements: C/C++ programming;

- Knowledge of filtering algorithms: ESKF, EKF, UKF, etc.;

- Familiarity with g2o, Ceres and other C++ frameworks used to optimize nonlinear error functions;

- Familiarity with open source SLAM frameworks, such as GLoam, Kimera, VINS, etc., is preferred.
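The filters listed above (ESKF, EKF, UKF) all build on the predict/update cycle of the linear Kalman filter. As a minimal sketch of that cycle, assuming a single scalar state with a constant-position motion model, the following Python smooths a sequence of noisy readings. The class name, the `run_filter` helper, and the noise values are illustrative assumptions and do not come from any SLAM framework.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalmanFilter:
    """Linear Kalman filter for one scalar state (illustrative names)."""
    x: float  # state estimate
    p: float  # variance of the estimate
    q: float  # process noise variance (assumed constant)
    r: float  # measurement noise variance (assumed constant)

    def predict(self, u: float = 0.0) -> None:
        # Motion model x_k = x_{k-1} + u; uncertainty grows by q.
        self.x += u
        self.p += self.q

    def update(self, z: float) -> None:
        # The Kalman gain balances the prediction against measurement z.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


def run_filter(measurements, x0=0.0, p0=1.0, q=0.01, r=0.25):
    """Run predict/update over all measurements and return the estimates."""
    kf = ScalarKalmanFilter(x=x0, p=p0, q=q, r=r)
    estimates = []
    for z in measurements:
        kf.predict()
        kf.update(z)
        estimates.append(kf.x)
    return estimates
```

An EKF keeps the same two steps but linearizes a nonlinear motion or measurement model around the current estimate at each step.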

2.2. Visual SLAM algorithm engineer

- Job introduction: Develop VSLAM-based autonomous navigation and positioning algorithms for robots, including fusing information from lidar, gyroscope, odometer, vision, etc., and constructing the robot motion model.

- Skill requirements: Learn commonly used VSLAM algorithms, such as ORB-SLAM, SVO, DSO, MonoSLAM, VINS and RGB-D, etc.;

- ROS (Robot Operating System);

- Knowledge of filtering algorithms: ESKF, EKF, UKF, etc.;

- Familiarity with g2o, Ceres and other C++ frameworks used to optimize nonlinear error functions.
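Libraries such as g2o and Ceres minimize nonlinear error functions with iterative least-squares methods such as Gauss-Newton or Levenberg-Marquardt. The sketch below does not use their APIs. It is a hand-written Gauss-Newton loop in plain Python, assuming a toy problem (estimating a 2D position from range measurements to known landmarks), to illustrate what optimizing a nonlinear error function involves. All function and parameter names are hypothetical.

```python
import math


def gauss_newton_position(landmarks, ranges, guess, iterations=20):
    """Estimate (x, y) minimizing sum of (distance_to_landmark - range)^2."""
    x, y = guess
    for _ in range(iterations):
        # Accumulate the normal equations H * delta = -b,
        # where H = J^T J and b = J^T r for residual vector r.
        h11 = h12 = h22 = b1 = b2 = 0.0
        for (lx, ly), measured in zip(landmarks, ranges):
            dx, dy = x - lx, y - ly
            predicted = math.hypot(dx, dy)
            if predicted < 1e-9:
                continue  # Jacobian undefined exactly at a landmark
            residual = predicted - measured
            jx, jy = dx / predicted, dy / predicted  # d(residual)/d(x, y)
            h11 += jx * jx
            h12 += jx * jy
            h22 += jy * jy
            b1 += jx * residual
            b2 += jy * residual
        det = h11 * h22 - h12 * h12
        if abs(det) < 1e-12:
            break  # degenerate geometry, stop iterating
        # Solve the 2x2 system in closed form and apply the step.
        x += -(h22 * b1 - h12 * b2) / det
        y += -(h11 * b2 - h12 * b1) / det
    return x, y
```

In a real SLAM back end the unknowns are many poses and landmarks rather than one 2D point, which is why sparse solvers from dedicated frameworks are used instead of a closed-form 2x2 solve.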

2.3. Multi-sensor fusion algorithm engineer

- Position introduction:

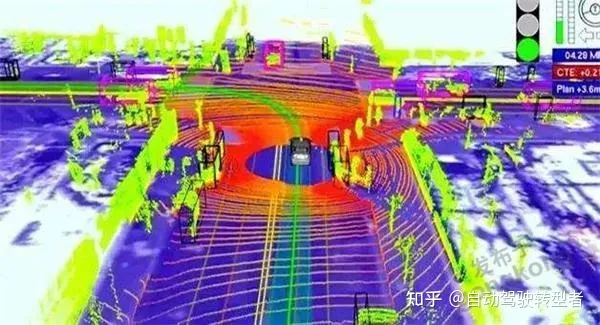

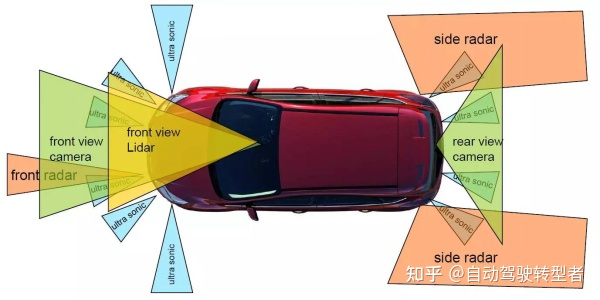

- Process and fuse information from multiple sensors such as cameras, lidar, and millimeter-wave radar to improve the environmental perception capabilities of autonomous vehicles;

- Responsible for target detection, tracking, identification and positioning based on multi-source information fusion;

- Responsible for environmental feature extraction based on multi-source information fusion to support map construction, navigation and positioning.

- Skill requirements:

- Master data analysis and fusion algorithms for camera, millimeter-wave radar, lidar, inertial navigation and related data;

- Bachelor's degree or above in computer science, information science, electronic engineering, mathematics or a related major, with a solid foundation in computer theory;

- Knowledge of precise camera models, multi-view geometry and Bundle Adjustment principles, with project experience in SfM, geometric ranging, etc.;

- Proficient in C/C++, familiar with Matlab, with good object-oriented programming practices and coding habits;

- Familiar with IMU, GPS, DR and other inertial navigation and positioning algorithm frameworks;

- Familiar with the principles, hardware characteristics and calibration algorithms of IMU, GPS and vehicle body systems.
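To make the idea of fusing measurements from several sensors concrete, here is a minimal sketch of inverse-variance weighting, one of the simplest fusion rules. It assumes each sensor reports the same quantity (for example the distance to an obstacle) with an independent, known noise variance. The function name and the sample numbers are illustrative assumptions; production systems usually fuse over time with a filter rather than a single weighted average.

```python
def fuse_measurements(measurements):
    """Fuse (value, variance) pairs with inverse-variance weighting.

    Returns the fused value and its variance. Assumes independent,
    unbiased sensors measuring the same quantity.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    for _, variance in measurements:
        if variance <= 0.0:
            raise ValueError("variances must be positive")
    weight_sum = sum(1.0 / variance for _, variance in measurements)
    fused_value = sum(value / variance for value, variance in measurements) / weight_sum
    return fused_value, 1.0 / weight_sum


# Hypothetical readings: radar is precise in range, camera is less so.
radar = (25.2, 0.04)   # metres, variance in m^2
camera = (26.0, 1.00)
distance, variance = fuse_measurements([radar, camera])
```

The fused variance is always smaller than the smallest input variance, which is the basic reason multi-sensor fusion improves perception.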

2.4. Machine learning algorithm engineer

- Job introduction:

- This direction is mainly responsible for the engineering application of data generated while vehicles drive, and leans toward data analysis, such as impact analysis of vehicle mileage and big data analysis and modeling.

- Skill requirements:

- Python, C/C++;

- Learn the basic theoretical algorithms of machine learning, such as LR, GBDT, SVM, DNN, etc.;

- Learn model training with traditional machine learning frameworks such as scikit-learn;

- Be familiar with deep learning frameworks such as PyTorch and TensorFlow (mainly the neural network parts).

2.5. Computer Vision Algorithm Engineer

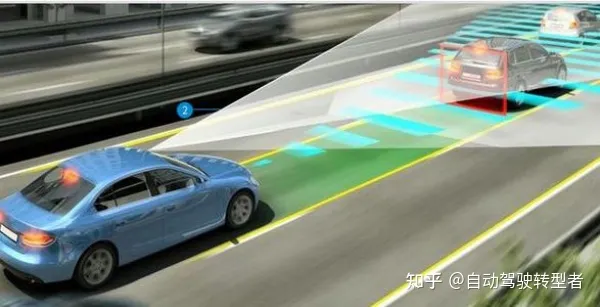

- Job introduction: This direction is mainly based on camera sensors and covers lane line detection, detection of vehicles and other obstacles, drivable area detection, and detection of traffic information such as traffic lights.

- Skill requirements: C/C++, Python, OpenCV;

- Basic machine learning algorithms (dimensionality reduction, classification, regression, etc.);

- Deep learning and deep learning frameworks;

- Common methods of computer vision and image processing (object detection, tracking, segmentation, classification and recognition, etc.).

2.6. Natural Language Processing Algorithm Engineer

- Job introduction: This direction is mainly responsible for speech recognition in vehicle scenarios, voice interaction design, etc.

- Skill requirements: Learn machine learning algorithms and deep learning algorithms (such as RNN);

- Basic tasks of natural language processing (word segmentation, part-of-speech tagging, syntactic analysis, keyword extraction);

- Ability to use machine learning models for clustering, classification, regression, ranking, etc. to solve text-related business problems;

- Familiar with deep learning frameworks such as PyTorch and TensorFlow (mainly the RNN parts).

2.7. Decision Algorithm Engineer

- Job introduction:

- Decision-making in autonomous driving converts the information passed on by the perception module into vehicle behavior that achieves the driving goal. For example, acceleration, deceleration, left turns, right turns, lane changes, and overtaking are all outputs of the decision-making module. Decisions must take the safety and comfort of the car into account, keeping passengers safe while reaching the destination as quickly as possible.

- Skill requirements:

- C/C++/Python, familiar with ROS;

- Learn commonly used decision-making algorithms, such as decision state machines, decision trees, Markov decision processes, POMDP, etc.;

- If you want to go deeper, you need to be familiar with machine learning algorithms (RNN, LSTM, RL) and master at least one deep learning framework and one reinforcement learning platform (such as gym or universe);

- Be familiar with vehicle kinematics and dynamics models.
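A decision state machine, the first algorithm family named above, can be sketched in a few lines. The example below is a simplified illustration under stated assumptions: three hypothetical behaviors, four boolean or scalar perception inputs, and an arbitrary 30 m safe-gap threshold. It is not taken from any production stack.

```python
from enum import Enum, auto


class Behavior(Enum):
    LANE_KEEP = auto()
    FOLLOW = auto()
    CHANGE_LANE = auto()


def next_behavior(current, lead_gap_m, lead_is_slower, adjacent_lane_free,
                  change_done=False, safe_gap_m=30.0):
    """Return the next behavior given simplified perception inputs."""
    if current is Behavior.CHANGE_LANE:
        # Finish the maneuver before considering anything else.
        return Behavior.LANE_KEEP if change_done else Behavior.CHANGE_LANE

    # The ego lane counts as blocked when a slower lead vehicle is too close.
    blocked = lead_is_slower and lead_gap_m < safe_gap_m
    if not blocked:
        return Behavior.LANE_KEEP
    if adjacent_lane_free:
        return Behavior.CHANGE_LANE
    return Behavior.FOLLOW
```

Each call maps the current state plus the latest perception result to the next state, so the function can be invoked once per planning cycle. Real systems add many more states and hysteresis to avoid flickering between behaviors.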

2.8. Planning algorithm engineer

- Position introduction:

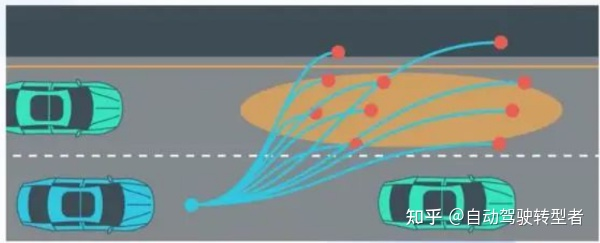

- Planning includes path planning and speed planning.

- In the planning algorithm, the self-driving vehicle first uses path planning to determine a path along which it can travel, and then determines the speed at which it can travel along the selected path.

- Skill requirements:

- C/C++/Python, ROS (some companies develop with Matlab/Simulink);

- Learn common path planning algorithms, such as A*, D*, RRT, etc.;

- Learn some curve representation methods, such as quintic curves, clothoids, cubic splines, B-spline curves, etc.;

- If you want to go deeper, learn trajectory prediction algorithms, such as MDP, POMDP, Game Theory, etc.;

- Learning deep learning and reinforcement learning techniques, such as RNN, LSTM, Deep Q-Learning, etc., is also a bonus;

- Have a mathematical theoretical foundation and background, and be familiar with vehicle kinematics and dynamics models.
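Of the path planning algorithms named above, A* is usually the first one to learn. The following is a minimal sketch on a 4-connected occupancy grid with unit step cost and a Manhattan-distance heuristic. The grid encoding (0 = free, 1 = obstacle) and the function name are assumptions made for this example; on-road planners typically search over lattices or lane graphs rather than a raw grid.

```python
import heapq


def a_star(grid, start, goal):
    """Return the shortest path from start to goal as a list of cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

The returned cell sequence is only a geometric path. In the pipeline described above it would then be smoothed with one of the listed curve representations before speed planning.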

2.9. Control algorithm engineer

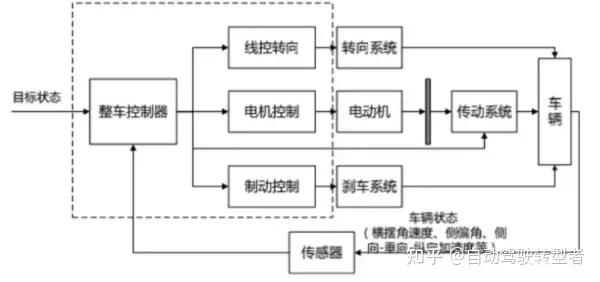

- Position introduction:

- Generally, the vehicle's lateral and longitudinal dynamics are modeled, and control algorithms are then developed to realize vehicle motion control;

- This position involves a lot of hands-on work with vehicles, which makes it a good opportunity for people from traditional car manufacturers to transition into the field of autonomous driving.

- Skill requirements:

- C/C++, Matlab/Simulink;

- Learn the basics of automatic control theory and modern control theory;

- Learn the PID, LQR and MPC algorithms;

- Learn vehicle kinematics and dynamics models, and have some understanding of the car's chassis;

- Learn simulation software such as CarSim;

- Experience developing driver assistance functions such as ACC, AEB, APA, LKA, and LCC is a bonus;

- Real-vehicle debugging experience is also a bonus.
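PID is the simplest of the control algorithms listed above. The sketch below shows a discrete PID controller tracking a target speed. It assumes a deliberately crude longitudinal model in which the controller output is applied directly as acceleration, and the gains are illustrative values chosen for this toy plant, not tuned for any real vehicle.

```python
class PIDController:
    """Discrete PID controller (illustrative, no anti-windup or output limits)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a startup kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed_tracking(target_mps, steps=600, dt=0.1):
    """Toy plant: commanded acceleration integrates directly into speed."""
    pid = PIDController(kp=0.8, ki=0.1, kd=0.05)
    speed = 0.0
    for _ in range(steps):
        accel = pid.step(target_mps - speed, dt)
        speed += accel * dt
    return speed


final_speed = simulate_speed_tracking(20.0)
```

A real longitudinal controller would add actuator limits, anti-windup and a vehicle dynamics model, which is where the kinematics, dynamics and chassis knowledge mentioned above comes in.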

3. Non-algorithm engineer

3.1. Software platform development engineer

- Job introduction:

- Design and implement the autonomous driving software platform, including kernel modification/extension, driver implementation/enhancement, middleware implementation/enhancement, system integration, performance/power consumption optimization, and stress/stability/compliance testing;

- Responsible for building the system architecture and writing low-level drivers;

- Responsible for the code implementation, performance optimization, testing and maintenance of vision-related algorithms on embedded processors (GPU, DSP, ARM and other platforms);

- Assist algorithm engineers in porting, integrating, testing and optimizing algorithms on embedded platforms.

- Skill requirements:

- C/C++ programming skills, Python;

- Experience in kernel or driver development for embedded operating systems and real-time operating systems, familiar with QNX and ROS;

- Familiar with software debugging and debugging tools;

- Understand vehicle ADAS ECUs and sensors, such as radar, camera, ultrasonic sensors and lidar;

- Familiar with Unified Diagnostic Services (UDS) and Controller Area Network (CAN);

- Familiarity with communication protocols (CAN, UDS, DoIP, SOME/IP, DDS, MQTT, REST, etc.) is a bonus.

3.2. System Engineer

- Position Introduction:

- Responsible for gathering customer requirements and releasing them to internal developers;

- Responsible for building the autonomous driving software system framework;

- Responsible for modular, verifiable system software architecture design and real-time performance optimization;

- Work with the hardware, algorithm and test teams to integrate and optimize autonomous driving systems.

- Skill requirements:

- Solid basic theoretical knowledge in computing (such as automatic control, pattern recognition, machine learning, computer vision, point cloud processing);

- Experience in kernel or driver development for embedded operating systems and real-time operating systems;

- Good communication skills and a sense of teamwork.

3.3. Functional safety engineer

- Job introduction: Support the functional safety of the product throughout the product life cycle;

- Responsible for the functional safety system design of unmanned/autonomous driving system products and for suggesting improvements to existing processes;

- Responsible for the hazard and safety analysis (HARA, FMEA, FMEDA, FTA) of unmanned/autonomous driving systems;

- Responsible for defining the safety goals of unmanned/autonomous driving systems;

- Responsible for defining the safety requirements of unmanned/autonomous driving systems;

- Skill requirements: Proficient in ISO 26262, with experience implementing functional safety projects for autonomous driving or ADAS systems (those who do functional safety at traditional automakers and want to change careers can also consider this position);

- Understand FMEA, FMEDA, FMEA-MSR, FTA and other related methods.
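In a HARA, each hazardous event is rated for severity (S1 to S3), exposure (E1 to E4) and controllability (C1 to C3), and the combination determines the ASIL. The sketch below uses a commonly cited additive shortcut for the ISO 26262 determination table: sum the three class numbers and map 7, 8, 9, 10 to ASIL A, B, C, D, with anything lower being QM. This is an illustration only. It omits the S0, E0 and C0 classes, the function name is hypothetical, and the standard itself remains the authoritative source.

```python
def determine_asil(severity, exposure, controllability):
    """Map S (1-3), E (1-4), C (1-3) class numbers to 'QM' or ASIL 'A'..'D'.

    Uses the additive shortcut for the ISO 26262 table; S0/E0/C0 are
    not handled here.
    """
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S in 1..3, E in 1..4, C in 1..3")
    total = severity + exposure + controllability
    return {7: "A", 8: "B", 9: "C", 10: "D"}.get(total, "QM")


# Hypothetical hazardous event rated S3, E4, C3.
worst_case = determine_asil(3, 4, 3)
```

The resulting ASIL then drives the rigor of the safety goals and safety requirements mentioned in the responsibilities above.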

3.4. Calibration Engineer

- Position introduction: Responsible for multi-sensor calibration for autonomous driving, including GPS, IMU, LiDAR, camera, radar, USS, etc.;

- Design and implement algorithms for calibrating sensors' internal (intrinsic) and external (extrinsic) parameters, and build a multi-sensor calibration system;

- Responsible for calibrating parameters in the relevant vehicle tests and producing test reports.

- Skill requirements: C++ programming, familiar with Linux and ROS;

- Experience in sensor calibration, familiar with visual or laser SLAM algorithms.
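To show what the internal (intrinsic) and external (extrinsic) parameters are used for once calibrated, here is a small sketch. It assumes the extrinsics are a yaw-only rotation plus a translation between two sensor frames, and the intrinsics are an ideal pinhole camera without lens distortion. All function names and numeric values are illustrative assumptions.

```python
import math


def yaw_rotation(yaw_rad):
    """3x3 rotation matrix about the z axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply_extrinsics(point, rotation, translation):
    """Transform a 3D point into another sensor frame: p' = R * p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project_pinhole(point_cam, fx, fy, cx, cy):
    """Project a camera-frame point (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0.0:
        return None  # behind the camera, not visible
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical values: a point 5 m straight ahead lands on the principal point.
pixel = project_pinhole((0.0, 0.0, 5.0), fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
```

Calibration is the inverse problem: given observations of known targets, estimate the rotation, translation, focal lengths and principal point that make projections like this match the measured pixels.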

3.5. Simulation Environment Engineer

- Position introduction:

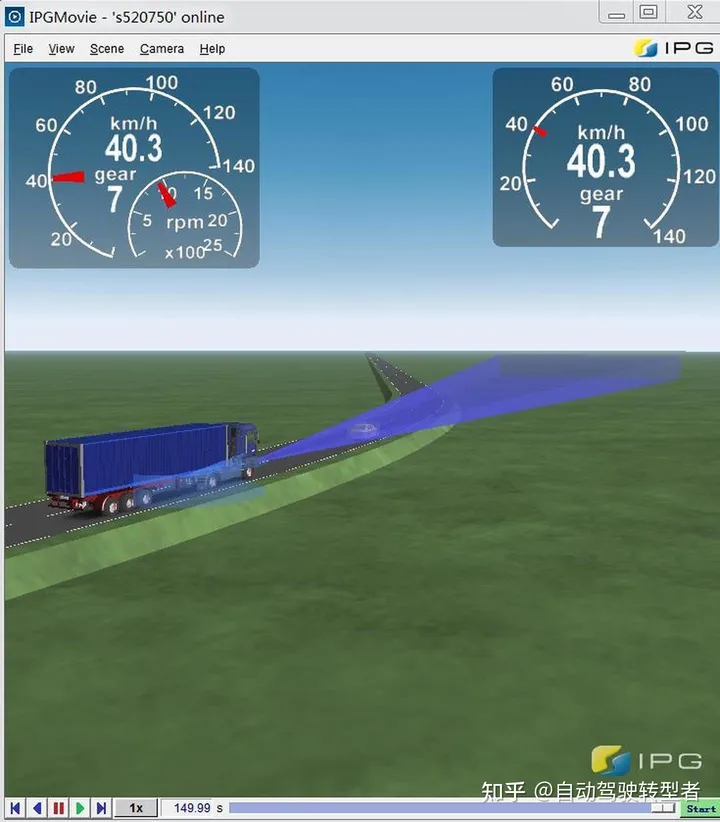

- This direction involves building autonomous driving simulation systems, including vehicle dynamics simulation, building and simulating various virtual sensor models and virtual scene models, building test scenarios from test cases, and running simulation tests of autonomous driving algorithms;

- Responsible for building autonomous driving simulation systems and running software simulations of cars, sensors, and environments. The simulation results are used together with real data to predict how the car will behave in real scenarios;

- Cooperate with the driving decision-making, path planning, simulation algorithm and other modules to realize closed-loop simulation of autonomous driving and to visualize relevant debugging information.

- Skill requirements:

- MATLAB/Simulink, Python/C++;

- Proficient in at least one commonly used vehicle dynamics or unmanned vehicle simulation package, such as PreScan, CarSim, CarMaker, etc.;

- Familiar with ROS, etc.;

- Some simulation positions only involve running simulations, while others require developing the simulation environment. The programming requirements for the latter are higher.

3.6. Test Engineer

- Job introduction:

- This direction is mainly responsible for testing work related to autonomous vehicle development: testing how the functions of the autonomous driving system perform against various metrics, and evaluating its boundary conditions and failure modes;

- Responsible for the design and implementation of automated testing (SIL, HIL) and related verification of intelligent driving products;

- Responsible for developing test cases and test plans based on the functional requirements of the system or product;

- Responsible for formulating and carrying out a complete system or product test plan, and finally writing a test report;

- Collect and test corner cases of the system, evaluate the safety of the intelligent driving system, and provide reasonable feedback on the technology.

- Skill requirements:

- Familiar with the Ubuntu/Linux operating system, able to write Python scripts;

- Familiar with the CAN bus;

- Familiar with testing methods and techniques for writing test cases;

- Familiarity with image recognition algorithms, deep learning, and big data tools such as Spark is a bonus;

- Familiarity with applications of lidar, millimeter-wave radar, ultrasonic sensors and cameras is a plus.

3.7. Big Data Development Engineer

- Job introduction:

- Data work covers both back-end data architecture and front-end presentation. An autonomous vehicle generates 1 terabyte of data every day. The challenge is to clean, refine, and summarize that data quickly, for example to quickly find the most important disengagements in a drive test, which helps engineers test more efficiently.

- Responsible for the design, development and optimization of the autonomous driving big data platform;

- Responsible for developing visualization tools for autonomous driving data annotation and processing workflows, and for designing and developing automated annotation platforms.

- Skill requirements:

- Solid data structure and algorithm skills;

- Proficient in at least one high-level programming language such as Java/Python/C++;

- Familiar with the Linux development environment;

- Experience designing and developing applications based on SQL or NoSQL databases;

- Familiar with REST services and Web standards; those who can independently build front-end applications with a mainstream front-end framework, such as React/AngularJS, get extra points;

- Familiarity with autonomous driving and related sensor data, such as lidar and camera data, is a bonus.
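Finding disengagements in a drive log, the example given in the job introduction above, comes down to detecting transitions from autonomous to manual mode. The following is a minimal sketch. It assumes a hypothetical log schema (`LogRecord` with a timestamp, a mode flag and a speed) and ranks events by speed as a stand-in for "most important"; a real platform would read from its own log format and use its own notion of severity.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    timestamp_s: float
    autonomous: bool   # True while the autonomy stack is in control
    speed_mps: float


def find_disengagements(records):
    """Return the records at which control switched from autonomous to manual."""
    events = []
    for prev, cur in zip(records, records[1:]):
        if prev.autonomous and not cur.autonomous:
            events.append(cur)
    return events


def rank_by_speed(events):
    """Assumption for this sketch: higher speed means a more important event."""
    return sorted(events, key=lambda record: record.speed_mps, reverse=True)


drive_log = [
    LogRecord(0.0, True, 10.0),
    LogRecord(1.0, False, 10.0),   # first disengagement
    LogRecord(2.0, True, 25.0),
    LogRecord(3.0, False, 25.0),   # second disengagement, at higher speed
]
ranked = rank_by_speed(find_disengagements(drive_log))
```

At terabyte scale the same transition logic would run inside a distributed job or a database query rather than over an in-memory list.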

3.8. UI development engineer

- Job introduction:

- Every company needs to build internal tools for validating complete vehicle development. It also needs various interactive pages for the remote control center, so that operators can remotely control the autonomous vehicle through a UI. The work also includes the UI presented to passengers in the cabin. Those who like design or are good at front-end development can consider this position.

- Skill requirements:

- Excellent aesthetic sense and strong visual expression;

- Proficient in the principles and methods of color, graphics, information and GUI design.

4. Ending

Finally, national policies are vigorously promoting new energy intelligent vehicles, and we hope that more and more people will join the autonomous driving industry.

Original link: https://mp.weixin.qq.com/s/d41a5VYtJ4lvMP3GO6In_g

The above is the detailed content of A comprehensive introduction to autonomous driving positions—the most complete chapter in history. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

With such a powerful AI imitation ability, it is really impossible to prevent it. It is completely impossible to prevent it. Has the development of AI reached this level now? Your front foot makes your facial features fly, and on your back foot, the exact same expression is reproduced. Staring, raising eyebrows, pouting, no matter how exaggerated the expression is, it is all imitated perfectly. Increase the difficulty, raise the eyebrows higher, open the eyes wider, and even the mouth shape is crooked, and the virtual character avatar can perfectly reproduce the expression. When you adjust the parameters on the left, the virtual avatar on the right will also change its movements accordingly to give a close-up of the mouth and eyes. The imitation cannot be said to be exactly the same, but the expression is exactly the same (far right). The research comes from institutions such as the Technical University of Munich, which proposes GaussianAvatars, which

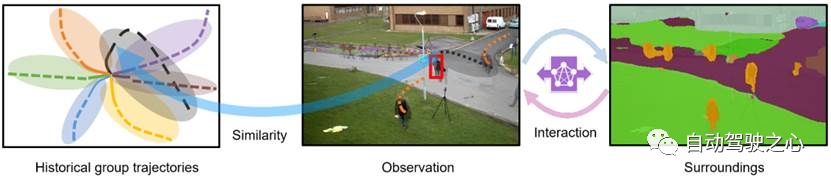

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

This article is reprinted with permission from the Autonomous Driving Heart public account. Please contact the source for reprinting. Original title: MotionLM: Multi-Agent Motion Forecasting as Language Modeling Paper link: https://arxiv.org/pdf/2309.16534.pdf Author affiliation: Waymo Conference: ICCV2023 Paper idea: For autonomous vehicle safety planning, reliably predict the future behavior of road agents is crucial. This study represents continuous trajectories as sequences of discrete motion tokens and treats multi-agent motion prediction as a language modeling task. The model we propose, MotionLM, has the following advantages: First

Do you know that programmers will be in decline in a few years?

Nov 08, 2023 am 11:17 AM

Do you know that programmers will be in decline in a few years?

Nov 08, 2023 am 11:17 AM

"ComputerWorld" magazine once wrote an article saying that "programming will disappear by 1960" because IBM developed a new language FORTRAN, which allows engineers to write the mathematical formulas they need and then submit them. Give the computer a run, so programming ends. A few years later, we heard a new saying: any business person can use business terms to describe their problems and tell the computer what to do. Using this programming language called COBOL, companies no longer need programmers. . Later, it is said that IBM developed a new programming language called RPG that allows employees to fill in forms and generate reports, so most of the company's programming needs can be completed through it.

GR-1 Fourier Intelligent Universal Humanoid Robot is about to start pre-sale!

Sep 27, 2023 pm 08:41 PM

GR-1 Fourier Intelligent Universal Humanoid Robot is about to start pre-sale!

Sep 27, 2023 pm 08:41 PM

The humanoid robot is 1.65 meters tall, weighs 55 kilograms, and has 44 degrees of freedom in its body. It can walk quickly, avoid obstacles quickly, climb steadily up and down slopes, and resist impact interference. You can now take it home! Fourier Intelligence's universal humanoid robot GR-1 has started pre-sale. Robot Lecture Hall Fourier Intelligence's Fourier GR-1 universal humanoid robot has now opened for pre-sale. GR-1 has a highly bionic trunk configuration and anthropomorphic motion control. The whole body has 44 degrees of freedom. It has the ability to walk, avoid obstacles, cross obstacles, go up and down slopes, resist interference, and adapt to different road surfaces. It is a general artificial intelligence system. Ideal carrier. Official website pre-sale page: www.fftai.cn/order#FourierGR-1# Fourier Intelligence needs to be rewritten.

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Recently, Huawei announced that it will launch a new smart wearable product equipped with Xuanji sensing system in September, which is expected to be Huawei's latest smart watch. This new product will integrate advanced emotional health monitoring functions. The Xuanji Perception System provides users with a comprehensive health assessment with its six characteristics - accuracy, comprehensiveness, speed, flexibility, openness and scalability. The system uses a super-sensing module and optimizes the multi-channel optical path architecture technology, which greatly improves the monitoring accuracy of basic indicators such as heart rate, blood oxygen and respiration rate. In addition, the Xuanji Sensing System has also expanded the research on emotional states based on heart rate data. It is not limited to physiological indicators, but can also evaluate the user's emotional state and stress level. It supports the monitoring of more than 60 sports health indicators, covering cardiovascular, respiratory, neurological, endocrine,

What are the effective methods and common Base methods for pedestrian trajectory prediction? Top conference papers sharing!

Oct 17, 2023 am 11:13 AM

What are the effective methods and common Base methods for pedestrian trajectory prediction? Top conference papers sharing!

Oct 17, 2023 am 11:13 AM

Trajectory prediction has been gaining momentum in the past two years, but most of it focuses on the direction of vehicle trajectory prediction. Today, Autonomous Driving Heart will share with you the algorithm for pedestrian trajectory prediction on NeurIPS - SHENet. In restricted scenes, human movement patterns are usually To a certain extent, it conforms to limited rules. Based on this assumption, SHENet predicts a person's future trajectory by learning implicit scene rules. The article has been authorized to be original by Autonomous Driving Heart! The author's personal understanding is that currently predicting a person's future trajectory is still a challenging problem due to the randomness and subjectivity of human movement. However, human movement patterns in constrained scenes often vary due to scene constraints (such as floor plans, roads, and obstacles) and human-to-human or human-to-object interactivity.

UniOcc: Unifying vision-centric occupancy prediction with geometric and semantic rendering!

Sep 16, 2023 pm 08:29 PM

UniOcc: Unifying vision-centric occupancy prediction with geometric and semantic rendering!

Sep 16, 2023 pm 08:29 PM

Original title: UniOcc: UnifyingVision-Centric3DOccupancyPredictionwithGeometricandSemanticRendering Please click the following link to view the paper: https://arxiv.org/pdf/2306.09117.pdf Paper idea: In this technical report, we propose a solution called UniOCC, using For vision-centric 3D occupancy prediction trajectories in CVPR2023nuScenesOpenDatasetChallenge. Existing occupancy prediction methods mainly focus on using three-dimensional occupancy labels

My smartwatch won't turn on: What to do now

Aug 23, 2023 pm 05:41 PM

My smartwatch won't turn on: What to do now

Aug 23, 2023 pm 05:41 PM

What to do if your smartwatch won't turn on? Here are the options available to restore the life of your beloved smartwatch. CHECK POWER PLAY: Imagine a star-studded stage with your smartwatch as the headliner, but the curtains don't rise because it forgot its battery! Before we delve into the details, make sure your smartwatch isn't just running on smoke and mirrors. Give it a proper charge time, and if you're feeling a little extra, give it a stylish new cable - the fashion-forward kind! Fantastic Reboot: When in doubt, give it a little R&R - that's Reboot and Revival! Press and hold these buttons like a maestro conducting a symphony. Different smartwatches have their own reboot rituals — Google is your guide. this is one