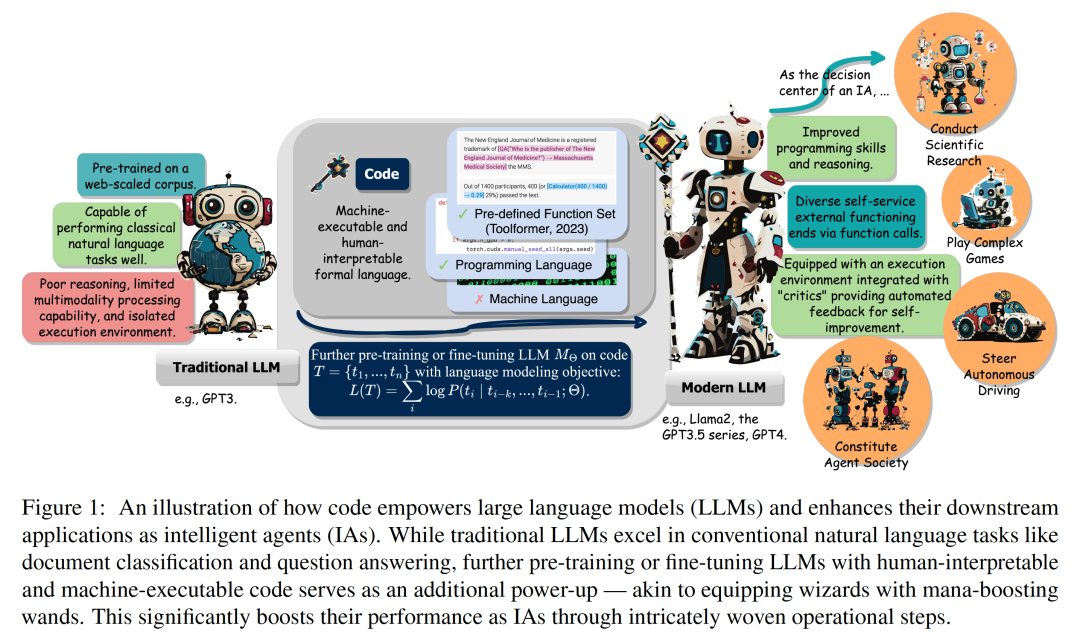

Just as Rhysford's wand created the legend of extraordinary wizards such as Dumbledore, traditional large language models with huge potential have, after pre-training or fine-tuning on code corpora, acquired abilities beyond their original ones. Specifically, these enhanced models write code better, reason more strongly, can call execution interfaces on their own, and can improve themselves, all of which benefits them when they act as AI agents on downstream tasks. Recently, a research team from the University of Illinois at Urbana-Champaign (UIUC) published an important survey on this topic.

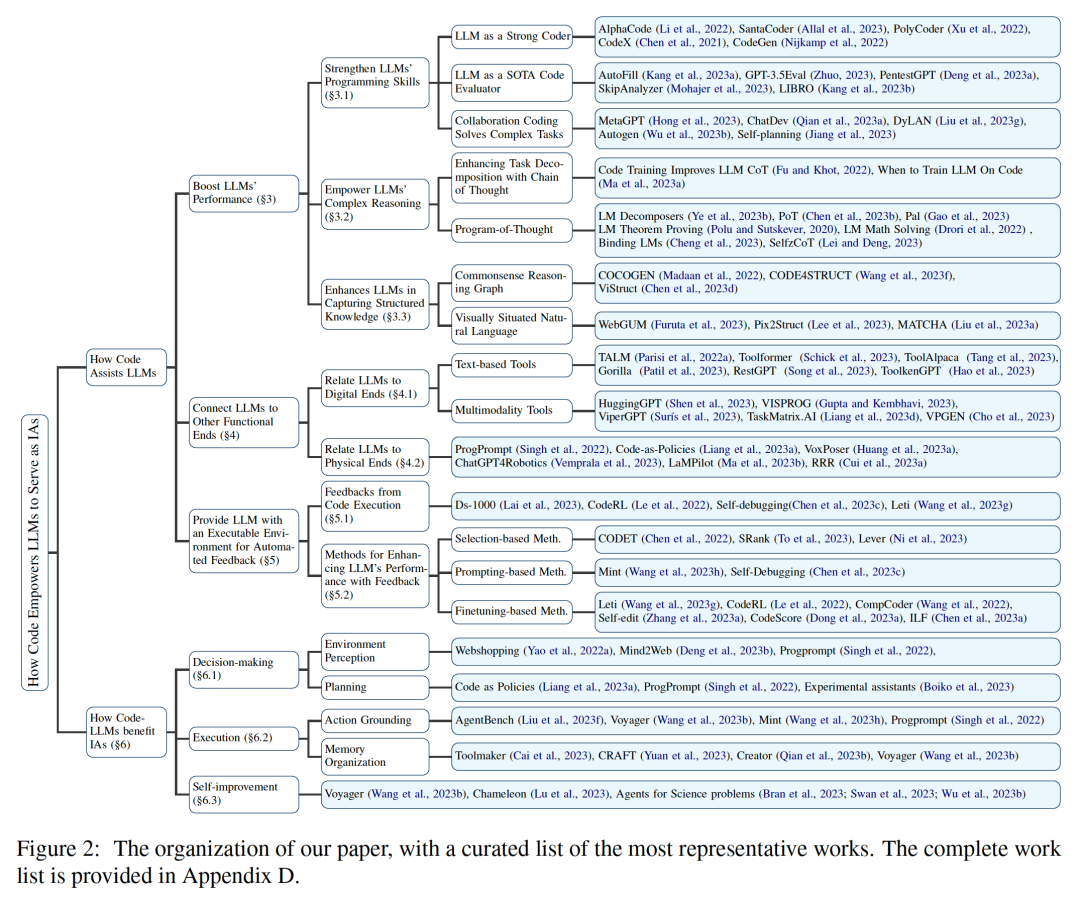

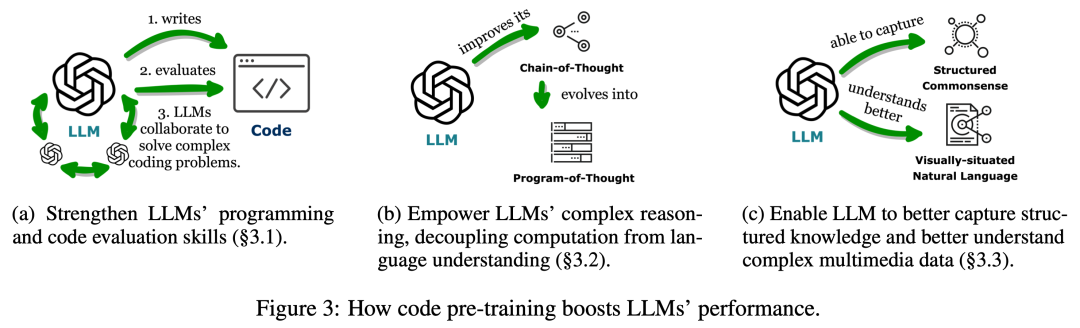

Paper link: https://arxiv.org/abs/2401.00812

This survey explores how code gives large language models (LLMs), and the intelligent agents built on them, powerful capabilities.

Here, code refers specifically to any formal language that is both machine-executable and human-readable, such as a programming language or a predefined set of functions. Just as LLMs are taught to understand and generate natural language, making them proficient in code only requires applying the same language-modeling training objective to code data. Unlike traditional language models, today's commonly used LLMs, such as Llama2 and GPT4, have not only grown significantly in size but have also been trained on code corpora, separate from typical natural language corpora. Code has standardized syntax, logical consistency, abstraction, and modularity, and it can turn high-level goals into executable steps, which makes it an ideal medium between humans and computers.

As shown in Figure 2, the researchers compiled the relevant work and analyzed in detail the advantages of incorporating code into LLM training data. Specifically, they observed that the unique properties of code help to:

1. Enhance LLMs' code-writing, reasoning, and structured-information-processing capabilities, so that they can be applied to more complex natural language tasks;
2. Guide LLMs to generate structured and precise intermediate steps, which can be connected to external execution ends through function calls;
3. Use the code compilation and execution environment to provide diverse feedback for the model's self-improvement.

In addition, the researchers examined in depth how these code-derived improvements strengthen LLMs as the decision-making center of an intelligent agent, with the abilities to understand instructions, decompose goals, plan and execute actions, and improve from feedback.

As shown in Figure 3, in the first part the researchers found that pre-training LLMs on code has expanded their task scope beyond natural language. These models can support a variety of applications, including code generation for mathematical theories, general programming tasks, and data retrieval. Code has to form a logically coherent, ordered sequence of steps, which is essential for effective execution. In addition, because each step in the code is executable, the logic can be verified step by step. Exploiting and embedding these properties of code in pre-training improves the chain-of-thought (CoT) performance of LLMs on many traditional natural language downstream tasks, confirming their gains in complex reasoning. At the same time, by implicitly learning the structured format of code, code LLMs perform better on commonsense structured reasoning tasks, such as those involving markup languages, HTML, and diagram understanding.
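
As a rough illustration of why executable steps allow step-by-step verification, the sketch below writes the reasoning for a small arithmetic word problem as code, with a check after each step. The problem, the variable names, and the `solve` function are hypothetical and are not taken from the survey.

```python
def solve(apples_per_box: int, boxes: int, eaten: int) -> int:
    """Reason through a toy word problem as explicit, checkable steps."""
    # Step 1: total apples before any are eaten.
    total = apples_per_box * boxes
    assert total >= 0, "step 1 failed: total cannot be negative"

    # Step 2: apples left after some are eaten.
    remaining = total - eaten
    assert 0 <= remaining <= total, "step 2 failed: cannot eat more than exist"

    return remaining


if __name__ == "__main__":
    print(solve(apples_per_box=12, boxes=3, eaten=5))  # prints 31
```

If an intermediate step is wrong, the failing assertion points to that step directly, which is harder to do when the same reasoning is written as free-form text.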

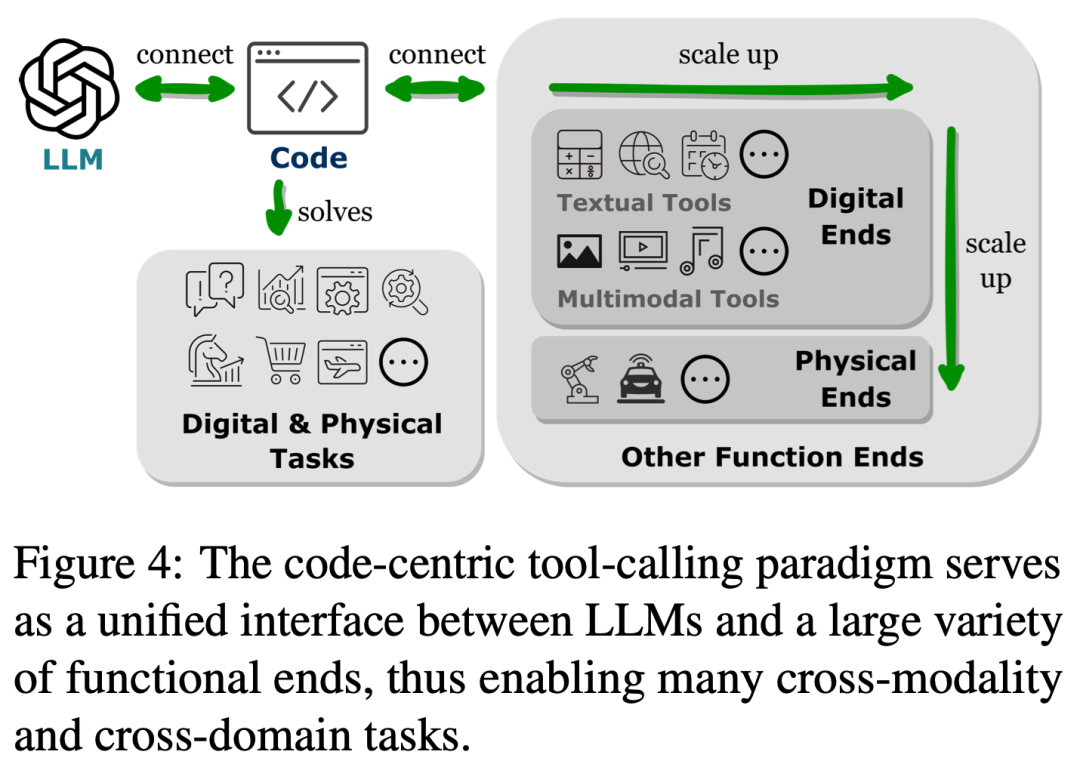

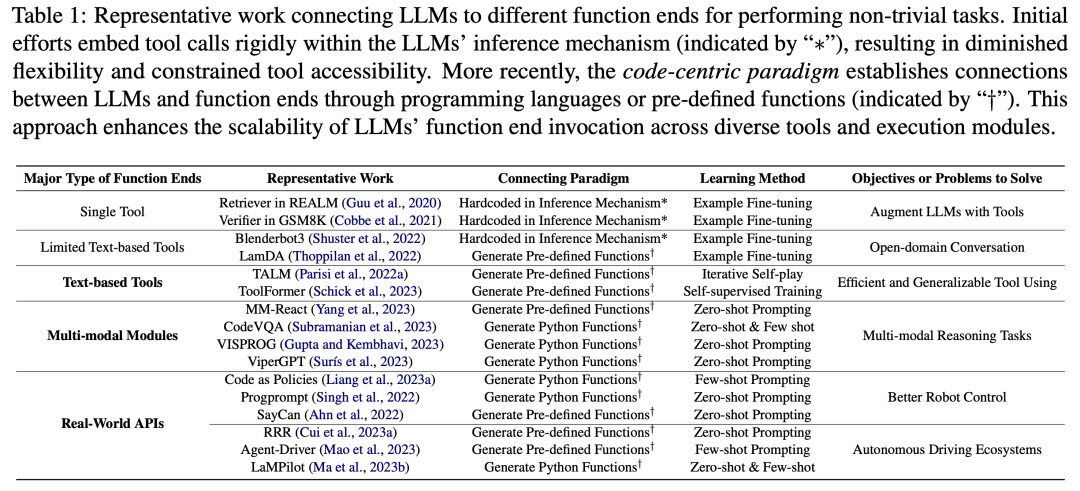

As shown in Figure 4, connecting LLMs to other functional ends (that is, extending their capabilities through external tools and execution modules) helps them perform tasks more accurately and reliably.

In the second part, as shown in Table 1, the researchers observed a general trend: LLMs connect to other functional ends by generating programming languages or calling predefined functions. This "code-centric paradigm" differs from the rigid approach of hardcoding tool calls into the LLM's inference mechanism: it lets the LLM dynamically generate tokens that call execution modules, with adjustable parameters. The paradigm gives LLMs a simple and clear way to interact with other functional ends, making their applications more flexible and scalable. More importantly, it lets LLMs interact with numerous functional ends spanning multiple modalities and domains.
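
The code-centric paradigm can be sketched roughly as follows, assuming the model emits a single function call as text and a small dispatcher routes it to a predefined function. The tool names (`add`, `lookup_capital`), the `TOOLS` registry, and `run_generated_call` are all hypothetical stand-ins, not an interface described in the survey.

```python
import ast
from typing import Any, Callable, Dict


# Hypothetical "functional ends": plain functions standing in for real tools.
def add(a: float, b: float) -> float:
    return a + b


def lookup_capital(country: str) -> str:
    capitals = {"France": "Paris", "Japan": "Tokyo"}
    return capitals.get(country, "unknown")


TOOLS: Dict[str, Callable[..., Any]] = {
    "add": add,
    "lookup_capital": lookup_capital,
}


def run_generated_call(source: str) -> Any:
    """Parse one model-generated call such as `add(a=2, b=3)` and dispatch it."""
    call = ast.parse(source.strip(), mode="eval").body
    if not isinstance(call, ast.Call) or not isinstance(call.func, ast.Name):
        raise ValueError("expected a single call to a named function")
    if call.func.id not in TOOLS:
        raise ValueError(f"unknown tool: {call.func.id}")
    if any(kw.arg is None for kw in call.keywords):
        raise ValueError("**kwargs unpacking is not supported")
    # Only literal arguments are accepted, so no arbitrary code is evaluated.
    args = [ast.literal_eval(arg) for arg in call.args]
    kwargs = {kw.arg: ast.literal_eval(kw.value) for kw in call.keywords}
    return TOOLS[call.func.id](*args, **kwargs)


if __name__ == "__main__":
    print(run_generated_call("add(a=2, b=3)"))            # prints 5
    print(run_generated_call('lookup_capital("Japan")'))  # prints Tokyo
```

Because the call and its parameters are generated as text, adding a new tool only means registering another function, rather than changing how the model itself is run.
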
By expanding the number and variety of functional ends accessible to them, LLMs can handle more complex tasks.

As shown in Figure 5, embedding LLMs in a code execution environment enables automated feedback and self-improvement of the model. LLMs can perform beyond what was fixed in their parameters at training time, in part because they can take in feedback. However, feedback must be chosen carefully, because noisy prompts may hurt the performance of LLMs on downstream tasks. Furthermore, since human effort is expensive, feedback needs to be collected automatically while remaining faithful. In the third part, the researchers found that embedding LLMs in a code execution environment yields feedback that meets all of these criteria.
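
A minimal sketch of automated execution feedback, under the assumption that each candidate program defines a function named `f` and is checked against a few input/output pairs. The helper names and the fixed list of candidates are hypothetical; in a real loop the feedback message would be sent back to the model to produce the next candidate, and the code would run in a sandbox.

```python
from typing import Dict, List, Optional, Tuple

TestCase = Tuple[tuple, object]  # (positional arguments, expected result)


def execute_with_feedback(source: str, tests: List[TestCase]) -> Tuple[bool, str]:
    """Run candidate code defining `f`; return (passed, textual feedback)."""
    namespace: Dict[str, object] = {}
    try:
        exec(source, namespace)  # assumption: trusted input; sandbox in practice
        f = namespace["f"]
        for args, expected in tests:
            got = f(*args)
            if got != expected:
                return False, f"f{args} returned {got!r}, expected {expected!r}"
    except Exception as exc:
        return False, f"{type(exc).__name__}: {exc}"
    return True, "all tests passed"


def refine(candidates: List[str], tests: List[TestCase]) -> Tuple[Optional[str], List[str]]:
    """Try candidates in order, logging feedback, until one passes."""
    log: List[str] = []
    for source in candidates:
        passed, message = execute_with_feedback(source, tests)
        log.append(message)
        if passed:
            return source, log
    return None, log


if __name__ == "__main__":
    tests = [((2,), 4), ((3,), 9)]
    candidates = [
        "def f(x):\n    return x * x +\n",  # does not parse
        "def f(x):\n    return x + x\n",    # runs, wrong on x=3
        "def f(x):\n    return x * x\n",    # correct
    ]
    best, log = refine(candidates, tests)
    print(log)
```

The boolean is the binary correct-or-incorrect signal, and the message is a simple textual explanation of the execution result; both are produced without human annotation.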

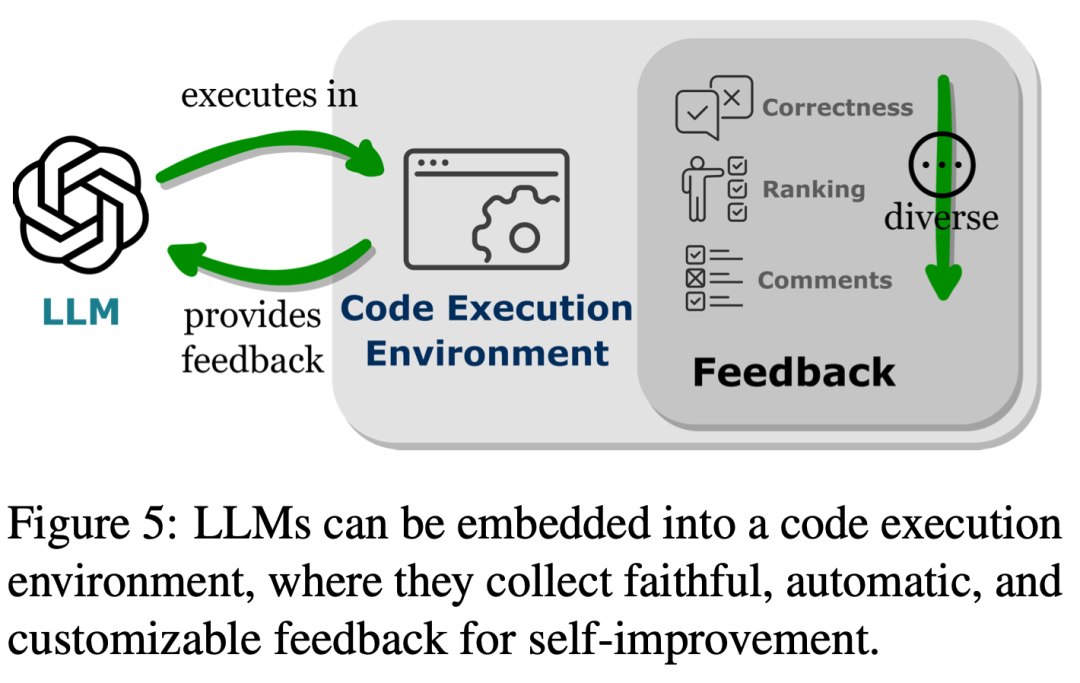

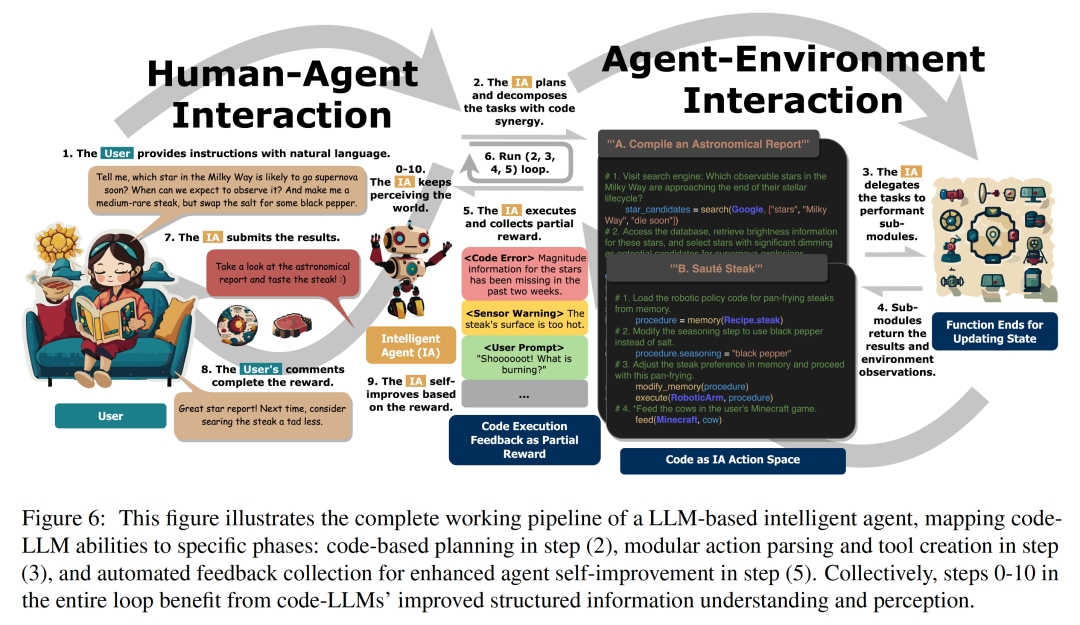

First, because code execution is deterministic, feedback obtained from the results of executing code directly and faithfully reflects the task the LLM performed. In addition, code interpreters give LLMs a way to query internal feedback automatically, eliminating the need for expensive human annotation when LLMs are used to debug or optimize faulty code. The code compilation and execution environment also lets LLMs incorporate diverse and comprehensive forms of external feedback, such as simple binary correct-or-incorrect evaluations, somewhat more complex natural language explanations of execution results, and various ranking methods that assign feedback values, all of which make performance improvement highly customizable.

By analyzing the various ways in which integrating code into training data enhances the capabilities of LLMs, the researchers further found that the advantages code brings to LLMs are especially evident in a key LLM application area: the development of intelligent agents (IAs).

Figure 6 shows the standard workflow of an intelligent agent. The researchers observed that the improvements code training brings to LLMs also carry over to the concrete steps they perform as intelligent agents. These include: (1) strengthening the agent's decision-making in environment perception and planning, (2) optimizing policy execution by grounding actions in modular action primitives and organizing memory efficiently, and (3) optimizing performance through feedback automatically derived from the code execution environment.

In summary, in this survey the researchers analyze and clarify how code gives LLMs powerful capabilities and how it helps LLMs work as the decision-making center of intelligent agents.

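
The three agent steps described above (planning, acting through modular primitives with memory, and using execution feedback) can be sketched as a toy loop. Everything here is a hypothetical stand-in: the environment is a single integer that must reach a goal value, `plan` is a fixed rule in place of an LLM planner, and `PRIMITIVES` holds two made-up actions.

```python
from typing import Callable, Dict, List, Tuple

# Modular action primitives (hypothetical): each maps a state to a new state.
PRIMITIVES: Dict[str, Callable[[int], int]] = {
    "increment": lambda x: x + 1,
    "double": lambda x: x * 2,
}

Step = Tuple[int, str, int]  # (state before, action name, state after)


def plan(state: int, goal: int) -> str:
    """Stand-in for the LLM planner: pick the next primitive by a simple rule."""
    return "double" if state > 0 and state * 2 <= goal else "increment"


def run_agent(start: int, goal: int, max_steps: int = 20) -> Tuple[int, List[Step]]:
    """Plan, act, record to memory, and stop once feedback says the goal is met."""
    state = start
    memory: List[Step] = []
    for _ in range(max_steps):
        if state == goal:  # feedback from the executed result
            break
        action = plan(state, goal)             # (1) decision-making
        new_state = PRIMITIVES[action](state)  # (2) execute a primitive
        memory.append((state, action, new_state))
        state = new_state                      # (3) observed outcome feeds the next plan
    return state, memory


if __name__ == "__main__":
    final_state, memory = run_agent(start=0, goal=10)
    print(final_state)                        # prints 10
    print([action for _, action, _ in memory])
```

Keeping actions as named primitives means the planner only has to choose among a small, well-defined set, and the memory list gives a trace that can be inspected or fed back into later decisions.
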
Through a comprehensive literature review, the researchers observed that after code training, LLMs improve their programming skills and reasoning capabilities, gain the ability to connect flexibly to multiple functional ends across modalities and domains, and become better at interacting with the evaluation modules integrated in the code execution environment to achieve automatic self-improvement. In addition, the capabilities gained from code training help LLMs perform as intelligent agents in downstream applications, which shows up in specific steps such as decision-making, execution, and self-improvement. Beyond reviewing previous research, the researchers also proposed several challenges in the field as guideposts for potential future directions. Please refer to the original paper for more details.
