MySQL redo log及recover过程浅析

写在前面:作者水平有限,欢迎不吝赐教,一切以最新源码为准。

InnoDB redo log

首先介绍下Innodb redo log是什么,为什么需要记录redo log,以及redo log的作用都有哪些。这些作为常识,只是为了本文完整。

InnoDB有buffer pool(简称bp)。bp是数据库页面的缓存,对InnoDB的任何修改操作都会首先在bp的page上进行,然后这样的页面将被标记为dirty并被放到专门的flush list上,后续将由master thread或专门的刷脏线程阶段性的将这些页面写入磁盘(disk or ssd)。这样的好处是避免每次写操作都操作磁盘导致大量的随机IO,阶段性的刷脏可以将多次对页面的修改merge成一次IO操作,同时异步写入也降低了访问的时延。然而,如果在dirty page还未刷入磁盘时,server非正常关闭,这些修改操作将会丢失,如果写入操作正在进行,甚至会由于损坏数据文件导致数据库不可用。为了避免上述问题的发生,Innodb将所有对页面的修改操作写入一个专门的文件,并在数据库启动时从此文件进行恢复操作,这个文件就是redo log file。这样的技术推迟了bp页面的刷新,从而提升了数据库的吞吐,有效的降低了访问时延。带来的问题是额外的写redo log操作的开销(顺序IO,当然很快),以及数据库启动时恢复操作所需的时间。

接下来将结合MySQL 5.6的代码看下Log文件的结构、生成过程以及数据库启动时的恢复流程。

Log文件结构Redo log文件包含一组log files,其会被循环使用。Redo log文件的大小和数目可以通过特定的参数设置,详见:innodb_log_file_size 和 innodb_log_files_in_group 。每个log文件有一个文件头,其代码在"storage/innobase/include/log0log.h"中,我们看下log文件头都记录了哪些信息:

669 /* Offsets of a log file header */670 #define LOG_GROUP_ID0 /* log group number */671 #define LOG_FILE_START_LSN4 /* lsn of the start of data in this672 log file */673 #define LOG_FILE_NO 12/* 4-byte archived log file number;674 this field is only defined in an675 archived log file */676 #define LOG_FILE_WAS_CREATED_BY_HOT_BACKUP 16677 /* a 32-byte field which contains678 the string 'ibbackup' and the679 creation time if the log file was680 created by ibbackup --restore;681 when mysqld is first time started682 on the restored database, it can683 print helpful info for the user */684 #define LOG_FILE_ARCH_COMPLETED OS_FILE_LOG_BLOCK_SIZE685 /* this 4-byte field is TRUE when686 the writing of an archived log file687 has been completed; this field is688 only defined in an archived log file */689 #define LOG_FILE_END_LSN(OS_FILE_LOG_BLOCK_SIZE + 4)690 /* lsn where the archived log file691 at least extends: actually the692 archived log file may extend to a693 later lsn, as long as it is within the694 same log block as this lsn; this field695 is defined only when an archived log696 file has been completely written */697 #define LOG_CHECKPOINT_1OS_FILE_LOG_BLOCK_SIZE698 /* first checkpoint field in the log699 header; we write alternately to the700 checkpoint fields when we make new701 checkpoints; this field is only defined702 in the first log file of a log group */703 #define LOG_CHECKPOINT_2(3 * OS_FILE_LOG_BLOCK_SIZE)704 /* second checkpoint field in the log705 header */706 #define LOG_FILE_HDR_SIZE (4 * OS_FILE_LOG_BLOCK_SIZE)

日志文件头共占用4个OS_FILE_LOG_BLOCK_SIZE的大小,这里对部分字段做简要介绍:

1. LOG_GROUP_ID 这个log文件所属的日志组,占用4个字节,当前都是0;

2. LOG_FILE_START_LSN 这个log文件记录的初始数据的lsn,占用8个字节;

3. LOG_FILE_WAS_CRATED_BY_HOT_BACKUP 备份程序所占用的字节数,共占用32字节,如xtrabackup在备份时会在xtrabackup_logfile文件中记录"xtrabackup backup_time";

4. LOG_CHECKPOINT_1/LOG_CHECKPOINT_2 两个记录InnoDB checkpoint信息的字段,分别从文件头的第二个和第四个block开始记录,只使用日志文件组的第一个日志文件。

这里多说两句,每次checkpoint后InnoDB都需要更新这两个字段的值,因此redo log的写入并非严格的顺序写;

每个log文件包含许多log records。log records将以OS_FILE_LOG_BLOCK_SIZE(默认值为512字节)为单位顺序写入log文件。每一条记录都有自己的LSN(log sequence number,表示从日志记录创建开始到特定的日志记录已经写入的字节数)。每个Log Block包含一个header段、一个tailer段,以及一组log records。

首先看下Log Block header。block header的开始4个字节是log block number,表示这是第几个block块。其是通过LSN计算得来,计算的函数是log_block_convert_lsn_to_no();接下来两个字节表示该block中已经有多少个字节被使用;再后边两个字节表示该block中作为一个新的MTR开始log record的偏移量,由于一个block中可以包含多个MTR记录的log,所以需要有记录表示此偏移量。再然后四个字节表示该block的checkpoint number。block trailer占用四个字节,表示此log block计算出的checksum值,用于正确性校验,MySQL5.6提供了若干种计算checksum的算法,这里不再赘述。我们可以结合代码中给出的注释,再了解下header和trailer的各个字段的含义。

580 /* Offsets of a log block header */581 #define LOG_BLOCK_HDR_NO0 /* block number which must be > 0 and582 is allowed to wrap around at 2G; the583 highest bit is set to 1 if this is the584 first log block in a log flush write585 segment */586 #define LOG_BLOCK_FLUSH_BIT_MASK 0x80000000UL587 /* mask used to get the highest bit in588 the preceding field */589 #define LOG_BLOCK_HDR_DATA_LEN4 /* number of bytes of log written to590 this block */591 #define LOG_BLOCK_FIRST_REC_GROUP 6 /* offset of the first start of an592 mtr log record group in this log block,593 0 if none; if the value is the same594 as LOG_BLOCK_HDR_DATA_LEN, it means595 that the first rec group has not yet596 been catenated to this log block, but597 if it will, it will start at this598 offset; an archive recovery can599 start parsing the log records starting600 from this offset in this log block,601 if value not 0 */602 #define LOG_BLOCK_CHECKPOINT_NO 8 /* 4 lower bytes of the value of603 log_sys->next_checkpoint_no when the604 log block was last written to: if the605 block has not yet been written full,606 this value is only updated before a607 log buffer flush */608 #define LOG_BLOCK_HDR_SIZE12/* size of the log block header in609 bytes */610611 /* Offsets of a log block trailer from the end of the block */612 #define LOG_BLOCK_CHECKSUM4 /* 4 byte checksum of the log block613 contents; in InnoDB versions614 <strong>Log 记录生成</strong><p>在介绍了log file和log block的结构后,需要描述log record在InnoDB内部是如何生成的,其“生命周期”是如何在内存中一步步流转并最终写入磁盘中的。这里涉及到两块内存缓冲,涉及到mtr/log_sys等内部结构,后续会一一介绍。</p><p>首先介绍下log_sys。log_sys是InnoDB在内存中保存的一个全局的结构体(struct名为log_t,global object名为log_sys),其维护了一块全局内存区域叫做log buffer(log_sys->buf),同时维护有若干lsn值等信息表示logging进行的状态。其在log_init函数中对所有的内部区域进行分配并对各个变量进行初始化。</p><p>log_t的结构体很大,这里不再粘出来,可以自行看"storage/innobase/include/log0log.h: struct log_t"。下边会对其中比较重要的字段值加以说明:</p>

| log_sys->lsn | 接下来将要生成的log record使用此lsn的值 |

log_sys->flushed_do_disk_lsn |

redo log file已经被刷新到此lsn。比该lsn值小的日志记录已经被安全的记录在磁盘上 |

log_sys->write_lsn |

当前正在执行的写操作使用的临界lsn值; |

log_sys->current_flush_lsn |

当前正在执行的write + flush操作使用的临界lsn值,一般和log_sys->write_lsn相等; |

| log_sys->buf | 内存中全局的log buffer,和每个mtr自己的buffer有所区别; |

log_sys->buf_size |

log_sys->buf的size |

log_sys->buf_free |

写入buffer的起始偏移量 |

log_sys->buf_next_to_write |

buffer中还未写到log file的起始偏移量。下次执行write+flush操作时,将会从此偏移量开始 |

log_sys->max_buf_free |

确定flush操作执行的时间点,当log_sys->buf_free比此值大时需要执行flush操作,具体看log_check_margins函数 |

接下来介绍mtr。mtr是mini-transactions的缩写。其在代码中对应的结构体是mtr_t,内部有一个局部buffer,会将一组log record集中起来,批量写入log buffer。mtr_t的结构体如下所示:

376 /* Mini-transaction handle and buffer */377 struct mtr_t{378 #ifdef UNIV_DEBUG379 ulint state;/*!<p>mtr_t::log --作为mtr的局部缓存,记录log record;</p><p>mtr_t::memo --包含了一组由此mtr涉及的操作造成的脏页列表,其会在mtr_commit执行后添加到flush list(参见mtr_memo_pop_all()函数);</p><p>mtr的一个典型应用场景如下:</p><p>1. 创建一个mtr_t类型的对象;</p><p>2. 执行mtr_start函数,此函数将会初始化mtr_t的字段,包括local buffer;</p><p>3. 在对内存bp中的page进行修改的同时,调用mlog_write_ulint类似的函数,生成redo log record,保存在local buffer中;</p><p>4. 执行mtr_commit函数,此函数将会将local buffer中的redo log拷贝到全局的log_sys->buffer,同时将脏页添加到flush list,供后续执行flush操作时使用;</p><p>mtr_commit函数调用mtr_log_reserve_and_write,进而调用log_write_low执行上述的拷贝操作。如果需要,此函数将会在log_sys->buf上创建一个新的log block,填充header、tailer以及计算checksum。</p><p>我们知道,为了保证数据库ACID特性中的原子性和持久性,理论上,在事务提交时,redo log应已经安全原子的写到磁盘文件之中。回到MySQL,文件内存中的log_sys->buffer何时以及如何写入磁盘中的redo log file与innodb_flush_log_at_trx_commit的设置密切相关。无论对于DBA还是MySQL的使用者对这个参数都已经相当熟悉,这里直接举例不同取值时log子系统是如何操作的。</p><p>innodb_flush_log_at_trx_commit=1/2。此时每次事务提交时都会写redo log,不同的是1对应write+flush,2只write,而由指定线程周期性的执行flush操作(周期多为1s)。执行write操作的函数是log_group_write_buf,其由log_write_up_to函数调用。一个典型的调用栈如下:</p><pre class="brush:php;toolbar:false">(trx_commit_in_memory()/trx_commit_complete_for_mysql()/trx_prepare() e.t.c)->trx_flush_log_if_needed()->trx_flush_log_if_needed_low()->log_write_up_to()->log_group_write_buf().log_group_write_buf会再调用innodb封装的底层IO系统,其实现很复杂,这里不再展开。

innodb_flush_log_at_trx_commit=0时,每次事务commit不会再调用写redo log的函数,其写入逻辑都由master_thread完成,典型的调用栈如下:

srv_master_thread()->(srv_master_do_active_tasks() / srv_master_do_idle_tasks() / srv_master_do_shutdown_tasks())->srv_sync_log_buffer_in_background()->log_buffer_sync_in_background()->log_write_up_to()->... .

除此参数的影响之外,还有一些场景下要求刷新redo log文件。这里举几个例子:

1)为了保证write ahead logging(WAL),在刷新脏页前要求其对应的redo log已经写到磁盘,因此需要调用log_write_up_to函数;

2)为了循环利用log file,在log file空间不足时需要执行checkpoint(同步或异步),此时会通过调用log_checkpoint执行日志刷新操作。checkpoint会极大的影响数据库的性能,这也是log file不能设置的太小的主要原因;

3)在执行一些管理命令时要求刷新redo log文件,比如关闭数据库;

这里再简要总结一下一个log record的“生命周期”:

1. redo log record首先由mtr生成并保存在mtr的local buffer中。这里保存的redo log record需要记录数据库恢复阶段所需的所有信息,并且要求恢复操作是幂等的;

2. 当mtr_commit被调用后,redo log record被记录在全局内存的log buffer之中;

3. 根据需要(需要额外的空间?事务commit?),redo log buffer将会write(+flush)到磁盘上的redo log文件中,此时redo log已经被安全的保存起来;

4. mtr_commit执行时会给每个log record生成一个lsn,此lsn确定了其在log file中的位置;

5. lsn同时是联系redo log和dirty page的纽带,WAL要求redo log在刷脏前写入磁盘,同时,如果lsn相关联的页面都已经写入了磁盘,那么磁盘上redo log file中对应的log record空间可以被循环利用;

6. 数据库恢复阶段,使用被持久化的redo log来恢复数据库;

接下来介绍redo log在数据库恢复阶段所起的重要作用。

Log RecoveryInnoDB的recovery的函数入口是innobase_start_or_create_for_mysql,其在mysql启动时由innobase_init函数调用。我们接下来看下源码,在此函数内可以看到如下两个函数调用:

1. recv_recovery_from_checkpoint_start

2. recv_recovery_from_checkpoint_finish

代码注释中特意强调,在任何情况下,数据库启动时都会尝试执行recovery操作,这是作为函数启动时正常代码路径的一部分。

主要恢复工作在第一个函数内完成,第二个函数做扫尾清理工作。这里,直接看函数的注释可以清楚函数的具体工作是什么。

146 /** Wrapper for recv_recovery_from_checkpoint_start_func().147 Recovers from a checkpoint. When this function returns, the database is able148 to start processing of new user transactions, but the function149 recv_recovery_from_checkpoint_finish should be called later to complete150 the recovery and free the resources used in it.151 @param type in: LOG_CHECKPOINT or LOG_ARCHIVE152 @param limin: recover up to this log sequence number if possible153 @param minin: minimum flushed log sequence number from data files154 @param maxin: maximum flushed log sequence number from data files155 @return error code or DB_SUCCESS */156 # define recv_recovery_from_checkpoint_start(type,lim,min,max)/157 recv_recovery_from_checkpoint_start_func(type,lim,min,max)

与log_t结构体相对应,恢复阶段也有一个结构体,叫做recv_sys_t,这个结构体在recv_recovery_from_checkpoint_start函数中通过recv_sys_create和recv_sys_init两个函数初始化。recv_sys_t中同样有几个和lsn相关的字段,这里做下介绍。

recv_sys->limit_lsn |

恢复应该执行到的最大的LSN值,这里赋值为LSN_MAX(uint64_t的最大值) |

recv_sys->parse_start_lsn |

恢复解析日志阶段所使用的最起始的LSN值,这里等于最后一次执行checkpoint对应的LSN值 |

recv_sys->scanned_lsn |

当前扫描到的LSN值 |

recv_sys->recovered_lsn |

当前恢复到的LSN值,此值小于等于recv_sys->scanned_lsn |

在获取start_lsn后,recv_recovery_from_checkpoint_start函数调用recv_group_scan_log_recs函数读取及解析log records。

我们重点看下recv_group_scan_log_recs函数:

2908 /*******************************************************//**2909 Scans log from a buffer and stores new log data to the parsing buffer. Parses2910 and hashes the log records if new data found. */2911 static2912 void2913 recv_group_scan_log_recs(2914 /*=====================*/2915 log_group_t*group,/*!buf,2934group, start_lsn, end_lsn);29352936 finished = recv_scan_log_recs(2937 (buf_pool_get_n_pages()2938 - (recv_n_pool_free_frames * srv_buf_pool_instances))2939 * UNIV_PAGE_SIZE,2940 TRUE, log_sys->buf, RECV_SCAN_SIZE,2941 start_lsn, contiguous_lsn, group_scanned_lsn);2942 start_lsn = end_lsn;2943 }

此函数内部是一个while循环。log_group_read_log_seg函数首先将log record读取到一个内存缓冲区中(这里是log_sys->buf),接着调用recv_scan_log_recs函数用来解析这些log record。解析过程会计算log block的checksum以及block no和lsn是否对应。解析过程完成后,解析结果会存入recv_sys->addr_hash维护的hash表中。这个hash表的key是通过space id和page number计算得到,value是一组应用到指定页面的经过解析后的log record,这里不再展开。

上述步骤完成后,recv_apply_hashed_log_recs函数可能会在recv_group_scan_log_recs或recv_recovery_from_checkpoint_start函数中调用,此函数将addr_hash中的log应用到特定的page上。此函数会调用recv_recover_page函数做真正的page recovery操作,此时会判断页面的lsn要比log record的lsn小。

105 /** Wrapper for recv_recover_page_func().106 Applies the hashed log records to the page, if the page lsn is less than the107 lsn of a log record. This can be called when a buffer page has just been108 read in, or also for a page already in the buffer pool.109 @param jriin: TRUE if just read in (the i/o handler calls this for110 a freshly read page)111 @param blockin/out: the buffer block112 */113 # define recv_recover_page(jri, block)recv_recover_page_func(jri, block)

如上就是整个页面的恢复流程。

附一个问题环节,后续会将redo log相关的问题记录在这里。

1. Q: Log_file, Log_block, Log_record的关系?

A: Log_file由一组log block组成,每个log block都是固定大小的。log block中除了header/tailer以外的字节都是记录的log record

2. Q: 是不是每一次的Commit,产生的应该是一个Log_block ?

A: 这个不一定的。写入log_block由mtr_commit确定,而不是事务提交确定。看log record大小,如果大小不需要跨log block,就会继续在当前的log block中写 。

3. Q: Log_record的结构又是怎么样的呢?

A: 这个结构很多,也没有细研究,具体看后边登博图中的简要介绍吧;

4. Q: 每个Block应该有下一个Block的偏移吗,还是顺序即可,还是记录下一个的Block_number

A: block都是固定大小的,顺序写的

5. Q: 那如何知道这个Block是不是完整的,是不是依赖下一个Block呢?

A: block开始有2个字节记录 此block中第一个mtr开始的位置,如果这个值是0,证明还是上一个block的同一个mtr。

6. Q: 一个事务是不是需要多个mtr_commit

A: 是的。mtr的m == mini;

7. Q: 这些Log_block是不是在Commit的时候一起刷到当中?

A: mtr_commit时会写入log buffer,具体什么时候写到log file就不一定了

8. Q: 那LSN是如何写的呢?

A: lsn就是相当于在log file中的位置,那么在写入log buffer时就会确定这个lsn的大小了 。当前只有一个log buffer,在log buffer中的位置和在log file中的位置是一致的

9. Q: 那我Commit的时候做什么事情呢?

A: 可能写log 、也可能不写,由innodb_flush_log_at_trx_commit这个参数决定啊

10. Q: 这两个值是干嘛用的: LOG_CHECKPOINT_1/LOG_CHECKPOINT_2

A: 这两个可以理解为log file头信息的一部分(占用文件头第二和第四个block),每次执行checkpoint都需要更新这两个字段,后续恢复时,每个页面对应lsn中比这个checkpoint值小的,认为是已经写入了,不需要再恢复

文章最后,将网易杭研院何登成博士-登博博客上的一个log block的结构图放在这,再画图也不会比登博这张图画的更清晰了,版权属于登博。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1359

1359

52

52

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Pinduoduo software provides a lot of good products, you can buy them anytime and anywhere, and the quality of each product is strictly controlled, every product is genuine, and there are many preferential shopping discounts, allowing everyone to shop online Simply can not stop. Enter your mobile phone number to log in online, add multiple delivery addresses and contact information online, and check the latest logistics trends at any time. Product sections of different categories are open, search and swipe up and down to purchase and place orders, and experience convenience without leaving home. With the online shopping service, you can also view all purchase records, including the goods you have purchased, and receive dozens of shopping red envelopes and coupons for free. Now the editor has provided Pinduoduo users with a detailed online way to view purchased product records. method. 1. Open your phone and click on the Pinduoduo icon.

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

Start from scratch and guide you step by step to install Flask and quickly establish a personal blog

Feb 19, 2024 pm 04:01 PM

Start from scratch and guide you step by step to install Flask and quickly establish a personal blog

Feb 19, 2024 pm 04:01 PM

Starting from scratch, I will teach you step by step how to install Flask and quickly build a personal blog. As a person who likes writing, it is very important to have a personal blog. As a lightweight Python Web framework, Flask can help us quickly build a simple and fully functional personal blog. In this article, I will start from scratch and teach you step by step how to install Flask and quickly build a personal blog. Step 1: Install Python and pip Before starting, we need to install Python and pi first

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

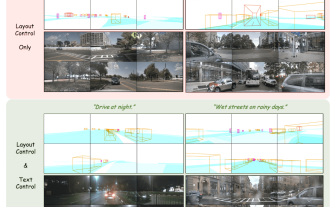

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

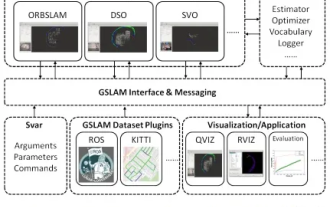

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.