The Swin moment for visual Mamba models: the Chinese Academy of Sciences, Huawei, and others launch VMamba

The Transformer holds a dominant position in the field of large models. However, as model scale grows and sequence lengths increase, the limitations of the traditional Transformer architecture are becoming apparent. The arrival of Mamba is quickly changing this situation: its strong performance caused an immediate stir in the AI community, and it promises significant advances in large-model training and long-sequence processing.

Last Thursday, Vision Mamba (Vim) demonstrated its potential to become the next-generation backbone for vision foundation models. Just one day later, researchers from the Chinese Academy of Sciences, Huawei, and Pengcheng Laboratory proposed VMamba: a visual Mamba model with a global receptive field and linear complexity. This work marks the arrival of the "Swin moment" for visual Mamba models.

- Paper title: VMamba: Visual State Space Model

- Paper address: https://arxiv.org/abs/2401.10166

- Code address: https://github.com/MzeroMiko/VMamba

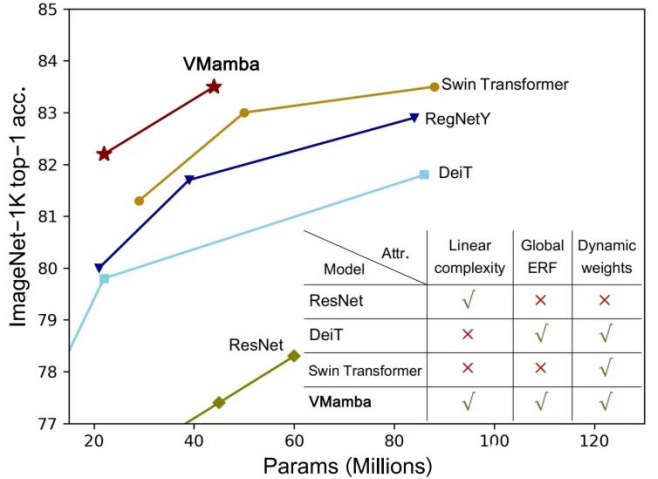

CNNs and Vision Transformers (ViTs) are currently the two most mainstream vision foundation models. CNNs have linear complexity, while ViTs have stronger data-fitting capability at the cost of higher computational complexity. The researchers attribute ViT's strong fitting ability to its global receptive field and dynamic weights. Inspired by the Mamba model, they designed a model that has both properties at linear complexity: the Visual State Space Model (VMamba). Extensive experiments show that VMamba performs well across a range of vision tasks. As shown in the figure below, VMamba-S achieves 83.5% accuracy on ImageNet-1K, which is 3.2 points higher than Vim-S and 0.5 points higher than Swin-S.

Method introduction

The key to VMamba's success is its use of the S6 model, which was originally designed for natural language processing (NLP) tasks. Unlike ViT's attention mechanism, S6 reduces quadratic complexity to linear by having each element of a 1D sequence interact only with the information accumulated from previously scanned elements. This makes VMamba more efficient when processing large inputs, and it is the foundation on which the rest of the design is built.
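To make the linear-cost idea concrete, here is a minimal sketch of a recurrent scan. It is a toy scalar recurrence, not the actual S6 operator: the names `linear_scan`, `decay`, and `gain` are illustrative assumptions, and the per-step coefficients merely stand in for the input-dependent parameters that S6 computes. The point is that each element touches only a running state, so the cost grows with the sequence length rather than with its square.

```python
def linear_scan(xs, decay, gain):
    """Toy scalar recurrence: h_t = decay_t * h_{t-1} + gain_t * x_t.

    Hypothetical stand-in for a selective scan; one pass over the
    sequence, so the work is proportional to len(xs).
    """
    state = 0.0
    outputs = []
    for x, a, b in zip(xs, decay, gain):
        # The running state summarizes everything scanned so far.
        state = a * state + b * x
        outputs.append(state)
    return outputs


if __name__ == "__main__":
    print(linear_scan([1.0, 1.0, 1.0], [0.5, 0.5, 0.5], [1.0, 1.0, 1.0]))
    # prints [1.0, 1.5, 1.75]
```

Attention, by contrast, compares every element with every other element, which is where the quadratic term comes from.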

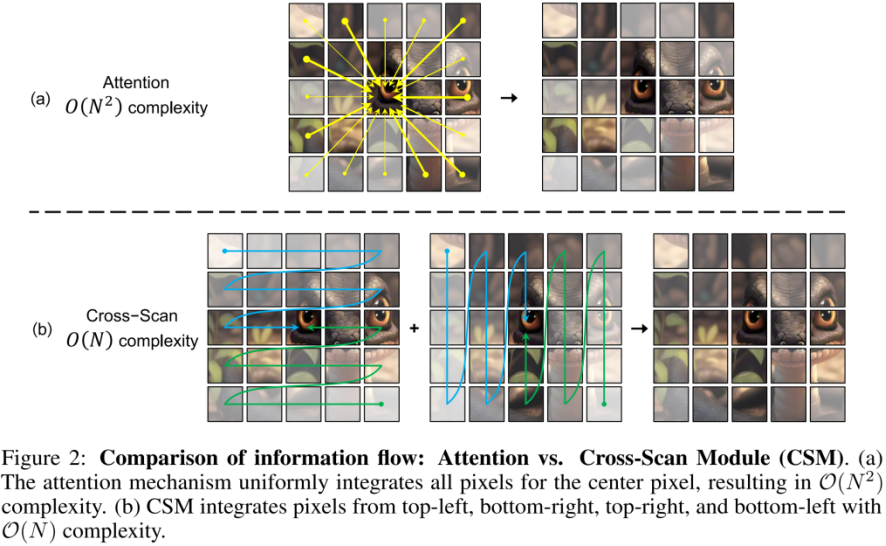

However, because visual signals such as images lack the natural ordering of text sequences, the scanning method in S6 cannot be applied directly to them. To address this, the researchers designed a Cross-Scan mechanism. The Cross-Scan Module (CSM) adopts a four-way scanning strategy, scanning from the four corners of the feature map simultaneously (see the figure above). This ensures that each element of the feature map integrates information from all other locations along different directions, forming a global receptive field while keeping the computational complexity linear.
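The global-receptive-field claim can be checked with a small sketch. The exact traversal paths are an assumption here (row-major, column-major, and their reverses); the article only states that scanning starts from the four corners. The helper names `scan_orders` and `visible_positions` are hypothetical. For every grid position, the code collects the positions that come before it in at least one scan and verifies that together they cover the whole map.

```python
def scan_orders(height, width):
    """Four traversal orders over grid positions.

    Assumed layout: row-major, column-major, and the reverse of each.
    """
    row_major = [(r, c) for r in range(height) for c in range(width)]
    col_major = [(r, c) for c in range(width) for r in range(height)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def visible_positions(target, height, width):
    """Positions that precede `target` in at least one of the four scans."""
    seen = set()
    for order in scan_orders(height, width):
        seen.update(order[:order.index(target)])
    return seen


H, W = 3, 4
every_position = {(r, c) for r in range(H) for c in range(W)}
all_covered = all(
    visible_positions(pos, H, W) == every_position - {pos}
    for pos in every_position
)
print(all_covered)  # prints True
```

Each scan on its own is causal and sees only part of the map; the coverage comes from combining directions.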

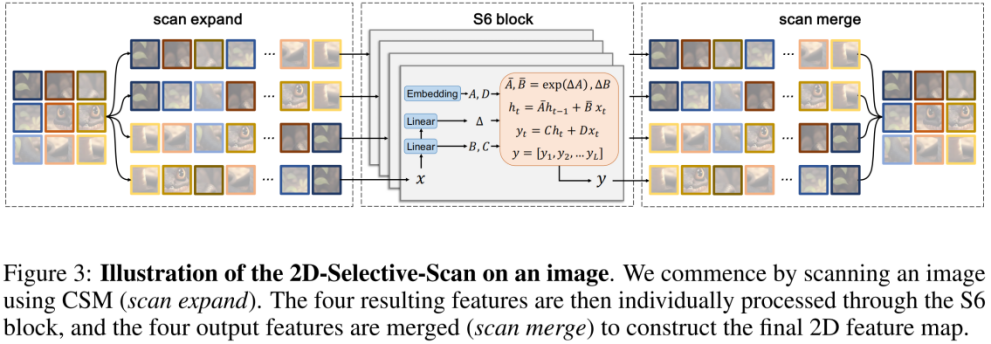

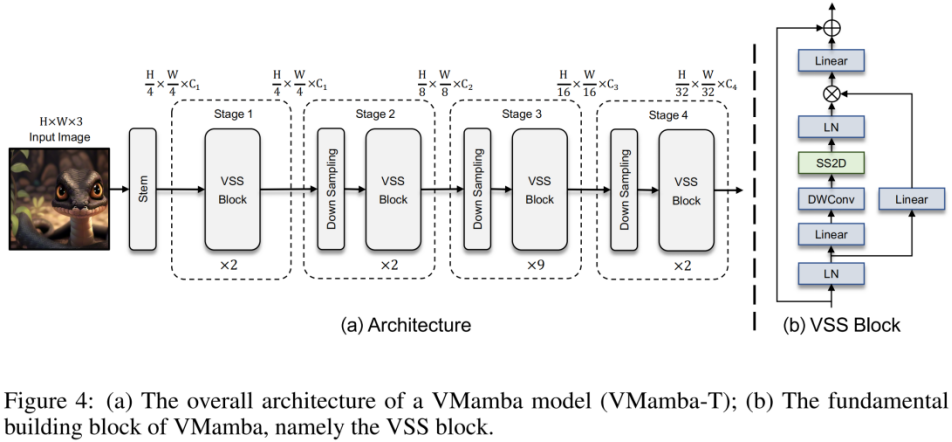

Based on CSM, the authors designed the 2D-selective-scan (SS2D) module. As shown in the figure above, SS2D consists of three steps:

- Scan expand flattens a 2D feature map into 1D vectors along four different directions (upper left, lower right, lower left, upper right).

- The S6 block feeds each of the four 1D vectors from the previous step independently through the S6 operation.

- Scan merge fuses the four resulting 1D vectors back into a single 2D output feature.
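The three steps above can be sketched end to end as follows. This is an illustration under stated assumptions, not the authors' implementation: the traversal orders (row-major, column-major, and their reverses), the fixed-decay `toy_scan` used in place of the real S6 operation, and merging by summation are all choices made for this example, and every function name is hypothetical.

```python
def scan_expand(feature):
    """Step 1: flatten a 2D map into four 1D sequences (assumed orders)."""
    h, w = len(feature), len(feature[0])
    row_major = [(r, c) for r in range(h) for c in range(w)]
    col_major = [(r, c) for c in range(w) for r in range(h)]
    orders = [row_major, col_major, row_major[::-1], col_major[::-1]]
    sequences = [[feature[r][c] for r, c in order] for order in orders]
    return orders, sequences


def toy_scan(sequence, decay=0.5):
    """Step 2 stand-in: a fixed-decay recurrence instead of the real S6."""
    state, out = 0.0, []
    for x in sequence:
        state = decay * state + x
        out.append(state)
    return out


def scan_merge(orders, sequences, h, w):
    """Step 3: put each value back at its grid position and sum the four."""
    merged = [[0.0] * w for _ in range(h)]
    for order, seq in zip(orders, sequences):
        for (r, c), value in zip(order, seq):
            merged[r][c] += value
    return merged


def ss2d_sketch(feature):
    orders, sequences = scan_expand(feature)
    scanned = [toy_scan(seq) for seq in sequences]
    return scan_merge(orders, scanned, len(feature), len(feature[0]))


if __name__ == "__main__":
    print(ss2d_sketch([[1.0, 0.0], [0.0, 0.0]]))
    # prints [[4.0, 0.75], [0.75, 0.25]]
```

In the usage example a single nonzero pixel in the top-left corner influences every output position, while the total work stays at four passes over the flattened map.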

The figure above shows the VMamba architecture proposed in the paper. The overall framework is similar to mainstream vision models; the main difference lies in the operator used in the basic module (the VSS block). The VSS block uses the 2D-selective-scan operation (SS2D) introduced above, which gives VMamba a global receptive field at linear complexity.

Experimental results

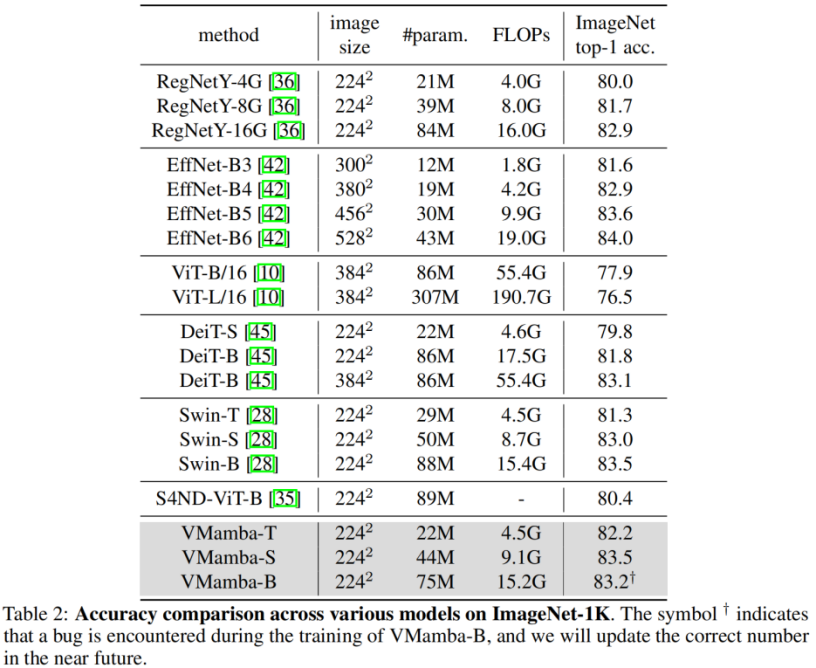

ImageNet classification

Comparing the experimental results, it is easy to see that, at similar parameter counts and FLOPs:

- VMamba-T achieves 82.2% accuracy, exceeding RegNetY-4G by 2.2%, DeiT-S by 2.4%, and Swin-T by 0.9%.

- VMamba-S achieves 83.5% accuracy, exceeding RegNetY-8G by 1.8% and Swin-S by 0.5%.

- VMamba-B achieves 83.2% accuracy (the authors note a bug here; the corrected result will be posted on the GitHub page as soon as possible), which is 0.3% higher than RegNetY.

These results are well above those of the Vision Mamba (Vim) model, validating VMamba's potential.

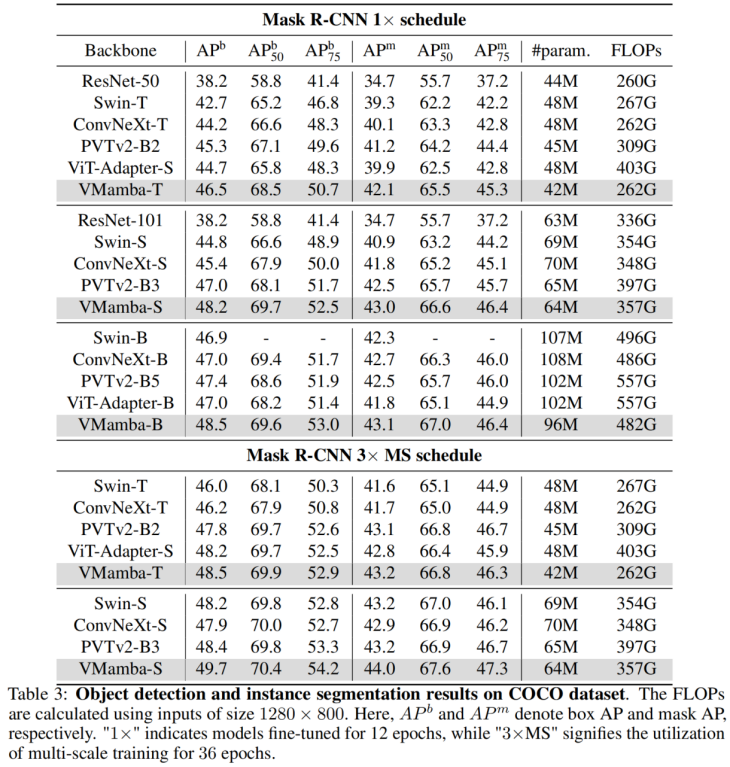

COCO object detection

On the COCO dataset, VMamba also maintains excellent performance. After fine-tuning for 12 epochs, VMamba-T/S/B reach 46.5%/48.2%/48.5% mAP respectively, exceeding Swin-T/S/B by 3.8%/3.6%/1.6% mAP and ConvNeXt-T/S/B by 2.3%/2.8%/1.5% mAP. These results show that VMamba carries over to downstream vision tasks, demonstrating its potential to replace mainstream vision foundation models.

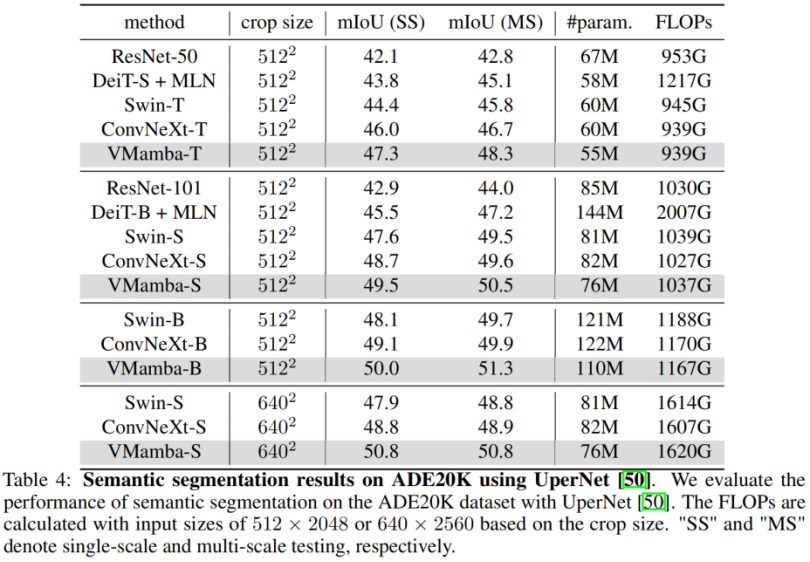

ADE20K Semantic Segmentation

On ADE20K, VMamba also performs very well. VMamba-T achieves 47.3% mIoU at 512 × 512 resolution, surpassing all competitors, including ResNet, DeiT, Swin, and ConvNeXt. This advantage holds for the VMamba-S/B models as well.

Analysis experiments

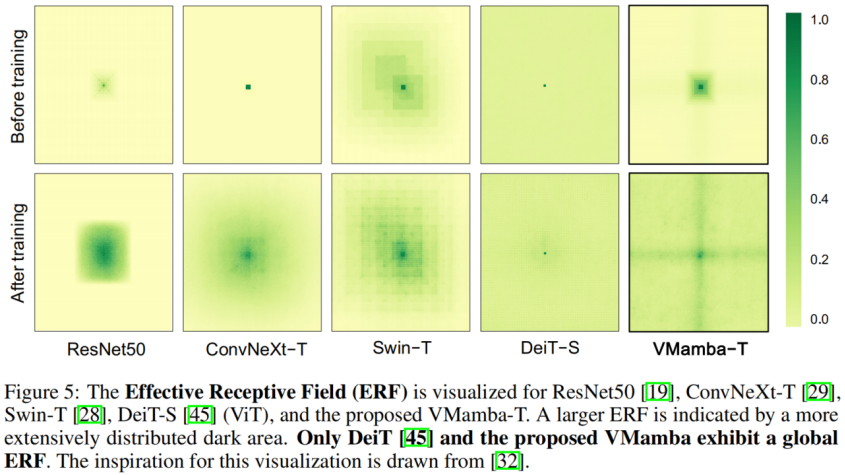

Effective Receptive Field

VMamba has a global effective receptive field; among the other models, only DeiT shares this property. Note, however, that DeiT pays for it with quadratic complexity, while VMamba has linear complexity.

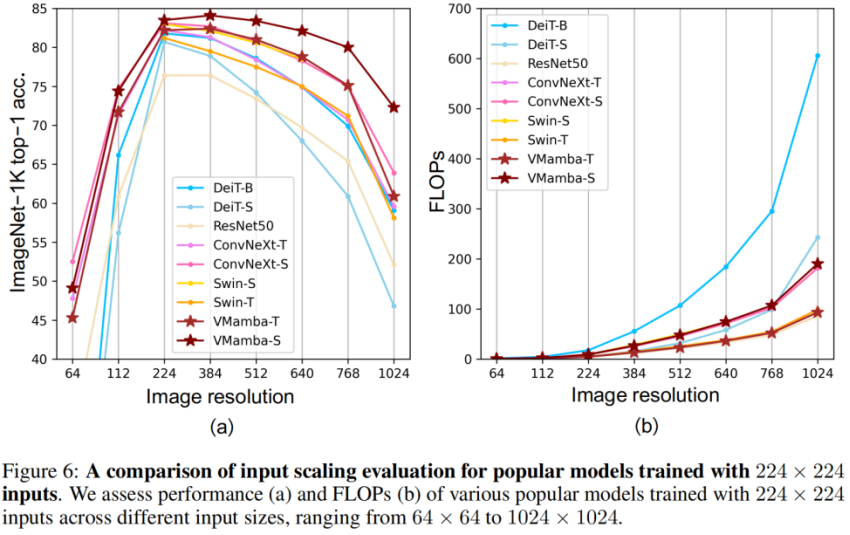

Input size scaling

- Figure (a) above shows that VMamba has the most stable performance (without fine-tuning) across different input image sizes. Interestingly, as the input size increases from 224 × 224 to 384 × 384, only VMamba shows a clear increase in performance (VMamba-S goes from 83.5% to 84.0%), highlighting its robustness to changes in input image size.

- Figure (b) above shows that the complexity of the VMamba series grows linearly as the input becomes larger, which is consistent with CNN models.
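As a rough back-of-the-envelope illustration of what linear versus quadratic growth means for the 224 × 224 to 384 × 384 step mentioned above, the sketch below counts image tokens and compares how the two cost models scale. The patch size of 16 is an arbitrary assumption for the example (the ratio is the same for any patch size that divides both resolutions), constants and channel widths are ignored, and the function names are hypothetical.

```python
def token_count(image_size, patch_size=16):
    """Number of tokens for a square image split into square patches."""
    return (image_size // patch_size) ** 2


def cost_growth(small, large, patch_size=16):
    """Relative cost increase when moving from `small` to `large` inputs."""
    ratio = token_count(large, patch_size) / token_count(small, patch_size)
    return {"linear": ratio, "quadratic": ratio ** 2}


if __name__ == "__main__":
    growth = cost_growth(224, 384)
    print(token_count(224), token_count(384))  # prints 196 576
    print(round(growth["linear"], 2), round(growth["quadratic"], 2))
```

Under these assumptions the token count grows by roughly 3x, so a linear-cost operator does about 3x the work while a quadratic one does nearly 9x.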

Finally, let us look forward to more Mamba-based vision models being proposed, alongside CNNs and ViTs, to provide a third option for basic vision models.
