Regularization method successfully applied to multi-layer perceptrons using dropout layers

A multi-layer perceptron (MLP) is a widely used deep learning model for tasks such as classification and regression. However, MLPs are prone to overfitting: they perform well on the training set but poorly on the test set. To address this, researchers have proposed a variety of regularization methods, one of the most common of which is dropout. By randomly discarding the outputs of some neurons during training, dropout reduces the effective capacity of the network and with it the risk of overfitting. The method is widely used in deep learning models and often improves generalization noticeably.

Dropout is a regularization technique for neural networks, described in detail by Srivastava et al. in 2014. During training, a dropout layer randomly selects a subset of neurons at each step and sets their outputs to 0, so the model cannot rely on any specific neuron. During testing, no neurons are dropped; in the original formulation, the outputs of all neurons are instead multiplied by the retention probability, so that their expected magnitude matches what the next layer saw during training. This forces the model to learn more robust, generalizable features and improves its generalization ability. Dropout has therefore become one of the commonly used regularization techniques in deep learning.
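The training and testing behavior described above can be sketched in a few lines of plain Python. This is only an illustrative sketch of the classic formulation, not any library's implementation; the function names `dropout_train` and `dropout_test`, the sample activations, and the seed are assumptions made for the example.

```python
import random

def dropout_train(activations, keep_prob, rng):
    """Training: keep each activation with probability keep_prob, else set it to 0."""
    return [a if rng.random() < keep_prob else 0.0 for a in activations]

def dropout_test(activations, keep_prob):
    """Testing: keep every neuron and scale its output by keep_prob."""
    return [a * keep_prob for a in activations]

rng = random.Random(0)            # fixed seed, chosen only for reproducibility
activations = [1.0, 2.0, 3.0, 4.0]
keep_prob = 0.5

train_out = dropout_train(activations, keep_prob, rng)
test_out = dropout_test(activations, keep_prob)

# Averaging many random training passes approaches the test-time output,
# which is why scaling by keep_prob at test time keeps magnitudes consistent.
n_trials = 20000
sums = [0.0] * len(activations)
for _ in range(n_trials):
    for i, value in enumerate(dropout_train(activations, keep_prob, rng)):
        sums[i] += value
mean_train = [s / n_trials for s in sums]

print("one training pass:", train_out)
print("test-time output: ", test_out)
print("mean over passes: ", [round(m, 2) for m in mean_train])
```

Each training pass zeroes a different random subset, while the test-time output is deterministic and matches the training passes on average.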

The principle of dropout is simple but effective. Because any neuron may be missing at a given training step, neurons cannot co-adapt, that is, compensate for one another's errors in ways that only work on the training data. Each neuron must instead learn features that are useful on their own, which reduces the risk of overfitting.

In practice, using dropout is very simple. When building a multi-layer perceptron, you can add a dropout layer after each hidden layer and set a retention probability. For example, to use dropout in an MLP with two hidden layers, we can build the model as follows:

1. Define the structure of the input layer, hidden layers and output layer.
2. Add a dropout layer after the first hidden layer and set the retention probability to p.
3. Add another dropout layer after the second hidden layer and set the same retention probability p.
4. Define the output layer and connect the last hidden layer to it.
5. Define the loss function and optimizer.
6. Train the model and make predictions.

In this way, during training each dropout layer keeps each neuron's output with probability p and sets it to 0 otherwise.

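    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Dropout

    model = Sequential()
    model.add(Dense(64, input_dim=20, activation='relu'))  # first hidden layer, 20 input features
    model.add(Dropout(0.5))                                # dropout after the first hidden layer
    model.add(Dense(64, activation='relu'))                # second hidden layer
    model.add(Dropout(0.5))                                # dropout after the second hidden layer
    model.add(Dense(10, activation='softmax'))             # output layer, 10 classes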

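In this example, we added a dropout layer after each hidden layer. Note that the argument to the Keras `Dropout` layer is the drop rate (the fraction of outputs set to 0), not the retention probability; with a value of 0.5 the two coincide. Each neuron's output therefore has a 50% probability of being zeroed at each training step. During testing, all neurons are retained.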

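Note that dropout should be active during training but not during testing, because at test time we want every neuron to contribute to the prediction, not just a random subset. Keras handles this automatically: its `Dropout` layer only drops outputs in training mode and passes its input through unchanged at inference.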
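To make the difference between training and testing concrete, here is a framework-free sketch of the forward pass of an MLP with the same layer sizes as the example (20 inputs, two hidden layers of 64 units, 10 outputs). It uses the "inverted" variant of dropout, in which surviving outputs are scaled by 1 / (1 - rate) during training so that nothing needs to be rescaled at test time; this is the scaling the Keras `Dropout` layer documents. The helper names (`dense`, `dropout`, `init_layer`, `forward`), the random weight initialization, and the seed are assumptions made for illustration, and no training is performed.

```python
import math
import random

def dense(x, weights, biases):
    """Fully connected layer: one output per row of weights."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]

def relu(x):
    return [max(0.0, v) for v in x]

def softmax(x):
    m = max(x)                                # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def dropout(x, rate, training, rng):
    """Inverted dropout: only active in training; survivors are scaled by 1/(1-rate)."""
    if not training:
        return list(x)                        # inference: pass every output through
    scale = 1.0 / (1.0 - rate)
    return [v * scale if rng.random() >= rate else 0.0 for v in x]

def init_layer(n_in, n_out, rng):
    """Hypothetical initializer: small uniform random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers, rate, training, rng):
    (w1, b1), (w2, b2), (w3, b3) = layers
    h1 = dropout(relu(dense(x, w1, b1)), rate, training, rng)
    h2 = dropout(relu(dense(h1, w2, b2)), rate, training, rng)
    return softmax(dense(h2, w3, b3))

rng = random.Random(42)                       # fixed seed, chosen only for reproducibility
layers = [init_layer(20, 64, rng), init_layer(64, 64, rng), init_layer(64, 10, rng)]
x = [rng.uniform(-1.0, 1.0) for _ in range(20)]

# Inference is deterministic: two passes give identical class probabilities.
probs_eval_a = forward(x, layers, rate=0.5, training=False, rng=rng)
probs_eval_b = forward(x, layers, rate=0.5, training=False, rng=rng)

# Training passes use fresh random masks, so the outputs differ between passes.
probs_train_a = forward(x, layers, rate=0.5, training=True, rng=rng)
probs_train_b = forward(x, layers, rate=0.5, training=True, rng=rng)

print("eval passes identical: ", probs_eval_a == probs_eval_b)
print("train passes identical:", probs_train_a == probs_train_b)
```

The `training` flag plays the role that the framework's training/inference mode plays in a real model: the same weights are used in both cases, and only the dropout layers change behavior.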

In summary, dropout is an effective regularization method that helps reduce the risk of overfitting. By randomly zeroing neuron outputs during training, it forces the model to learn more robust features and prevents co-adaptation between neurons. Using it in practice is simple: add a dropout layer after each hidden layer and specify the dropout probability.

The above is the detailed content of Regularization method successfully applied to multi-layer perceptrons using dropout layers. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

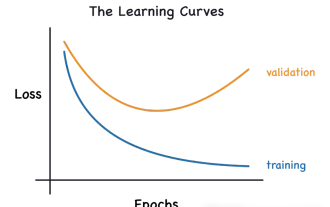

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Outlook on future trends of Golang technology in machine learning

May 08, 2024 am 10:15 AM

Outlook on future trends of Golang technology in machine learning

May 08, 2024 am 10:15 AM

The application potential of Go language in the field of machine learning is huge. Its advantages are: Concurrency: It supports parallel programming and is suitable for computationally intensive operations in machine learning tasks. Efficiency: The garbage collector and language features ensure that the code is efficient, even when processing large data sets. Ease of use: The syntax is concise, making it easy to learn and write machine learning applications.