关于删除MySQL Logs的一点记录

五一前,一个DBA同事反馈,在日常环境中删除一个大的slow log文件(假设文件大小10G以上吧),然后在MySQL中执行flush slow logs,会发现mysqld hang住。

今天尝试着重现了此问题,这里简要分析下原因。

重现步骤:

1. 构造slow log (将long_query_time设成了0);

2. 观察删rm slow log瞬间,tps/qps变化;

3. 观察执行flush slow logs瞬间,tps/qps变化;

4. 记录flush slow logs执行时, pstack打出的调用栈情况;

第一步,没啥好说的。

第二步,tps/qps没啥变化。

第三步,会发现tps/qps瞬间跌0,如下所示:

1 |

|

mysql命令行会发现,flush slow logs执行时间刚好为3s左右。

第四步,我们看下pstack的输出结果,只记录相关的:

1 |

|

会发现Thread 2在执行flush slow logs操作,其他的线程都在等待锁LOCK_log上边。

背后的原因其实很简单,在shell中执行rm slow log操作时,由于mysqld仍有文件句柄打开此文件,所以实际上此时文件并未删除。执行flush logs操作,其实际执行的是1)close;2)open 操作(logger.flush_slow_log -> mysql_slow_log.reopen_file),在close操作执行时,文件系统真正删除文件,此时该线程占用着LOCK_log锁。

删除时会执行刷脏(当然我构造这个场景很极端,基本所有slow log文件的内容都在文件系统缓存中),这个会很耗时间,比如我执行这个语句耗了3s。此时间段内,如果连接发来的语句需要记log(server层的log:slow log/binlog/general log共有LOCK_log这把锁)就会处于等待状态,那么系统对外的反应就是hang住了。

flush slow logs中调用执行的close所需时间和文件大小、以及文件系统缓存中该文件脏页比例都有关系,比如我在执行flush slow logs前使用sysctl vm.drop_caches=3清空

了文件系统缓存的话,同样大小的flush slow logs操作执行时间是0.42s,相应的阻塞时间也会减少不少。

可以考虑在slow logs的文件句柄上执行posix_fadvise调用,促使不会缓存很大的log文件内容(slow log也没啥需要缓存的),这有篇霸爷的文章,可以参考下 posix_fadvise清除缓存的误解和改进措施 。

另外,peter在07年就讨论过这个问题, Be careful rotating MySQL logs 其给出的建议是先mv file,然后flush logs,再执行删除文件的操作,让真正的删除行为由自己而不是mysqld完成。比较遗憾的是,五年过去了,LOCK_log这把锁的问题还没有完整的解决掉。

PS:

文章结尾记一点备忘,通过close/rm操作删除一个10G大小的文件,在执行sysctl vm.drop_caches=3清空缓存后,此操作的耗时仍在百毫秒量级(我的机器上是200ms+),其背后做了什么事情还需要找内核组的同事了解下。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Where can I view the records of things I have purchased on Pinduoduo? How to view the records of purchased products?

Mar 12, 2024 pm 07:20 PM

Pinduoduo software provides a lot of good products, you can buy them anytime and anywhere, and the quality of each product is strictly controlled, every product is genuine, and there are many preferential shopping discounts, allowing everyone to shop online Simply can not stop. Enter your mobile phone number to log in online, add multiple delivery addresses and contact information online, and check the latest logistics trends at any time. Product sections of different categories are open, search and swipe up and down to purchase and place orders, and experience convenience without leaving home. With the online shopping service, you can also view all purchase records, including the goods you have purchased, and receive dozens of shopping red envelopes and coupons for free. Now the editor has provided Pinduoduo users with a detailed online way to view purchased product records. method. 1. Open your phone and click on the Pinduoduo icon.

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

Start from scratch and guide you step by step to install Flask and quickly establish a personal blog

Feb 19, 2024 pm 04:01 PM

Start from scratch and guide you step by step to install Flask and quickly establish a personal blog

Feb 19, 2024 pm 04:01 PM

Starting from scratch, I will teach you step by step how to install Flask and quickly build a personal blog. As a person who likes writing, it is very important to have a personal blog. As a lightweight Python Web framework, Flask can help us quickly build a simple and fully functional personal blog. In this article, I will start from scratch and teach you step by step how to install Flask and quickly build a personal blog. Step 1: Install Python and pip Before starting, we need to install Python and pi first

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

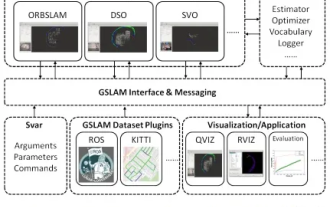

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.