Definition, classification and algorithm framework of reinforcement learning

Reinforcement learning (RL) is a machine learning paradigm that sits between supervised learning and unsupervised learning. It solves problems by trial and error. During training, an RL agent makes a sequence of decisions and is rewarded or penalized according to the actions it performs; its goal is to maximize the total reward. Because it learns autonomously and adapts, reinforcement learning can make good decisions in dynamic environments. Compared with traditional supervised learning, it is better suited to problems without explicit labels and can perform well on long-term, sequential decision-making problems.

The core idea of reinforcement learning is to reinforce behavior: the agent is rewarded according to how much each action it performs contributes to the overall goal, so actions with a positive impact become more likely.
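To make the trial-and-error idea concrete, here is a minimal sketch using a multi-armed bandit, a standard toy problem that the article itself does not discuss. The three arms and their mean rewards are hypothetical; the agent does not know them and learns which arm pays best only from the rewards it receives, using an epsilon-greedy rule (explore at random with probability `epsilon`, otherwise exploit the current best estimate).

```python
import random


def epsilon_greedy_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Learn which arm pays best purely by trial and error.

    true_means: hypothetical expected reward of each arm (hidden from the agent).
    Returns the agent's estimated value of each arm.
    """
    rng = random.Random(seed)
    n_arms = len(true_means)
    estimates = [0.0] * n_arms  # agent's running estimate of each arm's reward
    counts = [0] * n_arms       # how many times each arm has been pulled
    for _ in range(steps):
        # Explore with probability epsilon, otherwise exploit the best estimate so far.
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])
        # Reward (or punishment): a noisy sample around the arm's hidden mean.
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates


if __name__ == "__main__":
    est = epsilon_greedy_bandit([0.2, 0.5, 1.5])
    best = max(range(len(est)), key=lambda a: est[a])
    print("estimates:", [round(v, 2) for v in est], "best arm:", best)
```

The agent starts with no knowledge at all; occasional exploration is what lets it discover that the third arm is worth pulling, after which exploitation concentrates its pulls there.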

There are two main types of reinforcement learning algorithms: model-based and model-free.

Model-based algorithm

Model-based algorithms use the transition and reward functions to estimate the optimal policy. In model-based reinforcement learning, the agent has access to a model of the environment: which actions lead from one state to another, the associated transition probabilities, and the corresponding rewards. Such a model lets the agent plan ahead by simulating the consequences of its actions before taking them.
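As a sketch of planning with a known model, the snippet below runs value iteration on a hypothetical three-state environment. `MODEL` plays the role of the transition and reward functions described above: for each state and action it lists the possible next states, their probabilities, and the rewards. Value iteration is one common model-based planning method, chosen here for illustration; the discount factor `gamma=0.9` is an assumed value.

```python
# Hypothetical model of a tiny environment, known to the agent in advance:
# MODEL[state][action] = list of (probability, next_state, reward) outcomes.
MODEL = {
    "A": {"stay": [(1.0, "A", 0.0)],
          "go": [(0.8, "B", 0.0), (0.2, "A", 0.0)]},
    "B": {"stay": [(1.0, "B", 0.2)],
          "go": [(0.9, "C", 5.0), (0.1, "B", 0.0)]},
    "C": {"stay": [(1.0, "C", 0.0)]},  # absorbing state with no further reward
}


def expected_return(outcomes, values, gamma):
    """One-step lookahead with the model: sum of p * (r + gamma * V(s'))."""
    return sum(p * (r + gamma * values[s_next]) for p, s_next, r in outcomes)


def value_iteration(model, gamma=0.9, tol=1e-10):
    """Plan with the known model: repeat backups until the values stop changing."""
    values = {s: 0.0 for s in model}
    while True:
        delta = 0.0
        for s, actions in model.items():
            best = max(expected_return(o, values, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # Extract the greedy policy from the converged values.
    policy = {
        s: max(actions, key=lambda a: expected_return(actions[a], values, gamma))
        for s, actions in model.items()
    }
    return values, policy


if __name__ == "__main__":
    values, policy = value_iteration(MODEL)
    print(policy)  # {'A': 'go', 'B': 'go', 'C': 'stay'}
    print({s: round(v, 3) for s, v in values.items()})
```

Because the agent can query `MODEL` directly, it finds the policy without acting in the environment at all, which is what separates the model-based setting from the model-free one.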

Model-free algorithm

Model-free algorithms find an optimal policy when knowledge of the environment's dynamics is very limited. There are no transition or reward functions available for judging the best policy; instead, the optimal policy is estimated directly from experience, that is, only from the interaction between the agent and the environment, without any explicit knowledge of the reward function.
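For contrast, a model-free method estimates values purely from sampled experience. The sketch below uses first-visit Monte Carlo evaluation (a swapped-in example technique, not one named by the article) on a hypothetical five-state random walk: the learner `mc_first_visit` never reads transition probabilities or a reward function, only the (state, reward) sequences returned by `random_walk_episode`, which stands in for the black-box environment.

```python
import random


def random_walk_episode(rng, n_states=5):
    """Hypothetical black-box environment; returns [(state, reward), ...].

    States 1..n_states, start in the middle, move left or right at random.
    Stepping off the right end yields reward 1, off the left end reward 0.
    """
    state = (n_states + 1) // 2
    episode = []
    while 1 <= state <= n_states:
        next_state = state + rng.choice((-1, 1))
        reward = 1.0 if next_state > n_states else 0.0
        episode.append((state, reward))
        state = next_state
    return episode


def mc_first_visit(n_episodes=5000, gamma=1.0, seed=0):
    """Estimate V(s) by averaging the return observed after each state's first visit."""
    rng = random.Random(seed)
    returns_sum, visits = {}, {}
    for _ in range(n_episodes):
        episode = random_walk_episode(rng)
        g = 0.0
        first_visit_return = {}
        # Walk backwards accumulating the return; the last write per state
        # is the return from that state's earliest (first) visit.
        for state, reward in reversed(episode):
            g = reward + gamma * g
            first_visit_return[state] = g
        for state, ret in first_visit_return.items():
            returns_sum[state] = returns_sum.get(state, 0.0) + ret
            visits[state] = visits.get(state, 0) + 1
    return {s: returns_sum[s] / visits[s] for s in sorted(visits)}


if __name__ == "__main__":
    print({s: round(v, 2) for s, v in mc_first_visit().items()})
```

With `gamma=1.0`, each state's true value is the probability of eventually exiting on the right, which is s/6 for states 1 to 5, so the estimates should approach 1/6, 2/6, ..., 5/6 as the number of episodes grows.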

Model-free reinforcement learning is well suited to scenarios where information about the environment is incomplete, such as self-driving cars, where model-free algorithms can have an advantage over techniques that depend on an accurate model of the environment.

The most commonly used algorithmic frameworks in reinforcement learning

Markov Decision Process (MDP)

A Markov decision process (MDP) is not an algorithm in itself but a mathematical framework for formalizing sequential decision-making. This formalization underlies the problems that reinforcement learning solves. Its central component is a decision maker, called the agent, which interacts with its environment.

At each time step, the agent receives some representation of the state of the environment. Given this representation, the agent chooses an action to perform. The environment then transitions to a new state, and the agent receives a reward for its previous action. An important point about the MDP formulation is that the agent is not concerned only with immediate rewards; it aims to maximize the total reward over the entire trajectory.
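The interaction loop described above can be sketched as follows. `Corridor` is a hypothetical MDP (move left or right along a line, pay a small cost per step, collect a reward at the goal), and `run_episode` accumulates the discounted return G = r_0 + γ r_1 + γ² r_2 + ..., which is the total reward over the trajectory that the agent tries to maximize. The discount factor `gamma=0.9` is an assumed value.

```python
from dataclasses import dataclass


@dataclass
class Corridor:
    """Hypothetical MDP: positions 0..length; reaching `length` ends the episode."""
    length: int = 4
    step_cost: float = -1.0
    goal_reward: float = 10.0

    def reset(self) -> int:
        return 0

    def step(self, state: int, action: str):
        """Environment transition: returns (next_state, reward, done)."""
        move = 1 if action == "right" else -1
        next_state = min(max(state + move, 0), self.length)
        done = next_state == self.length
        reward = self.goal_reward if done else self.step_cost
        return next_state, reward, done


def run_episode(env, policy, gamma=0.9, max_steps=100):
    """Agent-environment loop; returns the trajectory and its discounted return."""
    state = env.reset()
    trajectory, discounted_return = [], 0.0
    for t in range(max_steps):
        action = policy(state)                               # agent observes the state and acts
        next_state, reward, done = env.step(state, action)  # environment responds
        trajectory.append((state, action, reward))
        discounted_return += (gamma ** t) * reward           # later rewards weighted by gamma**t
        state = next_state
        if done:
            break
    return trajectory, discounted_return


if __name__ == "__main__":
    traj, g = run_episode(Corridor(), policy=lambda s: "right")
    print(traj)
    print(round(g, 2))  # 4.58
```

With an always-right policy the return is -1 - 0.9 - 0.81 + 0.729 × 10 ≈ 4.58: the step costs are outweighed by the discounted goal reward, even though every immediate reward before the goal is negative.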

Bellman equation

The Bellman equation is not an algorithm itself but the recursive relationship on which many reinforcement learning algorithms are built; the form described here is particularly suitable for deterministic environments. It states that the value of a given state is determined by the best action the agent can take in that state. The agent's purpose is to choose the actions that maximize this value.

Therefore, the value of a state is the reward of the best action in that state plus the value of the state it leads to, multiplied by a discount factor that reduces the weight of rewards the further they lie in the future. Every time the agent takes an action, it moves on to the next state.
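In symbols, writing T(s, a) for the (deterministic) next state and R(s, a) for the reward of taking action a in state s (our notation, not the article's), the equation reads:

```latex
V^*(s) = \max_{a} \left[ R(s, a) + \gamma \, V^*\big(T(s, a)\big) \right], \qquad 0 \le \gamma < 1
```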

Instead of summing rewards over many time steps, this equation simplifies the calculation of the value function: it decomposes a complex problem into smaller recursive subproblems, from which the optimal solution can be found.
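The decomposition into recursive subproblems can be sketched directly. On a small, acyclic, deterministic environment (the `TRANSITIONS` table is hypothetical), `value` applies the Bellman equation recursively and memoizes each state's result with `functools.lru_cache`, so every subproblem is solved only once. The discount factor `GAMMA = 0.9` is an assumed value.

```python
from functools import lru_cache

GAMMA = 0.9  # assumed discount factor

# Hypothetical deterministic environment: TRANSITIONS[state][action] = (next_state, reward).
# The graph is acyclic, so the recursion below always terminates.
TRANSITIONS = {
    "start": {"left": ("L", 1.0), "right": ("R", 0.0)},
    "L": {"forward": ("end", 1.0)},
    "R": {"forward": ("end", 5.0)},
    "end": {},  # terminal state: no actions, value 0
}


@lru_cache(maxsize=None)
def value(state: str) -> float:
    """V(s) = max over a of [R(s, a) + GAMMA * V(T(s, a))], solved recursively."""
    actions = TRANSITIONS[state]
    if not actions:
        return 0.0
    return max(reward + GAMMA * value(next_state)
               for next_state, reward in actions.values())


def best_action(state: str) -> str:
    """Pick the action whose reward plus discounted next-state value is largest."""
    actions = TRANSITIONS[state]
    return max(actions, key=lambda a: actions[a][1] + GAMMA * value(actions[a][0]))


if __name__ == "__main__":
    print(value("start"), best_action("start"))
```

Moving `left` from `start` earns the larger immediate reward (1 versus 0), yet `right` is optimal because it leads to the state worth 5: V(start) = 0 + 0.9 × 5 = 4.5, versus 1 + 0.9 × 1 = 1.9 for `left`.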

Q-Learning

Q-learning learns a value function over state-action pairs, called the Q-function (Q stands for "quality"). Q(s, a) is the expected future reward the agent can obtain by taking action a in state s and then following the best possible policy. Once the agent has learned this Q-function, in each state it looks for the action with the highest Q-value.
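A minimal tabular Q-learning sketch follows. The environment is a hypothetical five-state chain (move left or right; reward 1 on reaching the right end), and `alpha`, `gamma` and `epsilon` are assumed hyperparameters. Each step applies the standard Q-learning update Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') - Q(s, a)] using only observed transitions, and `greedy_policy` then picks the highest-Q action in each state.

```python
import random

N_STATES = 5       # hypothetical chain: states 0..4, state 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment: reward 1 on reaching the goal, 0 otherwise."""
    if action == 0:
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # Q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon (or on ties), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """In each non-terminal state, pick the action with the highest Q-value."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    q = q_learning()
    print("greedy policy (1 = right):", greedy_policy(q))
```

Q-learning is off-policy: the update bootstraps from the best next action even when exploration chose a different one, which is why the learned greedy policy can be optimal while the behavior during training still explores.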

Given the optimal Q-function, the optimal policy follows directly: in each state, choose the action that maximizes the Q-value.
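Formally, in the deterministic setting used for the Bellman equation (with T(s, a) the next state and R(s, a) the reward; this notation is ours), the optimal Q-function satisfies its own Bellman equation, and both the optimal state value and the optimal policy are read off from it:

```latex
\begin{aligned}
Q^*(s, a) &= R(s, a) + \gamma \max_{a'} Q^*\big(T(s, a), a'\big) \\
V^*(s) &= \max_{a} Q^*(s, a) \\
\pi^*(s) &= \arg\max_{a} Q^*(s, a)
\end{aligned}
```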

The above is the detailed content of Definition, classification and algorithm framework of reinforcement learning. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

Image annotation is the process of associating labels or descriptive information with images to give deeper meaning and explanation to the image content. This process is critical to machine learning, which helps train vision models to more accurately identify individual elements in images. By adding annotations to images, the computer can understand the semantics and context behind the images, thereby improving the ability to understand and analyze the image content. Image annotation has a wide range of applications, covering many fields, such as computer vision, natural language processing, and graph vision models. It has a wide range of applications, such as assisting vehicles in identifying obstacles on the road, and helping in the detection and diagnosis of diseases through medical image recognition. . This article mainly recommends some better open source and free image annotation tools. 1.Makesens

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

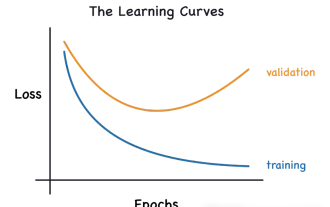

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

In layman’s terms, a machine learning model is a mathematical function that maps input data to a predicted output. More specifically, a machine learning model is a mathematical function that adjusts model parameters by learning from training data to minimize the error between the predicted output and the true label. There are many models in machine learning, such as logistic regression models, decision tree models, support vector machine models, etc. Each model has its applicable data types and problem types. At the same time, there are many commonalities between different models, or there is a hidden path for model evolution. Taking the connectionist perceptron as an example, by increasing the number of hidden layers of the perceptron, we can transform it into a deep neural network. If a kernel function is added to the perceptron, it can be converted into an SVM. this one

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Explainable AI: Explaining complex AI/ML models

Jun 03, 2024 pm 10:08 PM

Translator | Reviewed by Li Rui | Chonglou Artificial intelligence (AI) and machine learning (ML) models are becoming increasingly complex today, and the output produced by these models is a black box – unable to be explained to stakeholders. Explainable AI (XAI) aims to solve this problem by enabling stakeholders to understand how these models work, ensuring they understand how these models actually make decisions, and ensuring transparency in AI systems, Trust and accountability to address this issue. This article explores various explainable artificial intelligence (XAI) techniques to illustrate their underlying principles. Several reasons why explainable AI is crucial Trust and transparency: For AI systems to be widely accepted and trusted, users need to understand how decisions are made