The definition and use of batches and epochs in neural networks

Neural networks are powerful machine learning models that can learn from large amounts of data. On large data sets, however, training can become very slow, lasting hours or days. To keep this manageable, training is usually organized into batches and epochs. A batch is the group of samples fed through the network at one time, and the batch size is the number of samples in that group. Processing one batch at a time limits the computation and memory needed for each weight update, which speeds up training. An epoch is one complete pass of the entire data set through the network, and the number of epochs is how many such passes are made during training. Repeated passes usually improve the accuracy of the model, up to a point. By adjusting the batch size and the number of epochs, you can balance training speed against model performance.
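The relationship between data set size, batch size, and number of epochs comes down to simple arithmetic. The sketch below is only an illustration: the function names and the figures (1000 samples, batch size 32, 10 epochs) are assumptions chosen for the example, not values from any particular framework.

```python
import math


def updates_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batches (and weight updates) in one epoch.

    The last batch may be smaller than batch_size, hence the ceiling.
    """
    return math.ceil(num_samples / batch_size)


def total_updates(num_samples: int, batch_size: int, num_epochs: int) -> int:
    """Total number of weight updates over the whole training run."""
    return updates_per_epoch(num_samples, batch_size) * num_epochs


print(updates_per_epoch(1000, 32))   # 32 (31 full batches plus one of 8 samples)
print(total_updates(1000, 32, 10))   # 320
```

Halving the batch size roughly doubles the number of updates per epoch, which is one reason the two settings are usually tuned together.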

A batch is a small subset of the training data, usually drawn at random, that the network processes in one iteration. The batch size can be adjusted as needed and is typically tens to hundreds of samples. For each batch, the network runs forward propagation and backpropagation on those samples and then updates its weights. Using batches speeds up training because each gradient is computed from a small subset rather than from the entire data set, so the weights are updated far more often for the same amount of computation. With each batch the network adjusts its weights a little and gradually approaches a good solution. Mini-batch training also keeps memory use bounded, because only one batch has to be processed at a time.
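Drawing random batches from the training data can be sketched in a few lines. This is a minimal illustration using only the standard library. The helper name `iterate_minibatches` is hypothetical, and real frameworks provide their own data loaders for this step.

```python
import random


def iterate_minibatches(samples, batch_size, rng):
    """Yield shuffled mini-batches that together cover every sample once."""
    indices = list(range(len(samples)))
    rng.shuffle(indices)  # random order, so each batch is a random subset
    for start in range(0, len(indices), batch_size):
        yield [samples[i] for i in indices[start:start + batch_size]]


data = list(range(10))  # stand-in for 10 training samples
batches = list(iterate_minibatches(data, batch_size=4, rng=random.Random(0)))

# 10 samples with batch size 4 give batches of 4, 4 and 2 samples.
print([len(batch) for batch in batches])
```

Every sample appears in exactly one batch, so iterating over all batches once corresponds to one pass over the data.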

An epoch is one complete pass over the entire training data set. At the beginning of each epoch, the training data set is divided into batches, and forward propagation and backpropagation are performed on each batch to calculate the loss and update the weights. The batch size can be adjusted to fit memory and computing resource constraints. Smaller batches give more weight updates per epoch, but they also add per-update overhead. By the end of an epoch, the network has seen every sample once and has performed one weight update per batch. The resulting weights can be used for inference or as the starting point for the next epoch. Over multiple epochs, the network gradually learns the patterns and features in the data set and its performance improves. In practice, several epochs are usually needed to achieve good results. The number of epochs depends on the size and complexity of the data set, as well as on the time and resources available for training.
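The nesting of epochs and batches is easiest to see in a training loop. The sketch below fits a one-variable linear model with mini-batch gradient descent in plain Python. The model, the synthetic data (y = 2x + 1), and the hyperparameters are assumptions chosen for illustration, and a real network would use a deep learning framework instead.

```python
import random


def train(xs, ys, batch_size=8, num_epochs=50, lr=0.1, seed=0):
    """Fit y = w * x + b with mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    epoch_losses = []
    indices = list(range(len(xs)))
    for _ in range(num_epochs):              # one epoch = one pass over all data
        rng.shuffle(indices)                 # new batch composition every epoch
        squared_error = 0.0
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Forward pass: prediction error for each sample in the batch.
            errors = [(w * xs[i] + b) - ys[i] for i in batch]
            squared_error += sum(e * e for e in errors)
            # Backward pass: gradient of the batch's mean squared error.
            grad_w = 2 * sum(e * xs[i] for e, i in zip(errors, batch)) / len(batch)
            grad_b = 2 * sum(errors) / len(batch)
            # One weight update per batch.
            w -= lr * grad_w
            b -= lr * grad_b
        epoch_losses.append(squared_error / len(xs))
    return w, b, epoch_losses


xs = [i / 32 - 1 for i in range(64)]         # 64 inputs in [-1, 1)
ys = [2 * x + 1 for x in xs]                 # targets from y = 2x + 1
w, b, losses = train(xs, ys)
print(round(w, 3), round(b, 3), losses[0] > losses[-1])
```

With 64 samples and a batch size of 8, each epoch performs 8 weight updates, and the loss recorded per epoch falls as the passes accumulate.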

Batches and epochs affect training in different ways. A batch is the set of samples used for a single weight update, while an epoch is one forward and backward pass of the entire training data set through the network. Using batches helps the network train faster, because each weight update is computed from fewer samples and is therefore cheaper. Smaller batch sizes also reduce memory usage, which matters most when the training data set is large. Training for multiple epochs ensures that the network is trained on the entire data set repeatedly, because the weights have to be adjusted over several passes to improve the accuracy and generalization ability of the model. Each epoch performs a forward pass and a backward pass on all samples in the data set, gradually reducing the loss.

When choosing a batch size, you need to balance training speed against noise. Smaller batch sizes make each update cheaper and reduce memory usage, but they increase the noise in training. A small batch may not be representative of the whole data set, so the weight updates carry a degree of randomness. Larger batch sizes reduce this noise and give more accurate gradient estimates, but they may be limited by memory capacity and each gradient calculation and weight update takes longer.

The batch size should therefore be chosen by weighing training speed, memory usage, and noise together, and adjusted for the specific data set and hardware.
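The link between batch size and noise can be checked numerically. The sketch below computes per-sample gradients for a one-parameter linear model on synthetic data, then measures how much the mini-batch gradient estimate varies across randomly drawn batches. The data, the model, and the helper name `gradient_noise` are all assumptions made for illustration.

```python
import random
import statistics


def gradient_noise(per_sample_grads, batch_size, trials, rng):
    """Standard deviation of the mini-batch gradient estimate over random batches."""
    estimates = []
    for _ in range(trials):
        batch = rng.sample(per_sample_grads, batch_size)  # without replacement
        estimates.append(sum(batch) / batch_size)
    return statistics.stdev(estimates)


rng = random.Random(0)
xs = [rng.gauss(0, 1) for _ in range(1000)]
ys = [2 * x + rng.gauss(0, 0.5) for x in xs]

w = 0.0  # current (untrained) weight of the model y = w * x
# Per-sample gradient of the squared error (w * x - y) ** 2 with respect to w.
grads = [2 * (w * x - y) * x for x, y in zip(xs, ys)]

noise = {bs: gradient_noise(grads, bs, trials=500, rng=rng) for bs in (4, 16, 64)}
for bs, spread in noise.items():
    print(f"batch size {bs:3d}: gradient std {spread:.3f}")
```

The spread shrinks as the batch grows, roughly in proportion to one over the square root of the batch size, which is the noise reduction described above.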

Training for enough epochs ensures that the network sees the entire data set repeatedly, which helps avoid underfitting. In each epoch, the network learns from all the samples in the data set and optimizes its weights and biases through backpropagation on each batch, improving its performance. With too few epochs, the network may not have learned the patterns in the data. With too many, it may overfit the training samples, which reduces its generalization ability on new data. Choosing an appropriate number of epochs is therefore crucial to training a neural network effectively.
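One common way to pick the number of epochs is to track the loss on held-out validation data after each epoch and stop once it no longer improves. The sketch below is a simplified illustration of that idea: the function name `best_epoch`, the `patience` parameter, and the loss values are made up for the example.

```python
def best_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch that is `patience` epochs past the
    best loss seen so far. Epochs are counted from 0.
    """
    best_idx = 0
    for epoch, loss in enumerate(val_losses):
        if loss < val_losses[best_idx]:
            best_idx = epoch                  # new best validation loss
        elif epoch - best_idx >= patience:
            return epoch, best_idx            # no improvement for too long
    return len(val_losses) - 1, best_idx


# Hypothetical validation losses: they improve, then rise as generalization degrades.
val_losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55]
stop, best = best_epoch(val_losses)
print(stop, best)  # 5 3
```

Here the weights from epoch 3 would be kept, since later epochs only made the validation loss worse.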

In addition to batch size and the number of epochs, other training techniques can also be used, such as learning rate adjustment, regularization, and data augmentation. These techniques can improve the convergence speed of training and help neural networks generalize better to new data.



Explore the concepts, differences, advantages and disadvantages of RNN, LSTM and GRU

Jan 22, 2024 pm 07:51 PM

Explore the concepts, differences, advantages and disadvantages of RNN, LSTM and GRU

Jan 22, 2024 pm 07:51 PM

In time series data, there are dependencies between observations, so they are not independent of each other. However, traditional neural networks treat each observation as independent, which limits the model's ability to model time series data. To solve this problem, Recurrent Neural Network (RNN) was introduced, which introduced the concept of memory to capture the dynamic characteristics of time series data by establishing dependencies between data points in the network. Through recurrent connections, RNN can pass previous information into the current observation to better predict future values. This makes RNN a powerful tool for tasks involving time series data. But how does RNN achieve this kind of memory? RNN realizes memory through the feedback loop in the neural network. This is the difference between RNN and traditional neural network.

A case study of using bidirectional LSTM model for text classification

Jan 24, 2024 am 10:36 AM

A case study of using bidirectional LSTM model for text classification

Jan 24, 2024 am 10:36 AM

The bidirectional LSTM model is a neural network used for text classification. Below is a simple example demonstrating how to use bidirectional LSTM for text classification tasks. First, we need to import the required libraries and modules: importosimportnumpyasnpfromkeras.preprocessing.textimportTokenizerfromkeras.preprocessing.sequenceimportpad_sequencesfromkeras.modelsimportSequentialfromkeras.layersimportDense,Em

Calculating floating point operands (FLOPS) for neural networks

Jan 22, 2024 pm 07:21 PM

Calculating floating point operands (FLOPS) for neural networks

Jan 22, 2024 pm 07:21 PM

FLOPS is one of the standards for computer performance evaluation, used to measure the number of floating point operations per second. In neural networks, FLOPS is often used to evaluate the computational complexity of the model and the utilization of computing resources. It is an important indicator used to measure the computing power and efficiency of a computer. A neural network is a complex model composed of multiple layers of neurons used for tasks such as data classification, regression, and clustering. Training and inference of neural networks requires a large number of matrix multiplications, convolutions and other calculation operations, so the computational complexity is very high. FLOPS (FloatingPointOperationsperSecond) can be used to measure the computational complexity of neural networks to evaluate the computational resource usage efficiency of the model. FLOP

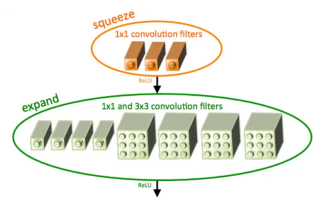

Introduction to SqueezeNet and its characteristics

Jan 22, 2024 pm 07:15 PM

Introduction to SqueezeNet and its characteristics

Jan 22, 2024 pm 07:15 PM

SqueezeNet is a small and precise algorithm that strikes a good balance between high accuracy and low complexity, making it ideal for mobile and embedded systems with limited resources. In 2016, researchers from DeepScale, University of California, Berkeley, and Stanford University proposed SqueezeNet, a compact and efficient convolutional neural network (CNN). In recent years, researchers have made several improvements to SqueezeNet, including SqueezeNetv1.1 and SqueezeNetv2.0. Improvements in both versions not only increase accuracy but also reduce computational costs. Accuracy of SqueezeNetv1.1 on ImageNet dataset

Definition and structural analysis of fuzzy neural network

Jan 22, 2024 pm 09:09 PM

Definition and structural analysis of fuzzy neural network

Jan 22, 2024 pm 09:09 PM

Fuzzy neural network is a hybrid model that combines fuzzy logic and neural networks to solve fuzzy or uncertain problems that are difficult to handle with traditional neural networks. Its design is inspired by the fuzziness and uncertainty in human cognition, so it is widely used in control systems, pattern recognition, data mining and other fields. The basic architecture of fuzzy neural network consists of fuzzy subsystem and neural subsystem. The fuzzy subsystem uses fuzzy logic to process input data and convert it into fuzzy sets to express the fuzziness and uncertainty of the input data. The neural subsystem uses neural networks to process fuzzy sets for tasks such as classification, regression or clustering. The interaction between the fuzzy subsystem and the neural subsystem makes the fuzzy neural network have more powerful processing capabilities and can

Image denoising using convolutional neural networks

Jan 23, 2024 pm 11:48 PM

Image denoising using convolutional neural networks

Jan 23, 2024 pm 11:48 PM

Convolutional neural networks perform well in image denoising tasks. It utilizes the learned filters to filter the noise and thereby restore the original image. This article introduces in detail the image denoising method based on convolutional neural network. 1. Overview of Convolutional Neural Network Convolutional neural network is a deep learning algorithm that uses a combination of multiple convolutional layers, pooling layers and fully connected layers to learn and classify image features. In the convolutional layer, the local features of the image are extracted through convolution operations, thereby capturing the spatial correlation in the image. The pooling layer reduces the amount of calculation by reducing the feature dimension and retains the main features. The fully connected layer is responsible for mapping learned features and labels to implement image classification or other tasks. The design of this network structure makes convolutional neural networks useful in image processing and recognition.

Compare the similarities, differences and relationships between dilated convolution and atrous convolution

Jan 22, 2024 pm 10:27 PM

Compare the similarities, differences and relationships between dilated convolution and atrous convolution

Jan 22, 2024 pm 10:27 PM

Dilated convolution and dilated convolution are commonly used operations in convolutional neural networks. This article will introduce their differences and relationships in detail. 1. Dilated convolution Dilated convolution, also known as dilated convolution or dilated convolution, is an operation in a convolutional neural network. It is an extension based on the traditional convolution operation and increases the receptive field of the convolution kernel by inserting holes in the convolution kernel. This way, the network can better capture a wider range of features. Dilated convolution is widely used in the field of image processing and can improve the performance of the network without increasing the number of parameters and the amount of calculation. By expanding the receptive field of the convolution kernel, dilated convolution can better process the global information in the image, thereby improving the effect of feature extraction. The main idea of dilated convolution is to introduce some

causal convolutional neural network

Jan 24, 2024 pm 12:42 PM

causal convolutional neural network

Jan 24, 2024 pm 12:42 PM

Causal convolutional neural network is a special convolutional neural network designed for causality problems in time series data. Compared with conventional convolutional neural networks, causal convolutional neural networks have unique advantages in retaining the causal relationship of time series and are widely used in the prediction and analysis of time series data. The core idea of causal convolutional neural network is to introduce causality in the convolution operation. Traditional convolutional neural networks can simultaneously perceive data before and after the current time point, but in time series prediction, this may lead to information leakage problems. Because the prediction results at the current time point will be affected by the data at future time points. The causal convolutional neural network solves this problem. It can only perceive the current time point and previous data, but cannot perceive future data.