OpenAI co-founder Karpathy published an article: Taking autonomous driving as an example to explain AGI! The original text has been deleted, please bookmark it now


OpenAI scientist Karpathy has offered his own explanation of artificial general intelligence (AGI).

A few days ago, Karpathy published an article on his personal blog titled "Studying autonomous driving as a case of AGI."

For reasons unknown, he has since deleted the article. Fortunately, an archived copy exists online.

As is well known, Karpathy is not only a founding member of OpenAI but also the former senior director of AI at Tesla, where he led the Autopilot autonomous driving effort.

He uses autonomous driving as a case study for thinking about AGI, and the views in the article are well worth reading.

Autonomous Driving

The explosion of LLMs has prompted much discussion about when AGI will arrive and what it might look like.

Some people are hopeful and optimistic about the future of AGI. Others are fearful and pessimistic.

Unfortunately, many of these discussions are too abstract, so people end up talking past each other.

Therefore, I am always looking for concrete analogies and historical precedents to approach the subject in more concrete terms.

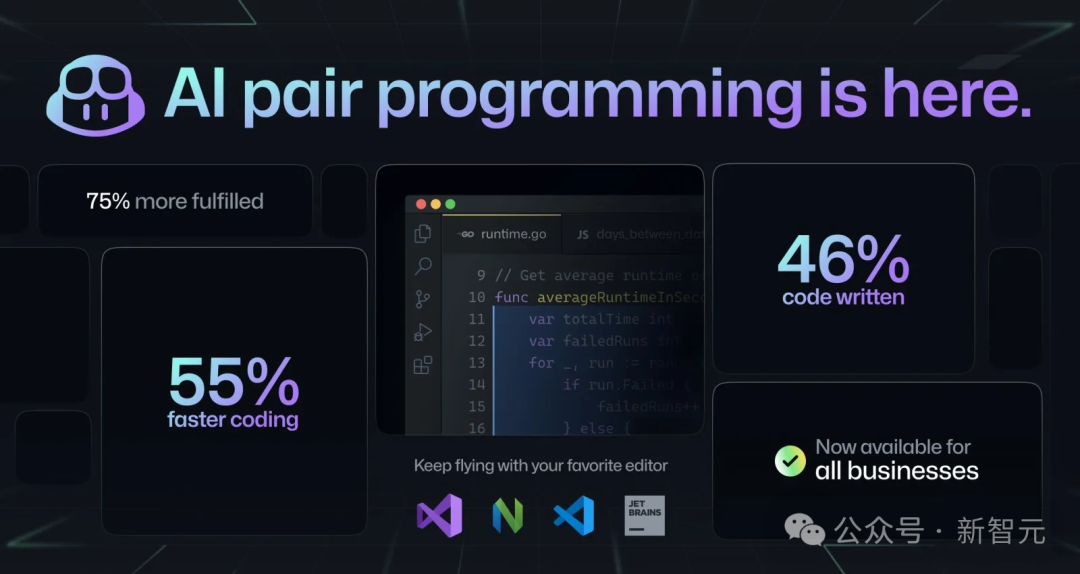

In particular, when I am asked "What do you think AGI will look like", I personally like to use autonomous driving as an example. In this article I want to explain why. Let’s start with a common definition of AGI:

AGI: An autonomous system that exceeds human capabilities in most economically valuable tasks.

Please note that there are two specific requirements in this AGI definition.

First of all, it is a completely autonomous system, that is, it operates on its own with little or no human supervision.

Second, it operates autonomously on most economically valuable work. For data on this part, I personally like to refer to the index of occupations from the U.S. Bureau of Labor Statistics.

A system that has both of these properties is called AGI.

What I would like to suggest in this article is that the recent development of our self-driving capabilities is a very good early case study of the social dynamics of increasing automation, and by extension of what AGI as a whole will look and feel like.

The reason I think so is that this field has several characteristics that, put simply, make it "a big deal": autonomous driving is highly visible to society and very easy to understand.

It accounts for a large part of the economy and employs a large amount of human labor. Driving is a sufficiently complex problem, yet we have already automated it, and this has attracted great attention from society.

Of course, there are other industries that have achieved large-scale automation, but either I am not personally familiar with them, or they lack some of the attributes mentioned above.

L2 Automation

The automation of driving is considered a very challenging problem in the field of AI and cannot be achieved overnight.

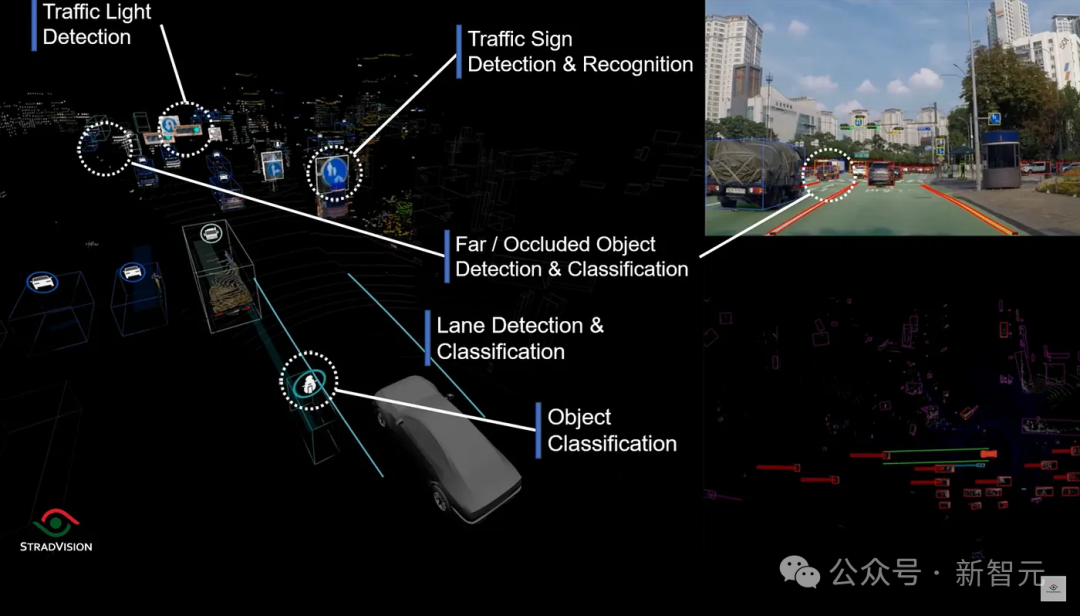

It emerges through a process of gradually automating driving tasks. This process involves many stages of "tool-based AI".

In terms of vehicle automation, many cars are currently equipped with L2 driver assistance systems, that is, an AI that collaborates with the human driver to complete the journey from the starting point to the destination.

Although it is not yet fully autonomous, L2 can already handle many basic tasks in driving.

Sometimes, it can even complete the entire operation process automatically, such as automatic parking.

In this process, humans mainly play a supervisory role and can take over at any time, either driving directly or issuing high-level instructions (such as lane change requests).

In some aspects (such as keeping lanes centered and making quick decisions), AI's performance even exceeds that of humans, but it still falls short in some rare situations.

This is very similar to many AI tools we have seen in other industries, especially since the recent technological breakthroughs in LLMs.

For example, as a programmer, when I use GitHub Copilot to automatically complete a piece of code, or use GPT-4 to write a more complex function, I am actually handing the basic task over to an automated system.

But again, I can always step in and make adjustments if necessary.

In other words, Copilot and GPT-4 are like "Level 2" automation tools in the field of programming.

There are many similar "Level 2" automation solutions across industries, and not all of them are based on large models: from TurboTax, to Amazon warehouse robots, to various "tool-based AI" in fields such as translation, writing, art, law, and marketing.

Fully Autonomous Driving

Over time, some systems have reached new heights of reliability, as Waymo has today.

They are gradually realizing "fully autonomous driving".

Now, in San Francisco, you only need to open the app to hail a Waymo self-driving car. It will pick you up and deliver you safely to your destination.

This is truly amazing. You don’t need to know how to drive, and you don’t need to pay attention to road conditions. You just need to sit back comfortably and take a nap, and the system will take you from the starting point to the ending point.

Like many people I talk to, I personally prefer Waymo over Uber, and I use it almost exclusively for inner-city transportation.

The ride is more stable and predictable, the driving is smooth, and you can listen to music without worrying about what a driver thinks of what you are playing.

Mixed Economy

Although autonomous driving technology has become a reality, many people still choose to use Uber. Why?

First of all, many people simply don’t know that they can choose Waymo as a transportation tool. Even if they know, many people still lack enough trust in automated systems and prefer to be driven by human drivers.

Even people who accept autonomous driving may still prefer human drivers, for example because they enjoy the conversation and the interaction with another person.

Beyond personal preference, the growing wait times in the app show that Waymo is facing a supply shortage. The number of vehicles in service is far from enough to meet demand.

Part of this may be that Waymo is very cautious in managing and monitoring risks and public opinion.

On the other hand, as far as I know, Waymo is restricted by regulators and can only deploy a certain number of vehicles on the streets. Another limiting factor is that Waymo can't completely replace Uber overnight.

They need to build infrastructure, produce cars, and scale operations.

I personally believe that automation in other areas of the economy will face the same situation: some people and companies will adopt it immediately, but many people (1) will not know about these technologies, (2) even if they do, will not trust them, and (3) even if they trust them, will still prefer to work with humans.

But beyond that, demand exceeds supply, and AGI will be subject to the same constraints for the same reasons, including developer self-restraint, regulatory constraints, and resource shortages such as the need to build more GPU data centers.

Globalization of Technology

As I already hinted in discussing resource constraints, deploying this technology globally is very costly, requires a lot of manpower, and proceeds slowly.

Today, Waymo can only drive in San Francisco and Phoenix, but the technology itself is general and scalable, so the company may soon expand to Los Angeles, Austin, and other places.

Self-driving cars may also be limited by other environmental factors, such as driving in heavy snow. In some rare cases, it may even require operator rescue.

In addition, the expansion of technical capabilities also requires a lot of resource costs and is not free.

For example, Waymo must invest resources before entering another city, such as mapping the streets and adapting its perception, planning, and control algorithms to special situations or local regulations.

As the analogy to work suggests, many tasks may only be automated under certain circumstances, and expanding that scope will require a lot of work.

In either case, autonomous driving technology itself is universal and scalable, and its application prospects will gradually broaden over time.

Social reaction: It quickly became a "passing cloud"

One thing I find particularly interesting about the way autonomous driving technology is gradually integrating into society is this:

Just a few years ago, people were hotly debating, with plenty of doubt and worry, whether it could work at all or was even feasible. It was an issue of widespread concern. But now, autonomous driving is no longer a dream of the future. It is really here.

No longer just a research prototype, it has become a fully automated mode of transportation that anyone can pay for.

Within the current scope of application, autonomous driving technology has achieved complete autonomy.

However, overall, this does not seem to attract too many people's attention. Most people I talk to (including in tech!) are not even aware of this development.

When you ride Waymo on the streets of San Francisco, you’ll notice a lot of curious eyes on it. They will first be surprised, then stare in curiosity.

After that, they will move on with their lives.

When automation also achieves full autonomy in other industries, the world may not be thrown into turmoil either.

Most people may not even realize this change at first. When they notice, they may just look at it curiously and then shrug it off, with reactions ranging from denial to acceptance.

Some people may be upset by this and even take some protest actions, such as placing traffic cones in front of Waymo cars.

Of course, we are still far from seeing this phenomenon fully play out. But when it does, I expect it to largely follow the pattern described here.

Economic Impact

When we talk about employment, there is no denying that Waymo has clearly replaced the driver's position.

But at the same time, it has also spawned many jobs that did not exist before, and these positions are relatively less visible: annotators who label training data for neural networks, remote support staff who assist cars that run into trouble, the staff responsible for building and maintaining the fleet, and so on.

A whole new industry of sensors and related infrastructure has also been born to build these sophisticated, high-tech cars.

As with work in general, many positions will change. Some positions will disappear as a result, but many new positions will also appear.

This is actually a change in the form of work, rather than a simple reduction in positions, although the reduction in positions is the most intuitive change.

While it is hard to claim that overall employment will not decline over time, the pace of change is much slower than one would naively expect.

Competitive Environment

Finally, I would like to talk about the competitive environment in the field of autonomous driving.

A few years ago, self-driving car companies were popping up like mushrooms after rain. But today, as people gradually realize the complexity of this technology (I personally believe that automation is still very difficult based on current artificial intelligence and computing technology), this field has experienced large-scale consolidation.

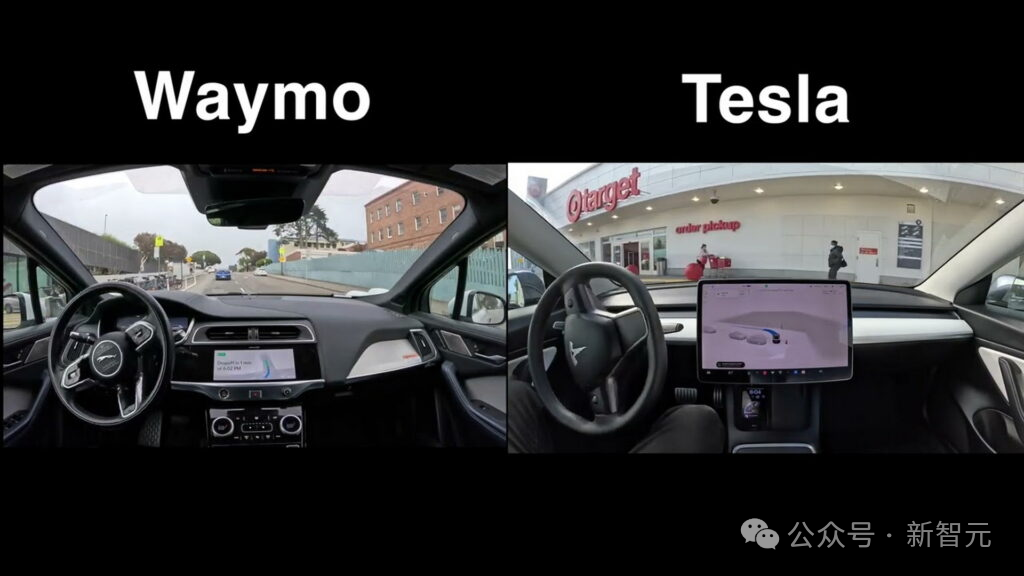

Among them, Waymo has become the first company to fully demonstrate the future of autonomous driving.

Even so, some companies are still catching up, such as Cruise, Zoox, and my personal favorite, Tesla.

I would like to briefly mention it here, based on my experience and participation in this field. In my opinion, the ultimate goal of the autonomous driving industry is to achieve fully autonomous driving on a global scale.

Waymo chose the strategy of first realizing autonomous driving and then expanding globally, while Tesla first deployed globally and then gradually improved its autonomous driving technology.

Obviously, these two companies face completely different challenges: one mainly has a software problem to solve, and the other a hardware problem.

Currently, I am very satisfied with their products. Personally, I am full of support for the technology itself.

Similarly, many other industries may also go through a phase of rapid growth and expansion (like the autonomous driving field around 2015), but in the end, only a few companies may survive the competition.

In this process, many practical AI auxiliary tools (such as the current L2 ADAS function) and some open platforms (such as Comma) will be widely used.

Artificial General Intelligence (AGI)

The above is a rough outline of how I expect artificial general intelligence (AGI) to develop.

Imagine that such changes will spread across the entire economy at different speeds, accompanied by many unpredictable interactions and chain reactions.

Although this idea may not be perfect, I think it is a model worth remembering and useful to refer to.

From a memetic perspective, this picture of AGI is a far cry from the kind of superintelligence that escapes our control, recursively enhances itself in cyberspace, creates deadly pathogens or nanobots, and ultimately turns the galaxy into gray goo.

In comparison, it is more like the development of autonomous driving technology: a rapidly advancing automation technology that is changing society. Its pace of development will be limited in many ways, including by educated labor, information, materials, energy, and regulation.

In this, society is both an observer and a participant.

The world will not collapse, but will adapt, change and rebuild.

As far as autonomous driving itself is concerned, automating traffic will greatly improve safety, cities will become cleaner and less congested, and the parking lots and parked cars occupying both sides of the road will gradually disappear, freeing up more space.

I personally am full of expectations for all the possible changes brought about by artificial general intelligence (AGI).

Hot discussion among netizens

In short, Karpathy sees the arrival of AGI as resembling the development of autonomous driving. This specific analogy triggered discussion among many netizens.

"Seeing the guy who couldn't deliver FSD and decided to compare FSD to AGI actually did give me confidence that we still have decades to go."

Yeah, he seems to have forgotten the "G". I remember Norvig once said in his artificial intelligence writings decades ago that "intelligence" does not mean omnipotence. For an intelligent agent to be useful, it is enough to solve a small problem. In my opinion, this is where the G comes from.

And now we are suddenly back to the previous narrow definition? I still don't see a path from LLM and autonomous driving to AGI.

Of course, the development of AGI may be gradual and slow, just as we saw with Waymo building self-driving cars. But this is just one of many possible paths, and AGI could also emerge in very different ways, such as by scaling up LLMs.
