Chinese researchers at UCLA propose a new self-play mechanism! An LLM trains itself and outperforms training with GPT-4 expert guidance.

Synthetic data has become the most important cornerstone in the evolution of large language models.

At the end of last year, reports circulated online that former OpenAI chief scientist Ilya Sutskever had repeatedly stated that LLM development faces no data bottleneck and that synthetic data can solve most problems.

After studying the latest batch of papers, Nvidia senior scientist Jim Fan concluded that combining synthetic data with traditional game and image generation techniques could let LLMs achieve substantial self-evolution.

The paper that formally proposed this method was written by a Chinese team from UCLA.

Paper address: https://www.php.cn/link/236522d75c8164f90a85448456e1d1aa

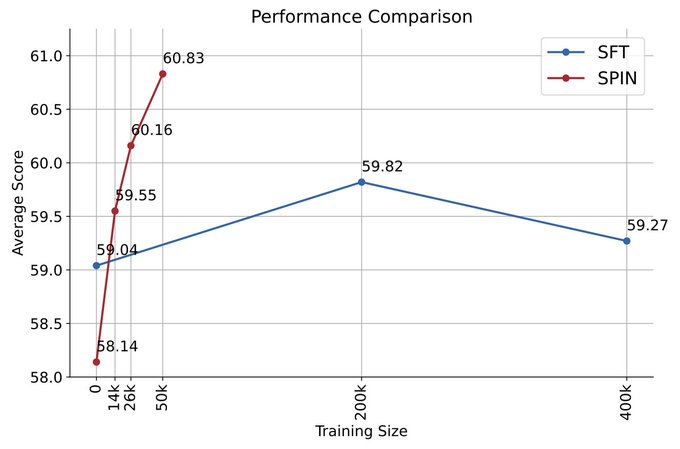

They used a self-play mechanism (SPIN) to generate synthetic data. Through self-fine-tuning, and without relying on any new dataset, the average score of a weak LLM on the Open LLM Leaderboard benchmark improved from 58.14 to 63.16.

The researchers proposed a self-fine-tuning method called SPIN, in which the LLM plays against its own previous iteration, gradually improving the performance of the language model.

This way, the model can evolve on its own, with no need for additional human-annotated data or feedback from a stronger language model.

The main model and the opponent model are the same LLM: the model plays against itself using two different versions.

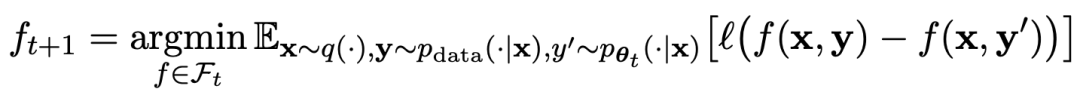

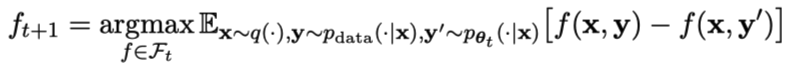

The game process can be summarized by the formula:

Picture

In summary, the idea behind self-play training is roughly as follows:

The main model is trained to distinguish responses generated by the opponent model from human target responses. The opponent model is the language model obtained from the previous rounds of iteration, and its goal is to generate responses that are as hard as possible to tell apart from the human ones.

Suppose the language model parameters obtained in round t are θt. In the next round, θt acts as the opponent player: for each prompt x in the supervised fine-tuning dataset, θt generates a response y'.

The new language model parameters θt+1 are then optimized so that the model can distinguish y' from the human response y in the supervised fine-tuning dataset. Repeating this forms a gradual process that steadily approaches the target response distribution.
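The round structure described above can be sketched as a short outer loop. This is a minimal illustration, not the authors' implementation: the function name `spin_outer_loop` and the callables `generate` and `train_main_player` are hypothetical placeholders for a real generation routine and a real fine-tuning step.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical types for illustration only.
Pair = Tuple[str, str]          # (prompt x, human response y)
Triple = Tuple[str, str, str]   # (prompt x, human response y, synthetic response y')


def spin_outer_loop(
    sft_data: List[Pair],
    model: Any,
    generate: Callable[[Any, str], str],
    train_main_player: Callable[[Any, List[Triple]], Any],
    num_iterations: int,
) -> Any:
    """Run the self-play rounds: the current model is the opponent in each round."""
    for _ in range(num_iterations):
        # The opponent (parameters theta_t) answers every prompt in the SFT dataset.
        triples = [(x, y, generate(model, x)) for x, y in sft_data]
        # The main player is fine-tuned to tell y from y', yielding theta_{t+1},
        # which then serves as the opponent in the next round.
        model = train_main_player(model, triples)
    return model
```

Note that the SFT dataset itself never changes between rounds; only the synthetic responses are regenerated by the latest model.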

Here, the loss function of the main model is a logistic (log) loss applied to the difference between the function values at y and y'.

KL-divergence regularization is added when updating the opponent model, to keep the new model from deviating too far from the previous iteration.

The specific adversarial training objective is given in Equation 4.7 of the paper. The theoretical analysis shows that the optimization converges when the language model's response distribution equals the target response distribution.
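To make the training objective referred to above more concrete, here is a minimal per-example sketch. It assumes the logistic loss ℓ(t) = log(1 + exp(−t)) and a function value of the form λ·log(p_θ / p_θt), which is our reading of the SPIN paper rather than something stated in this article. The names `spin_loss` and `lam`, and the default `lam=0.1`, are illustrative assumptions.

```python
import math


def logistic_loss(t: float) -> float:
    """l(t) = log(1 + exp(-t)), written to avoid overflow for large |t|."""
    if t >= 0:
        return math.log1p(math.exp(-t))
    return -t + math.log1p(math.exp(t))


def spin_loss(
    logp_new_human: float,   # log p_theta(y | x), new model on the human response
    logp_old_human: float,   # log p_theta_t(y | x), opponent on the human response
    logp_new_synth: float,   # log p_theta(y' | x), new model on the synthetic response
    logp_old_synth: float,   # log p_theta_t(y' | x), opponent on the synthetic response
    lam: float = 0.1,        # assumed regularization strength, not from the article
) -> float:
    """Per-example loss: l(lam * [log-ratio on y  -  log-ratio on y'])."""
    margin = lam * (
        (logp_new_human - logp_old_human) - (logp_new_synth - logp_old_synth)
    )
    return logistic_loss(margin)
```

When the new model equals the opponent, both log-ratios are zero and the loss is log 2. The loss falls as the new model raises the likelihood of the human response y relative to the synthetic response y'.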

Training on the synthetic data generated through this game, that is, self-fine-tuning with SPIN, effectively improves the performance of the LLM.

By contrast, simply fine-tuning again on the initial fine-tuning data causes performance to degrade.

SPIN requires only the initial model and the existing fine-tuning dataset, so an LLM can improve itself without additional resources.

In particular, SPIN even outperforms models trained with additional GPT-4 preference data via DPO.

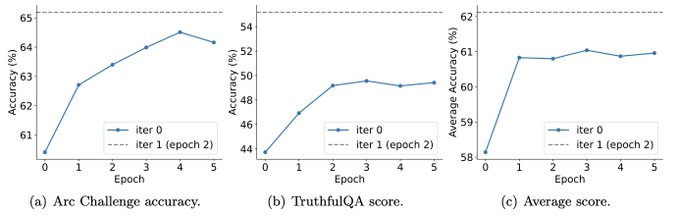

Experiments also show that iterative training improves model performance more effectively than simply training for more epochs.

Extending the training duration of a single iteration does not hurt SPIN's performance, but the gains eventually plateau.

The more iterations are run, the more pronounced the effect of SPIN becomes.

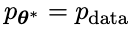

After reading the paper, commenters online remarked:

Synthetic data will dominate the development of large language models, which is very good news for researchers working on them!

Self-play allows LLMs to improve continuously

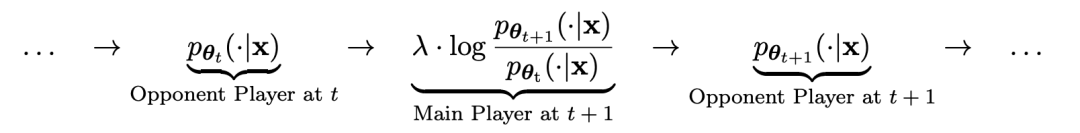

Specifically, the SPIN system developed by the researchers consists of two models that influence and improve each other.

The LLM from the previous iteration t, denoted p_θt, is used to generate responses y' to the prompts x in the human-annotated SFT dataset.

The next goal is to find a new LLM, p_θt+1, that can distinguish the responses y' generated by p_θt from the responses y written by humans.

This process can be viewed as a two-player game:

The main player, the new LLM p_θt+1, tries to tell the opponent's responses apart from human-generated ones, while the opponent, the old LLM p_θt, generates responses that are as similar as possible to the data in the human-annotated SFT dataset.

The new LLM p_θt+1, obtained by fine-tuning the old p_θt, prefers responses from p_data, resulting in a distribution p_θt+1 that is more consistent with p_data.

In the next iteration, the newly obtained LLM p_θt+1 becomes the opponent that generates responses. The goal of the self-play process is for the LLM to eventually converge to p_data, at which point even the strongest LLM can no longer distinguish responses generated by its previous version from human-generated ones.

How to use SPIN to improve model performance

The researchers designed a two-player game in which the main model's goal is to distinguish LLM-generated responses from human-generated ones, while the opponent's role is to produce responses that are indistinguishable from those of humans.

The core of the approach is a self-play mechanism in which the main player and the opponent are the same LLM, taken from different iterations.

More specifically, the opponent is the old LLM from the previous iteration, and the main player is the new LLM being learned in the current iteration. Iteration t+1 consists of two steps: (1) training the main model, and (2) updating the opponent model.

Training the main model

First, the researchers explain how to train the main player to distinguish LLM responses from human responses. Inspired by the integral probability metric (IPM), they formulate the objective function:

Picture
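Since the formula image did not survive, the following is a plausible reconstruction based on our reading of the SPIN paper, not a verbatim copy. An IPM chooses the function f that maximizes the expected gap between human and synthetic responses; the main player's objective replaces that linear gap with a loss ℓ. The symbols q (prompt distribution), \mathcal{F}_t (function class) and ℓ (a monotonically decreasing convex loss, such as the logistic loss) are assumptions not defined in this article.

```latex
% IPM-style starting point: maximize the expected gap between human and synthetic responses.
f_{t+1} = \arg\max_{f \in \mathcal{F}_t}\;
  \mathbb{E}_{x \sim q,\; y \sim p_{\mathrm{data}}(\cdot \mid x),\; y' \sim p_{\theta_t}(\cdot \mid x)}
  \bigl[\, f(x, y) - f(x, y') \,\bigr]

% Main-player objective: the same gap, passed through a loss \ell.
f_{t+1} = \arg\min_{f \in \mathcal{F}_t}\;
  \mathbb{E}_{x \sim q,\; y \sim p_{\mathrm{data}}(\cdot \mid x),\; y' \sim p_{\theta_t}(\cdot \mid x)}
  \bigl[\, \ell\bigl( f(x, y) - f(x, y') \bigr) \,\bigr],
\qquad \ell(t) = \log\bigl(1 + e^{-t}\bigr)
```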

Updating the opponent model

The goal of the opponent model is to find a better LLM whose responses the main model cannot distinguish from p_data.
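The opponent update with KL regularization can be written out as follows. This is a sketch under assumptions: the notation (f_{t+1} for the main player's function, λ for the regularization strength, q for the prompt distribution) follows our reading of the SPIN paper and is not given in this article. The closed form is the standard solution of a KL-regularized maximization.

```latex
% KL-regularized update: score well under f_{t+1} while staying close to p_{\theta_t}.
p_{\theta_{t+1}} = \arg\max_{p}\;
  \mathbb{E}_{x \sim q,\; y \sim p(\cdot \mid x)} \bigl[\, f_{t+1}(x, y) \,\bigr]
  \;-\; \lambda\, \mathbb{E}_{x \sim q}\,
  \mathrm{KL}\bigl( p(\cdot \mid x) \,\|\, p_{\theta_t}(\cdot \mid x) \bigr)

% Closed-form solution of the problem above.
\hat{p}(y \mid x) \;\propto\; p_{\theta_t}(y \mid x)\,
  \exp\bigl( \lambda^{-1} f_{t+1}(x, y) \bigr)

% Equivalently, f_{t+1}(x, y) = \lambda \log \frac{\hat{p}(y \mid x)}{p_{\theta_t}(y \mid x)}
% up to a term that depends only on x.
```

Because that x-only term cancels in the difference f(x, y) − f(x, y'), the two steps can be folded into a single loss over the model parameters.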

Experiment

SPIN effectively improves benchmark performance

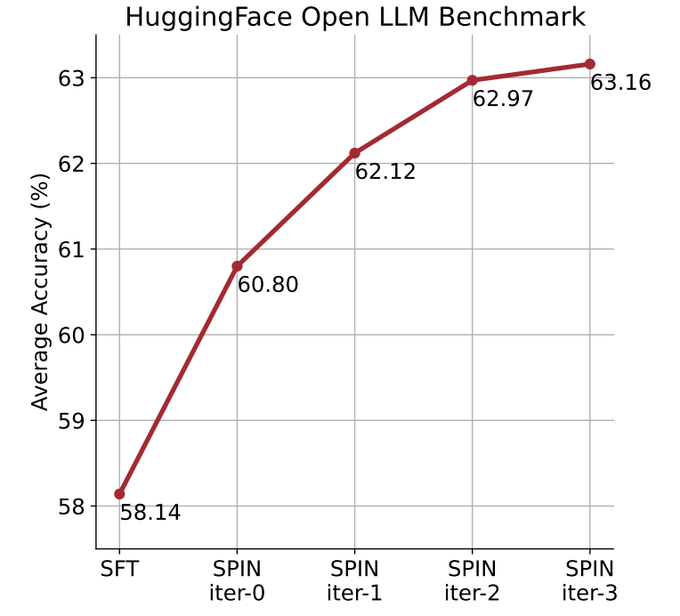

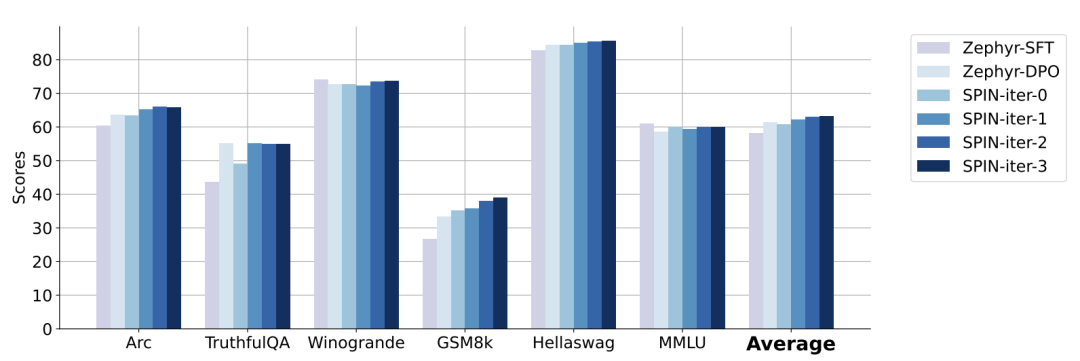

The researchers used the HuggingFace Open LLM Leaderboard for an extensive evaluation to demonstrate the effectiveness of SPIN.

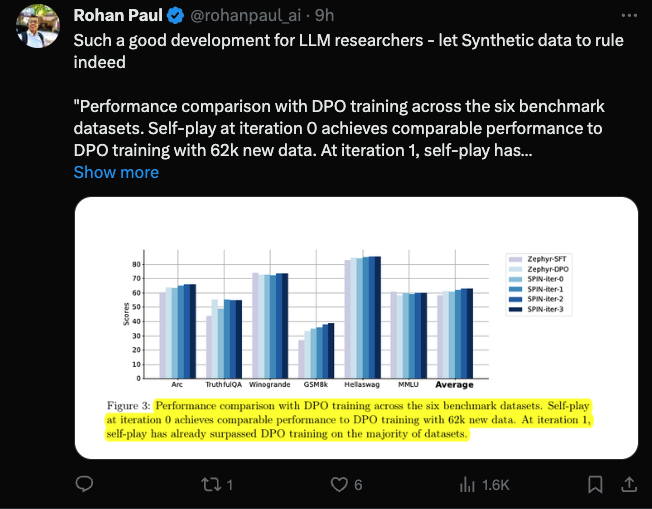

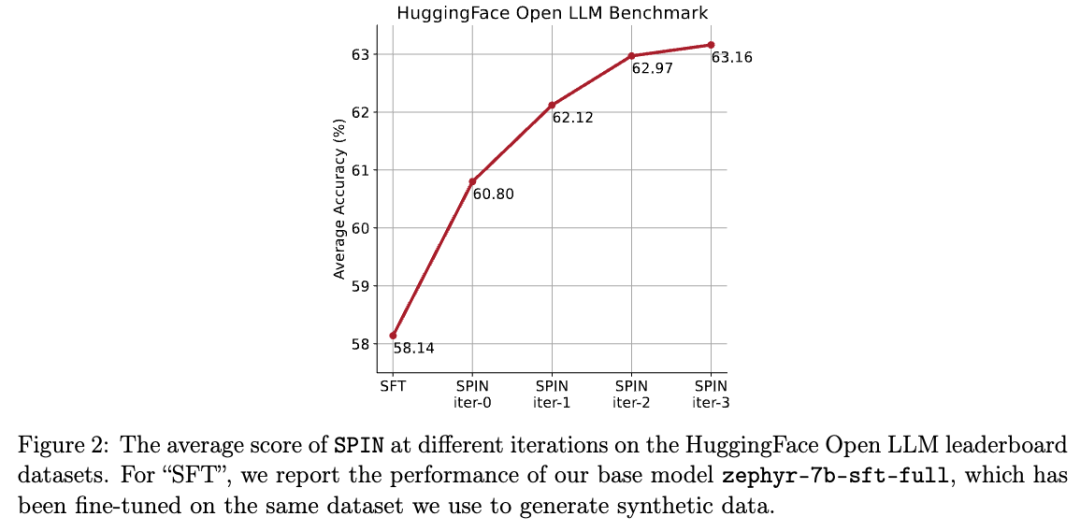

In the figure below, the researchers compare the performance of the model fine-tuned by SPIN after 0 to 3 iterations with that of the base model, zephyr-7b-sft-full.

SPIN delivers significant improvements in model performance by further leveraging the SFT dataset, on which the base model has already been fully fine-tuned.

In iteration 0, the model response was generated from zephyr-7b-sft-full, and the researchers observed an overall improvement of 2.66% in the average score.

This improvement is particularly noticeable on the TruthfulQA and GSM8k benchmarks, with increases of more than 5% and 10% respectively.

In Iteration 1, the researchers employed the LLM model from Iteration 0 to generate new responses for SPIN, following the process outlined in Algorithm 1.

This iteration produces a further enhancement of 1.32% on average, which is particularly significant on the Arc Challenge and TruthfulQA benchmarks.

Subsequent iterations continued the trend of incremental improvements across tasks. At the same time, the improvement at iteration t+1 is naturally smaller than that at iteration t.
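The reported numbers can be cross-checked with a little arithmetic. The sketch below assumes that the "2.66%" and "1.32%" gains are absolute points on the average score, and that 63.16 is the average after the final iteration; both are our interpretation of the figures quoted in this article.

```python
# Figures quoted in the article.
base_score = 58.14        # zephyr-7b-sft-full average
gain_iter0 = 2.66         # improvement reported for iteration 0
gain_iter1 = 1.32         # further improvement reported for iteration 1
final_score = 63.16       # average reported after SPIN

# Assumption: gains are absolute points, so they simply add up.
after_iter0 = round(base_score + gain_iter0, 2)
after_iter1 = round(after_iter0 + gain_iter1, 2)

# Whatever is left must come from the later iterations.
remaining_gain = round(final_score - after_iter1, 2)

print(after_iter0, after_iter1, remaining_gain)
```

Under these assumptions, the gain left for the later iterations is smaller than either of the first two, which matches the pattern of diminishing improvements.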

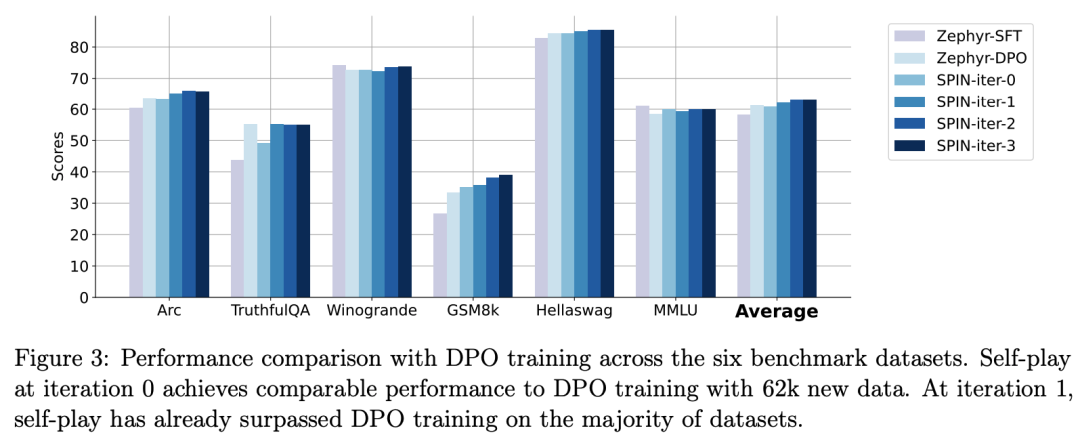

zephyr-7b-beta is a model derived from zephyr-7b-sft-full, trained with DPO on approximately 62k preference data.

The researchers note that DPO requires human input or high-level language model feedback to determine preferences, so data generation is a fairly expensive process.

In contrast, the researchers’ SPIN only requires the initial model itself.

Additionally, unlike DPO, which requires new data sources, the researchers’ approach fully leverages existing SFT datasets.

The following figure shows the performance comparison of SPIN and DPO training at iterations 0 and 1 (using 50k SFT data).

Picture

The researchers observe that although DPO uses more data from new sources, SPIN, which relies only on the existing SFT data, surpasses DPO's performance on the leaderboard benchmark from iteration 1 onward.
