Technology peripherals

Technology peripherals

AI

AI

Terence Tao called him an expert after seeing it! Google and others used LLM to automatically prove theorems and won top conference outstanding papers. The more complete the context, the better the proof.

Terence Tao called him an expert after seeing it! Google and others used LLM to automatically prove theorems and won top conference outstanding papers. The more complete the context, the better the proof.

Terence Tao called him an expert after seeing it! Google and others used LLM to automatically prove theorems and won top conference outstanding papers. The more complete the context, the better the proof.

Transformer’s skill tree is getting more and more powerful.

Researchers from the University of Massachusetts, Google, and the University of Illinois at Urbana-Champaign (UIUC) recently published a paper in which they successfully achieved The goal is to automatically generate complete theorem proofs.

Paper address: https://arxiv.org/pdf/2303.04910.pdf

This This work, named after Baldur (brother of Thor in Norse mythology), demonstrated for the first time that Transformer can generate full proofs, and also showed that previous proofs of the model can be improved when providing additional context for the model.

This paper was published at ESEC/FSE (ACM European Joint Conference on Software Engineering and Symposium on Fundamentals of Software Engineering) in December 2023, and won the Outstanding Paper Award.

#As we all know, bugs are inevitable in software, which may not cause too much of a problem for an average application or website. However, for the software behind critical systems, such as encryption protocols, medical devices, and space shuttles, we must ensure there are no bugs.

- General code review and testing cannot give this guarantee, which requires formal verification.

For formal verification, ScienceDirect’s explanation is:

the process of mathematically checking that the behavior of a system, described using a formal model, satisfies a given property, also described using a formal model

refers to the process of mathematically checking whether the system behavior described by the formal model satisfies the given property.

To put it simply, it uses mathematical analysis methods to build a model through an algorithm engine to conduct exhaustive analysis and verification of the state space of the design to be tested.

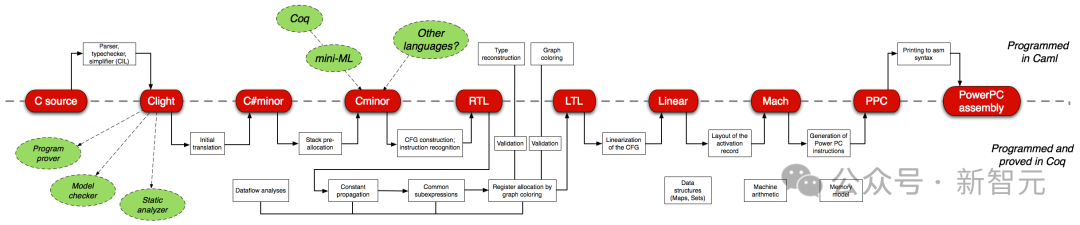

Formal software verification is one of the most challenging tasks for software engineers. For example, CompCert, a C compiler verified with the Coq interactive theorem prover, is the only compiler used by ubiquitous GCC and LLVM, among others.

However, the cost of manual formal verification (writing proofs) is quite huge - the proof of a C compiler is more than three times that of the compiler code itself.

Therefore, formal verification itself is a "labor-intensive" task, and researchers are also exploring automated methods.

Proof assistants such as Coq and Isabelle train a model to predict one proof step at a time and use the model to search the possible proof space.

Baldur in this article introduced the ability of large language models in this field for the first time, training on natural language text and code, and fine-tuning the proof,

Baldur can generate complete proofs of theorems in one go, rather than one step at a time.

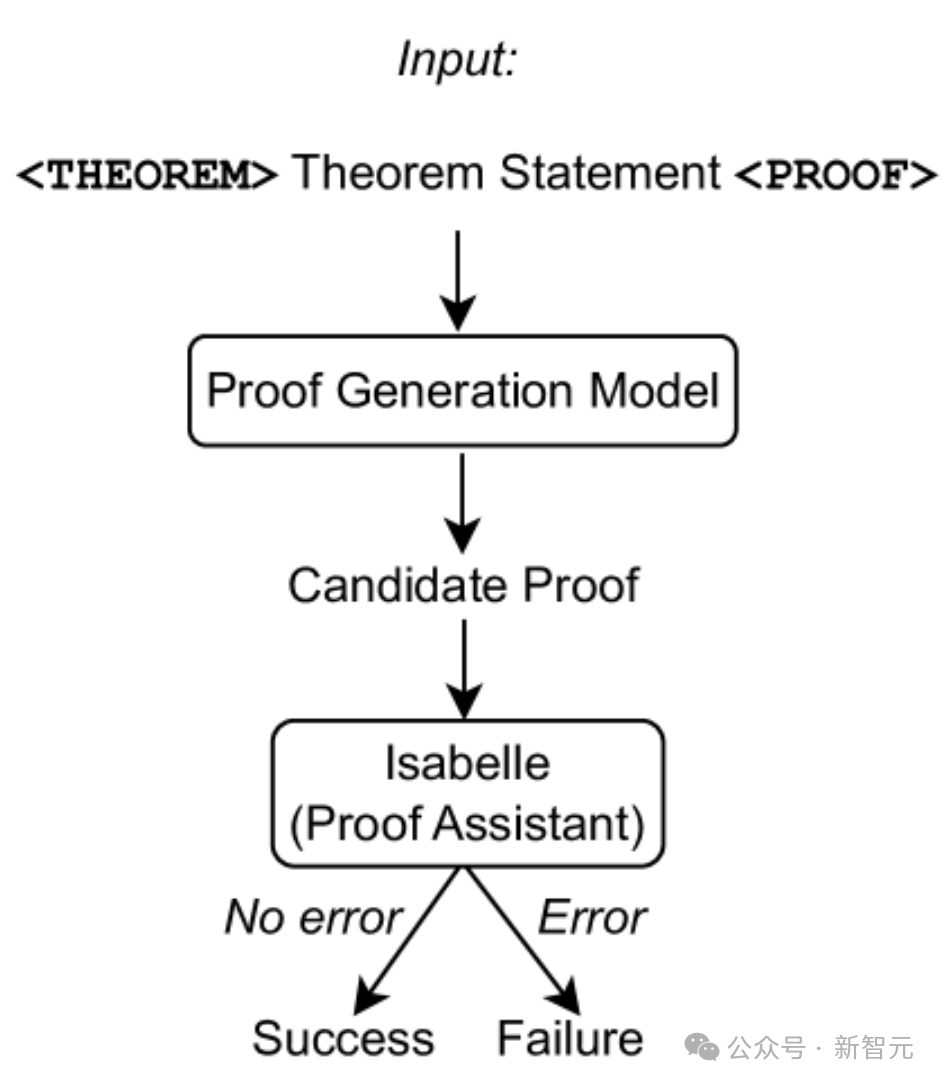

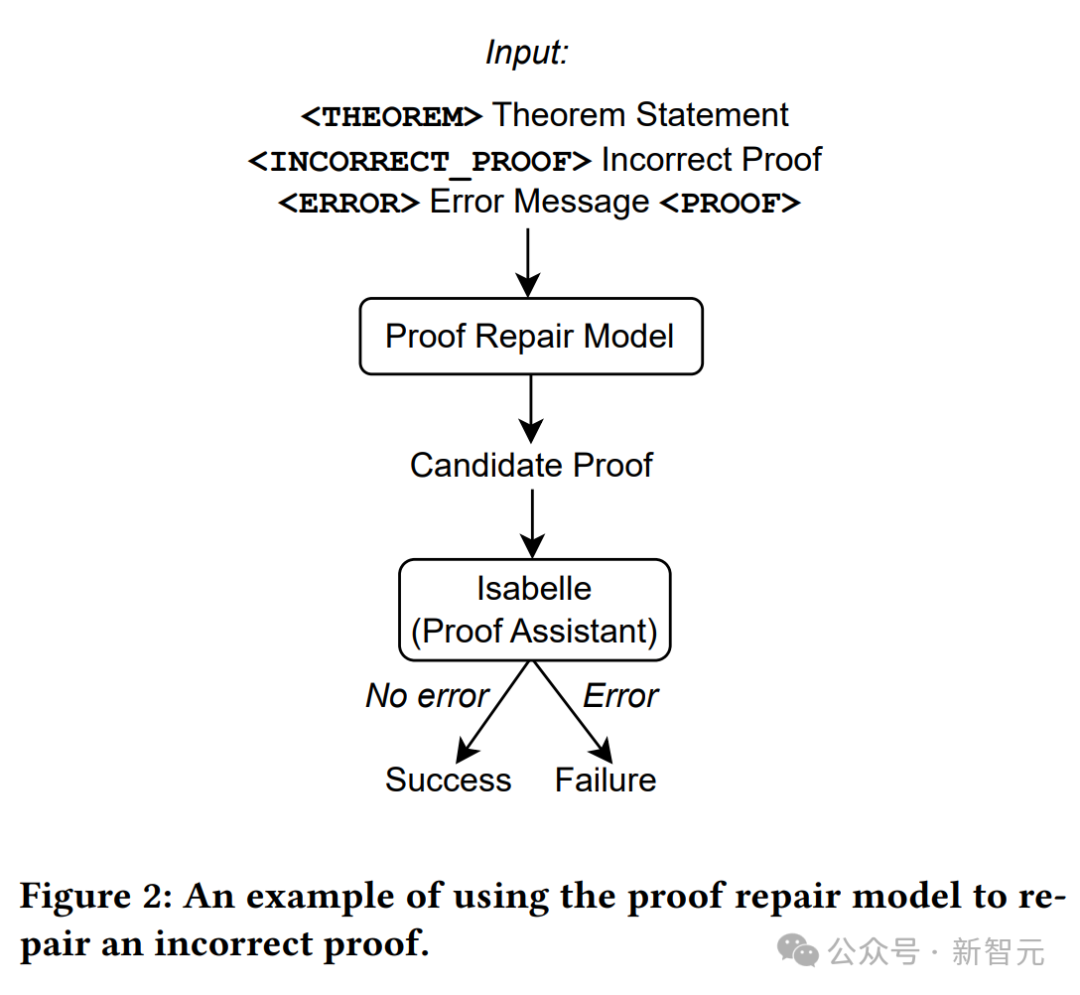

As shown in the figure above, only use theorem statements as input to the proof generation model, then extract the proof attempts from the model, and use Isabelle to perform the proof examine.

If Isabelle accepts the proof attempt without errors, the proof is successful; otherwise, another proof attempt is extracted from the proof generation model.

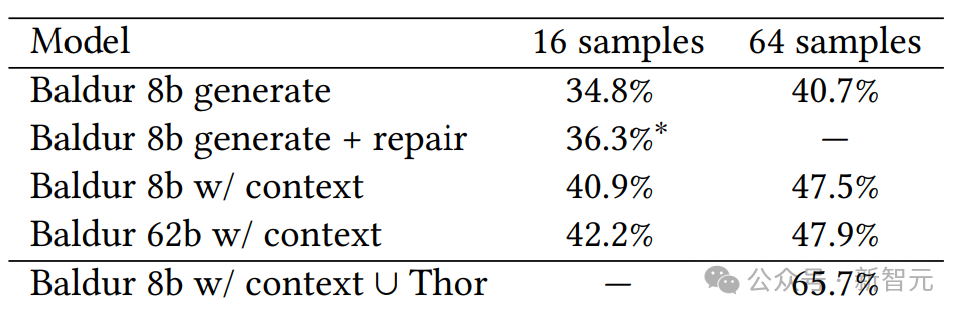

Baldur is evaluated on a benchmark of 6336 Isabelle/HOL theorems and their proofs, empirically demonstrating the effectiveness of complete proof generation, repair and adding context.

In addition, the reason why this tool is called Baldur may be because the best automatic proof generation tool currently is called Thor.

Thor has a higher proof rate (57%), using a smaller language model combined with a method of searching the space of possible proofs to predict the next step in the proof, while Baldur's advantage is its ability to generate complete proofs.

But the brothers Thor and Baldur can also work together, which may increase the proof rate to close to 66%.

Automatically generate complete proofs

Baldur is powered by Minerva, Google’s large language model, which is used in scientific papers and web pages containing mathematical expressions. It was trained on and fine-tuned on data about proofs and theorems.

Baldur can work with theorem proving assistant Isabelle, who checks the proof results. When given a theorem statement, Baldur was able to generate a complete proof almost 41% of the time.

To further improve Baldur’s performance, the researchers provided the model with additional contextual information (such as other definitions, or theorem statements in theoretical documents ), which increases the proof rate to 47.5%.

This means that Baldur is able to take the context and use it to predict new correct proofs - similar to programmers who are more likely to do so when they understand the relevant methods and code Fix bugs in the program.

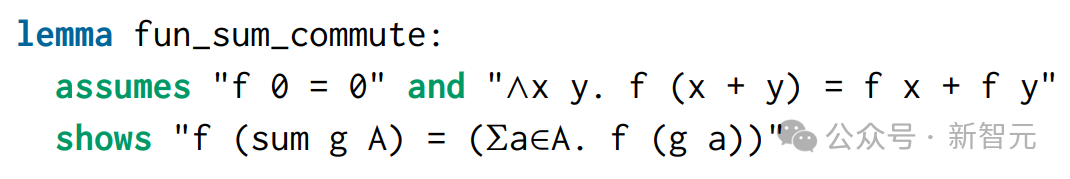

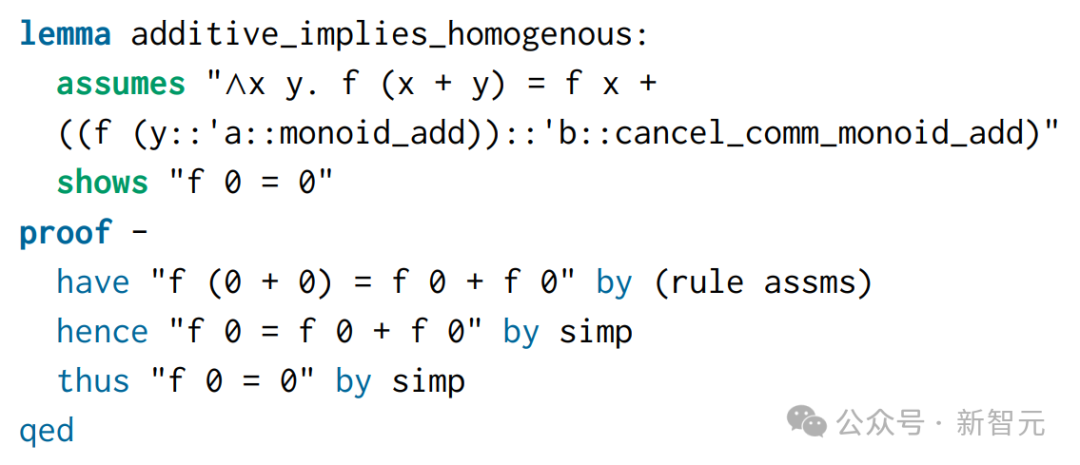

The following is an example (fun_sum_commute theorem):

This theorem comes from a project called Polynomials in the Formal Proof Archives.

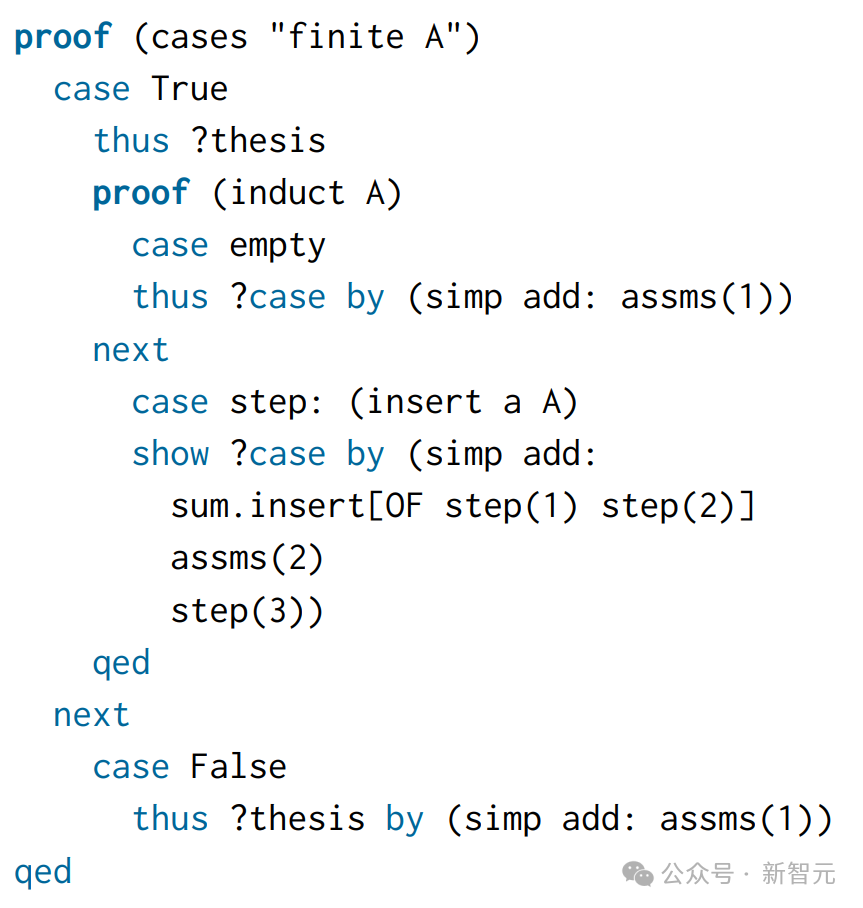

When manually writing proofs, two cases are distinguished: the set is finite or not finite:

So, for the model, the input is the theorem statement, and the target output is this manually written proof.

Baldur recognized the need for induction here and applied a special induction law called infinite_finite_induct, which follows the same general approach as human written proofs, but is more concise.

Because of the need for induction, the Sledgehammer used by Isabelle cannot prove this theorem by default.

Training

To train the proof generation model, the researchers constructed a new proof generation dataset.

The existing dataset contains examples of a single proof step, and each training example includes the proof state (input) and the next proof step to apply (goal).

Given a dataset containing a single proof step, here you need to create a new dataset in order to train the model to predict the entire proof at once.

The researchers extracted the proof steps for each theorem from the dataset and concatenated them to reconstruct the original proof.

Proof of repair

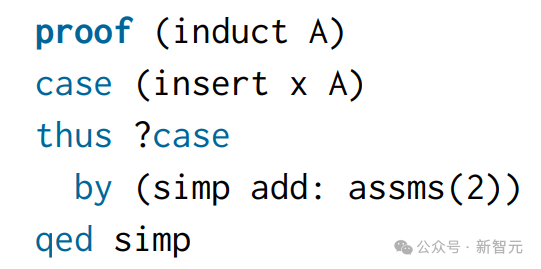

Still take the above fun_sum_commute as an example,

Baldur's first generated proof attempt failed in the proof checker.

Baldur tried to apply induction but failed to first break down the proof into two cases (finite vs. infinite sets). Isabelle returns the following error message:

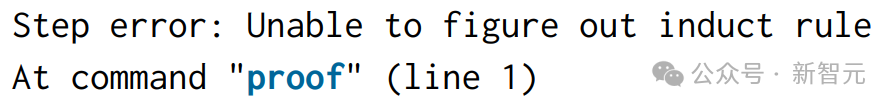

To derive a proof-repair training example from these strings, here the theorem statements, failed proof attempts, and error messages are concatenated as input, using the correct Human-written proofs as targets.

#The above figure details the creation process of training data.

Use a proof generation model to sample proofs with a temperature of 0 for each question in the original training set.

Use the Proofing Assistant to record all failed proofs and their error messages, then proceed to build a new proof-fix training set.

For each original training example, concatenate the theorem statement, the (incorrect) candidate proof generated by the proof generation model, and the corresponding error message to obtain input for the new training example sequence.

Add context

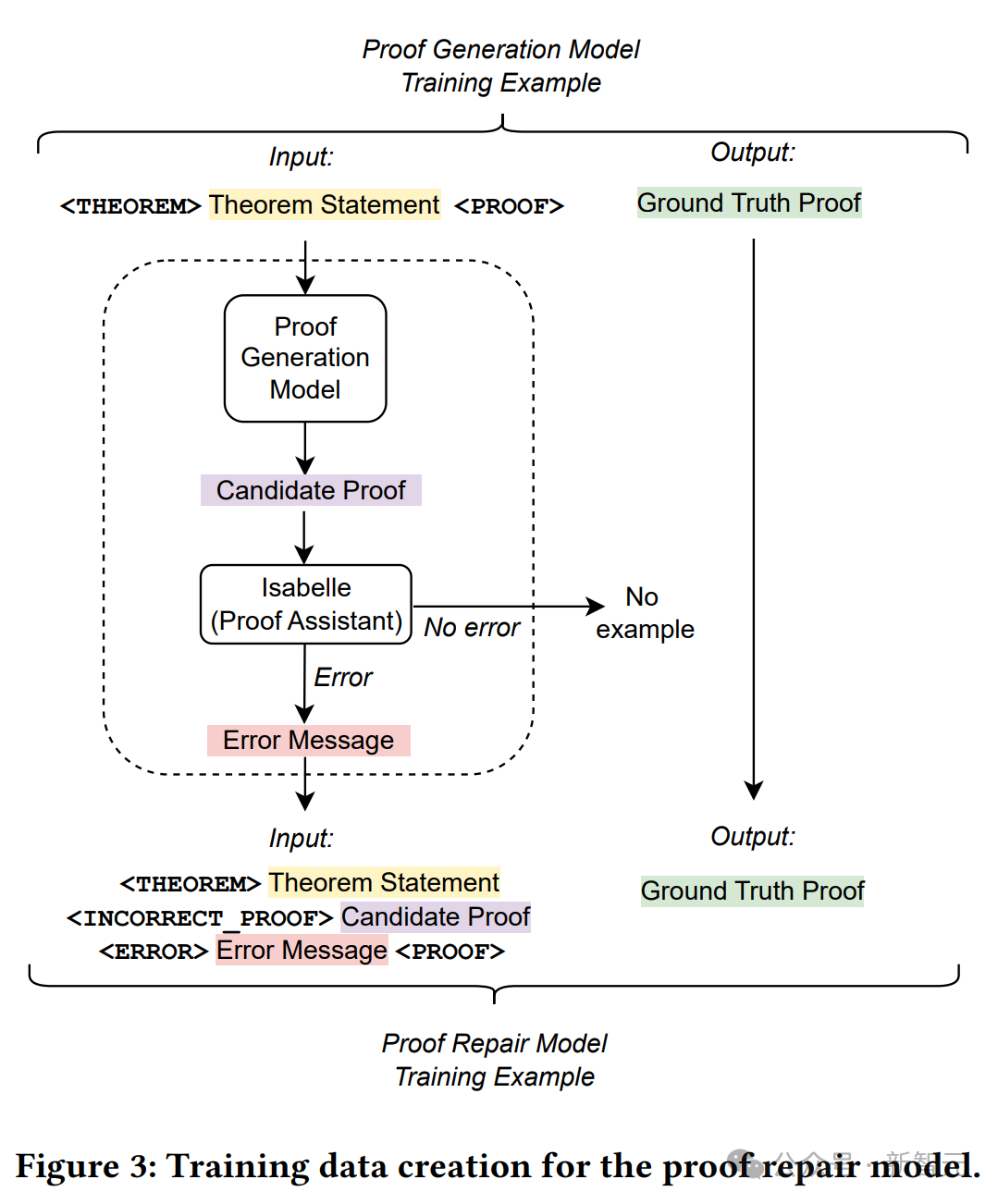

Add lines from the theory file before the theorem statement as additional context. For example, the picture below looks like this:

Baldur’s proof generation model with context can make use of this additional information. Strings that appear in the fun_sum_commute theorem statements appear again in this context, so the additional information surrounding them can help the model make better predictions.

Context can be a statement (theorem, definition, proof) or a natural language annotation.

To take advantage of LLM’s available input length, the researchers first added up to 50 statements from the same theory file.

During training, all these statements are first tokenized and then the left side of the sequence is truncated to fit the input length.

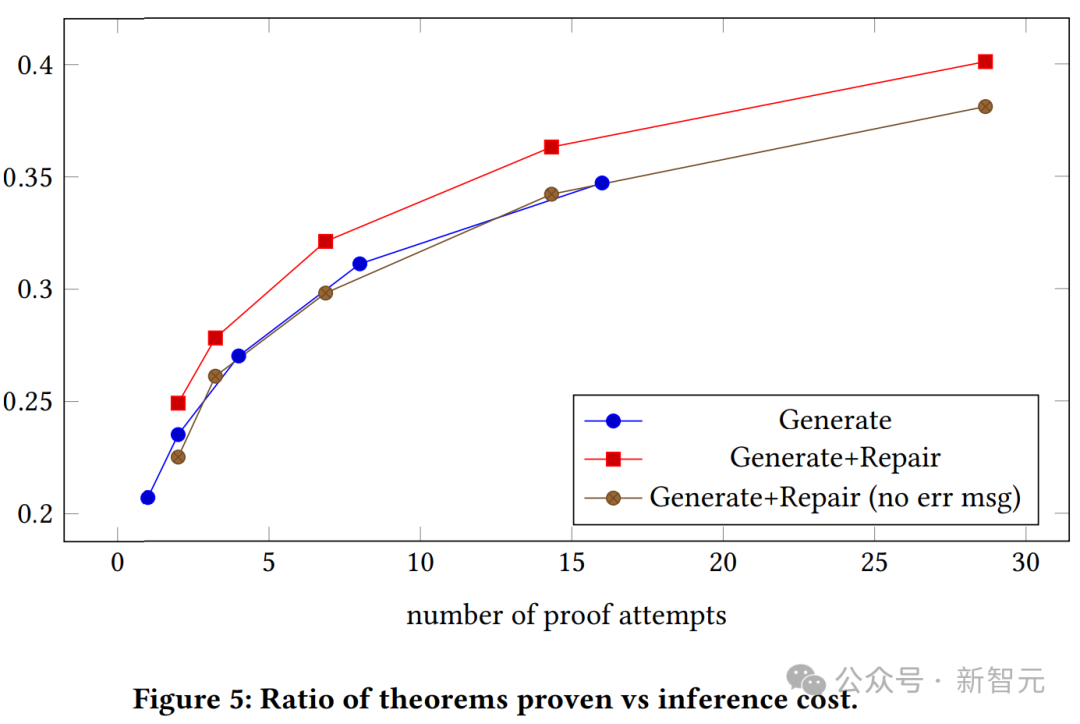

The above figure shows the relationship between the proof success rate and the number of proof attempts for the generative model with context and without context. We can see that proof generative models with context consistently outperform plain generative models.

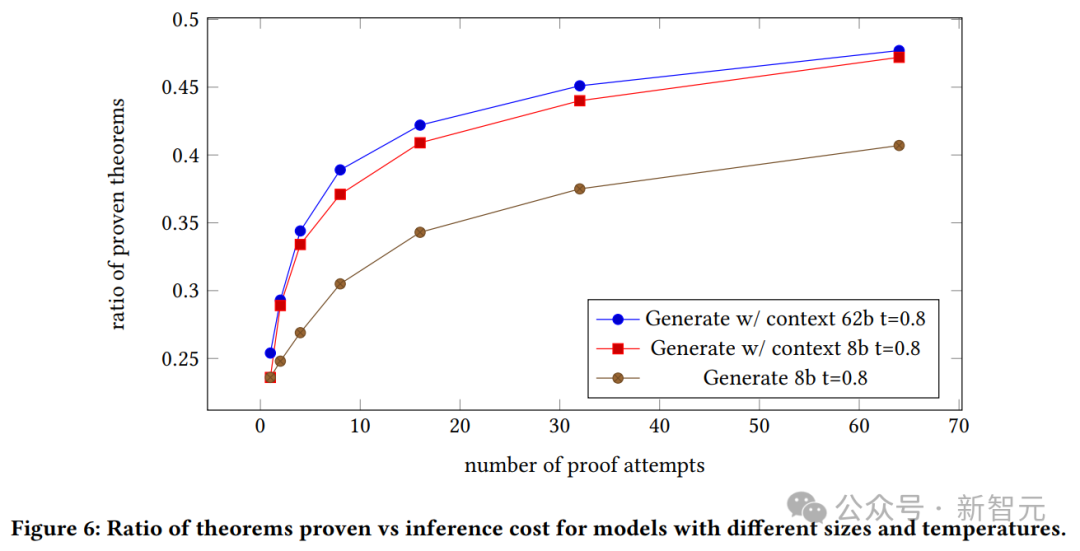

The graph above shows the ratio of verified theorems to inference costs for models of different sizes and temperatures.

We can see the proof success rate of the generated model, as well as the relationship between the context of the 8B model and the 62B model and the number of proof attempts.

62B with context proves that the generative model outperforms the 8B model with context.

However, the authors emphasize here that due to the high cost of these experiments, they cannot adjust the hyperparameters, and the 62B model may perform better if it is optimized.

The above is the detailed content of Terence Tao called him an expert after seeing it! Google and others used LLM to automatically prove theorems and won top conference outstanding papers. The more complete the context, the better the proof.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Top 10 recommended for crypto digital asset trading APP (2025 global ranking)

Mar 18, 2025 pm 12:15 PM

Top 10 recommended for crypto digital asset trading APP (2025 global ranking)

Mar 18, 2025 pm 12:15 PM

This article recommends the top ten cryptocurrency trading platforms worth paying attention to, including Binance, OKX, Gate.io, BitFlyer, KuCoin, Bybit, Coinbase Pro, Kraken, BYDFi and XBIT decentralized exchanges. These platforms have their own advantages in terms of transaction currency quantity, transaction type, security, compliance, and special features. For example, Binance is known for its largest transaction volume and abundant functions in the world, while BitFlyer attracts Asian users with its Japanese Financial Hall license and high security. Choosing a suitable platform requires comprehensive consideration based on your own trading experience, risk tolerance and investment preferences. Hope this article helps you find the best suit for yourself

Which of the top ten virtual currency trading apps is the best? Which of the top ten virtual currency trading apps is the most reliable

Mar 19, 2025 pm 05:00 PM

Which of the top ten virtual currency trading apps is the best? Which of the top ten virtual currency trading apps is the most reliable

Mar 19, 2025 pm 05:00 PM

Top 10 virtual currency trading apps rankings: 1. OKX, 2. Binance, 3. Gate.io, 4. Kraken, 5. Huobi, 6. Coinbase, 7. KuCoin, 8. Crypto.com, 9. Bitfinex, 10. Gemini. Security, liquidity, handling fees, currency selection, user interface and customer support should be considered when choosing a platform.

The latest registration portal for Ouyi official website 2025

Mar 21, 2025 pm 05:57 PM

The latest registration portal for Ouyi official website 2025

Mar 21, 2025 pm 05:57 PM

2025 Ouyi OKX registration entrance forecast and security guide: Understand the future registration process in advance and seize the initiative in digital asset trading! This article predicts that Ouyi OKX registration in 2025 will strengthen KYC certification, implement regional registration procedures, and strengthen security measures, such as multi-factor identity verification and device fingerprint recognition. To ensure safe registration, be sure to access the website through official channels, set a strong password, enable two-factor verification, and be alert to phishing websites and emails. Only by understanding the registration process in advance and preventing risks can you gain an advantage in future digital asset transactions. Read now and master the secrets of Ouyi OKX registration in 2025!

Top 10 official virtual currency trading apps Top 10 official virtual currency trading platforms for mobile phones

Mar 19, 2025 pm 05:21 PM

Top 10 official virtual currency trading apps Top 10 official virtual currency trading platforms for mobile phones

Mar 19, 2025 pm 05:21 PM

Top 10 official virtual currency trading apps: 1. OKX, 2. Binance, 3. Gate.io, 4. Kraken, 5. Huobi, 6. Coinbase, 7. KuCoin, 8. Crypto.com, 9. Bitfinex, 10. Gemini. Security, liquidity, handling fees, currency selection, user interface and customer support should be considered when choosing a platform.

Virtual currency exchange ranking app

Mar 18, 2025 am 11:15 AM

Virtual currency exchange ranking app

Mar 18, 2025 am 11:15 AM

This article ranks mainstream virtual currency exchanges based on factors such as user experience, transaction volume, and security, and focuses on recommending the three major platforms of OKX, Binance and gate.io. OKX leads the way with strong technical strength and rich product line, Binance follows closely with its high transaction volume and wide user base, while gate.io ranks third with diversified currency options and low transaction fees. The article also covers other well-known exchanges such as Huobi, Kraken, and Coinbase. It also analyzes in detail the key factors such as security, liquidity, trading fees, currency selection and user experience that need to be considered when choosing an exchange, providing reference for investors and helping you choose a safe and reliable virtual currency trading platform. Select an exchange

Top 10 virtual currency trading platform app rankings Top 10 virtual currency trading platform rankings

Mar 19, 2025 pm 04:51 PM

Top 10 virtual currency trading platform app rankings Top 10 virtual currency trading platform rankings

Mar 19, 2025 pm 04:51 PM

Top 10 virtual currency trading platform app rankings: 1. OKX, 2. Binance, 3. Gate.io, 4. Kraken, 5. Huobi, 6. Coinbase, 7. KuCoin, 8. Crypto.com, 9. Bitfinex, 10. Gemini. Security, liquidity, handling fees, currency selection, user interface and customer support should be considered when choosing a platform.

Detailed tutorial on how to register for binance (2025 beginner's guide)

Mar 18, 2025 pm 01:57 PM

Detailed tutorial on how to register for binance (2025 beginner's guide)

Mar 18, 2025 pm 01:57 PM

This article provides a complete guide to Binance registration and security settings, covering pre-registration preparations (including equipment, email, mobile phone number and identity document preparation), and introduces two registration methods on the official website and APP, as well as different levels of identity verification (KYC) processes. In addition, the article also focuses on key security steps such as setting up a fund password, enabling two-factor verification (2FA, including Google Authenticator and SMS Verification), and setting up anti-phishing codes, helping users to register and use the Binance Binance platform for cryptocurrency transactions safely and conveniently. Please be sure to understand relevant laws and regulations and market risks before trading and invest with caution.

A summary of the top ten virtual currency trading platforms apps, ranking of the top ten virtual currency trading platforms 2025

Mar 19, 2025 pm 05:15 PM

A summary of the top ten virtual currency trading platforms apps, ranking of the top ten virtual currency trading platforms 2025

Mar 19, 2025 pm 05:15 PM

Top 10 virtual currency trading platform apps recommended: 1. OKX, 2. Binance, 3. Gate.io, 4. Kraken, 5. Huobi, 6. Coinbase, 7. KuCoin, 8. Crypto.com, 9. Bitfinex, 10. Gemini. Security, liquidity, handling fees, currency selection, user interface and customer support should be considered when choosing a platform.