Technology peripherals

Technology peripherals

AI

AI

Research: The Internet is full of low-quality machine-translated content, and large language model training needs to be wary of data traps

Research: The Internet is full of low-quality machine-translated content, and large language model training needs to be wary of data traps

Research: The Internet is full of low-quality machine-translated content, and large language model training needs to be wary of data traps

Feb 04, 2024 pm 02:42 PM

Researchers at the Amazon Cloud Computing Artificial Intelligence Laboratory recently discovered that there is a large amount of content generated by machine translation on the Internet, and the quality of these translations across multiple languages is generally poor. Low. The research team emphasized the importance of data quality and provenance when training large language models. This finding highlights the need to pay more attention to data quality and source selection when building high-quality language models.

The study also found that machine-generated content is prevalent in translations from languages with fewer resources and makes up a large portion of web content.

This site noticed that the research team developed a huge resource called MWccMatrix to better understand the characteristics of machine translation content. The resource contains 6.4 billion unique sentences, covering 90 languages, and provides combinations of sentences that translate to each other, known as translation tuples.

This study found that a large amount of online content is translated into multiple languages, often through machine translation. This phenomenon is prevalent in translations from languages with fewer resources and accounts for a large portion of web content in these languages.

The researchers also noted a selectivity bias in content that is translated into multiple languages for purposes such as advertising revenue.

Based on my research, I came to the following conclusion: "Machine translation technology has made significant progress in the past decade, but it still cannot reach human quality levels. Over the past many years, people have used what was available at the time Machine translation systems add content to the web, so much of the machine-translated content on the web is likely to be of relatively low quality and fails to meet modern standards. This may lead to more 'hallucinations' in the LLM model, while selection bias suggests that even if not Considering machine translation errors, data quality may also be lower. For the training of LLM, data quality is crucial, and high-quality corpora, such as books and Wikipedia articles, usually require multiple upsampling."

The above is the detailed content of Research: The Internet is full of low-quality machine-translated content, and large language model training needs to be wary of data traps. For more information, please follow other related articles on the PHP Chinese website!

Hot Article

Hot tools Tags

Hot Article

Hot Article Tags

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Step-by-step guide to using Groq Llama 3 70B locally

Jun 10, 2024 am 09:16 AM

Step-by-step guide to using Groq Llama 3 70B locally

Jun 10, 2024 am 09:16 AM

Step-by-step guide to using Groq Llama 3 70B locally

Seven Cool GenAI & LLM Technical Interview Questions

Jun 07, 2024 am 10:06 AM

Seven Cool GenAI & LLM Technical Interview Questions

Jun 07, 2024 am 10:06 AM

Seven Cool GenAI & LLM Technical Interview Questions

Large models are also very powerful in time series prediction! The Chinese team activates new capabilities of LLM and achieves SOTA beyond traditional models

Apr 11, 2024 am 09:43 AM

Large models are also very powerful in time series prediction! The Chinese team activates new capabilities of LLM and achieves SOTA beyond traditional models

Apr 11, 2024 am 09:43 AM

Large models are also very powerful in time series prediction! The Chinese team activates new capabilities of LLM and achieves SOTA beyond traditional models

Deploy large language models locally in OpenHarmony

Jun 07, 2024 am 10:02 AM

Deploy large language models locally in OpenHarmony

Jun 07, 2024 am 10:02 AM

Deploy large language models locally in OpenHarmony

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Aug 08, 2024 am 07:02 AM

Hongmeng Smart Travel S9 and full-scenario new product launch conference, a number of blockbuster new products were released together

Stimulate the spatial reasoning ability of large language models: thinking visualization tips

Apr 11, 2024 pm 03:10 PM

Stimulate the spatial reasoning ability of large language models: thinking visualization tips

Apr 11, 2024 pm 03:10 PM

Stimulate the spatial reasoning ability of large language models: thinking visualization tips

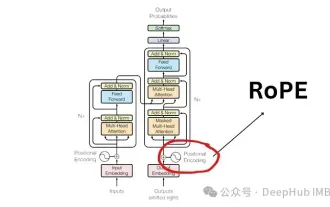

Detailed explanation of rotational position encoding RoPE commonly used in large language models: why is it better than absolute or relative position encoding?

Apr 01, 2024 pm 08:19 PM

Detailed explanation of rotational position encoding RoPE commonly used in large language models: why is it better than absolute or relative position encoding?

Apr 01, 2024 pm 08:19 PM

Detailed explanation of rotational position encoding RoPE commonly used in large language models: why is it better than absolute or relative position encoding?

Summarizing 374 related works, Tao Dacheng's team, together with the University of Hong Kong and UMD, released the latest review of LLM knowledge distillation

Mar 18, 2024 pm 07:49 PM

Summarizing 374 related works, Tao Dacheng's team, together with the University of Hong Kong and UMD, released the latest review of LLM knowledge distillation

Mar 18, 2024 pm 07:49 PM

Summarizing 374 related works, Tao Dacheng's team, together with the University of Hong Kong and UMD, released the latest review of LLM knowledge distillation