Unleash the power of Kubernetes clusters

Unleash the power of Kubernetes clusters: the backbone of modern applications

Explore what Kubernetes clusters can do and learn how Atmosly extends their orchestration capabilities to increase agility, scalability, and efficiency.

The rise of microservices and scalable applications has fundamentally changed how we build, deploy, and manage software, and it has made containers a practical necessity. Developers and other software teams need tooling, and a culture around it, to manage and orchestrate containerized applications. Kubernetes, an open source container orchestration platform, has become the backbone of container-based deployments while offering a cloud-agnostic approach. What really makes Kubernetes a game-changer is its ability to create powerful clusters. This article looks at those clusters and at how Atmosly takes their creation and management to the next level.

Whether you are an experienced technical founder, a hands-on developer, or a vigilant product manager, this article is for you. It covers the power of Kubernetes clusters and how they underpin modern applications, and then shows how platform engineering portals like Atmosly give Kubernetes a platform on which to do its work.

Understanding Kubernetes

At its core, Kubernetes (often abbreviated as K8s) is an open source container orchestration platform designed to simplify the deployment and management of containerized applications. Originally developed by a team at Google and later donated to the Cloud Native Computing Foundation (CNCF), Kubernetes has quickly become one of the most popular options for container management. The name “Kubernetes” comes from the Greek word for “helmsman” or “pilot,” aptly symbolizing its role in guiding the ship of modern applications through the turbulent waters of the digital age.

Kubernetes automates container operations and, through the Container Runtime Interface (CRI), works with a range of container runtimes. It supports both Linux and Windows containers and eliminates many of the manual processes involved in deploying and scaling containerized applications. As a versatile orchestrator, it enables fast, consistent, and automated deployment in diverse and hybrid environments. Kubernetes is a key player in modern software development, facilitating DevOps, CI/CD, and efficient resource utilization across multiple platforms.

Why do you need Kubernetes?

Accelerate deployment and enhance scalability

Kubernetes significantly speeds up the deployment process in containerized environments, which is critical for modern software delivery. Not only does it reduce time to market, it also supports dynamic scaling to handle sudden spikes in demand. Its ability to load-balance and scale applications in response to incoming traffic is critical to maintaining performance and availability.
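To make the scaling behaviour concrete, here is a minimal sketch of the ratio that the Horizontal Pod Autoscaler documentation describes: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name and the example numbers are illustrative assumptions. The real controller also applies minimum and maximum replica bounds and stabilization windows, which this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the documented HPA scaling ratio."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged,
    # which avoids flapping on small metric fluctuations.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90.0, 60.0))
    # 4 replicas averaging 20% CPU against a 60% target: scale in.
    print(desired_replicas(4, 20.0, 60.0))
```

Rounding up rather than to the nearest integer errs on the side of spare capacity, which is usually the safer choice during a traffic spike.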

Advanced workload portability across different environments

Kubernetes plays a central role in hybrid and multi-cloud strategies. It allows workloads to be migrated and managed across various cloud providers (AWS, GCP, Azure) and on-premises environments. This adaptability is critical in an era when enterprises seek to avoid vendor lock-in and need the flexibility to shift workloads in response to operational, regulatory, or financial factors.

Deep integration with DevOps and enhanced CI/CD pipelines

In addition to being aligned with DevOps, Kubernetes actively facilitates the creation of more complex CI/CD pipelines. It can be integrated with various DevOps tools to automatically deploy, scale, and manage containerized applications. It also provides the building blocks for blue-green deployments, canary releases, and rollback mechanisms, which are critical to maintaining high availability and rapid iteration.
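One common way to run a canary release on plain Kubernetes is to run a stable and a canary Deployment side by side behind a single Service, so that traffic is split roughly in proportion to the number of Pods on each track. The sketch below computes that replica split and builds the manifests as Python dictionaries, which can be dumped to JSON and applied with `kubectl apply -f`. The names `web`, `web-stable`, `web-canary`, the `track` label values, and the image tags are assumptions made for illustration.

```python
import json
import math
from typing import Tuple


def canary_split(total_replicas: int, canary_percent: float) -> Tuple[int, int]:
    """Return (stable, canary) replica counts for a replica-weighted canary."""
    if total_replicas < 2:
        raise ValueError("need at least 2 replicas to run both tracks")
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 0 and 100, exclusive")
    canary = max(1, math.floor(total_replicas * canary_percent / 100))
    canary = min(canary, total_replicas - 1)  # always keep one stable Pod
    return total_replicas - canary, canary


def deployment(name: str, image: str, track: str, replicas: int) -> dict:
    """Build an apps/v1 Deployment whose Pods carry app and track labels."""
    labels = {"app": "web", "track": track}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": "web", "image": image}]},
            },
        },
    }


def service() -> dict:
    """Service that selects on app only, so it spans both tracks."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {"selector": {"app": "web"},
                 "ports": [{"port": 80, "targetPort": 8080}]},
    }


if __name__ == "__main__":
    stable, canary = canary_split(total_replicas=10, canary_percent=10)
    manifests = [
        deployment("web-stable", "example/web:1.0", "stable", stable),
        deployment("web-canary", "example/web:1.1", "canary", canary),
        service(),
    ]
    print(json.dumps(manifests, indent=2))
```

Rolling back in this scheme amounts to scaling the canary Deployment to zero or deleting it. Finer-grained traffic weights than replica counts allow generally require an ingress controller or service mesh that supports weighted routing.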

Resource Optimization and Cost Effectiveness

Kubernetes optimizes resource usage through efficient container orchestration. Containers share the host operating system kernel and use system resources efficiently, which minimizes waste. This optimization can lead to significant cost savings, especially in cloud environments where resource usage directly affects costs.
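Resource efficiency in Kubernetes starts with the CPU and memory requests declared on each container, because the scheduler places Pods according to requests rather than observed usage. The sketch below parses a subset of the Kubernetes quantity notation (millicores such as `500m`, and the binary memory suffixes `Ki`, `Mi`, `Gi`) and checks whether a set of Pods fits on a node. The dictionary layout and function names are assumptions for illustration, and the parser deliberately ignores the other suffixes Kubernetes accepts.

```python
from typing import Dict, List

_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "256Mi" or "1Gi" to bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


def total_requests(pods: List[Dict[str, str]]) -> Dict[str, int]:
    """Sum the CPU and memory requests of a list of Pods."""
    return {
        "cpu_m": sum(cpu_millicores(p["cpu"]) for p in pods),
        "memory": sum(memory_bytes(p["memory"]) for p in pods),
    }


def fits(node: Dict[str, str], pods: List[Dict[str, str]]) -> bool:
    """True if the summed requests stay within the node's allocatable resources."""
    used = total_requests(pods)
    return (used["cpu_m"] <= cpu_millicores(node["cpu"])
            and used["memory"] <= memory_bytes(node["memory"]))


if __name__ == "__main__":
    node = {"cpu": "2", "memory": "2Gi"}
    pods = [{"cpu": "500m", "memory": "256Mi"}, {"cpu": "1", "memory": "1Gi"}]
    print(total_requests(pods), fits(node, pods))
```

Requests that are set far above real usage leave paid-for capacity idle, so comparing the two is often the first step in reducing a cluster's cloud bill.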

Addressing security and observability issues

Kubernetes not only optimizes resource usage, but also provides powerful security features such as secrets management and network policies. Additionally, observability tools from its ecosystem, such as Prometheus and Grafana, provide insights into application performance and facilitate proactive monitoring, which is critical for maintaining system health and security.
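As a rough illustration of those two security features, the sketch below builds a Secret and a default-deny ingress NetworkPolicy as Python dictionaries that can be serialized to JSON for `kubectl apply -f`. The namespace `shop`, the secret name, and the key and value are placeholders invented for the example.

```python
import base64
import json
from typing import Dict


def secret_manifest(name: str, namespace: str, values: Dict[str, str]) -> dict:
    """Build an Opaque Secret; values under `data` must be base64-encoded."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that selects every Pod in the namespace and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all Pods; listing Ingress with no
        # ingress rules means no inbound traffic is allowed.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


if __name__ == "__main__":
    manifests = [
        secret_manifest("db-credentials", "shop", {"password": "s3cr3t"}),
        default_deny_ingress("shop"),
    ]
    print(json.dumps(manifests, indent=2))
```

Two caveats apply. Base64 is an encoding, not encryption, so Secrets still need access controls and, ideally, encryption at rest. Network policies are only enforced when the cluster's network plug-in supports them.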

Adapt to the evolving technology environment

Kubernetes is constantly evolving, with strong community support and regular updates. This keeps it relevant in a rapidly changing technology environment and lets it support emerging approaches such as serverless architecture and edge computing.

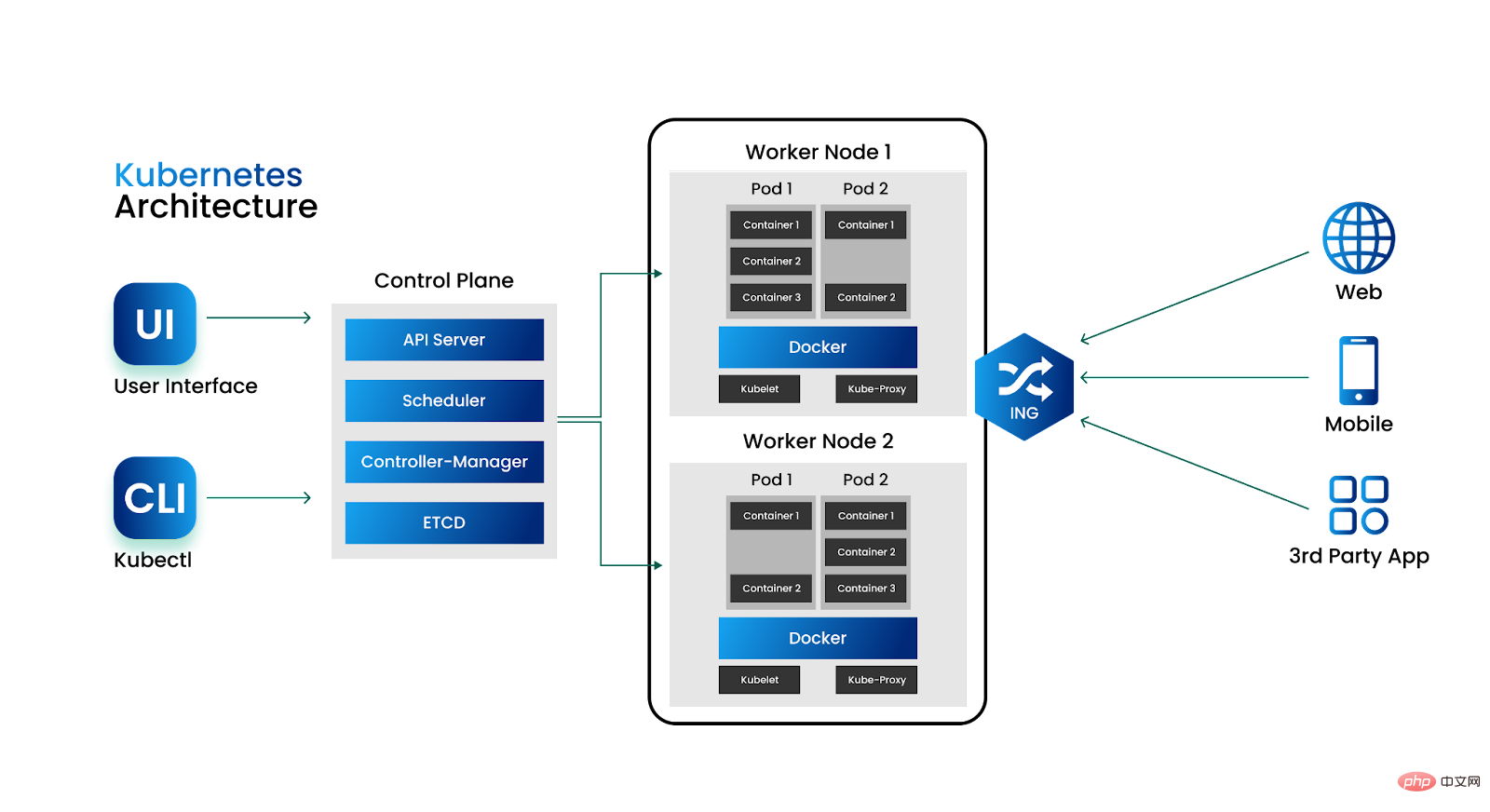

Kubernetes Cluster Anatomy

Kubernetes operates via a cluster-based architecture, which is critical for managing containerized workloads at scale. A cluster is a set of nodes (physical or virtual machines) that run containerized applications, and it typically involves the following components:

- Master node: The master node acts as the control plane of the cluster and coordinates all activities. It manages the state of the cluster, schedules applications, and maintains overall cluster health.

- Worker node: A worker node (often simply called a node) is where the actual workload runs. Worker nodes host the containers that execute applications and are responsible for ensuring that those containers are running properly and have the necessary resources.

- Pod: A Pod is the smallest deployable unit in Kubernetes. It encapsulates one or more containers that share a common network and storage space. Pods enable efficient colocation of application components that need to work together.

- Replication controller: A replication controller ensures that a specified number of Pod replicas are running at any given time. If a Pod fails, the replication controller replaces it to maintain the desired level of application availability. In current Kubernetes versions this role is usually filled by ReplicaSets, which are in turn managed by Deployments.

- Service: A Kubernetes Service provides network access to a group of Pods. It gives applications a stable endpoint to communicate with, regardless of changes to the underlying Pod instances.

- Ingress controller: An ingress controller manages external access to services within the cluster. It acts as an entry point, routing traffic to the appropriate services based on defined rules.

- ConfigMaps and Secrets: ConfigMaps and Secrets are Kubernetes resources used to configure applications. ConfigMaps hold non-sensitive configuration, while Secrets hold sensitive information such as API keys and passwords.

- Storage volumes: Kubernetes provides a variety of options for managing storage volumes, allowing applications to persist data or access external storage resources.
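Several of the components above rely on the same two ideas: label selectors decide which Pods an object owns or targets, and controllers repeatedly compare the desired state with what is actually running. The toy model below illustrates both in plain Python. It is a simplified sketch and not how the Kubernetes controllers are implemented; the `Pod` class and all names in it are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Pod:
    name: str
    labels: Dict[str, str]
    healthy: bool = True


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every selector key must match the Pod's label."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(desired: int, selector: Dict[str, str], pods: List[Pod]) -> dict:
    """Compare desired and actual replicas and report the corrective actions."""
    owned = [p for p in pods if matches(selector, p.labels)]
    healthy = [p for p in owned if p.healthy]
    failed = [p.name for p in owned if not p.healthy]
    surplus = [p.name for p in healthy[desired:]]
    return {
        "delete": failed + surplus,
        "create": max(desired - len(healthy), 0),
    }


def endpoints(selector: Dict[str, str], pods: List[Pod]) -> List[str]:
    """Pods a Service with this selector would route traffic to."""
    return [p.name for p in pods if p.healthy and matches(selector, p.labels)]


if __name__ == "__main__":
    pods = [
        Pod("web-1", {"app": "web"}),
        Pod("web-2", {"app": "web"}, healthy=False),
        Pod("db-1", {"app": "db"}),
    ]
    print(reconcile(3, {"app": "web"}, pods))
    print(endpoints({"app": "web"}, pods))
```

Because the Service and the replica controller both select by label, a replacement Pod starts receiving traffic once it is healthy, without any change to the Service itself.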

The potential of Kubernetes clusters with Atmosly

Kubernetes clusters provide the foundation for modern application deployments, but their true power is unleashed when they are paired with cloud-native services and complementary tools that work together to run those deployments. This is where Atmosly, a cloud-native platform engineering tool, comes in: it ensures that Kubernetes clusters are not only easy to deploy but also make efficient use of the underlying cloud infrastructure, with an emphasis on reliability, scalability, and optimal resource utilization.

Here’s why Atmosly plays a key role in improving the Kubernetes experience:

Create multi-cloud clusters with automated network setup

Atmosly simplifies the creation of Kubernetes clusters across multiple cloud environments, providing centralized management and ease of use. It automates network setup and configuration, ensuring each cluster is customized for its specific use, whether for production or non-production use. By following industry best practices, Atmosly guarantees optimal performance, security, and reliability for every Kubernetes cluster, simplifying the deployment process and reducing the complexity typically associated with every cloud environment.

Customizable add-ons for production-ready clusters

Atmosly enhances Kubernetes clusters by integrating a variety of add-ons to make them production-ready and optimized for specific operational needs. This includes advanced monitoring tools for real-time performance insights, powerful security enhancements to protect your data, and efficient network plug-ins for seamless connectivity. The integration of these add-ons simplifies and accelerates the process of preparing clusters for production, ensuring they meet industry standards for security and performance. Additionally, Atmosly's cluster-tailored approach emphasizes operational efficiency, scalability, and reliability, enabling organizations to quickly adapt to changing demands and maintain high levels of service availability.

Real-time Overview and Monitoring in the IaC Framework

Atmosly leverages Terraform for Infrastructure as Code (IaC) to automate and manage infrastructure configuration directly within customers’ cloud accounts, ensuring data sovereignty. The system operates with only the basic metadata required to manage a Kubernetes cluster, consistent with security best practices. Throughout the cluster creation process, Atmosly provides transparency through access to IaC logs, allowing customers to track the provisioning of resources and gain detailed visibility into settings. In addition, Atmosly adheres to GitOps principles so that all infrastructure changes are versioned and managed through Git repositories, which improves the traceability and consistency of those changes.
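The core idea behind GitOps is that the configuration declared in Git is the source of truth, and any difference between it and the live infrastructure is drift to be reported or corrected. The sketch below shows that comparison on plain nested dictionaries. It is a generic illustration, not how Terraform or Atmosly compute their plans, and the keys (`node_pool`, `size`, `version`, `debug`) are hypothetical.

```python
from typing import Any, Dict, List


def find_drift(desired: Dict[str, Any], live: Dict[str, Any],
               path: str = "") -> List[str]:
    """List the settings where live state differs from the declared state."""
    drift: List[str] = []
    for key in sorted(set(desired) | set(live)):
        here = f"{path}.{key}" if path else key
        if key not in live:
            drift.append(f"{here}: missing from live state")
        elif key not in desired:
            drift.append(f"{here}: not declared in Git")
        elif isinstance(desired[key], dict) and isinstance(live[key], dict):
            # Recurse into nested settings and keep the dotted path.
            drift.extend(find_drift(desired[key], live[key], here))
        elif desired[key] != live[key]:
            drift.append(f"{here}: declared {desired[key]!r}, live {live[key]!r}")
    return drift


if __name__ == "__main__":
    declared = {"version": "1.29",
                "node_pool": {"size": 3, "machine_type": "medium"}}
    running = {"version": "1.29",
               "node_pool": {"size": 5, "machine_type": "medium"},
               "debug": True}
    for line in find_drift(declared, running):
        print(line)
```

An empty result means the live environment matches what was reviewed and merged, which is the property that makes Git history usable as an audit trail.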

Simplified and Efficient Deployment

Atmosly enhances the Kubernetes deployment process not only by simplifying application deployment, but also by efficiently provisioning the cloud-native resources required by the application. Atmosly integrates advanced deployment tools to provide a more efficient and consistent deployment experience, significantly reducing the possibility of errors. In addition to managing application updates, Atmosly also handles the deployment and management of stateful data services such as MongoDB, MySQL, and PostgreSQL. This comprehensive approach ensures that all necessary components, from cloud resources to database services, are integrated and properly configured for each application, enabling a more robust and reliable deployment process in Kubernetes environments.

Enhance security through best practices and rule configuration

Atmosly improves the security of your Kubernetes cluster by integrating a set of security best practices and configurable rules. This proactive approach helps clusters adhere to high standards of security and compliance, protecting them from a wide range of threats and vulnerabilities. By default, Atmosly deploys clusters using these established best practices, providing a solid foundation for security. To further enhance protection, Atmosly provides tools to perform comprehensive cluster scans. These scans identify potential security issues so that they can be resolved, thereby maintaining the integrity and security of the cluster. Additionally, Atmosly integrates Open Policy Agent (OPA) for advanced policy enforcement. The OPA integration allows security and operational policies to be customized and enforced, so that cluster creation and management adhere to specific security requirements and best practices. This layered security strategy combines best practices, thorough scanning, and policy enforcement, positioning Atmosly as a powerful solution for creating and maintaining secure Kubernetes environments.
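OPA policies are written in Rego, but the shape of an admission-style rule can be sketched in Python to show what such a policy typically checks. The two rules below (no `latest` or missing image tag, no privileged containers) are common examples chosen for illustration. They are assumptions, not Atmosly's actual rule set, and the tag parsing is deliberately simple and does not treat image digests specially.

```python
from typing import List


def image_tag(image: str) -> str:
    """Return the tag of an image reference, or "" if none is given."""
    last_segment = image.rsplit("/", 1)[-1]
    # A colon before the last slash is a registry port, not a tag.
    if ":" not in last_segment:
        return ""
    return image.rsplit(":", 1)[1]


def violations(pod_manifest: dict) -> List[str]:
    """Return human-readable policy violations for a Pod manifest."""
    problems: List[str] = []
    for container in pod_manifest.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        if image_tag(container.get("image", "")) in ("", "latest"):
            problems.append(f"{name}: image must pin a tag other than 'latest'")
        if container.get("securityContext", {}).get("privileged", False):
            problems.append(f"{name}: privileged containers are not allowed")
    return problems


if __name__ == "__main__":
    pod = {
        "kind": "Pod",
        "spec": {"containers": [
            {"name": "web", "image": "nginx:latest"},
            {"name": "agent", "image": "example/agent:1.2",
             "securityContext": {"privileged": True}},
        ]},
    }
    for problem in violations(pod):
        print(problem)
```

In a real cluster a policy engine evaluates rules like these at admission time and rejects the request, so a non-compliant workload never starts.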

Environment-specific configurations with clones and staging environments

Atmosly enables users to create custom configurations for different environments in a Kubernetes cluster, a feature that significantly increases the flexibility and efficiency of testing and development workflows. It facilitates cloning of environments, allowing developers to quickly and accurately replicate existing configurations. This cloning feature is particularly useful for testing changes in a controlled environment before deploying them in production, ensuring stability and minimizing disruption.

Additionally, Atmosly supports staging environments, which are temporary and can be launched dynamically for short-term testing or development purposes. These staging environments are ideal for continuous integration and continuous deployment (CI/CD) workflows because they allow changes to be quickly tested and verified in an isolated setting without impacting the stable, long-term environment. Using staging environments also aids in resource optimization as they can be created on demand and decommissioned after use, reducing unnecessary resource consumption and costs.
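On plain Kubernetes, a common way to approximate cloned and short-lived environments is to copy a set of manifests into a fresh namespace and delete that namespace when testing is done. The sketch below shows the copying step on manifest dictionaries. It is an illustrative assumption about one possible approach, not a description of how Atmosly implements cloning, and the environment name `pr-123` is a placeholder.

```python
import copy
from typing import List, Optional


def namespace_manifest(env_name: str) -> dict:
    """Namespace that will contain the cloned environment."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": env_name, "labels": {"environment": env_name}},
    }


def clone_for_environment(manifests: List[dict], env_name: str,
                          replicas: Optional[int] = None) -> List[dict]:
    """Copy manifests into a new namespace, optionally scaling Deployments."""
    cloned = []
    for manifest in manifests:
        item = copy.deepcopy(manifest)  # never mutate the source environment
        metadata = item.setdefault("metadata", {})
        metadata["namespace"] = env_name
        metadata.setdefault("labels", {})["environment"] = env_name
        if replicas is not None and item.get("kind") == "Deployment":
            item.setdefault("spec", {})["replicas"] = replicas
        cloned.append(item)
    return [namespace_manifest(env_name)] + cloned


if __name__ == "__main__":
    production = [{
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web", "namespace": "production"},
        "spec": {"replicas": 6},
    }]
    for item in clone_for_environment(production, "pr-123", replicas=1):
        print(item["kind"], item["metadata"])
```

Running the clone with fewer replicas keeps temporary environments cheap, and deleting the namespace afterwards removes the namespaced objects created inside it.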

Atmosly’s advantage

The advantage of Atmosly is its comprehensive integration of features and tools, all unified under one platform to significantly enhance the Kubernetes experience. Atmosly not only enhances native functionality, but also prioritizes seamless integration, bringing together key aspects of Kubernetes management, from deployment and scaling to security and monitoring, into a cohesive ecosystem. This integrated approach simplifies the complexities typically associated with Kubernetes cluster management, providing a streamlined and user-friendly experience.

By facilitating this level of integration, Atmosly increases the efficiency of managing Kubernetes environments, allowing organizations to focus more on innovation and less on operational challenges. It aligns with the goals of digital transformation and helps enterprises adapt quickly to a changing technology environment and rapidly evolving market needs. Atmosly's platform is more than just a tool; it is a strategic partner in the digital transformation journey, enabling teams to leverage the full potential of Kubernetes during development and operations.

The future of software development with Atmosly and Kubernetes

In conclusion, powerful Kubernetes clusters have become the backbone of modern applications. Their ability to automate container operations, facilitate workload portability, and enhance DevOps practices makes them indispensable in today's software development environments. When paired with Atmosly, the synergy between Kubernetes and this cloud-native platform can unlock the full potential of applications.

Kubernetes and Atmosly provide a powerful combination for software developers, founders, and organizations looking for agility, scalability, and efficiency. By adopting these technologies, you can easily navigate the complexities of modern software development, ensuring your applications remain resilient and efficient, no matter the challenges they may face. The digital age demands powerful solutions, and Atmosly-enhanced Kubernetes clusters give you the solid foundation you need to thrive in a fast-paced, ever-evolving technology ecosystem.
