Google officially announced the release of TensorFlow-GNN 1.0! Dynamic and interactive sampling to build graph neural networks at scale

In 2005, the landmark work "The Graph Neural Network Model" brought graph neural networks to a wide audience.

Before that, graph data was typically processed by converting the graph into a set of "vector representations" during data preprocessing, which loses information about the graph's structure.

The emergence of GNNs, which learn from the graph directly, overcame this information loss. Over the past 20 years, successive generations of models have continued to evolve, driving progress in machine learning.

Today, Google officially announced the release of TensorFlow GNN 1.0 (TF-GNN), a production-tested library for building GNNs at scale.

It supports both modeling and training in TensorFlow, as well as extracting input graphs from large data stores.

TF-GNN is built from the ground up for heterogeneous graphs, where types of objects and relationships are represented by different sets of nodes and edges.

Real-world objects and their relationships come in distinct types, and TF-GNN's focus on heterogeneous graphs makes representing them natural.

Google scientist Anton Tsitsulin said that complex heterogeneous modeling is back!

## TF-GNN 1.0 released

Objects and the relationships between them are everywhere in our world.

Relationships can be as important to understanding an object as the object's own properties viewed in isolation: think of transportation networks, production networks, knowledge graphs, or social networks.

Discrete mathematics and computer science have long formalized such networks as graphs, consisting of nodes connected by edges in arbitrary, irregular ways.

However, most machine learning algorithms only allow regular and uniform relationships between input objects, such as grids of pixels, sequences of words, or no relationship at all.

Graph neural networks, or GNNs for short, are a powerful technique that can exploit both the connectivity of a graph (as earlier algorithms such as DeepWalk and Node2Vec did) and the input features on its various nodes and edges.

GNNs can make predictions for a graph as a whole (does this molecule react in a certain way?), for individual nodes (what is the topic of this document, given its citations?), or for potential edges (is this product likely to be purchased together with that product?).
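To make the three prediction levels concrete, the minimal stdlib-only sketch below assumes a GNN has already produced a state vector for every node (the numbers are made up) and applies three hypothetical readouts: mean pooling over all nodes for a whole-graph score, a node's own state for a node-level score, and a dot product of two node states for a potential-edge score. The function names are illustrative and not part of TF-GNN.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a: list, b: list) -> float:
    return sum(x * y for x, y in zip(a, b))


# Hypothetical final node states, as some GNN might produce them.
states = {
    "p0": [0.5, -1.0, 2.0],
    "p1": [1.0, 0.0, -0.5],
    "p2": [0.0, 2.0, 1.0],
}
# Hypothetical weights of a linear output layer.
w = [0.2, -0.4, 0.1]


def graph_score(states: dict, w: list) -> float:
    """Whole-graph prediction: mean-pool all node states, then score."""
    n = len(states)
    pooled = [sum(v[i] for v in states.values()) / n for i in range(len(w))]
    return sigmoid(dot(pooled, w))


def node_score(states: dict, node: str, w: list) -> float:
    """Node-level prediction: score a single node's own state."""
    return sigmoid(dot(states[node], w))


def edge_score(states: dict, u: str, v: str) -> float:
    """Edge-level prediction: likelihood of a (potential) edge between u and v."""
    return sigmoid(dot(states[u], states[v]))


print(graph_score(states, w), node_score(states, "p0", w), edge_score(states, "p0", "p1"))
```

All three readouts consume the same node states; only the pooling and scoring step on top differs, which is why one GNN can serve graph-, node- and edge-level tasks.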

Besides making predictions about graphs, GNNs are a powerful tool for bridging the gap to more typical neural network use cases.

They encode the discrete relational information of the graph in a continuous manner, allowing it to be naturally incorporated into another deep learning system.

In TensorFlow, such graphs are represented by objects of type tfgnn.GraphTensor.

This is a composite tensor type (a collection of tensors in one Python class) accepted as a first-class citizen in tf.data.Dataset, tf.function, and so on.

It stores both the graph structure and the features of nodes, edges, and the input graph as a whole.

Trainable transformations of GraphTensors can be defined as Layer objects in the high-level Keras API, or directly using the tfgnn.GraphTensor primitive.
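The real tfgnn.GraphTensor is a TensorFlow composite tensor, built with constructors such as tfgnn.GraphTensor.from_pieces, tfgnn.NodeSet.from_fields and tfgnn.EdgeSet.from_fields. The stdlib-only sketch below merely mimics its data model to make it concrete: named node sets and edge sets with their own features, edges stored as source and target indices into named node sets, and graph-level "context" features. The class and field names are hypothetical, chosen for this illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class NodeSet:
    size: int
    # Feature name -> one value per node.
    features: dict = field(default_factory=dict)


@dataclass
class EdgeSet:
    source_set: str  # node set the edges start from
    target_set: str  # node set the edges point to
    source: list     # index of each edge's source node
    target: list     # index of each edge's target node
    # Feature name -> one value per edge.
    features: dict = field(default_factory=dict)

    @property
    def size(self) -> int:
        return len(self.source)


@dataclass
class ToyGraph:
    node_sets: dict
    edge_sets: dict
    # Features of the graph as a whole ("context").
    context: dict = field(default_factory=dict)

    def validate(self) -> None:
        """Raise ValueError if features or edge endpoints are inconsistent."""
        for name, ns in self.node_sets.items():
            for fname, values in ns.features.items():
                if len(values) != ns.size:
                    raise ValueError(f"{name}.{fname}: expected {ns.size} values")
        for name, es in self.edge_sets.items():
            if len(es.source) != len(es.target):
                raise ValueError(f"{name}: source/target length mismatch")
            n_src = self.node_sets[es.source_set].size
            n_tgt = self.node_sets[es.target_set].size
            if not all(0 <= i < n_src for i in es.source):
                raise ValueError(f"{name}: source index out of range")
            if not all(0 <= i < n_tgt for i in es.target):
                raise ValueError(f"{name}: target index out of range")
            for fname, values in es.features.items():
                if len(values) != es.size:
                    raise ValueError(f"{name}.{fname}: expected {es.size} values")


# A tiny heterogeneous graph: papers, authors, citations and authorship.
graph = ToyGraph(
    node_sets={
        "papers": NodeSet(size=3, features={"year": [2019, 2020, 2021]}),
        "authors": NodeSet(size=2),
    },
    edge_sets={
        "cites": EdgeSet("papers", "papers", source=[1, 2, 2], target=[0, 0, 1]),
        "writes": EdgeSet("authors", "papers", source=[0, 0, 1], target=[0, 1, 2],
                          features={"position": [1, 1, 2]}),
    },
    context={"num_labeled": 1},
)
graph.validate()
```

Heterogeneity shows up simply as several node sets and edge sets, with each edge set naming which node sets it connects.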

## GNN: Predicting objects in context

Next, let's look at TF-GNN more closely through one of its typical applications:

Predicting a property of nodes of a certain type in a graph defined by cross-referencing tables of a huge database.

For example, in a citation database of computer science (CS) papers from arXiv, with one-to-many "cites" and many-to-one "cited by" relationships, one may want to predict the subject area of each paper.

Like most neural networks, a GNN is trained on a dataset of many labeled examples (on the order of millions), but each training step consists only of a much smaller batch of training examples (say, hundreds).

To scale to millions of examples, the GNN is trained on a stream of reasonably small subgraphs drawn from the underlying graph. Each subgraph contains enough of the original data to compute the GNN result for the labeled node at its center and to train the model.

This process, often called subgraph sampling, is extremely important for GNN training.
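The sketch below illustrates the idea under simple assumptions: the graph is an in-memory adjacency list, and starting from a labeled seed (root) node it samples at most a fixed number of neighbors per hop, for as many hops as there are entries in the hypothetical `fanouts` list. It is plain Python for illustration, not TF-GNN's sampler, which is configured through its own API.

```python
import random


def sample_subgraph(adj, seed, fanouts, rng):
    """Sample a rooted subgraph around `seed`.

    adj:     node -> list of neighbor nodes (e.g. papers cited by a paper)
    fanouts: max neighbors to sample per node at each hop, e.g. [2, 2]
    Returns (nodes, edges); each edge is (neighbor, node), i.e. it points
    toward the root, the direction in which messages will later flow.
    """
    nodes = {seed}
    edges = []
    frontier = [seed]
    for fanout in fanouts:
        next_frontier = []
        for node in frontier:
            neighbors = adj.get(node, [])
            k = min(fanout, len(neighbors))
            for nbr in rng.sample(neighbors, k):
                edges.append((nbr, node))
                if nbr not in nodes:
                    nodes.add(nbr)
                    next_frontier.append(nbr)
        frontier = next_frontier
    return nodes, edges


# Hypothetical citation graph: paper -> papers it cites.
citations = {
    "A": ["B", "C", "D"],
    "B": ["C", "E"],
    "C": ["F"],
    "D": ["E", "F", "G"],
}

rng = random.Random(0)
# Sampling on the fly: each request draws a fresh subgraph around the seed.
for epoch in range(2):
    nodes, edges = sample_subgraph(citations, "A", fanouts=[2, 2], rng=rng)
    print(epoch, sorted(nodes), edges)
```

Because every call draws new neighbors, the model can see different subgraphs of the same labeled node across epochs, in contrast to a batch job that materializes one static subgraph per example.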

Most existing tools perform sampling in batch mode, producing static subgraphs for training.

TF-GNN provides tools to improve on this by sampling dynamically and interactively.

The subgraph sampling process: extracting small, tractable subgraphs from a larger graph to create input examples for GNN training.

TF-GNN 1.0 introduces a flexible Python API for configuring dynamic or batch subgraph sampling at all relevant scales: interactively in a Colab notebook, for efficient sampling of a small dataset stored in the main memory of a single training host, or distributed with Apache Beam for huge datasets stored on a network file system (up to hundreds of millions of nodes and billions of edges).

On these sampled subgraphs, the GNN's task is to compute a hidden (or latent) state at the root node; the hidden state aggregates and encodes the relevant information of the root node's neighborhood.

One classical approach is message-passing neural networks (MPNNs).

In each round of message passing, nodes receive messages from their neighbors along incoming edges and update their own hidden state from them.

After n rounds, the hidden state of the root node reflects the aggregated information from all nodes within n edges (as shown in the figure below, n=2). The messages and the new hidden states are computed by hidden layers of the neural network.

In heterogeneous graphs, it often makes sense to use separately trained hidden layers for different types of nodes and edges.

The figure shows a simple message-passing neural network: at each step, node states are propagated from outer nodes to inner nodes, where they are pooled to compute new node states. Once the root node is reached, a final prediction can be made.
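Both points above, the n-hop reach of n rounds and the separate layers per type in heterogeneous graphs, can be seen in a minimal sketch. It assumes scalar states instead of vectors, sums incoming messages, and uses one fixed, made-up weight per edge set as a stand-in for separately trained layers. It shows the information flow only; it is not a real MPNN layer.

```python
def message_passing_round(states, edge_sets, weights, self_weight=1.0):
    """One round of heterogeneous message passing with scalar node states.

    states:    node -> current hidden state (a scalar here, a vector in practice)
    edge_sets: edge-set name -> list of (source, target) edges
    weights:   edge-set name -> weight applied to that edge type's messages,
               a stand-in for a separately trained layer per edge type
    """
    incoming = {node: 0.0 for node in states}
    for name, edges in edge_sets.items():
        for src, dst in edges:
            # Message from src to dst, transformed by its edge type's weight.
            incoming[dst] += weights[name] * states[src]
    # New state: keep the old state and add the pooled (summed) messages.
    return {node: self_weight * states[node] + incoming[node] for node in states}


def run_rounds(states, edge_sets, weights, n):
    """Apply n rounds of message passing."""
    for _ in range(n):
        states = message_passing_round(states, edge_sets, weights)
    return states


# Hypothetical rooted subgraph: c -> b -> a -> root along "cites" edges,
# plus an author x -> root along a "writes" edge.
edge_sets = {
    "cites": [("a", "root"), ("b", "a"), ("c", "b")],
    "writes": [("x", "root")],
}
weights = {"cites": 0.5, "writes": 2.0}

# A signal placed only on "c", which is 3 edges away from the root.
start = {"root": 0.0, "a": 0.0, "b": 0.0, "c": 1.0, "x": 0.0}
for n in (1, 2, 3):
    print(n, run_rounds(start, edge_sets, weights, n)["root"])
# The root stays at 0.0 after 1 and 2 rounds and becomes 0.125 after 3.
```

The signal on c only reaches the root in round 3, matching its distance of three edges, while a signal on x would arrive after a single round, scaled by the separate "writes" weight.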

The training setup is completed by placing an output layer on top of the GNN's hidden state for the labeled nodes, computing the loss (to measure the prediction error), and updating the model weights by backpropagation, as is usual in any neural network training.
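A minimal sketch of that setup, under simplifying assumptions: the GNN is treated as frozen, so its root-node hidden states are fixed, made-up vectors; the output layer is a single logistic unit; and its gradient is written out by hand rather than obtained by backpropagation through the whole network.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss_and_grad(w, b, states, labels):
    """Mean binary cross-entropy of a logistic output layer, plus gradients."""
    n = len(states)
    loss, grad_w, grad_b = 0.0, [0.0] * len(w), 0.0
    for h, y in zip(states, labels):
        p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
        loss -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
        # For sigmoid + cross-entropy, d(loss)/d(logit) is simply p - y.
        err = p - y
        grad_w = [g + err * hi for g, hi in zip(grad_w, h)]
        grad_b += err
    return loss / n, [g / n for g in grad_w], grad_b / n


# Hypothetical GNN hidden states of four labeled root nodes, and their labels.
root_states = [[1.0, 0.2], [0.9, -0.1], [-1.0, 0.3], [-0.8, -0.5]]
labels = [1, 1, 0, 0]

w, b, lr = [0.0, 0.0], 0.0, 0.5
history = []
for step in range(50):
    loss, gw, gb = bce_loss_and_grad(w, b, root_states, labels)
    history.append(loss)
    # Gradient-descent update of the output layer's parameters.
    w = [wi - lr * gi for wi, gi in zip(w, gw)]
    b -= lr * gb

print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

In a full model the same error signal would keep flowing backward into the GNN layers, updating them as well.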

Beyond supervised training, GNNs can also be trained in an unsupervised way, which lets us compute continuous representations (or embeddings) of the discrete graph structure of nodes and their features.

These representations are then commonly used in other ML systems.

In this way, the discrete relational information encoded by the graph can be incorporated into more typical neural network use cases. TF-GNN supports fine-grained specification of unsupervised targets on heterogeneous graphs.
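One well-known unsupervised objective is Deep Graph Infomax (DGI), which TF-GNN exposes as contrastive_losses.DeepGraphInfomaxTask in the Runner example later in this article. The stdlib-only sketch below is a simplified version: it assumes node embeddings have already been computed for the real graph and for a corrupted copy (for example, one with shuffled node features), summarizes the real graph as the sigmoid of its mean embedding, and uses a plain dot product as the discriminator where the original DGI learns a bilinear one.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def dgi_loss(real, corrupted):
    """Simplified Deep Graph Infomax loss with a dot-product discriminator.

    real:      embeddings of the nodes of the real graph
    corrupted: embeddings of nodes from a corrupted graph (e.g. shuffled features)
    """
    dim = len(real[0])
    # Readout: summarize the real graph as the sigmoid of its mean embedding.
    summary = [sigmoid(sum(h[i] for h in real) / len(real)) for i in range(dim)]
    # Binary cross-entropy: real nodes should score high against the summary,
    # corrupted nodes should score low.
    pos = [-math.log(sigmoid(dot(h, summary))) for h in real]
    neg = [-math.log(1.0 - sigmoid(dot(h, summary))) for h in corrupted]
    return (sum(pos) + sum(neg)) / (len(pos) + len(neg))


real = [[2.0, 1.0], [1.0, 2.0]]
corrupted = [[-2.0, -1.0], [-1.0, -2.0]]
print(dgi_loss(real, corrupted), dgi_loss(corrupted, real))
```

No labels are needed: minimizing this loss pushes the encoder toward embeddings that tell the real graph apart from its corrupted version, and swapping the two roles, as in the second call, gives a clearly higher loss.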

## Building GNN architectures

The TF-GNN library supports building and training GNNs at various levels of abstraction.

At the highest level, users can take any of the predefined models bundled with the library, which are expressed as Keras layers.

Besides a small collection of models from the research literature, TF-GNN comes with a highly configurable model template that provides a curated selection of modeling choices.

Google found that these choices provide strong baselines for many of its internal problems. The template implements the GNN layers; users only need to initialize the Keras layers:

```python
import tensorflow as tf
import tensorflow_gnn as tfgnn
from tensorflow_gnn.models import mt_albis


def model_fn(graph_tensor_spec: tfgnn.GraphTensorSpec):
    """Builds a GNN as a Keras model."""
    graph = inputs = tf.keras.Input(type_spec=graph_tensor_spec)

    # Encode input features. `set_initial_node_states` is a user-supplied
    # callback, omitted for brevity.
    graph = tfgnn.keras.layers.MapFeatures(
        node_sets_fn=set_initial_node_states)(graph)

    # For each round of message passing...
    for _ in range(2):
        # ... create and apply a Keras layer.
        graph = mt_albis.MtAlbisGraphUpdate(
            units=128, message_dim=64,
            attention_type="none", simple_conv_reduce_type="mean",
            normalization_type="layer", next_state_type="residual",
            state_dropout_rate=0.2, l2_regularization=1e-5,
        )(graph)

    return tf.keras.Model(inputs, graph)
```

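At the lowest level, users can write a GNN model from scratch in terms of primitives for passing data around the graph, such as broadcasting data from a node to all of its outgoing edges or pooling data into a node from all of its incoming edges.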
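The two primitives can be sketched on plain Python lists. TF-GNN's own versions, tfgnn.broadcast_node_to_edges and tfgnn.pool_edges_to_node, operate on a GraphTensor; the stand-ins below borrow those names for readability but have hypothetical, simplified signatures. Edges are stored as lists of source and target node indices, and the tag selects which endpoint to use.

```python
SOURCE, TARGET = "source", "target"


def broadcast_node_to_edges(node_values, edges, tag):
    """Give each edge the value of its node at endpoint `tag`."""
    return [node_values[i] for i in edges[tag]]


def pool_edges_to_node(edge_values, edges, tag, num_nodes, reduce_type="sum"):
    """Reduce edge values into the node at each edge's endpoint `tag`."""
    buckets = [[] for _ in range(num_nodes)]
    for value, i in zip(edge_values, edges[tag]):
        buckets[i].append(value)
    if reduce_type == "sum":
        return [sum(b, 0.0) for b in buckets]
    if reduce_type == "mean":
        # Nodes without incoming edges get 0.0.
        return [sum(b, 0.0) / len(b) if b else 0.0 for b in buckets]
    raise ValueError(f"unsupported reduce_type: {reduce_type}")


# Three papers; edges 1->0, 2->0 and 2->1, given as source/target indices.
edges = {SOURCE: [1, 2, 2], TARGET: [0, 0, 1]}
node_values = [10.0, 20.0, 30.0]

# A bare-bones message-passing step built from the two primitives:
messages = broadcast_node_to_edges(node_values, edges, SOURCE)     # [20.0, 30.0, 30.0]
pooled = pool_edges_to_node(messages, edges, TARGET, num_nodes=3)  # [50.0, 30.0, 0.0]
print(messages, pooled)
```

Broadcasting from the source and pooling at the target is exactly one round of "send along outgoing edges, collect along incoming edges"; a trainable layer would transform the messages in between.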

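TF-GNN's graph data model treats nodes, edges, and whole input graphs equally when it comes to features or hidden states.

Therefore, it can directly represent not only node-centric models like MPNN, but also more general forms of graph networks.

This can be done with Keras as a modeling framework on top of core TensorFlow, but it doesn't have to be.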
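Because edges and the graph as a whole can carry hidden states just like nodes, one round of a more general graph network can update all three. The sketch below follows the edge, then node, then context update order used in the graph-network literature, with scalar states and arbitrary fixed update rules chosen for illustration; it is not how TF-GNN implements such models.

```python
def mean(values):
    return sum(values) / len(values) if values else 0.0


def graph_network_round(nodes, edges, context):
    """One round of a general graph-network block with scalar states.

    nodes:   list of node states
    edges:   list of (source, target, edge_state) tuples
    context: graph-level state
    The update rules (0.5 * old state + inputs) are illustrative choices.
    """
    # 1. Edge update: each edge combines its own state, both endpoints and the context.
    new_edges = [(s, t, 0.5 * e + nodes[s] + nodes[t] + context) for s, t, e in edges]
    # 2. Node update: each node sums the new states of its incoming edges.
    incoming = [0.0] * len(nodes)
    for _, t, e in new_edges:
        incoming[t] += e
    new_nodes = [0.5 * v + inc + context for v, inc in zip(nodes, incoming)]
    # 3. Context update: pool over all edge states and node states.
    new_context = 0.5 * context + mean([e for _, _, e in new_edges]) + mean(new_nodes)
    return new_nodes, new_edges, new_context


nodes, edges, context = graph_network_round([1.0, 2.0], [(0, 1, 0.0)], 0.0)
print(nodes, edges, context)  # [0.5, 4.0] [(0, 1, 3.0)] 5.25
```

A node-centric MPNN is the special case where edge and context states are not kept between rounds.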

## Training orchestration

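While advanced users are free to write custom model training, the TF-GNN Runner also provides a succinct way to orchestrate the training of Keras models in common cases.

A simple invocation may look like this: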

```python
import tensorflow as tf
from tensorflow_gnn import runner

runner.run(
    task=runner.RootNodeBinaryClassification("papers", ...),
    model_fn=model_fn,
    trainer=runner.KerasTrainer(tf.distribute.MirroredStrategy(), model_dir="/tmp/model"),
    optimizer_fn=tf.keras.optimizers.Adam,
    epochs=10,
    global_batch_size=128,
    train_ds_provider=runner.TFRecordDatasetProvider("/tmp/train*"),
    valid_ds_provider=runner.TFRecordDatasetProvider("/tmp/validation*"),
    gtspec=...,
)
```

The Runner provides ready-to-use solutions for common ML pain points, such as distributed training and padding tfgnn.GraphTensor to fixed shapes on Cloud TPUs.

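Besides training on a single task (as shown above), it also supports joint training on multiple (two or more) tasks.

For example, unsupervised tasks can be mixed with supervised ones to shape a final continuous representation (or embedding) with application-specific inductive biases. Callers only need to replace the task argument with a mapping of tasks: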

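```python
from tensorflow_gnn import runner
from tensorflow_gnn.models import contrastive_losses

runner.run(
    task={
        "classification": runner.RootNodeBinaryClassification("papers", ...),
        "dgi": contrastive_losses.DeepGraphInfomaxTask("papers"),
    },
    # ... the remaining arguments, as in the single-task example.
)
```

In addition, the TF-GNN Runner includes an implementation of integrated gradients for model attribution.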

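The integrated gradients output is a GraphTensor with the same connectivity as the observed GraphTensor, but with its features replaced by gradient values, where larger values contribute more than smaller values to the GNN prediction.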
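Integrated gradients itself can be sketched for a small differentiable function: each input feature's attribution is its difference from a baseline times the average gradient along the straight path from the baseline to the input. The toy model, its hand-written gradient, the all-zeros baseline and the step count are assumptions for illustration; in TF-GNN the same idea is applied to the features of a GraphTensor fed to a trained model.

```python
def model(x, w):
    """Toy differentiable model: f(x) = sum_i w_i * x_i**2."""
    return sum(wi * xi * xi for wi, xi in zip(w, x))


def model_grad(x, w):
    """Analytic gradient of the toy model: df/dx_i = 2 * w_i * x_i."""
    return [2.0 * wi * xi for wi, xi in zip(w, x)]


def integrated_gradients(x, baseline, w, steps=50):
    """Approximate IG_i = (x_i - b_i) * integral_0^1 df/dx_i(b + a * (x - b)) da
    with a midpoint Riemann sum over `steps` points on the path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, model_grad(point, w))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, -2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
w = [1.0, 0.5, -3.0]
attributions = integrated_gradients(x, baseline, w)
# Approximately [1.0, 2.0, -0.75], summing to f(x) - f(baseline) = 2.25.
print(attributions, sum(attributions), model(x, w) - model(baseline, w))
```

The attributions add up to f(x) - f(baseline) (the completeness property), so their magnitudes can be compared directly; for this quadratic model the midpoint sum is exact up to floating-point rounding.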

In summary, Google hopes that TF-GNN will help advance the application of GNNs in TensorFlow at scale and fuel further innovation in the field.

The above is the detailed content of Google officially announced the release of TensorFlow-GNN 1.0! Dynamic and interactive sampling to build graph neural networks at scale. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

Earlier this month, researchers from MIT and other institutions proposed a very promising alternative to MLP - KAN. KAN outperforms MLP in terms of accuracy and interpretability. And it can outperform MLP running with a larger number of parameters with a very small number of parameters. For example, the authors stated that they used KAN to reproduce DeepMind's results with a smaller network and a higher degree of automation. Specifically, DeepMind's MLP has about 300,000 parameters, while KAN only has about 200 parameters. KAN has a strong mathematical foundation like MLP. MLP is based on the universal approximation theorem, while KAN is based on the Kolmogorov-Arnold representation theorem. As shown in the figure below, KAN has

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Facing lag, slow mobile data connection on iPhone? Typically, the strength of cellular internet on your phone depends on several factors such as region, cellular network type, roaming type, etc. There are some things you can do to get a faster, more reliable cellular Internet connection. Fix 1 – Force Restart iPhone Sometimes, force restarting your device just resets a lot of things, including the cellular connection. Step 1 – Just press the volume up key once and release. Next, press the Volume Down key and release it again. Step 2 – The next part of the process is to hold the button on the right side. Let the iPhone finish restarting. Enable cellular data and check network speed. Check again Fix 2 – Change data mode While 5G offers better network speeds, it works better when the signal is weaker

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

The U.S. Air Force showcases its first AI fighter jet with high profile! The minister personally conducted the test drive without interfering during the whole process, and 100,000 lines of code were tested for 21 times.

May 07, 2024 pm 05:00 PM

Recently, the military circle has been overwhelmed by the news: US military fighter jets can now complete fully automatic air combat using AI. Yes, just recently, the US military’s AI fighter jet was made public for the first time and the mystery was unveiled. The full name of this fighter is the Variable Stability Simulator Test Aircraft (VISTA). It was personally flown by the Secretary of the US Air Force to simulate a one-on-one air battle. On May 2, U.S. Air Force Secretary Frank Kendall took off in an X-62AVISTA at Edwards Air Force Base. Note that during the one-hour flight, all flight actions were completed autonomously by AI! Kendall said - "For the past few decades, we have been thinking about the unlimited potential of autonomous air-to-air combat, but it has always seemed out of reach." However now,

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

The latest video of Tesla's robot Optimus is released, and it can already work in the factory. At normal speed, it sorts batteries (Tesla's 4680 batteries) like this: The official also released what it looks like at 20x speed - on a small "workstation", picking and picking and picking: This time it is released One of the highlights of the video is that Optimus completes this work in the factory, completely autonomously, without human intervention throughout the process. And from the perspective of Optimus, it can also pick up and place the crooked battery, focusing on automatic error correction: Regarding Optimus's hand, NVIDIA scientist Jim Fan gave a high evaluation: Optimus's hand is the world's five-fingered robot. One of the most dexterous. Its hands are not only tactile

Comprehensively surpassing DPO: Chen Danqi's team proposed simple preference optimization SimPO, and also refined the strongest 8B open source model

Jun 01, 2024 pm 04:41 PM

Comprehensively surpassing DPO: Chen Danqi's team proposed simple preference optimization SimPO, and also refined the strongest 8B open source model

Jun 01, 2024 pm 04:41 PM

In order to align large language models (LLMs) with human values and intentions, it is critical to learn human feedback to ensure that they are useful, honest, and harmless. In terms of aligning LLM, an effective method is reinforcement learning based on human feedback (RLHF). Although the results of the RLHF method are excellent, there are some optimization challenges involved. This involves training a reward model and then optimizing a policy model to maximize that reward. Recently, some researchers have explored simpler offline algorithms, one of which is direct preference optimization (DPO). DPO learns the policy model directly based on preference data by parameterizing the reward function in RLHF, thus eliminating the need for an explicit reward model. This method is simple and stable

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

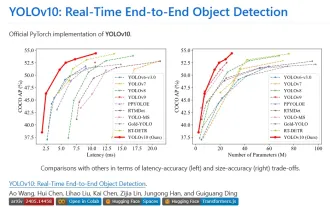

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end