Because pruned kernels can be unstable, pruning takes a long time, coverage is incomplete, and manual intervention is needed, Linux kernel pruning technology has not been widely used. After examining the limitations of existing techniques, we propose a Linux kernel tailoring framework that may be able to solve these problems.

Around 2000, when the old coder was still very young, he hoped to use Linux as the operating system for mobile phones. This led him to the idea of kernel tailoring, and he helped put it into practice. The results were quite good: the kernel could already run on a PDA, and only the phone functionality was still missing. More than 20 years have passed since then, Linux has changed a great deal, and the techniques and methods of kernel pruning have changed greatly as well.

Linux kernel pruning removes kernel code that a target application does not need, which brings significant benefits in security and performance (faster boot times and a smaller memory footprint). However, existing kernel pruning techniques have their limitations. Is there a framework-based approach to kernel pruning?

1. About kernel pruning

In recent years, Linux operating systems have grown in complexity and scale. However, an application usually requires only a portion of the OS functionality, and supporting the requirements of many different applications has left the Linux kernel bloated. Kernel bloat in turn leads to security risks, longer boot times, and increased memory usage.

With the popularity of service-oriented architectures and microservices, the demand for kernel tailoring has grown further. In these scenarios, virtual machines run small applications: each application is often "micro" and uses only a small part of the kernel. Some virtualization technologies therefore provide a minimal Linux kernel for the target application.

Given the complexity of the operating system, tailoring the kernel by hand-picking kernel features is impractical. For example, Linux has over 14,000 configuration options (as of v4.14), and hundreds of new options are introduced every year. Kernel configurators built on KConfig (such as menuconfig) only provide a user interface for selecting configuration options. Because of poor usability and incomplete documentation, it is difficult for users to arrive at a kernel configuration that is both minimal and working.

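Existing kernel pruning techniques generally follow three steps: tracing the kernel code that the target application workload executes, analyzing the trace to determine which configuration options that code requires, and assembling those options into a kernel configuration from which the pruned kernel is built.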

Configuration-driven pruning is the general approach. Most existing tools are configuration-driven because it is one of the few techniques that can produce stable kernels. Configuration-driven kernel pruning reduces kernel code at the level of functional features: configuration options correspond to kernel functionality, and the pruned kernel contains only the functionality needed to support the target application workload.

However, although kernel pruning is very attractive in terms of security and performance, it has not been widely adopted in practice. This is not due to a lack of demand. In fact, many cloud providers tailor their Linux kernels by hand to reduce code, but generally less effectively than kernel pruning techniques could.

2. Limitations of existing kernel pruning technology

Existing kernel pruning technology has five main limitations.

Invisible during the boot phase. Existing techniques rely on ftrace and can only start tracing after the kernel has booted, so there is no way to observe which kernel code runs during the boot phase. Many kernel features are exercised only during boot, and if the options they need are missing, the pruned kernel often fails to boot. In addition, some options that matter for performance and security are used only at boot time (for example, CONFIG_SCHED_MC for multi-core scheduling support and CONFIG_SECURITY_NETWORK for network security hooks); missing them leaves the pruned kernel with reduced performance and security.

Lack of support for fast application deployment. With existing tools, building a tailored kernel for a new application requires completing all three steps of tracing, analyzing, and assembling. This process is time-consuming, can take hours or even days, and hinders the agility of application deployment.

Coarse granularity. ftrace can only trace kernel code at the function level, which is too coarse to capture configuration options that affect code inside a function.

Incomplete coverage. Because dynamic tracing is used, the application workload must drive the kernel's code execution to maximize coverage. However, achieving full coverage with test suites and benchmarks is challenging, and if the application later exercises kernel code that was not observed during tracing, the pruned kernel may crash at runtime.

No distinction between necessary and incidental execution, so redundancy is possible. Code that runs during tracing but is not actually needed is still included in the kernel; for example, the kernel may initialize a second file system that the application never uses.

The first three limitations can be overcome through improved design and tooling, while the last two are inherent to dynamic tracing and require efforts beyond any specific technique.

3. Linux kernel configuration

3.1 Configuration options

Kernel configuration consists of a set of configuration options. A kernel module can have multiple options, each controlling which code will be included in the final kernel binary.

Configuration options control kernel code at different granularities: statements and functions through the C preprocessor, and object files through Makefiles. The C preprocessor selects code blocks with #ifdef/#ifndef; configuration options act as macro definitions that determine whether such conditional blocks, at statement or function granularity, are included in the compiled kernel. Makefiles determine whether particular object files are included in the compiled kernel. For example, CONFIG_CACHEFILES is a configuration option used in a Makefile to decide whether the cachefiles object files are built.
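To make the Makefile case concrete, the following Python sketch maps each `obj-$(CONFIG_...)` line of a Kbuild Makefile to the object files it controls, expanding composite objects such as cachefiles.o into their parts. It is a simplified illustration, not part of any existing tool: it reads one directory's Makefile at a time, joins backslash-continued lines, and ignores conditional parts like `foo-$(CONFIG_BAR) += bar.o`, `ifeq` blocks, and subdirectory entries.

```python
import re
from typing import Dict, List

# obj-$(CONFIG_FOO) += foo.o   (object controlled directly by an option)
OBJ_LINE = re.compile(r"^\s*obj-\$\((CONFIG_\w+)\)\s*[+:]?=\s*(.+)$")
# foo-y := a.o b.o  or  foo-objs := a.o b.o   (parts of composite object foo.o)
COMPOSITE = re.compile(r"^\s*(\w+)-(?:y|objs)\s*[+:]?=\s*(.+)$")


def objects_by_option(makefile: str) -> Dict[str, List[str]]:
    """Return {CONFIG_X: [object files built when CONFIG_X is enabled]}."""
    text = makefile.replace("\\\n", " ")  # join backslash-continued lines
    parts: Dict[str, List[str]] = {}
    direct: Dict[str, List[str]] = {}
    for line in text.splitlines():
        m = OBJ_LINE.match(line)
        if m:
            direct.setdefault(m.group(1), []).extend(m.group(2).split())
            continue
        m = COMPOSITE.match(line)
        if m:
            parts.setdefault(m.group(1) + ".o", []).extend(m.group(2).split())
    # Expand each composite object into the objects it is linked from.
    return {
        option: [p for obj in objs for p in parts.get(obj, [obj])]
        for option, objs in direct.items()
    }
```

For an abbreviated Makefile in the style of fs/cachefiles (`cachefiles-y := bind.o daemon.o` plus `obj-$(CONFIG_CACHEFILES) := cachefiles.o`), the function attributes bind.o and daemon.o to CONFIG_CACHEFILES.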

Statement-level configuration options cannot be identified by the function-level tracing used by existing kernel tailoring tools. In fact, about 30% of the configuration options used in C preprocessor directives in Linux 4.14 are statement-level options.
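A minimal sketch of how statement-level options can be located: the Python function below records the line range of every `#ifdef CONFIG_...` block in a C source file, so that executed source lines, rather than whole functions, can later be matched against them. It makes simplifying assumptions: only `#ifdef CONFIG_X` blocks are attributed, `#if`, `#ifndef`, and `#else` branches are tracked for nesting but left unattributed, and expressions such as `IS_ENABLED()` are not evaluated.

```python
import re
from typing import List, Tuple

IFDEF = re.compile(r"^\s*#\s*ifdef\s+(CONFIG_\w+)")
IF_ANY = re.compile(r"^\s*#\s*if(n?def)?\b")  # any other conditional opening a block
ELSE = re.compile(r"^\s*#\s*(else|elif)\b")
ENDIF = re.compile(r"^\s*#\s*endif\b")


def config_regions(source: str) -> List[Tuple[str, int, int]]:
    """Return (option, first_line, last_line) for each #ifdef CONFIG_* body."""
    regions = []
    stack = []  # entries: [option or None, first line of the guarded body]
    for lineno, line in enumerate(source.splitlines(), start=1):
        m = IFDEF.match(line)
        if m:
            stack.append([m.group(1), lineno + 1])
        elif IF_ANY.match(line):
            stack.append([None, lineno + 1])  # nested, but not attributed
        elif ELSE.match(line) and stack:
            option, first = stack[-1]
            if option is not None:
                regions.append((option, first, lineno - 1))
            stack[-1][0] = None  # the #else branch is not attributed
        elif ENDIF.match(line) and stack:
            option, first = stack.pop()
            if option is not None:
                regions.append((option, first, lineno - 1))
    return regions
```

Because nested blocks keep their enclosing option's range open, code inside a nested `#ifdef` is attributed to both options, which matches the fact that it needs both to be compiled.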

With the rapid growth of kernel code and functional features, the number of configuration options in the kernel is also increasing rapidly, with Linux kernel 3.0 and above having more than 10,000 configuration options.

3.2 Configuration language

The Linux kernel uses the KConfig configuration language to specify which code is included in the compiled kernel; it defines configuration options and the dependencies between them.

The value of a configuration option in KConfig may be bool, tristate, or constant. bool means that the code is either statically compiled into the kernel binary or excluded, while tristate additionally allows the code to be compiled as a loadable kernel module, i.e. a standalone object that can be loaded at runtime. A constant option (string, int, or hex in KConfig) provides a string or numeric value to a kernel code variable. One option can depend on another, and KConfig resolves dependencies recursively, selecting and deselecting options as needed. The final kernel configuration therefore has valid dependencies, but may differ from the user's input.
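The recursive deselection described above can be illustrated with a small Python model. It is a simplification that assumes only `depends on` relations between bool/tristate options and treats bool like tristate; KConfig's `select`, `choice`, visibility, and default rules are left out. Each option is capped at the value of its weakest dependency, and the pass repeats until nothing changes, which is why the resolved configuration can differ from what the user asked for.

```python
from typing import Dict, List

RANK = {"n": 0, "m": 1, "y": 2}  # tristate order: n < m < y
VALUE = {rank: value for value, rank in RANK.items()}


def resolve(user: Dict[str, str], depends_on: Dict[str, List[str]]) -> Dict[str, str]:
    """Cap every option at the value of its weakest dependency, repeating
    until a fixed point is reached. Options absent from `user` count as n."""
    config = dict(user)
    changed = True
    while changed:
        changed = False
        for option, deps in depends_on.items():
            if option not in config:
                continue
            limit = min((RANK[config.get(d, "n")] for d in deps), default=RANK["y"])
            if RANK[config[option]] > limit:
                config[option] = VALUE[limit]  # deselect, or demote y to m
                changed = True
    return config
```

Values only ever decrease, so the loop always terminates; a chain such as X depends on Y, Y depends on an unset Z ends with both X and Y deselected even though the user asked for them.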

3.3 Configuration templates

The Linux kernel comes with many hand-crafted configuration templates. However, because configuration templates are hard-coded and require manual intervention, they do not adapt to different hardware platforms and know nothing about the needs of the application. For example, a kernel built with tinyconfig cannot boot on standard hardware, let alone support applications. Some tools treat localmodconfig as a minimal configuration; however, localmodconfig has the same limitations as a static configuration template: it does not handle configuration options that control statement-level or function-level code through the C preprocessor, and it does not handle loadable kernel modules.

The kvmconfig and xenconfig templates are customized for kernels running on KVM and Xen. They encode domain knowledge about the underlying virtualization and hardware environment.

3.4 Linux kernel configuration in the cloud

Linux is the dominant operating system kernel in cloud services, and cloud providers have, to some extent, moved away from the stock Linux kernel. Cloud vendors often customize it by directly removing loadable kernel modules. The problem with manually pruning kernel module binaries is that dependencies may be violated. More importantly, such kernels can be tailored further based on application requirements. For example, Amazon Firecracker is a microVM designed for function-as-a-service workloads and uses a minimal kernel; taking HTTPD as the target application, kernel tailoring can shrink that kernel further while preserving functionality and performance.

4. Thoughts on kernel pruning

Regarding limitation one, could instruction-level tracing in QEMU provide visibility into the boot phase? Kernel code traced this way can be mapped to kernel configuration options. Since the boot phase is critical to producing a bootable kernel, using the tracing features of the hypervisor gives end-to-end observability and helps produce a stable kernel.

Regarding limitation two, drawing on experience from NLP deep learning, offline and online methods can be combined. Given a set of target applications, application configurations can be generated offline and then combined with a baseline configuration to form a complete kernel configuration, from which the trimmed kernel is built. This composability lets new kernels be built incrementally by reusing application configurations and previously built files (such as kernel modules). If the target application's configuration is already known, kernel pruning can be completed in tens of seconds.

Regarding limitation three, instruction-level tracing can identify kernel configuration options that control code inside functions. The overhead of instruction-level tracing is acceptable for running test suites and performance benchmarks.

Regarding limitation four, a fundamental limitation of dynamic tracing is that test suites and benchmarks are imperfect: the test suites of many open source applications have low code coverage. Driving applications with a combination of different workloads can alleviate this limitation to some extent.

Regarding limitation five, domain-specific information can be used to slim the kernel further by removing kernel modules that execute in the baseline kernel but are not needed in the actual deployment. Taking Xen and KVM as examples, the kernel size can be reduced further based on the xenconfig and kvmconfig configuration templates. Application-oriented kernel pruning can reduce kernel size further still, and even allows extensive customization of the kernel code.

5. A preliminary study on the kernel tailoring framework

The principle of the kernel tailoring framework has not changed: it still traces the kernel usage of the target application workload to determine the required kernel options.

5.1 Core features of the kernel tailoring framework

The kernel tailoring framework may have the following characteristics:

End-to-end visibility. The visibility of the hypervisor enables end-to-end observation covering both the kernel boot phase and the application workload, so the tailoring framework for the Linux kernel can be built on QEMU.

Composability. The core idea is to divide the kernel configuration into configuration sets that can be combined: one set for booting the kernel in a given deployment environment, and others holding the configuration options each target application requires. Configuration sets therefore come in two types: baseline configurations and application configurations. A baseline configuration is not necessarily the minimal set of options required to boot on specific hardware, but rather the set of configuration options traced during the boot phase. A baseline configuration can be combined with one or more application configurations to produce the final kernel configuration.

Reusability. Both baseline and application configurations can be stored in a database and reused as long as the deployment environment and application binaries remain unchanged. This reusability avoids rerunning the tracing workload and makes creating each configuration set a one-time job.

Support for rapid application deployment. Given a deployment environment and a target application, the kernel tailoring framework can efficiently retrieve the baseline and application configurations, combine them into the required kernel configuration, and then use the resulting configuration to build the tailored kernel.

Fine-grained configuration tracing. Program-counter-based tracing identifies configuration options from low-level code patterns.

5.2 Architecture of the kernel tailoring framework

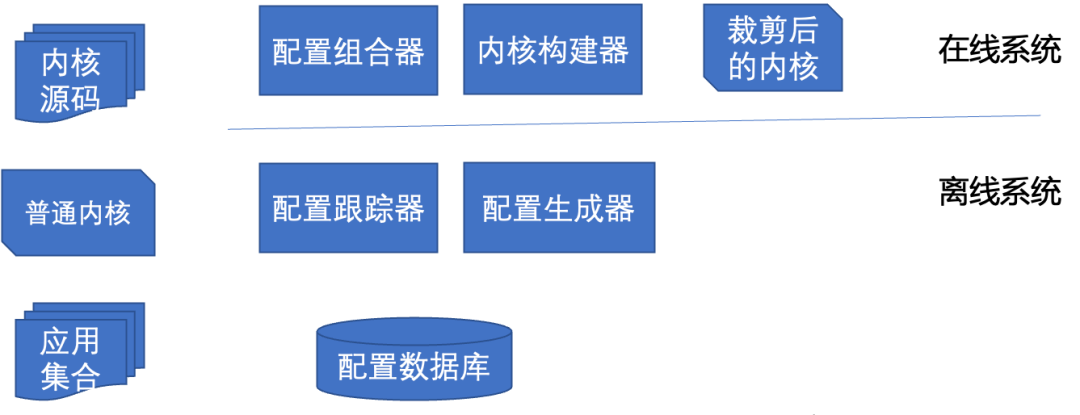

The kernel tailoring framework should have both off-line and online systems. The architecture is as shown in the figure below:

In the offline system, the configuration tracker traces and records the configuration options required by the deployment environment and the application. The configuration generator processes these options into baseline and application configurations and stores them in the configuration database.
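The configuration database can be very simple. The Python sketch below, using the standard-library sqlite3 module, is one possible layout rather than a prescribed design: baseline configurations are keyed by a deployment-environment name, and application configurations by a SHA-256 hash of the application binary, so an application configuration is reused only while the binary is unchanged. The class name `ConfigDB`, the table layout, and the key scheme are all hypothetical.

```python
import hashlib
import json
import sqlite3
from typing import Dict, Optional


class ConfigDB:
    """Hypothetical store for baseline and application configuration sets."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS configs ("
            " kind TEXT, key TEXT, options TEXT, PRIMARY KEY (kind, key))"
        )

    @staticmethod
    def app_key(binary: bytes) -> str:
        # Any change to the application binary changes the key, so a stale
        # application configuration is never returned for a new binary.
        return hashlib.sha256(binary).hexdigest()

    def put(self, kind: str, key: str, options: Dict[str, str]) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO configs VALUES (?, ?, ?)",
            (kind, key, json.dumps(options, sort_keys=True)),
        )
        self.conn.commit()

    def get(self, kind: str, key: str) -> Optional[Dict[str, str]]:
        row = self.conn.execute(
            "SELECT options FROM configs WHERE kind = ? AND key = ?", (kind, key)
        ).fetchone()
        return json.loads(row[0]) if row else None
```

Typical use is `db.put("baseline", "kvm-x86_64", {...})` after tracing a boot, and `db.get("app", ConfigDB.app_key(binary))` when a deployment request arrives; a miss means the application still has to be traced once.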

In the online system, the configuration combinator combines the baseline configuration with application configurations to produce the target kernel configuration, and the kernel builder then builds the tailored Linux kernel.
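The merging step performed by the configuration combinator might look like the following Python sketch. The merge rule is an assumption made for illustration: when two sets disagree on a bool/tristate option, the stronger value wins (y over m over n), and conflicting string or numeric values are reported rather than guessed. The merged result still has to go through dependency resolution before the kernel is built.

```python
from typing import Dict, Iterable

RANK = {"n": 0, "m": 1, "y": 2}  # tristate order: n < m < y


def compose(baseline: Dict[str, str], apps: Iterable[Dict[str, str]]) -> Dict[str, str]:
    """Merge a baseline configuration with any number of application configurations."""
    merged = dict(baseline)
    for app in apps:
        for option, value in app.items():
            old = merged.get(option)
            if old is None or old == value:
                merged[option] = value
            elif old in RANK and value in RANK:
                # Keep the stronger tristate value so no requester loses the feature.
                merged[option] = old if RANK[old] >= RANK[value] else value
            else:
                raise ValueError(f"conflicting values for {option}: {old!r} vs {value!r}")
    return merged
```

Taking the stronger tristate value means a feature one application needs built in is not demoted to a module because another application only asked for it as a module.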

5.3 Feasibility of implementing the kernel tailoring framework

Configuration Tracking

The configuration tracker of the kernel tailoring framework traces configuration options while the kernel runs the target application workload, using the PC register to capture the address of each executed instruction. To ensure that the traced PCs belong to the target application rather than other processes (for example, background services), a customized init script can be used: it starts no other applications, only mounts the /tmp, /proc, and /sys file systems, brings up the network interfaces (lo and eth0), and finally starts the application directly after the kernel boots.

At the same time, kernel address space layout randomization (KASLR) may need to be disabled during tracing so that addresses map correctly to source code; KASLR can still be enabled in the trimmed kernel. The PCs are then mapped to source code statements. Loadable kernel modules require additional processing: /proc/modules gives the load address of each loaded kernel module, which allows PCs to be mapped to statements in the kernel module binary. An alternative is localmodconfig; however, localmodconfig only provides information at module granularity.
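The module lookup can be sketched in Python as follows. The sketch assumes the usual /proc/modules line layout (name, size, reference count, dependencies, state, load address, optional taint flags) and that the file was read with enough privilege for real addresses to appear, since restricted readers may see zeros. It only finds which module a PC falls in and the offset from the module's load address; turning that offset into a source line (for example with addr2line on a module built with debug info) needs section-aware handling that is omitted here.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

Module = Tuple[int, int, str]  # (load address, size in bytes, module name)


def parse_proc_modules(text: str) -> List[Module]:
    """Parse /proc/modules lines: name size refcount deps state address [taint]."""
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 6:
            continue
        name, size, address = fields[0], int(fields[1]), int(fields[5], 16)
        modules.append((address, size, name))
    return sorted(modules)


def locate(pc: int, modules: List[Module]) -> Optional[Tuple[str, int]]:
    """Return (module name, offset from its load address), or None if the PC
    lies outside every module (e.g. in the core kernel image)."""
    starts = [start for start, _, _ in modules]
    i = bisect_right(starts, pc) - 1  # last module starting at or below pc
    if i >= 0:
        start, size, name = modules[i]
        if pc < start + size:
            return name, pc - start
    return None
```

PCs for which `locate` returns None belong to the core kernel image and can be resolved against vmlinux directly.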

Finally, the statements are attributed to configuration options. For options used by the C preprocessor, the C source files are parsed to extract preprocessor directives, and the framework checks whether statements inside those directives were executed. For options used in Makefiles, it decides whether to select a configuration option at object-file granularity. For example, if any of the corresponding object files (bind.o, cachefiles.o, or daemon.o) is used, CONFIG_CACHEFILES must be selected.
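A minimal Python sketch of this attribution step, under simplifying assumptions: the executed source lines per file, the line ranges of `#ifdef CONFIG_...` blocks, and a map from object files to the options that control them are taken as already available inputs, and the function name `attribute` is hypothetical. Executed `#else` branches, which would argue for leaving an option disabled, are not handled.

```python
from typing import Dict, List, Set, Tuple

# Per source file: (option, first_line, last_line) of each #ifdef CONFIG_* body.
Regions = Dict[str, List[Tuple[str, int, int]]]


def attribute(executed: Dict[str, Set[int]], regions: Regions,
              used_objects: Set[str], obj_options: Dict[str, str]) -> Set[str]:
    """Return the configuration options implied by the traced execution."""
    selected: Set[str] = set()
    # C-preprocessor options: select if any executed line lies inside the block.
    for path, lines in executed.items():
        for option, first, last in regions.get(path, []):
            if any(first <= line <= last for line in lines):
                selected.add(option)
    # Makefile options: select if any object file the option controls was used.
    for obj in used_objects:
        if obj in obj_options:
            selected.add(obj_options[obj])
    return selected
```

With the cachefiles example, mapping fs/cachefiles/bind.o and fs/cachefiles/daemon.o to CONFIG_CACHEFILES means that executing code from either object is enough to select the option.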

Configuration generation

Baseline and application configurations are generated in the offline system. This requires knowing where the boot phase ends. One way is to map an empty stub function to a predefined address range using mmap. The init script described above calls the stub function before running the target application, so the end of the boot phase can be identified by the first appearance of the predefined address in the PC trace.
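Assuming the QEMU trace is available as a sequence of PCs, splitting it at the marker could look like the Python sketch below. The marker address range is a hypothetical value chosen for illustration; in practice it would be whatever fixed address the init script maps the stub to. PCs inside the marker range belong to neither phase and are dropped.

```python
from typing import List, Sequence, Tuple

# Hypothetical address range where the init script maps the empty stub function.
MARKER_START = 0x7F0000000000
MARKER_END = MARKER_START + 0x1000


def in_marker(pc: int) -> bool:
    return MARKER_START <= pc < MARKER_END


def split_trace(pcs: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Split a PC trace into (boot phase, application phase) at the first
    execution of the stub; without a marker, the whole trace is boot phase."""
    for i, pc in enumerate(pcs):
        if in_marker(pc):
            return list(pcs[:i]), [p for p in pcs[i:] if not in_marker(p)]
    return list(pcs), []
```

The boot-phase PCs feed the baseline configuration and the remaining PCs feed the application configuration.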

The kernel tailoring framework obtains configuration options from the application trace and filters out hardware-related options, which are normally observed during the boot phase. Hardware-related options are identified by where they are defined in the kernel source code. It cannot be ruled out, however, that some hardware-related options are observed only during application execution, for example when the application loads a new device driver on demand.
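The location-based filter can be sketched in Python. The rule that options defined under arch/, drivers/, or sound/ count as hardware-related is an assumption for illustration, as are the example Kconfig paths in the usage note. Besides the application configuration, the sketch returns the hardware-related options that appeared only during the application phase, so that the on-demand driver case mentioned above is surfaced for review instead of being silently dropped.

```python
from typing import Dict, Set, Tuple

# Hypothetical rule: options defined under these source directories are hardware-related.
HARDWARE_PREFIXES = ("arch/", "drivers/", "sound/")


def application_config(app_options: Set[str], boot_options: Set[str],
                       defined_in: Dict[str, str]) -> Tuple[Set[str], Set[str]]:
    """Return (application configuration, hardware options seen only after boot).

    `defined_in` maps each option to the Kconfig file that defines it."""
    app_config: Set[str] = set()
    late_hardware: Set[str] = set()
    for option in app_options:
        if defined_in.get(option, "").startswith(HARDWARE_PREFIXES):
            if option not in boot_options:
                late_hardware.add(option)  # e.g. a driver loaded on demand
        else:
            app_config.add(option)
    return app_config, late_hardware
```

For example, with CONFIG_EPOLL and CONFIG_INET defined outside the hardware directories and CONFIG_E1000 under drivers/, an application trace containing all three keeps the first two and flags CONFIG_E1000 if it was not seen during boot.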

Configuration Assembly

Combining the baseline configuration with one or more application configurations produces the final configuration used to build the kernel. First, all configuration options are merged into an initial configuration, and then the dependencies between them are resolved with a SAT solver. Configuration dependencies are modeled as a Boolean satisfiability problem, in which a valid configuration is one that satisfies all specified dependencies between configuration options. A SAT solver is used because KConfig does not guarantee that all selected options are included; instead, it deselects options whose dependencies are unmet.
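The SAT formulation can be illustrated with a small, from-scratch DPLL solver in Python; it stands in for a real SAT solver library and is not any particular tool's implementation. Each option becomes a Boolean variable, every selected option becomes a unit clause that forces it on, "A depends on B" becomes the clause (not A or B), and "A conflicts with B" becomes (not A or not B). Only boolean dependencies are modeled (no tristate values, `select`, or `choice`), and the dependency data in the usage note is illustrative rather than copied from the kernel's Kconfig files.

```python
from typing import Dict, Iterable, List, Optional, Set, Tuple

Clause = List[int]  # literal +v: variable v true (option on); -v: false


def dpll(clauses: List[Clause], assignment: Dict[int, bool]) -> Optional[Dict[int, bool]]:
    """Minimal DPLL: unit propagation plus branching; returns a model or None."""
    assignment = dict(assignment)
    changed = True
    while changed:
        changed = False
        remaining = []
        for clause in clauses:
            if any(assignment.get(abs(lit)) == (lit > 0) for lit in clause):
                continue  # clause already satisfied
            free = [lit for lit in clause if abs(lit) not in assignment]
            if not free:
                return None  # every literal is false: conflict
            if len(free) == 1:  # unit clause forces its literal
                assignment[abs(free[0])] = free[0] > 0
                changed = True
            remaining.append(free)
        clauses = remaining
    if not clauses:
        return assignment
    var = abs(clauses[0][0])
    # Try "off" first so unconstrained options tend to stay out of the kernel.
    for value in (False, True):
        model = dpll(clauses, {**assignment, var: value})
        if model is not None:
            return model
    return None


def solve(selected: Iterable[str], depends_on: Dict[str, List[str]],
          conflicts: Iterable[Tuple[str, str]] = ()) -> Optional[Set[str]]:
    """Return the enabled options, or None if the constraints are unsatisfiable."""
    selected, conflicts = list(selected), list(conflicts)
    names = set(selected) | set(depends_on)
    names |= {d for deps in depends_on.values() for d in deps}
    names |= {o for pair in conflicts for o in pair}
    var = {name: i for i, name in enumerate(sorted(names), start=1)}
    clauses: List[Clause] = [[var[o]] for o in selected]  # must stay enabled
    for option, deps in depends_on.items():
        clauses += [[-var[option], var[d]] for d in deps]  # option -> dependency
    clauses += [[-var[a], -var[b]] for a, b in conflicts]  # not both
    model = dpll(clauses, {})
    if model is None:
        return None
    return {n for n in names if model.get(var[n], False)}
```

Unlike KConfig's behavior of dropping a selected option whose dependencies are unmet, this encoding either pulls the missing dependencies in (for example, selecting CONFIG_CACHEFILES with illustrative dependencies on CONFIG_FSCACHE and, transitively, CONFIG_NETFS_SUPPORT enables all three) or reports that no valid configuration exists.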

Kernel Build

Linux's Kbuild builds the tailored kernel from the assembled configuration options. Incremental builds with make shorten build times, and previous build results (e.g., object files and kernel modules) can be cached to avoid redundant compilation and linking. When the configuration changes, only the files affected by the changed configuration options are rebuilt, while the other files are reused.
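The core of the incremental rebuild decision can be sketched in Python: compare the old and new configurations, find the options whose values changed (an absent option counts as n), and rebuild only the objects those options affect. Kbuild already tracks per-option dependencies itself through its fixdep helper, so this is only an illustration of the idea; the option-to-object map is an assumed input covering both Makefile and preprocessor uses.

```python
from typing import Dict, List, Set


def objects_to_rebuild(old: Dict[str, str], new: Dict[str, str],
                       objects_by_option: Dict[str, List[str]]) -> Set[str]:
    """Return the object files affected by options whose values changed."""
    changed = {
        option for option in set(old) | set(new)
        if old.get(option, "n") != new.get(option, "n")
    }
    rebuild: Set[str] = set()
    for option in changed:
        rebuild.update(objects_by_option.get(option, []))
    return rebuild
```

Every object outside the returned set can be taken from the build cache unchanged.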

6. Summary

Because pruned kernels can be unstable, pruning is slow, coverage is incomplete, and manual intervention is required, Linux kernel pruning has not been widely used. Based on an analysis of the limitations of existing techniques, this article proposes a Linux kernel tailoring framework that may be able to solve these problems.

The above is the detailed content of A preliminary study on the Linux kernel pruning framework.