8 Linux concepts older than Linux itself

Linus Torvalds announced the first version of Linux in 1991, but some Linux concepts are even older than Linux itself.

While Linux is generally considered a modern operating system, some of its concepts are much older than you might think. The following ideas all had a long history before they reached Linux systems.

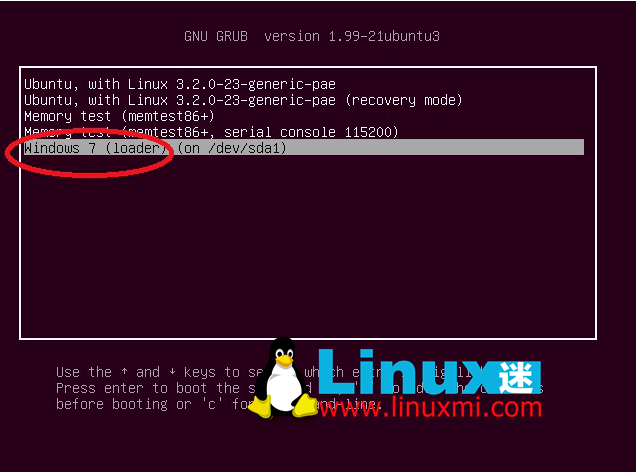

1. Dual boot

Dual-booting has long been the standard way to run Linux alongside another operating system, such as Windows, on the same machine. The concept of booting multiple operating systems is almost as old as computers.

It was also common in Unix-like environments, for example switching between MS-DOS and a PC Unix such as Xenix on the same machine. The Amiga also offered dual boot before Linux existed.

The Commodore Amiga 3000UX came with Amiga Unix, a customized version of System V, alongside the standard Amiga Workbench operating system. You could select the operating system at boot time by using the left and right mouse buttons.

2. Support for different architectures

When Linus Torvalds announced the Linux kernel on Usenet in 1991, he did not foresee that it would spread to architectures other than the Intel x86 platform, although history had already set a precedent he might have anticipated.

Like Unix, Linux is written in C, which is itself a portable language. Provided a C program makes no assumptions about the underlying environment, it can be compiled on any computer that has a suitable compiler.

Like many operating systems of the time, Unix was originally written in assembly language, but in the early 1970s it was rewritten in C, the language Dennis Ritchie had created. A side effect of this was that the operating system was decoupled from the hardware, and Unix became a general-purpose operating system.

This was unusual at the time, because operating systems were typically tied to a specific machine. It is one of the reasons why Unix made such a splash in computer science circles in the 1970s and 1980s.

3. The concept of different shells

The Bash shell is the popular default shell on Linux systems, but you can easily change your login shell to any shell of your choice. You probably know that this is a feature of the original Unix systems, but did you know that the idea is even older than Unix?

4. Running Unix programs on other operating systems

In the 1980s, DEC was developing an operating system called MICA that was meant to run on a new processor architecture called PRISM. It was to be based on VMS, DEC's popular minicomputer operating system, but would also have a Unix flavor.

This ambitious project was the brainchild of Dave Cutler. DEC eventually canceled the MICA project, and Cutler moved to Microsoft to lead the project that would eventually become Windows NT.

On the VMS side, there was also a program called Eunice, which could run Unix programs. Like the original WSL, it worked, but it suffered from performance and compatibility issues compared to native Unix.

When Windows NT finally came out in 1993, it had a POSIX environment, but seemingly only so that Microsoft could say it was POSIX compliant and bid on certain contracts with the U.S. federal government.

Microsoft later released a more complete environment, Windows Services for Unix, and the open source Cygwin project also appeared.

5. Legal issues

In the early 2000s, the lawsuit filed by SCO against IBM received widespread attention in the Linux and open source communities. SCO claimed that Linux infringed its rights to the original Unix code, which it said it had acquired.

While IBM and the Linux community ultimately prevailed, the situation had a precedent in the original Unix days. In the early 1990s, AT&T's Unix System Laboratories (USL) claimed copyright over code in the Berkeley Software Distribution (BSD), which put pressure on Berkeley.

While BSD was tied up in this dispute, Linux became the darling of computer enthusiasts, even though it turned out that only a small number of BSD files were "restricted" and could easily be rewritten for open source distribution.

6. Competition between different versions

Although the Linux community likes to argue about which distribution is better, this is nothing new to Unix culture.

In the 1980s, the rivalry between AT&T's System V and BSD was a big deal. BSD, developed at the University of California, Berkeley, was more popular in academia. It was also the basis for workstation versions of Unix, such as those from Sun Microsystems.

By the late 1980s, the Unix world was mired in the so-called "Unix Wars." AT&T and Sun began working together to merge BSD and System V, which alarmed other computer companies such as Hewlett-Packard, DEC, and IBM. These companies later formed the Open Software Foundation, and Sun and AT&T formed Unix International.

This "war" finally ended with a ceasefire. The two organizations merged, but Linux eventually replaced proprietary Unix in most applications.

7. "Year of the Unix Desktop"

Linux distributions are known for their desktop environments, which are designed to make Linux accessible to non-technical users. This effort also has a long history, as a 1989 episode of the PBS television show The Computer Chronicles demonstrates.

The episode features products from Sun Microsystems, HP, and even Apple, which had launched its own Unix-based operating system, A/UX.

8. Open source software

Although Linux popularized the concept of open source software, the idea has been around for a long time and may be as old as computers themselves.

Although the GNU Project made its name with its explicit philosophy of free software, software had long circulated freely in academia. The BSD developers created their own license allowing free distribution.

Many Linux concepts are older than you think

You might be surprised at how old some concepts in Linux culture, such as dual boot and open source software, really are. Many of Linux's peculiarities can be explained by its origins in Unix.

One example that confuses many newcomers to the Linux command line is how strange the commands look. Why are they so short? The reason is that they were originally designed for teletypes, not screens.
