Redis optimization guide: network, memory, disk, blocking points

Because Redis keeps its data in memory, the CPU is usually not its performance bottleneck. Instead, the server's memory utilization, network I/O, and disk reads and writes largely determine how Redis performs.

This guide therefore focuses on four areas: network, memory, disk, and blocking points. If a term is unfamiliar, refer to the earlier Redis articles in this series or to the relevant documentation.

Network Optimization

When a client talks to the server in "request-response" mode, batch commands wherever possible to reduce network I/O overhead.

Batching techniques: atomic multi-key (M-prefixed) commands, pipelining, Redis transactions, and Lua scripts.

Batch processing reduces network IO overhead

Atomic multi-key commands: for the string type, prefer MGET/MSET over GET/SET; for the hash type, prefer HMGET/HMSET over HGET/HSET (since Redis 4.0, HSET itself accepts multiple field-value pairs and HMSET is deprecated).

Pipelining: use a pipeline for batch operations on the list, set, and zset types.

Redis transactions: recommended when a special business requirement needs multiple commands to be executed together.

Lua scripts: recommended when multiple commands must execute atomically. Typical examples are releasing a distributed lock and deducting inventory in a flash sale.
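The batching techniques above can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: `client` is assumed to be a redis-py `redis.Redis` instance, and the helper names (`chunked`, `mget_in_batches`, `rpush_pipelined`, `release_lock`) and the lock token scheme are hypothetical.

```python
from typing import Iterable, Iterator, List, Sequence

# Lua script: delete the lock only if it still holds this owner's token,
# so the check and the delete happen atomically on the server.
UNLOCK_SCRIPT = """
if redis.call('GET', KEYS[1]) == ARGV[1] then
    return redis.call('DEL', KEYS[1])
end
return 0
"""


def chunked(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def mget_in_batches(client, keys: Sequence[str], batch_size: int = 100) -> List:
    """Read many string keys with one MGET round trip per batch."""
    values: List = []
    for batch in chunked(keys, batch_size):
        values.extend(client.mget(batch))
    return values


def rpush_pipelined(client, key: str, items: Iterable[str]) -> int:
    """Queue one RPUSH per item and send them all in a single pipeline."""
    pipe = client.pipeline(transaction=False)  # plain pipeline, no MULTI/EXEC
    for item in items:
        pipe.rpush(key, item)
    return len(pipe.execute())  # number of replies received


def release_lock(client, lock_key: str, token: str) -> bool:
    """Release a distributed lock via the Lua script above."""
    return client.eval(UNLOCK_SCRIPT, 1, lock_key, token) == 1
```

Splitting a large read into batches keeps each MGET small enough that it does not itself become a slow command.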

Inter-node network optimization

Deploy all cluster nodes in the same LAN;

Control the number of nodes in the sharded cluster. The hash slots allocated to each Redis instance have to be exchanged between instances, and when the cluster is rebalanced or instances are added or removed, data is transferred between instances. However, the hash slot information is small and data migration is gradual, so this is not the main problem;

Memory optimization

Control key length: agree on a naming convention before development so that keys are short and clear, abbreviating them according to the business where possible.

Avoid bigkeys: keep string values within about 20 KB, and keep hash, list, set, and zset collections within about 5,000 elements.

Set expiration times on keys so that memory is used fully.

Choose the appropriate data structure

String type: store integers where possible. Redis then chooses the integer encoding, which has low memory overhead;

Hash type: keep the number of elements under the configured threshold. With few elements, Redis uses the compressed list (ziplist) structure underneath, which has low memory overhead;

List type: keep the number of elements under the threshold. With few elements, Redis uses the compressed list structure underneath, which has low memory overhead;

Set type: store integers where possible. Redis then chooses the integer-set encoding, which has low memory overhead;

Zset type: keep the number of elements under the threshold. With few elements, Redis uses the compressed list structure underneath, which has low memory overhead;

Data compression: the client can compress data with snappy, gzip, or a similar algorithm before writing it to Redis to reduce memory usage. The client must then decompress the data after reading it, which costs additional CPU.
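As a minimal sketch of client-side compression, the helpers below use gzip from the Python standard library. The function names `pack` and `unpack` and the choice of JSON as the serialization format are assumptions for illustration; the returned bytes would be what the client writes to and reads from Redis.

```python
import gzip
import json


def pack(value: dict) -> bytes:
    """Serialize a value to compact JSON and gzip it before writing to Redis."""
    raw = json.dumps(value, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)


def unpack(blob: bytes) -> dict:
    """Reverse of pack(): decompress and parse a value read from Redis."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))
```

Compression pays off mainly for larger, repetitive values; for very small values the gzip header can outweigh the savings.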

Enable a memory eviction policy

Avoid the default eviction policy (noeviction). Choose a policy that fits the actual business to improve memory utilization.

LRU: Focuses on how recently a key was accessed and evicts the least recently used keys; it suits a wide range of scenarios. Redis uses an approximation of LRU: each key carries an extra 24-bit field holding the timestamp of its last access. Eviction is lazy: when a write operation finds that the maximum memory has been exceeded, the LRU eviction runs once, randomly sampling 5 keys (the number is configurable) and evicting the one accessed longest ago. If memory is still over the limit after that, eviction continues.

LFU: Focuses on access frequency; recommended when cache pollution is a concern.
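The sampled LRU eviction described above can be illustrated with a small pure-Python simulation. This is a toy model under stated assumptions, not Redis's implementation: the class name `SampledLRUCache` is hypothetical, a key count stands in for the memory limit, and a logical counter stands in for the 24-bit timestamp field.

```python
import random
from typing import Dict, Optional


class SampledLRUCache:
    """Toy model of approximate LRU: sample a few keys, evict the oldest."""

    def __init__(self, max_keys: int, samples: int = 5, seed: Optional[int] = None):
        if max_keys < 1:
            raise ValueError("max_keys must be at least 1")
        self.max_keys = max_keys          # stand-in for the memory limit
        self.samples = samples            # how many keys to sample per eviction
        self._rng = random.Random(seed)
        self._data: Dict[str, str] = {}
        self._last_access: Dict[str, int] = {}
        self._clock = 0                   # logical clock instead of a timestamp

    def _touch(self, key: str) -> None:
        self._clock += 1
        self._last_access[key] = self._clock

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        self._touch(key)
        return self._data[key]

    def set(self, key: str, value: str) -> None:
        # Lazy eviction: only a write that would exceed the limit triggers it.
        if key not in self._data:
            while len(self._data) >= self.max_keys:
                self._evict_one()
        self._data[key] = value
        self._touch(key)

    def _evict_one(self) -> None:
        candidates = list(self._data)
        sample = self._rng.sample(candidates, min(self.samples, len(candidates)))
        victim = min(sample, key=self._last_access.__getitem__)
        del self._data[victim]
        del self._last_access[victim]

    def __len__(self) -> int:
        return len(self._data)
```

Because only a sample is inspected, the evicted key is the oldest among the sampled keys, not necessarily the oldest overall; a larger sample approximates true LRU more closely at a higher CPU cost.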

Memory fragmentation optimization

Causes: The first is the allocation strategy of the memory allocator, which allocates in fixed size classes rather than the exact size requested; for example, a request that fits within 32 bytes is actually allocated 32 bytes. The second is the fragmentation left behind when deleting key-value pairs releases only part of the space.

Diagnosis: Check the mem_fragmentation_ratio metric with the INFO memory command. A value between 1 and 1.5 is normal; a value above 1.5 means the fragmentation rate has exceeded 50% and the fragmentation needs to be dealt with;

Solutions: Restart the Redis instance;

Enable Redis's automatic memory defragmentation feature (the activedefrag option, available since Redis 4.0).
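The thresholds above can be turned into a small check. This sketch assumes `info` is a dictionary shaped like the result of redis-py's `info("memory")`; the function name and the status labels are hypothetical. The ratio is the resident set size divided by the memory Redis has allocated.

```python
def fragmentation_status(info: dict) -> str:
    """Classify memory fragmentation using the 1.0 to 1.5 'normal' band."""
    ratio = info.get("mem_fragmentation_ratio")
    if ratio is None:
        # Fall back to computing the ratio from its two components.
        ratio = info["used_memory_rss"] / info["used_memory"]
    if ratio > 1.5:
        return "fragmented"   # above 1.5: fragmentation should be handled
    if ratio >= 1.0:
        return "normal"       # between 1 and 1.5: normal
    # Below 1 is not covered by the text above; it is often associated
    # with part of the data having been swapped out.
    return "below_one"
```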

Disk Optimization

Deploy Redis on physical machines: when persisting, Redis creates a child process (by calling the operating system's fork system call), and fork runs more slowly in a virtual machine than on a physical machine.

Persistence Optimization Mechanism

Do not enable persistence: if Redis is used only as a cache, persistence can be left off to avoid disk overhead;

AOF optimization: handle AOF in the background. Configure appendfsync everysec so that the flush to disk runs in a background thread, which minimizes the impact of disk writes on Redis performance;

Do not persist AOF at a higher frequency. The default of one flush per second should not be changed; it already limits data loss to at most about one second;

Enable hybrid persistence: Redis 4.0 and later support hybrid persistence, which combines an RDB snapshot with incremental AOF;

Enable the multi-threading configuration. Persistence is handled by a child process forked from the main thread, and the fork call itself blocks the main thread synchronously. From Redis 6.0 onward, I/O threads can take network reads and writes off the main thread, which leaves it more headroom while persistence is running;
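The AOF-related settings above can be applied at runtime through CONFIG SET. The sketch below assumes `client` is a redis-py `redis.Redis` instance created with `decode_responses=True`; the function name and the settings dictionary are hypothetical, and whether AOF should be on at all depends on the deployment.

```python
# Settings discussed above: AOF on, flush once per second, hybrid persistence.
PERSISTENCE_SETTINGS = {
    "appendonly": "yes",
    "appendfsync": "everysec",
    "aof-use-rdb-preamble": "yes",
}


def apply_persistence_settings(client, settings=None) -> dict:
    """Set each option only if it differs; return what was changed."""
    settings = PERSISTENCE_SETTINGS if settings is None else settings
    changed = {}
    for name, wanted in settings.items():
        current = client.config_get(name).get(name)
        if current != wanted:
            client.config_set(name, wanted)
            changed[name] = wanted
    return changed
```

Changes made with CONFIG SET are not written back to the configuration file automatically, so the same values should also be recorded in redis.conf.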

Cluster optimization

Persist on the slave: let the slaves handle persistence and leave it disabled on the master, to take as much disk I/O pressure off the master as possible.

Master-slave synchronization: prefer incremental synchronization over full RDB synchronization, which is very expensive.

Cascaded synchronization: with one master and many slaves, every slave synchronizing directly from the master can drag down the master's performance.

To address this, Redis supports cascaded synchronization: the master synchronizes data to only one slave, and the other slaves synchronize from that slave, which relieves the pressure on the master.

Instance size: keep each instance at no more than about 6 GB. If an instance is too large, master-slave synchronization can stall and, in severe cases, bring down the master.

The AOF is replayed after an abnormal restart; if the instance is too large, data recovery becomes extremely slow.

Blocking point optimization

Analysis: Because Redis processes requests and commands on a single thread, its main performance risk is synchronous blocking.

The bigkey problem

Hazards: Reading or writing a bigkey may cause timeouts, and because Redis operates on data in a single thread, it can seriously block the whole Redis service. In addition, a key is assigned to exactly one node, so the load it generates cannot be shared across nodes.

Bigkey detection:

The built-in command redis-cli --bigkeys. It only reports the largest key for each of the five data types, which is of limited use; not recommended.

A Python scanning script. It can locate specific keys, but its accuracy is low; not recommended.

The rdb_bigkeys tool. Written in Go, it is fast and accurate, and it can export results directly to a CSV file for easy viewing; recommended.

Optimization: Split a non-string bigkey into several smaller keys. For example, to split one bigkey into 1,000 keys, use the element's hash modulo 1000 as the key suffix.
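The hash-modulo split can be sketched as follows. The function names, the `base:bucket` key layout, and the use of CRC32 as the hash are assumptions for illustration; any stable hash works, as long as readers and writers use the same one.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List


def shard_key(base_key: str, member: str, shards: int = 1000) -> str:
    """Map a member of a large collection to one of `shards` smaller keys."""
    bucket = zlib.crc32(member.encode("utf-8")) % shards
    return f"{base_key}:{bucket}"


def group_by_shard(base_key: str, members: Iterable[str],
                   shards: int = 1000) -> Dict[str, List[str]]:
    """Group members by target key so each shard can be written in one batch."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for member in members:
        groups[shard_key(base_key, member, shards)].append(member)
    return dict(groups)
```

A stable hash such as CRC32 matters here: Python's built-in `hash()` for strings is randomized per process, so it would send the same member to different shards across restarts.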

Use a local cache. For example, store only the version number for a business ID in Redis and keep the actual content in a local cache. On each query, read the version number from Redis first, then compare it with the version held in the local cache.

Optimizing an existing bigkey is generally laborious, so it is better to define conventions during development that prevent bigkeys in the first place.
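The local-cache approach mentioned above, where Redis holds only a version number, might look like the sketch below. Everything named here is an assumption for illustration: `client` is any object with a redis-py style `get`, `loader` is a hypothetical callable that fetches the full content from its source of truth, and the `version:<id>` key layout is invented.

```python
from typing import Any, Callable, Dict, Tuple


class VersionedLocalCache:
    """Keep large content in process memory; use Redis only for versions."""

    def __init__(self, client, loader: Callable[[str], Any]):
        self._client = client
        self._loader = loader
        self._local: Dict[str, Tuple[Any, Any]] = {}  # id -> (version, content)

    def get(self, business_id: str) -> Any:
        # One small read from Redis instead of transferring the large value.
        version = self._client.get(f"version:{business_id}")
        cached = self._local.get(business_id)
        if cached is not None and cached[0] == version:
            return cached[1]              # local copy is still current
        content = self._loader(business_id)
        self._local[business_id] = (version, content)
        return content
```

Writers are expected to bump the version in Redis whenever the content changes; each process then refreshes its local copy on the next read.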

Expiration Policy

Scheduled deletion: each key with an expiration time gets its own timer and is deleted the moment it expires. Memory is reclaimed promptly, but CPU usage is high.

Lazy deletion: a key is checked for expiration only when it is queried, and deleted if it has expired. CPU usage is low, but expired keys that are never queried keep occupying memory.

Periodic deletion: a scan runs at intervals and deletes the expired keys it finds. CPU and memory costs are both moderate.

1. Greedy strategy. Redis keeps every key that has an expiration time in a separate dictionary.

2. Scanning process. Redis samples 20 keys from that expiration dictionary and deletes those that have expired. If more than 1/4 of the sampled keys were expired, it repeats the scan.

To keep this loop from stalling the thread, the algorithm also has a time limit, 25 ms by default.

3. Scan frequency. By default, Redis runs 10 expiration scans per second.

By default, Redis combines lazy deletion with periodic deletion.
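The periodic scan described above can be simulated in a few lines of Python. This is a simplified model, not Redis's actual code: `expires` is a plain dictionary mapping key to expiry time, and the function name and the injectable `clock` parameter are assumptions that make the time limit testable.

```python
import random
import time
from typing import Callable, Dict, Optional


def active_expire_cycle(expires: Dict[str, float], now: float,
                        sample_size: int = 20, threshold: float = 0.25,
                        time_limit: float = 0.025,
                        rng: Optional[random.Random] = None,
                        clock: Callable[[], float] = time.monotonic) -> int:
    """Run one expiration scan; return how many keys were removed."""
    rng = rng or random.Random()
    deadline = clock() + time_limit       # 25 ms budget by default
    removed = 0
    while expires:
        # Sample up to 20 keys from the dictionary of keys with a TTL.
        sample = rng.sample(list(expires), min(sample_size, len(expires)))
        expired = [key for key in sample if expires[key] <= now]
        for key in expired:
            del expires[key]
        removed += len(expired)
        # Stop unless more than 1/4 of the sample had expired.
        if len(expired) / len(sample) <= threshold:
            break
        # Stop when the time budget is used up, to avoid stalling the thread.
        if clock() >= deadline:
            break
    return removed
```

With the default of 10 scans per second, a function like this would be invoked roughly every 100 ms.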

Optimization: Turn on lazy-free (supported since Redis 4.0), so that the time-consuming work of releasing memory runs in a background thread.

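Enable multi-threading mode. Before Redis 6.0, all request handling, including expiration, ran synchronously on the main thread. From 6.0 onward, I/O threads can take network reads and writes off the main thread, which leaves it more time for work such as expiration scanning.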

High complexity instructions

Use SCAN to query in batches instead of KEYS.

Avoid aggregation operations: Redis processes requests with a single-threaded model, so a command that is too complex (and therefore CPU-intensive) makes subsequent requests queue up and adds latency. Examples are SINTER, SINTERSTORE, ZUNIONSTORE, and ZINTERSTORE. Instead, use the scan commands to read the collection's elements in batches and perform the aggregation on the client.
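Both recommendations above can be sketched with redis-py's iterator helpers. `client` is assumed to be a `redis.Redis` instance; the function names are hypothetical. `scan_iter` and `sscan_iter` issue SCAN and SSCAN in batches behind the scenes, so no single command holds the server for long.

```python
from typing import List, Set


def iter_keys(client, pattern: str, count: int = 500) -> List[str]:
    """List keys matching a pattern with SCAN instead of KEYS."""
    return list(client.scan_iter(match=pattern, count=count))


def client_side_intersection(client, first_key: str, other_key: str,
                             count: int = 500) -> Set[str]:
    """Compute the intersection of two sets on the client instead of SINTER."""
    first = set(client.sscan_iter(first_key, count=count))
    return {member for member in client.sscan_iter(other_key, count=count)
            if member in first}
```

The trade-off is more network traffic and client memory in exchange for keeping the single Redis thread free; because scanning is not a point-in-time snapshot, the result can be slightly stale if the sets change during the scan.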

Container data operations: when a container holds too many elements, fetching it in one query causes delays because of network transfer. Query it in batches instead.

Likewise, deleting a key that holds too many elements in one step may freeze Redis. Delete the elements in batches instead.
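Batch deletion of a large hash can be sketched as follows, assuming `client` is a redis-py `redis.Redis` instance; the function name and batch size are hypothetical. The same pattern applies to sets (SSCAN with SREM) and sorted sets (ZSCAN with ZREM).

```python
def delete_hash_in_batches(client, key: str, batch_size: int = 100) -> int:
    """Remove a large hash a few fields at a time; return fields removed."""
    removed = 0
    cursor = 0
    while True:
        # HSCAN returns the next cursor and a dict of field -> value.
        cursor, fields = client.hscan(key, cursor=cursor, count=batch_size)
        if fields:
            removed += client.hdel(key, *fields)
        if cursor == 0:       # cursor 0 means the iteration is complete
            break
    client.delete(key)        # drop the (now small or empty) key itself
    return removed
```

On Redis 4.0 and later, UNLINK offers an alternative: it removes the key immediately and frees its memory in a background thread.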

The above is the detailed content of Redis optimization guide: network, memory, disk, blocking points. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

Local fine-tuning of DeepSeek class models faces the challenge of insufficient computing resources and expertise. To address these challenges, the following strategies can be adopted: Model quantization: convert model parameters into low-precision integers, reducing memory footprint. Use smaller models: Select a pretrained model with smaller parameters for easier local fine-tuning. Data selection and preprocessing: Select high-quality data and perform appropriate preprocessing to avoid poor data quality affecting model effectiveness. Batch training: For large data sets, load data in batches for training to avoid memory overflow. Acceleration with GPU: Use independent graphics cards to accelerate the training process and shorten the training time.

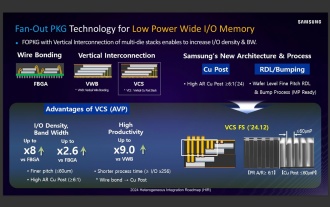

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

According to news from this website on September 3, Korean media etnews reported yesterday (local time) that Samsung Electronics and SK Hynix’s “HBM-like” stacked structure mobile memory products will be commercialized after 2026. Sources said that the two Korean memory giants regard stacked mobile memory as an important source of future revenue and plan to expand "HBM-like memory" to smartphones, tablets and laptops to provide power for end-side AI. According to previous reports on this site, Samsung Electronics’ product is called LPWide I/O memory, and SK Hynix calls this technology VFO. The two companies have used roughly the same technical route, which is to combine fan-out packaging and vertical channels. Samsung Electronics’ LPWide I/O memory has a bit width of 512

DDR5 MRDIMM and LPDDR6 CAMM memory specifications are ready for launch, JEDEC releases key technical details

Jul 23, 2024 pm 02:25 PM

DDR5 MRDIMM and LPDDR6 CAMM memory specifications are ready for launch, JEDEC releases key technical details

Jul 23, 2024 pm 02:25 PM

According to news from this website on July 23, the JEDEC Solid State Technology Association, the microelectronics standard setter, announced on the 22nd local time that the DDR5MRDIMM and LPDDR6CAMM memory technical specifications will be officially launched soon, and introduced the key details of these two memories. The "MR" in DDR5MRDIMM stands for MultiplexedRank, which means that the memory supports two or more Ranks and can combine and transmit multiple data signals on a single channel without additional physical The connection can effectively increase the bandwidth. JEDEC has planned multiple generations of DDR5MRDIMM memory, with the goal of eventually increasing its bandwidth to 12.8Gbps, compared with the current 6.4Gbps of DDR5RDIMM memory.

Lexar God of War Wings ARES RGB DDR5 8000 Memory Picture Gallery: Colorful White Wings supports RGB

Jun 25, 2024 pm 01:51 PM

Lexar God of War Wings ARES RGB DDR5 8000 Memory Picture Gallery: Colorful White Wings supports RGB

Jun 25, 2024 pm 01:51 PM

When the prices of ultra-high-frequency flagship memories such as 7600MT/s and 8000MT/s are generally high, Lexar has taken action. They have launched a new memory series called Ares Wings ARES RGB DDR5, with 7600 C36 and 8000 C38 is available in two specifications. The 16GB*2 sets are priced at 1,299 yuan and 1,499 yuan respectively, which is very cost-effective. This site has obtained the 8000 C38 version of Wings of War, and will bring you its unboxing pictures. The packaging of Lexar Wings ARES RGB DDR5 memory is well designed, using eye-catching black and red color schemes with colorful printing. There is an exclusive &quo in the upper left corner of the packaging.

It is reported that Samsung Electronics has confirmed investment in the 1cnm DRAM memory production line of Pyeongtaek P4 factory and aims to put it into operation in June next year.

Aug 12, 2024 pm 04:31 PM

It is reported that Samsung Electronics has confirmed investment in the 1cnm DRAM memory production line of Pyeongtaek P4 factory and aims to put it into operation in June next year.

Aug 12, 2024 pm 04:31 PM

According to news from this site on August 12, Korean media ETNews reported that Samsung Electronics has internally confirmed its investment plan to build a 1cnm DRAM memory production line at the Pyeongtaek P4 factory. The production line is targeted to be put into operation in June next year. Pyeongtaek P4 is a comprehensive semiconductor production center divided into four phases. In the earlier planning, the first phase was for NAND flash memory, the second phase was for logic foundry, and the third and fourth phases were for DRAM memory. Samsung has introduced DRAM production equipment in the first phase of P4, but has shelved the second phase of construction. 1cnm DRAM is the sixth-generation 20~10nm memory process, and each company's 1cnm (or corresponding 1γnm) products have not yet been officially released. Korean media reported that Samsung Electronics plans to start 1cnm memory production at the end of this year. ▲Samsung Pyeongtaek

iPhone 17 series major upgrade: all series have LTPO screens and up to 12GB of memory

Jul 24, 2024 pm 01:39 PM

iPhone 17 series major upgrade: all series have LTPO screens and up to 12GB of memory

Jul 24, 2024 pm 01:39 PM

Recently, a blogger revealed the parameters of the iPhone 17 series. This series will be equipped with LTPO screens as standard, and the memory will be upgraded to up to 12GB. The iPhone17 series will include four models: iPhone17, iPhone17Pro, iPhone17ProMax and iPhone17Slim, with screen sizes of 6.27 inches, 6.27 inches, 6.86 inches and 6.65 inches respectively. All models will be equipped with LTPO panels and support ProMotion variable refresh rates. This is the first time Apple has provided high refresh rate screens on standard models. In addition, the two Pro models iPhone17Pro and iPhone17ProMax will come standard with 1

Colorful launches iGame Jiachen Year of the Dragon limited memory, 48GB DDR5 6800 set for 1,399 yuan

Jul 27, 2024 am 09:04 AM

Colorful launches iGame Jiachen Year of the Dragon limited memory, 48GB DDR5 6800 set for 1,399 yuan

Jul 27, 2024 am 09:04 AM

According to news from this website on July 26, Colorful announced the launch of the iGame "Jiachen Zhilong" series of memory, which can be used with the previously launched Year of the Dragon limited series of boards. This series of memory will initially provide a 48GB (24G×2) DDR5-6800 (CL34) set, which uses a "Dragon Scale" cooling vest and supports "Dragon Teng" RGB lighting effects. JD.com displays the price at 1,399 yuan. According to reports, the appearance design of this series of memory adopts the traditional Chinese colors of "steamed chestnut" and "缾烼", with "Xiangyun" and "Yufeng" on the front, and the "Jiachen Zhilong" totem on the back, and also uses a gold edge as the whole Modification, with layered light guides that symbolize "increasing improvement". This series of memory uses SK Hynix original factory selected particles, with a main frequency of up to 6800MT/s, and supports X

SK Hynix showcases HBM3E, CMM-DDR5 and other AI memory solutions

Jun 20, 2024 am 08:37 AM

SK Hynix showcases HBM3E, CMM-DDR5 and other AI memory solutions

Jun 20, 2024 am 08:37 AM

According to news from this site on June 19, SK Hynix demonstrated its latest AI memory solution at the HPEDiscover2024 exhibition held in Las Vegas from June 17 to 20. Facing the AI market, SK Hynix exhibited HBM3E memory samples and CXL memory module CMM-DDR5. Compared with systems equipped only with DDR5 DRAM, CMM-DDR5 can increase system bandwidth by up to 50% and capacity by up to 100%. In addition, the company also demonstrated DDR5RDIMM and MCRDIMM memory for servers, and LPCAMM2 memory modules for notebook computers. This website noticed that SK Hynix’s latest enterprise-class solid-state drives also participated in the exhibition, including