Title: How to use Linux pipeline commands to improve work efficiency

Linux is widely used in day-to-day work, and its pipeline mechanism helps us process data and automate tasks efficiently. This article explains how to use Linux pipeline commands to improve work efficiency and provides specific examples.

1. What is the Linux pipeline command?

A Linux pipe, written as the | character, is a shell feature that connects the standard output of one command to the standard input of the next. By chaining several commands this way, complex data processing and task automation can be achieved with very little typing.
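As a minimal sketch of the idea, the following chains three standard commands. The word list is made-up sample data, and first_word is just an illustrative variable name.

```shell
# printf writes three words, one per line, to standard output.
# The first pipe feeds them to sort; the second feeds the sorted
# lines to head, which keeps only the first one.
first_word=$(printf 'pear\napple\nfig\n' | sort | head -n 1)
echo "$first_word"
```

Each command only sees a stream of lines, so any stage can be swapped for another tool without changing the rest of the pipeline.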

2. Commonly used Linux pipeline commands

- grep: searches its input for lines matching a specified pattern and outputs the matching lines.

- cut: extracts specified fields (columns) from each line of input.

- sort: sorts the lines of its input.

- awk: processes text field by field and can generate reports.

- sed: performs stream edits on text, such as substitution and deletion.

- wc: counts the lines, words, and bytes (or characters, with -m) in its input.
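To see the commands listed above side by side, here is a small sketch that runs each of them on the same input. The colon-separated "user:uid:shell" records are hypothetical sample data, not taken from a real system.

```shell
# Hypothetical sample data: "user:uid:shell", one record per line.
records='carol:1003:/bin/bash
alice:1001:/bin/bash
bob:1002:/bin/sh'

# grep keeps the bash users, cut extracts field 1, sort orders the names.
bash_users=$(printf '%s\n' "$records" | grep '/bin/bash' | cut -d ':' -f 1 | sort)
echo "$bash_users"

# awk prints the uid followed by the user name for every record.
printf '%s\n' "$records" | awk -F ':' '{ print $2, $1 }'

# sed strips the leading "/bin/" from each line.
printf '%s\n' "$records" | sed 's#/bin/##'

# wc -l counts the lines it receives; tr removes the padding that
# some wc implementations add around the number.
record_count=$(printf '%s\n' "$records" | wc -l | tr -d ' ')
echo "$record_count"
```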

3. Examples of using Linux pipeline commands to improve work efficiency

- Data analysis and processing

Suppose we have a text file "grades.txt" that contains student scores. Each line holds five space-separated fields: the student's name, student number, Chinese grade, math grade, and English grade. We can extract and sort the scores with the following pipeline:

cat grades.txt | cut -d ' ' -f 3-5 | sort -n -k 1,1

The above command first reads the contents of the grades.txt file, then uses the cut command to extract columns 3 to 5 (the Chinese, math, and English scores), and finally uses the sort command to sort the result numerically by its first column. Because cut has already removed the name and student number, that first column is the Chinese score, not the student number. This way we can easily analyze and compare student performance.
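A variation on the same data, sketched under the assumption that the fields are separated by single spaces as the cut command above implies: awk can add up the three scores while keeping the student's name, so the ranking stays readable. The three sample rows and the names grades_file and top_student are invented for illustration.

```shell
# Hypothetical sample in the assumed format: name id chinese math english.
grades_file=$(mktemp)
printf '%s\n' 'Li 1001 88 92 79' 'Wang 1002 95 85 90' 'Zhao 1003 70 99 81' > "$grades_file"

# awk prints each name with the sum of fields 3 to 5, sort orders the
# lines by that sum (field 2, numeric, descending), and head keeps the
# top line.
top_student=$(awk '{ print $1, $3 + $4 + $5 }' "$grades_file" | sort -k 2,2nr | head -n 1)
echo "$top_student"

rm -f "$grades_file"
```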

- Text Processing and Filtering

Suppose we have a text file "access.log" containing server logs, and we want to find the lines that contain the keyword "error" and count them. We can use the following pipeline command:

cat access.log | grep 'error' | wc -l

The above command first reads the contents of the access.log file, then uses the grep command to keep only the lines containing the keyword "error", and finally uses wc -l to count those lines. The result is the number of lines that contain "error"; a line with several matches is counted only once.
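The difference between counting matching lines and counting every occurrence can be sketched as follows. The three log lines are invented sample data. The -o option is supported by GNU and BSD grep but is not part of POSIX, so treat it as an assumption about your system.

```shell
# Hypothetical log: the second line contains "error" twice.
log_file=$(mktemp)
printf '%s\n' 'GET /a 200' 'error: disk full, error code 28' 'GET /b 500 error' > "$log_file"

# Lines that contain "error" at least once (same count as grep | wc -l).
matching_lines=$(grep -c 'error' "$log_file")

# Every occurrence: -o prints each match on its own line, then wc -l
# counts those lines.
occurrences=$(grep -o 'error' "$log_file" | wc -l | tr -d ' ')

echo "lines=$matching_lines occurrences=$occurrences"
rm -f "$log_file"
```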

- File content modification

Suppose we have a text file "article.txt" containing an English article, and we want to replace every occurrence of "Linux" in it with "Linux system". We can use the following pipeline command:

cat article.txt | sed 's/Linux/Linux system/g' > new_article.txt

The above command first reads the contents of the article.txt file, then uses the sed command to replace all occurrences of "Linux" with "Linux system", and finally writes the modified content to the new_article.txt file.
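The same substitution can be tried safely on a throwaway copy. This sketch uses a temporary directory and two invented sentences as sample data; work_dir, replaced_lines, and first_line are illustrative names.

```shell
# Build a small sample article in a temporary directory.
work_dir=$(mktemp -d)
printf '%s\n' 'Linux is open source.' 'Many servers run Linux.' > "$work_dir/article.txt"

# sed can read the file itself, so cat is optional here. The output
# goes to a different file: redirecting to article.txt would truncate
# it before sed reads it.
sed 's/Linux/Linux system/g' "$work_dir/article.txt" > "$work_dir/new_article.txt"

# Check the result: count the rewritten lines and show the first one.
replaced_lines=$(grep -c 'Linux system' "$work_dir/new_article.txt")
first_line=$(head -n 1 "$work_dir/new_article.txt")
echo "$replaced_lines"
echo "$first_line"

rm -rf "$work_dir"
```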

Through the above examples, we can see that using Linux pipeline commands can quickly and efficiently process various data and tasks and improve work efficiency. Of course, Linux pipeline commands have many other functions and usages, and readers can further learn and apply them as needed. I hope this article is helpful to everyone, thank you for reading!

The above is the detailed content of How to use Linux pipe commands to improve work efficiency. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1382

1382

52

52

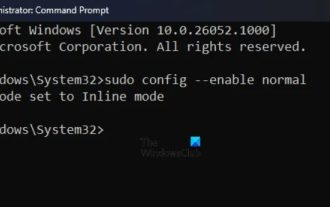

How to run SUDO commands in Windows 11/10

Mar 09, 2024 am 09:50 AM

How to run SUDO commands in Windows 11/10

Mar 09, 2024 am 09:50 AM

The sudo command allows users to run commands in elevated privilege mode without switching to superuser mode. This article will introduce how to simulate functions similar to sudo commands in Windows systems. What is the Shudao Command? Sudo (short for "superuser do") is a command-line tool that allows users of Unix-based operating systems such as Linux and MacOS to execute commands with elevated privileges typically held by administrators. Running SUDO commands in Windows 11/10 However, with the launch of the latest Windows 11 Insider preview version, Windows users can now experience this feature. This new feature enables users to

How to check the MAC address of the network card in Win11? How to use the command to obtain the MAC address of the network card in Win11

Feb 29, 2024 pm 04:34 PM

How to check the MAC address of the network card in Win11? How to use the command to obtain the MAC address of the network card in Win11

Feb 29, 2024 pm 04:34 PM

This article will introduce readers to how to use the command prompt (CommandPrompt) to find the physical address (MAC address) of the network adapter in Win11 system. A MAC address is a unique identifier for a network interface card (NIC), which plays an important role in network communications. Through the command prompt, users can easily obtain the MAC address information of all network adapters on the current computer, which is very helpful for network troubleshooting, configuring network settings and other tasks. Method 1: Use "Command Prompt" 1. Press the [Win+X] key combination, or [right-click] click the [Windows logo] on the taskbar, and in the menu item that opens, select [Run]; 2. Run the window , enter the [cmd] command, and then

Where is hyperv enhanced session mode? Tips for enabling or disabling Hyper-V enhanced session mode using commands in Win11

Feb 29, 2024 pm 05:52 PM

Where is hyperv enhanced session mode? Tips for enabling or disabling Hyper-V enhanced session mode using commands in Win11

Feb 29, 2024 pm 05:52 PM

In Win11 system, you can enable or disable Hyper-V enhanced session mode through commands. This article will introduce how to use commands to operate and help users better manage and control Hyper-V functions in the system. Hyper-V is a virtualization technology provided by Microsoft. It is built into Windows Server and Windows 10 and 11 (except Home Edition), allowing users to run virtual operating systems in Windows systems. Although virtual machines are isolated from the host operating system, they can still use the host's resources, such as sound cards and storage devices, through settings. One of the key settings is to enable Enhanced Session Mode. Enhanced session mode is Hyper

cmdtelnet command is not recognized as an internal or external command

Jan 03, 2024 am 08:05 AM

cmdtelnet command is not recognized as an internal or external command

Jan 03, 2024 am 08:05 AM

The cmd window prompts that telnet is not an internal or external command. This problem must have deeply troubled you. This problem does not appear because there is anything wrong with the user's operation. Users do not need to worry too much. All it takes is a few small steps. Operation settings can solve the problem of cmd window prompting telnet is not an internal or external command. Let’s take a look at the solution to the cmd window prompting telnet is not an internal or external command brought by the editor today. The cmd window prompts that telnet is not an internal or external command. Solution: 1. Open the computer's control panel. 2. Find programs and functions. 3. Find Turn Windows features on or off on the left. 4. Find “telnet client

Super practical! Sar commands that will make you a Linux master

Mar 01, 2024 am 08:01 AM

Super practical! Sar commands that will make you a Linux master

Mar 01, 2024 am 08:01 AM

1. Overview The sar command displays system usage reports through data collected from system activities. These reports are made up of different sections, each containing the type of data and when the data was collected. The default mode of the sar command displays the CPU usage at different time increments for various resources accessing the CPU (such as users, systems, I/O schedulers, etc.). Additionally, it displays the percentage of idle CPU for a given time period. The average value for each data point is listed at the bottom of the report. sar reports collected data every 10 minutes by default, but you can use various options to filter and adjust these reports. Similar to the uptime command, the sar command can also help you monitor the CPU load. Through sar, you can understand the occurrence of excessive load

Artifact in Linux: Principles and Applications of eventfd

Feb 13, 2024 pm 08:30 PM

Artifact in Linux: Principles and Applications of eventfd

Feb 13, 2024 pm 08:30 PM

Linux is a powerful operating system that provides many efficient inter-process communication mechanisms, such as pipes, signals, message queues, shared memory, etc. But is there a simpler, more flexible, and more efficient way to communicate? The answer is yes, that is eventfd. eventfd is a system call introduced in Linux version 2.6. It can be used to implement event notification, that is, to deliver events through a file descriptor. eventfd contains a 64-bit unsigned integer counter maintained by the kernel. The process can read/change the counter value by reading/writing this file descriptor to achieve inter-process communication. What are the advantages of eventfd? It has the following features

What is the correct way to restart a service in Linux?

Mar 15, 2024 am 09:09 AM

What is the correct way to restart a service in Linux?

Mar 15, 2024 am 09:09 AM

What is the correct way to restart a service in Linux? When using a Linux system, we often encounter situations where we need to restart a certain service, but sometimes we may encounter some problems when restarting the service, such as the service not actually stopping or starting. Therefore, it is very important to master the correct way to restart services. In Linux, you can usually use the systemctl command to manage system services. The systemctl command is part of the systemd system manager

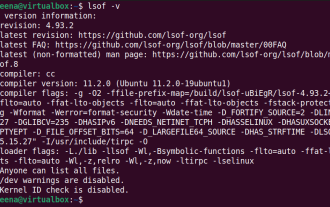

How to use LSOF to monitor ports in real time

Mar 20, 2024 pm 02:07 PM

How to use LSOF to monitor ports in real time

Mar 20, 2024 pm 02:07 PM

LSOF (ListOpenFiles) is a command line tool mainly used to monitor system resources similar to Linux/Unix operating systems. Through the LSOF command, users can get detailed information about the active files in the system and the processes that are accessing these files. LSOF can help users identify the processes currently occupying file resources, thereby better managing system resources and troubleshooting possible problems. LSOF is powerful and flexible, and can help system administrators quickly locate file-related problems, such as file leaks, unclosed file descriptors, etc. Via LSOF Command The LSOF command line tool allows system administrators and developers to: Determine which processes are currently using a specific file or port, in the event of a port conflict