14 Things to Note on Improving Web Page Efficiency Graphics_Experience Exchange

What is the most fundamental thing about a website?

Content? SEO (Search Engine Optimization)? UE (User Experience)? None of them. It's speed!

No matter how rich a website's content is, it means nothing if the site is too slow to load; no matter how good its SEO is, it is useless if search spiders cannot crawl it; and no matter how user-friendly its UE design is, it is empty talk if users cannot even get the page to appear.

Page efficiency therefore deserves the most attention of all. How can we improve it? Steve Souders (for more about him, see http://www.php.cn/) proposed 14 rules for improving web page efficiency, and these rules are also the theoretical basis of the YSlow tool introduced in the next article:

Make Fewer HTTP Requests

Use a Content Delivery Network

Add an Expires Header

Gzip Components

Put CSS at the Top

Move Scripts to the Bottom

Avoid CSS Expressions

Make JavaScript and CSS External

Reduce DNS Lookups

Minify JavaScript

Avoid Redirects

Remove Duplicate Scripts

Configure ETags

Make Ajax Cacheable

I will go through these rules one by one and explain in detail the ones most closely related to developers. My skills are limited, so errors and omissions are inevitable; corrections from experts are welcome.

Article 1: Make Fewer HTTP Requests (reduce the number of HTTP requests as much as possible)

About 80% of the end user's response time is spent on the front end, mostly on downloading images, style sheets, JavaScript, Flash and other files. Reducing the number of requests for these resources is therefore the key to making pages display faster.

There seems to be a contradiction here: if I cut out many images, styles, scripts or Flash files, won't the page look bare and ugly? This is a misunderstanding. The point is to reduce them as much as possible, not to stop using them altogether. Here are some tips for reducing the number of requests for these files:

1: Use one large image instead of many small images.

This does run counter to conventional thinking. We used to assume that downloading several small images would take less total time than downloading one large image. But analyzing several pages with the HttpWatch tool shows that this is not the case.

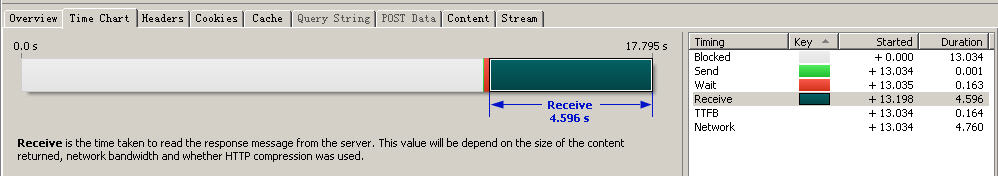

The first figure shows the analysis result for a large 337×191 px image of 40,528 bytes.

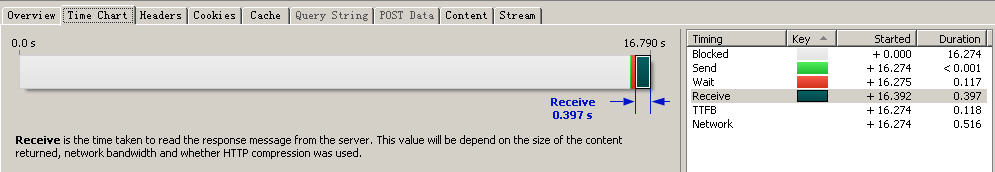

The second figure shows the analysis result for a small 280×90 px image of 13,883 bytes.

Timing for the large image:

Blocked: 13.034 s

Send: 0.001 s

Wait: 0.163 s

Receive: 4.596 s

TTFB: 0.164 s

Network: 4.760 s

Total: 17.795 s

The time actually spent transferring the large file is the Receive time, 4.596 s. Most of the time is Blocked time, spent retrieving the cache and determining whether the connection is valid: 13.034 s, or 73.2% of the total.

Timing for the small image:

Blocked: 16.274 s

Send: less than 0.001 s

Wait: 0.117 s

Receive: 0.397 s

TTFB: 0.118 s

Network: 0.516 s

Total: 16.790 s

The time actually spent transferring this file is the Receive time, 0.397 s, which is indeed far less than the 4.596 s of the large file. But its Blocked time is 16.274 s, about 97% of the total.

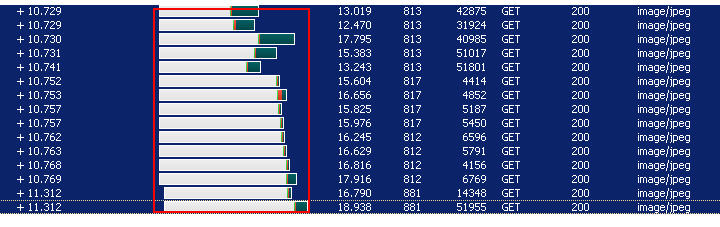

If these figures are not convincing enough, look at the figure below, which lists the time spent on every image on one web page. The images vary in size and format.

Figure: about 80% of the time is Blocked time, spent retrieving the cache and determining whether the connection is valid.

The dark blue bars are the Receive time spent transferring files, and the white segments in front of them are the Blocked time spent retrieving the cache and confirming whether the connection is valid. The facts clearly show:

The times needed to download large and small files do differ, but the absolute difference is small. Moreover, download time makes up only a small share of the total.

About 80% of the time is Blocked time, spent retrieving the cache and determining whether the connection is valid. This time is roughly the same regardless of file size, and it makes up a large share of the total.

So the total time for one 100 KB image will generally be less than the total time for four 25 KB images, and the main reason is that the four small images together incur much more Blocked time than the single large image.

So, where possible, use a few large images instead of many trivial small ones. This is also why the "flip door" technique is more efficient than "sliding doors" implemented by swapping images.

Note, however, that a single image must not be too large, because that hurts the user experience. A background image several megabytes in size, for example, is definitely not a good idea.
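The comparison of one 100 KB image with four 25 KB images can be sketched in Python with a deliberately simple model. It assumes each request pays a roughly fixed Blocked overhead plus a transfer time proportional to its size, and that requests run one after another. The function name and the 2 s overhead and 50 KB/s throughput are hypothetical illustration values, not the HttpWatch measurements above.

```python
def total_load_time(sizes_kb, blocked_s=2.0, throughput_kb_s=50.0):
    """Estimate the total time to fetch images one after another.

    Hypothetical model: every request pays a fixed blocked overhead
    (blocked_s) plus a transfer time of size / throughput. Real browsers
    fetch a few files in parallel; this sketch ignores that.
    """
    return sum(blocked_s + size / throughput_kb_s for size in sizes_kb)


one_large = total_load_time([100])       # one 100 KB image
four_small = total_load_time([25] * 4)   # four 25 KB images
print(f"one 100 KB image:  {one_large:.1f} s")
print(f"four 25 KB images: {four_small:.1f} s")
```

The transfer time is identical in both cases (2 s in this model); the whole difference comes from paying the fixed overhead four times instead of once. Parallel downloads narrow the gap but do not remove the per-request cost.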

2: Merge your CSS files.

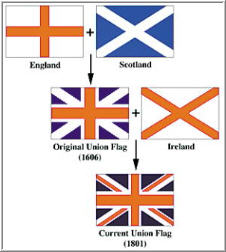

Picture: Merger and Fusion

I made a mistake here before, as you will see if you read my series "Organization and Planning of Style Sheets". At the time, to make style sheets easier to organize and plan, I split styles used for different purposes into separate CSS files and referenced several of them in each page as needed. By the rule of making as few HTTP requests as possible, that was unreasonable: it generated more HTTP requests and so made the page less efficient. From the efficiency standpoint, all CSS should therefore go into a single file.

But that raises another problem: how do we keep style sheets well organized? This is a genuine tension. What I do now is keep two versions, an editing version and a published version. The editing version still uses multiple CSS files so that it is easy to plan and organize; when publishing, I merge them into one file to reduce the number of HTTP requests.
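The publishing step can be sketched in Python, assuming the editing-version files sit on disk and should be concatenated in a fixed order. The function name merge_css and the file names layout.css, typography.css and site.css are hypothetical.

```python
import tempfile
from pathlib import Path


def merge_css(source_files, output_file):
    """Concatenate editing-version CSS files into one published file.

    Files are joined in the given order, so the cascade behaves as if the
    page had linked them one after another in that same order.
    """
    parts = []
    for path in source_files:
        text = Path(path).read_text(encoding="utf-8")
        # A comment marking where each source file starts helps debugging.
        parts.append(f"/* ---- {Path(path).name} ---- */\n{text.rstrip()}\n")
    Path(output_file).write_text("\n".join(parts), encoding="utf-8")
    return Path(output_file)


# Usage with hypothetical file names in a throwaway directory.
workdir = Path(tempfile.mkdtemp())
(workdir / "layout.css").write_text("body { margin: 0; }\n", encoding="utf-8")
(workdir / "typography.css").write_text("h1 { font-size: 2em; }\n", encoding="utf-8")
published = merge_css([workdir / "layout.css", workdir / "typography.css"],
                      workdir / "site.css")
merged = published.read_text(encoding="utf-8")
print(merged)
```

One caveat: @charset and @import rules are only valid at the start of a style sheet, so source files that use them need extra handling before they are merged.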

3: Merge your JavaScript files.

The reasons and solutions are the same as above and will not be discussed again.

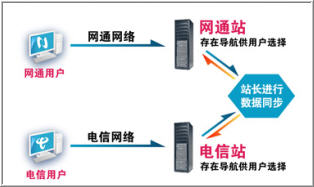

Article 2: Use a Content Delivery Network (use a CDN)

This may sound profound, but it is easy to understand in light of China's network conditions. "Northern server", "Southern server", "Telecom server", "Netcom server"... these terms sound so familiar and so frustrating. If you are a China Telecom user in Beijing trying to open a page like a "Wallpaper Collection" post hosted on a Netcom server in Guangdong, you will understand immediately.

Since this rule is beyond what we developers control, I won't say much about it here.

Picture: This picture has some Chinese characteristics

Article 3: Add an Expires Header (add an expiration header)

This is not controlled by the developer; it is the responsibility of the web server administrator. An Expires header tells the browser until what date it may keep using its cached copy of a file without requesting it again. It doesn't matter much if you, as a developer, don't know the details, but it is worth telling your company's web server administrator about this rule.
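To show what the administrator would be configuring, here is a Python sketch that builds the two caching headers for a static file. The helper name far_future_headers and the one-year lifetime are hypothetical; in practice these headers are usually set in the web server's configuration rather than in application code. Cache-Control: max-age is the HTTP/1.1 counterpart of Expires.

```python
import time
from email.utils import formatdate


def far_future_headers(max_age_days=365, now=None):
    """Build caching headers for a static file (hypothetical helper).

    Expires is an absolute HTTP date; Cache-Control: max-age is the
    relative HTTP/1.1 equivalent. Sending both covers old and new clients.
    """
    now = time.time() if now is None else now
    max_age = max_age_days * 24 * 60 * 60
    return {
        "Expires": formatdate(now + max_age, usegmt=True),
        "Cache-Control": f"public, max-age={max_age}",
    }


# now=0 makes the output reproducible: one year after the Unix epoch.
headers = far_future_headers(max_age_days=365, now=0)
print(headers["Expires"])        # Fri, 01 Jan 1971 00:00:00 GMT
print(headers["Cache-Control"])  # public, max-age=31536000
```

Because the browser will not ask again until that date passes, a file with a far-future Expires header needs a new URL (for example, a version number in its name) whenever its content changes.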

Article 4: Gzip Components (enable Gzip compression)

Everyone should be familiar with this. With Gzip, the server compresses a file before sending it and the browser decompresses it on arrival; this is especially effective for larger plain-text files. Since this is the job of the web server administrator rather than the developer, I won't go into detail here. If you are interested, talk to your company's web server administrator.
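The effect on plain text is easy to see with Python's standard gzip module. The sample style sheet below is hypothetical and deliberately repetitive, so it compresses far better than a typical real file would.

```python
import gzip

# Hypothetical plain-text payload: a repetitive chunk of CSS.
css = ".nav li { float: left; margin: 0 4px; padding: 2px 8px; }\n" * 200
raw = css.encode("utf-8")
compressed = gzip.compress(raw)

print(f"original:   {len(raw)} bytes")
print(f"compressed: {len(compressed)} bytes")

# The browser reverses this step when the response carries
# "Content-Encoding: gzip"; the round trip is lossless.
restored = gzip.decompress(compressed)
print(restored == raw)
```

Images such as JPEG and PNG are already compressed, which is why Gzip pays off mainly for text files like HTML, CSS and JavaScript.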

Article 5: Put CSS at the Top (place CSS at the top of the page)

HTML, XHTML and CSS are all interpreted by the browser rather than compiled in advance, and the browser renders the page progressively as it parses it. If the CSS is at the top, the styles are already available when the browser parses the page structure, so it can render the page correctly from the start. If not, the bare structure appears first and then, once the CSS arrives, the page suddenly becomes gorgeous: far too "dramatic" a browsing experience.

Article 6: Move Scripts to the Bottom (place scripts at the bottom of the page)

The reasoning is similar to Article 5. Scripts are generally used for user interaction, and if the page has not appeared yet, so that users cannot even see what it looks like, talking about interaction is pointless. Moreover, while an ordinary script is being downloaded and executed, the browser stops rendering the content that follows it. So scripts are the opposite of CSS: they should be placed at the bottom of the page.
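Articles 5 and 6 are easy to check mechanically. The sketch below uses Python's standard html.parser to flag stylesheet links outside <head> and scripts inside it. The class name PlacementAudit is hypothetical, and treating "script in <head>" as the violation is a simplification of "put scripts at the bottom".

```python
from html.parser import HTMLParser


class PlacementAudit(HTMLParser):
    """Hypothetical checker for Articles 5 and 6.

    Flags stylesheet <link> tags found outside <head> and <script> tags
    found inside <head> (src is recorded; inline scripts appear as None).
    """

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.css_outside_head = []
        self.scripts_in_head = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False  # covers pages that omit </head>
        elif tag == "link" and (attrs.get("rel") or "").lower() == "stylesheet":
            if not self.in_head:
                self.css_outside_head.append(attrs.get("href"))
        elif tag == "script" and self.in_head:
            self.scripts_in_head.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical page that breaks both rules.
page = """<html><head>
<script src="menu.js"></script>
</head><body>
<link rel="stylesheet" href="site.css">
<p>Hello</p>
</body></html>"""

audit = PlacementAudit()
audit.feed(page)
audit.close()
print("stylesheets outside <head>:", audit.css_outside_head)
print("scripts inside <head>:", audit.scripts_in_head)
```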

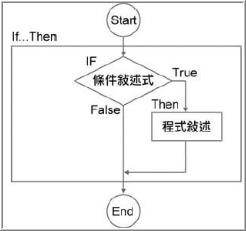

Article 7: Avoid CSS Expressions (avoid using expressions in CSS)

Figure: an expression in CSS is essentially an if statement

First, what are CSS expressions? They are a proprietary Internet Explorer feature that works like the if...else... statements of other languages, letting CSS make simple logical decisions. A simple example:

<style>

input { background-color: expression((this.readOnly && this.readOnly == true) ? "#0000ff" : "#ff0000"); }

</style>

<input type="text" name="">

<input type="text" name="" readonly="true">

This lets CSS apply different styles depending on conditions. If you are interested, you can read the related articles on my blog, "Series of Articles on Expression in CSS". But CSS expressions are extremely expensive: the browser re-evaluates them very frequently, for example when the page is rendered, resized or scrolled, and even when the mouse moves. When a page has many elements whose styles depend on such conditions, the browser can appear frozen for a long time, giving users a very poor experience.

Article 8: Make JavaScript and CSS External (put JavaScript and CSS in external files)

This rule seems to contradict Article 1, and indeed, in terms of the number of HTTP requests, it does reduce efficiency. The reason for doing it is another important consideration: caching. External files are cached by the browser, so if the JavaScript and CSS are large, we put them in external files. After the user's first visit, these large JS and CSS files are cached, which greatly speeds up the user's next visit.
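The trade-off can be put into numbers with a small Python model. It is a sketch under stated assumptions: inline JavaScript and CSS are resent with every page, external files are downloaded once and then served from the browser cache, and the sizes (10 KB of HTML, 60 KB of scripts and styles) are hypothetical.

```python
def kb_downloaded(page_views, html_kb, assets_kb, external):
    """Total kilobytes a returning visitor downloads over several page views.

    Hypothetical model: inline JS/CSS is resent inside every HTML page;
    external files are fetched once and then served from the cache.
    It ignores the extra request external files cost on the first view.
    """
    if external:
        return page_views * html_kb + assets_kb
    return page_views * (html_kb + assets_kb)


for views in (1, 5):
    inline = kb_downloaded(views, 10, 60, external=False)
    ext = kb_downloaded(views, 10, 60, external=True)
    print(f"{views} view(s): inline {inline} KB, external {ext} KB")
```

On a single view both approaches transfer the same amount and the external version costs an extra request; the advantage appears only from the second view onward, which is the next-visit benefit described above.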

Article 9: Reduce DNS Lookups (reduce the number of DNS queries)

DNS is the Domain Name System. We can remember so many URLs because we remember words rather than strings like http://www.php.cn/, and it is DNS that links those words to IP addresses such as 202.153.125.45. Each lookup takes time, and the browser cannot start downloading from a host until its name has been resolved. So what practical guidance does this rule give us? Two points:

1: Unless it is necessary, do not spread the website across two servers.

2: Do not scatter a page's images, CSS files, JS files, Flash files and so on across many different hosts, because each distinct host name can require its own DNS lookup. This is why a post whose wallpaper images all come from one website appears much faster than a post that pulls wallpaper images from many different websites.
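A quick way to estimate how many DNS lookups a page may need is to count the distinct host names among its resource URLs. This Python sketch uses urllib.parse from the standard library; the function name and example URLs are hypothetical, and the count is only approximate, since the browser or operating system may already have some answers cached.

```python
from urllib.parse import urlsplit


def distinct_hosts(resource_urls):
    """Return the host names a page's resources come from, in first-seen order.

    Each distinct host name can require its own DNS lookup, so a shorter
    list usually means fewer lookups. Relative URLs have no host and reuse
    the page's own host, so they are skipped.
    """
    hosts = []
    for url in resource_urls:
        host = urlsplit(url).hostname
        if host and host not in hosts:
            hosts.append(host)
    return hosts


# Hypothetical resource list for a wallpaper post.
resources = [
    "http://img1.example.com/wall-01.jpg",
    "http://img1.example.com/wall-02.jpg",
    "http://pics.example.net/wall-03.jpg",
    "/css/site.css",
    "http://static.example.org/js/app.js",
]
print(distinct_hosts(resources))
```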

Article 10: Minify JavaScript and CSS (reduce the size of JavaScript and CSS files)

This one is easy to understand: remove all unnecessary blank lines, spaces and comments from the final published version. Doing this by hand is obviously too slow, but fortunately compression tools are available all over the Internet. Tools for compressing JavaScript are easy to find, so I won't list them here; for CSS, here is one online compression site: http://www.php.cn/

It offers several compression modes to suit different needs.
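To show what such tools do at their simplest, here is a naive CSS minifier in Python using regular expressions. It is only an illustration, assuming the CSS contains no quoted strings with spaces or comment markers inside them; it is not a substitute for the dedicated tools mentioned above, which parse CSS properly.

```python
import re


def minify_css(css):
    """A naive CSS minifier (illustration only, not production-safe).

    It removes /* comments */ and collapses whitespace, but it does not
    understand strings, so quoted content such as "a  b" could be altered.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # drop comments
    css = re.sub(r"\s+", " ", css)                   # collapse whitespace runs
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)     # trim around punctuation
    css = re.sub(r":\s+", ":", css)                  # "color: red" -> "color:red"
    css = css.replace(";}", "}")                     # last semicolon is optional
    return css.strip()


source = """
/* Navigation */
.nav li {
    float: left;
    margin: 0 4px;
}
"""
print(minify_css(source))  # .nav li{float:left;margin:0 4px}
```

Spaces before a colon are deliberately kept, because div :hover (any hovered element inside a div) and div:hover (a hovered div) are different selectors.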

Article 11: Avoid Redirects

I will interpret this rule only from a web developer's perspective. What can we take from it? Two points:

1: "This domain name has expired. In 5 seconds, the page will jump to http://www.php.cn/": this message certainly looks familiar. But I wonder: why not link directly to that page?

2: Write link addresses precisely. For example, write http://www.php.cn/ as http://www.php.cn/ (note the trailing "/"). Both URLs reach my blog, but they are actually different: requesting the first address, without the trailing slash, returns a 301 response that redirects to the second one, and obviously some time is wasted in between.
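This redirect is easy to observe locally. The sketch below uses Python's standard-library http.server, which, like many web servers, answers a request for a directory without a trailing slash with a 301 pointing to the slash version. The "blog" folder and the local server are hypothetical stand-ins for the author's site.

```python
import functools
import http.client
import http.server
import tempfile
import threading
from pathlib import Path


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    def log_message(self, format, *args):
        pass  # keep the demo output clean


# Serve a throwaway directory containing a "blog" folder with an index page.
root = Path(tempfile.mkdtemp())
(root / "blog").mkdir()
(root / "blog" / "index.html").write_text("<p>my blog</p>", encoding="utf-8")

handler = functools.partial(QuietHandler, directory=str(root))
server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
threading.Thread(target=server.serve_forever, daemon=True).start()
port = server.server_address[1]


def status_of(path):
    """Request a path once, without following redirects."""
    conn = http.client.HTTPConnection("127.0.0.1", port)
    conn.request("GET", path)
    resp = conn.getresponse()
    resp.read()
    conn.close()
    return resp.status, resp.getheader("Location")


without_slash = status_of("/blog")   # extra round trip: a 301 first
with_slash = status_of("/blog/")     # served directly
print(without_slash, with_slash)

server.shutdown()
server.server_close()
```

The first request costs a full extra round trip before any content arrives; linking to the slash version avoids it entirely.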

Article 12: Remove Duplicate Scripts

Picture: Say "No!" to duplication

The principle is simple: a script included twice can be downloaded and executed twice, wasting both requests and time. In practice, though, many people keep putting it off with excuses such as "the schedule is tight", "I'm too tired" or "it wasn't planned well at the start"... You can certainly find many reasons not to clean up redundant, duplicated script code, as long as your website needs neither high efficiency nor long-term maintenance.

This is exactly why I want to remind everyone to use JavaScript frameworks and libraries with caution. At the very least, ask: how much convenience does this library bring us, and how much does it improve our productivity? Then weigh that against the cost of the redundant and duplicated code it brings along.
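Exact duplicates are easy to find automatically. This Python sketch, built on the standard html.parser and collections.Counter, lists script URLs included more than once. The names are hypothetical, and it only catches identical src strings, not the same library loaded from two different URLs or in two versions.

```python
from collections import Counter
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collects the src attribute of every external <script> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        src = dict(attrs).get("src")
        if tag == "script" and src:
            self.sources.append(src)


def duplicate_scripts(html):
    """Return each script URL included more than once, with its count."""
    collector = ScriptCollector()
    collector.feed(html)
    collector.close()
    return {src: n for src, n in Counter(collector.sources).items() if n > 1}


# Hypothetical page where header and footer templates both load one library.
page = """
<script src="/js/jquery.js"></script>
<script src="/js/menu.js"></script>
<p>content</p>
<script src="/js/jquery.js"></script>
"""
print(duplicate_scripts(page))
```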

Article 13: Configure ETags (configure your entity tags)

First, what is an ETag? ETag stands for entity tag. It has nothing to do with the tag clouds you often see online: an ETag is not meant for users but for the browser cache. It is the mechanism by which the server tells the browser's cache whether the cached content has changed. The server sends an ETag along with a file; when the browser later requests the file again, it sends that value back in an If-None-Match header, and if the content is unchanged the server replies 304 Not Modified without resending it. In this way the browser knows whether its cached copy is current or must be downloaded from the server again. This is similar in concept to "Last-Modified". Unfortunately, there is nothing you can do about it as a web developer; it falls within the job of the web server staff. If you are interested, you can consult your company's web server administrator.
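The exchange described above can be sketched from the server's side in Python. Hashing the content is one common way to derive an ETag, but it is an assumption here: servers may compute ETags differently, and the helper names make_etag and respond are hypothetical. The sketch also ignores weak validators (W/"...") and the special If-None-Match: * value.

```python
import hashlib


def make_etag(body):
    """Derive a strong ETag by hashing the response body (one approach)."""
    return '"' + hashlib.sha1(body).hexdigest() + '"'


def respond(body, if_none_match=None):
    """Return (status, headers, payload) for a GET, honoring If-None-Match."""
    etag = make_etag(body)
    if if_none_match is not None:
        sent_tags = [tag.strip() for tag in if_none_match.split(",")]
        if etag in sent_tags:
            # The cached copy is still current: send no body at all.
            return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body


css = b".nav li { float: left; }"
first_status, first_headers, _ = respond(css)                          # first visit
revisit_status, _, revisit_body = respond(css, first_headers["ETag"])  # file unchanged
changed_status, _, _ = respond(css + b"/* edited */", first_headers["ETag"])
print(first_status, revisit_status, len(revisit_body), changed_status)
```

On the revisit only headers cross the network; as soon as the file changes, its hash and therefore its ETag change, and the full content is sent again.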

Article 14: Make Ajax Cacheable (the rules above also apply to Ajax)

Figure: Ajax must be used appropriately

Nowadays Ajax seems to have become something of a myth, as if any page that uses Ajax will have no efficiency problems. This is a misconception. Used poorly, Ajax will not make your page more efficient; it will make it less efficient. Ajax is indeed a good thing, but don't mythologize it. When using Ajax, apply the rules above as well.

Postscript:

Of course, the above are only theoretical guidelines for your reference; specific situations must be analyzed case by case. Theories and principles exist to guide practical work, and they must never be applied mechanically.
