Master the key details of the Promise specification and improve your programming skills

Asynchronous operations are everywhere in modern JavaScript. In the past, their results were handled with callback functions, but deeply nested callbacks quickly became hard to read and maintain, a problem known as "callback hell". To address it, the community developed the Promises/A+ specification, and ECMAScript 2015 (ES6) standardized Promise as a built-in object.

A Promise is an object that represents the eventual result of an asynchronous operation. It gives asynchronous code a cleaner structure: instead of nesting callbacks, you attach handlers to the Promise and chain them one after another.

To master the key details of the Promise specification, you first need to understand its basic characteristics. A Promise has three states: pending (in progress), fulfilled (successful) and rejected (failed). A newly created Promise is pending. When the asynchronous operation completes successfully, the Promise becomes fulfilled; when it fails, the Promise becomes rejected. Once a Promise is fulfilled or rejected, its state and its value can no longer change.
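As a minimal sketch of the state rules, the snippet below settles a Promise several times in a row and shows that only the first call takes effect. The name `settledOnce` is made up for illustration.

```javascript
// A promise settles at most once: the first resolve/reject wins,
// and every later call is silently ignored.
const settledOnce = new Promise((resolve, reject) => {
  resolve("first");            // pending -> fulfilled with "first"
  resolve("second");           // ignored, already fulfilled
  reject(new Error("late"));   // ignored, already fulfilled
});

settledOnce.then((value) => console.log(value)); // logs "first"
```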

The then method registers up to two callback functions on a Promise: the first handles the result of a successful operation, and the second handles a failure. Both arguments are optional. When the Promise is fulfilled, the first callback is called with the result; when the Promise is rejected, the second callback is called with the rejection reason (usually an Error object). The then method itself returns a new Promise, which is what makes chaining possible.
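The two-callback form of then can be sketched as follows. `describeOutcome`, `ok` and `failed` are hypothetical names used only for this example.

```javascript
const ok = Promise.resolve(42);
const failed = Promise.reject(new Error("network down"));

// Pass both callbacks to then: the first runs on fulfillment,
// the second on rejection. Each returns a string describing the outcome.
function describeOutcome(promise) {
  return promise.then(
    (value) => `fulfilled with ${value}`,
    (error) => `rejected with ${error.message}`
  );
}

describeOutcome(ok).then((text) => console.log(text));     // fulfilled with 42
describeOutcome(failed).then((text) => console.log(text)); // rejected with network down
```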

Promise also has a catch method for handling errors. It takes a single callback, which is called with the rejection reason when the Promise is rejected. Calling catch(onRejected) is equivalent to calling then(undefined, onRejected).
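One practical detail is that a catch placed after then also sees errors thrown inside the then callback, whereas the second argument of that same then call does not. The sketch below illustrates this; `parseJson` and `recovered` are hypothetical names.

```javascript
// Hypothetical helper: parses JSON inside a promise chain,
// so a parse failure surfaces as a rejection.
function parseJson(text) {
  return Promise.resolve(text).then((t) => JSON.parse(t));
}

// The catch at the end handles the SyntaxError thrown by JSON.parse
// and turns the chain back into a fulfilled promise with a fallback value.
const recovered = parseJson("{not valid json")
  .then((data) => data.name)
  .catch((error) => `fallback (${error.name})`);

recovered.then((value) => console.log(value)); // fallback (SyntaxError)
```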

Beyond these basics, Promise has other important features, such as chaining, running multiple asynchronous operations concurrently (for example with Promise.all), and propagating errors along a chain. To grasp these details, we need to understand the Promise specification in depth.
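Chaining works because every then call returns a new Promise. A plain return value is passed to the next handler, and a returned Promise is waited for before the chain continues. A minimal sketch, with the variable name `result` chosen for illustration:

```javascript
const result = Promise.resolve(2)
  // A plain return value is passed straight to the next handler.
  .then((n) => n * 10)
  // A returned promise is waited for before the chain continues.
  .then((n) => new Promise((resolve) => setTimeout(() => resolve(n + 1), 10)))
  .then((n) => `result: ${n}`);

result.then((text) => console.log(text)); // result: 21
```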

Some common pitfalls also deserve attention. The first is error handling. As a rule, end a chain with catch so that a rejection anywhere in the chain is handled. Be careful with throw inside the Promise constructor: an exception thrown synchronously in the executor function is converted into a rejection, but an exception thrown later from an asynchronous callback (such as a timer callback) is not caught by the Promise. Asynchronous failures should therefore be reported by calling reject.
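The difference between throwing and rejecting in the constructor can be sketched like this. `syncThrow`, `asyncReject` and `messages` are illustrative names.

```javascript
// A synchronous throw in the executor is converted into a rejection.
const syncThrow = new Promise(() => {
  throw new Error("thrown synchronously");
});

// Inside a later callback, only reject() reaches the promise.
const asyncReject = new Promise((resolve, reject) => {
  setTimeout(() => {
    // A `throw` here would escape the promise as an uncaught exception,
    // so the failure is reported through reject instead.
    reject(new Error("rejected from a timer"));
  }, 10);
});

// Collect both error messages once each promise has been handled.
const messages = Promise.all([
  syncThrow.catch((error) => error.message),
  asyncReject.catch((error) => error.message),
]);

messages.then((list) => console.log(list.join(" | ")));
```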

Execution order also matters. The operation behind a Promise starts as soon as the Promise is created, so creating several Promises up front lets their operations run concurrently. If the operations must run one after another, start each one inside the then callback of the previous one so that the chain enforces the order.
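The two execution styles can be compared in a short sketch. `task` and `runInSequence` are hypothetical helpers, and the timings are arbitrary.

```javascript
// Hypothetical task: resolves with its name after `ms` milliseconds.
function task(name, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(name), ms));
}

// Concurrent: both tasks start immediately. Promise.all keeps the
// results in input order, regardless of which task finishes first.
const parallel = Promise.all([task("a", 30), task("b", 10)]);

// Sequential: each task is started only after the previous one fulfilled.
function runInSequence(specs) {
  return specs.reduce(
    (chain, [name, ms]) =>
      chain.then((results) =>
        task(name, ms).then((value) => [...results, value])
      ),
    Promise.resolve([])
  );
}

parallel.then((names) => console.log(names.join(",")));                                // a,b
runInSequence([["x", 20], ["y", 5]]).then((names) => console.log(names.join(",")));    // x,y
```

The concurrent version takes roughly as long as its slowest task, while the sequential version takes roughly the sum of all task durations.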

Finally, keep performance and readability in mind. Every then call creates a new Promise object, so very long chains add some allocation overhead and can be hard to follow. Two techniques help in practice: async/await lets the same logic be written in a flat, sequential style, and Promise.all lets independent operations run concurrently instead of one after another, which reduces total waiting time.
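A minimal sketch of combining async/await with Promise.all is shown below. `fetchUser` is a stand-in for a real asynchronous call, and `loadNames` is likewise a made-up name.

```javascript
// Hypothetical data source: stands in for a real request to a server.
function fetchUser(id) {
  return Promise.resolve({ id, name: `user${id}` });
}

// async/await flattens the chain; Promise.all runs the independent
// lookups concurrently instead of awaiting them one by one.
async function loadNames(ids) {
  try {
    const users = await Promise.all(ids.map((id) => fetchUser(id)));
    return users.map((user) => user.name);
  } catch (error) {
    // A rejection from any lookup lands here, like catch() on a chain.
    console.error("lookup failed:", error.message);
    return [];
  }
}

loadNames([1, 2]).then((names) => console.log(names.join(","))); // user1,user2
```

An async function always returns a Promise, so it can be mixed freely with then and catch.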

In short, mastering the key details of the Promise specification is an important step in improving your programming skills. A solid understanding of how Promises behave and how to use them makes asynchronous code easier to write, read and maintain. With study and practice, handling asynchronous operations in JavaScript becomes much more comfortable.

The above is the detailed content of Master the key details of promise specifications and improve your programming skills. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

What to do if your Huawei phone has insufficient memory (Practical methods to solve the problem of insufficient memory)

Apr 29, 2024 pm 06:34 PM

What to do if your Huawei phone has insufficient memory (Practical methods to solve the problem of insufficient memory)

Apr 29, 2024 pm 06:34 PM

Insufficient memory on Huawei mobile phones has become a common problem faced by many users, with the increase in mobile applications and media files. To help users make full use of the storage space of their mobile phones, this article will introduce some practical methods to solve the problem of insufficient memory on Huawei mobile phones. 1. Clean cache: history records and invalid data to free up memory space and clear temporary files generated by applications. Find "Storage" in the settings of your Huawei phone, click "Clear Cache" and select the "Clear Cache" button to delete the application's cache files. 2. Uninstall infrequently used applications: To free up memory space, delete some infrequently used applications. Drag it to the top of the phone screen, long press the "Uninstall" icon of the application you want to delete, and then click the confirmation button to complete the uninstallation. 3.Mobile application to

Detailed steps for cleaning memory in Xiaohongshu

Apr 26, 2024 am 10:43 AM

Detailed steps for cleaning memory in Xiaohongshu

Apr 26, 2024 am 10:43 AM

1. Open Xiaohongshu, click Me in the lower right corner 2. Click the settings icon, click General 3. Click Clear Cache

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

Local fine-tuning of DeepSeek class models faces the challenge of insufficient computing resources and expertise. To address these challenges, the following strategies can be adopted: Model quantization: convert model parameters into low-precision integers, reducing memory footprint. Use smaller models: Select a pretrained model with smaller parameters for easier local fine-tuning. Data selection and preprocessing: Select high-quality data and perform appropriate preprocessing to avoid poor data quality affecting model effectiveness. Batch training: For large data sets, load data in batches for training to avoid memory overflow. Acceleration with GPU: Use independent graphics cards to accelerate the training process and shorten the training time.

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

1. First, enter the Edge browser and click the three dots in the upper right corner. 2. Then, select [Extensions] in the taskbar. 3. Next, close or uninstall the plug-ins you do not need.

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

The familiar open source large language models such as Llama3 launched by Meta, Mistral and Mixtral models launched by MistralAI, and Jamba launched by AI21 Lab have become competitors of OpenAI. In most cases, users need to fine-tune these open source models based on their own data to fully unleash the model's potential. It is not difficult to fine-tune a large language model (such as Mistral) compared to a small one using Q-Learning on a single GPU, but efficient fine-tuning of a large model like Llama370b or Mixtral has remained a challenge until now. Therefore, Philipp Sch, technical director of HuggingFace

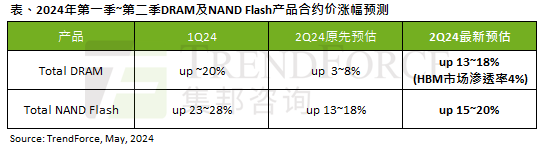

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

According to a TrendForce survey report, the AI wave has a significant impact on the DRAM memory and NAND flash memory markets. In this site’s news on May 7, TrendForce said in its latest research report today that the agency has increased the contract price increases for two types of storage products this quarter. Specifically, TrendForce originally estimated that the DRAM memory contract price in the second quarter of 2024 will increase by 3~8%, and now estimates it at 13~18%; in terms of NAND flash memory, the original estimate will increase by 13~18%, and the new estimate is 15%. ~20%, only eMMC/UFS has a lower increase of 10%. ▲Image source TrendForce TrendForce stated that the agency originally expected to continue to

Which one has better web performance, golang or java?

Apr 21, 2024 am 12:49 AM

Which one has better web performance, golang or java?

Apr 21, 2024 am 12:49 AM

Golang is better than Java in terms of web performance for the following reasons: a compiled language, directly compiled into machine code, has higher execution efficiency. Efficient garbage collection mechanism reduces the risk of memory leaks. Fast startup time without loading the runtime interpreter. Request processing performance is similar, and concurrent and asynchronous programming are supported. Lower memory usage, directly compiled into machine code without the need for additional interpreters and virtual machines.

What does sizeof mean in c language

Apr 29, 2024 pm 07:48 PM

What does sizeof mean in c language

Apr 29, 2024 pm 07:48 PM

sizeof is an operator in C that returns the number of bytes of memory occupied by a given data type or variable. It serves the following purposes: Determines data type sizes Dynamic memory allocation Obtains structure and union sizes Ensures cross-platform compatibility