Design and implementation of high-performance LLM inference framework

1. Overview of large language model inference

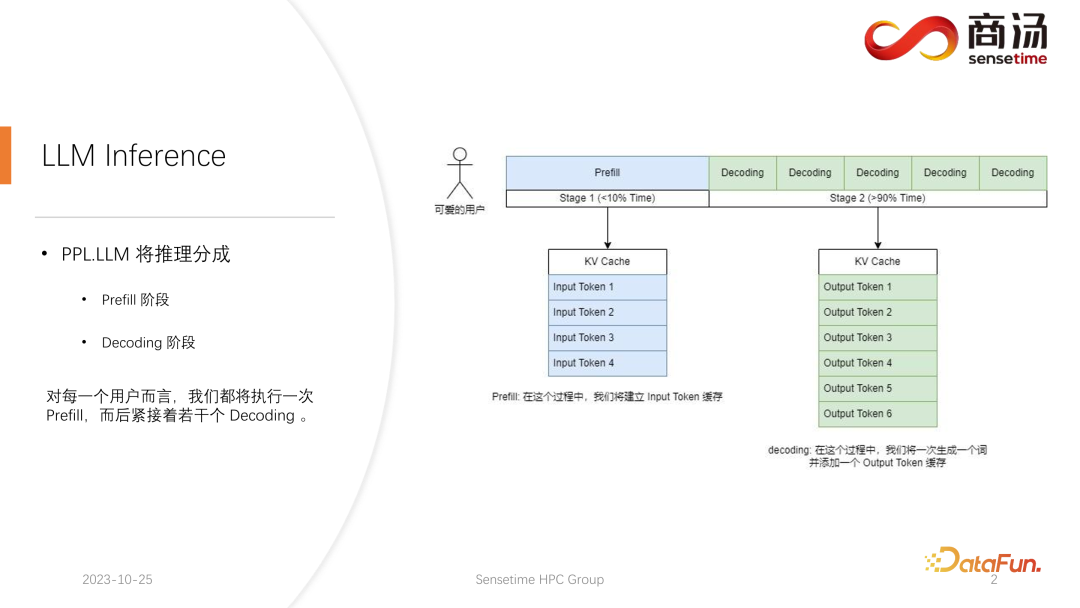

Large language model inference differs from traditional CNN model inference. It is usually divided into two stages: prefill and decoding. Each request first goes through one prefill pass, which processes the user's entire input and generates the corresponding KV cache. The request then goes through a number of decoding steps. In each decoding step the server generates one token, appends it to the KV cache, and moves on to the next iteration.

Because decoding produces the answer one token at a time and each answer contains many tokens, the number of decoding steps is very large. Decoding accounts for most of the inference process, more than 90% of the time.

Prefill has to process every token of the user's input at once, so it is computationally heavy, but it happens only once per request. Prefill therefore accounts for less than 10% of the total inference time.
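The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration: `toy_model` is a hypothetical stand-in for a transformer forward pass, and the KV cache is modeled as a plain list of token IDs rather than real key/value tensors.

```python
from typing import List, Tuple

def toy_model(new_tokens: List[int], kv_cache: List[int]) -> int:
    # Hypothetical stand-in for a forward pass: a real model attends over the
    # cached keys/values; this toy derives a deterministic "next token" from
    # everything seen so far.
    return (sum(kv_cache) + sum(new_tokens)) % 100

def generate(prompt: List[int], max_new_tokens: int) -> Tuple[List[int], int]:
    kv_cache: List[int] = []
    # Prefill: a single pass over the whole prompt that fills the KV cache
    # and produces the first output token.
    next_token = toy_model(prompt, kv_cache)
    kv_cache.extend(prompt)
    output = [next_token]
    decode_steps = 0
    # Decoding: one token per step; each step feeds only the latest token
    # and appends it to the KV cache.
    while len(output) < max_new_tokens:
        next_token = toy_model([output[-1]], kv_cache)
        kv_cache.append(output[-1])
        output.append(next_token)
        decode_steps += 1
    return output, decode_steps

tokens, steps = generate(prompt=[1, 2, 3], max_new_tokens=5)
print(tokens, steps)
```

Generating 5 tokens takes one prefill pass and 4 decoding steps, which is why the decoding stage dominates as answers get longer.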

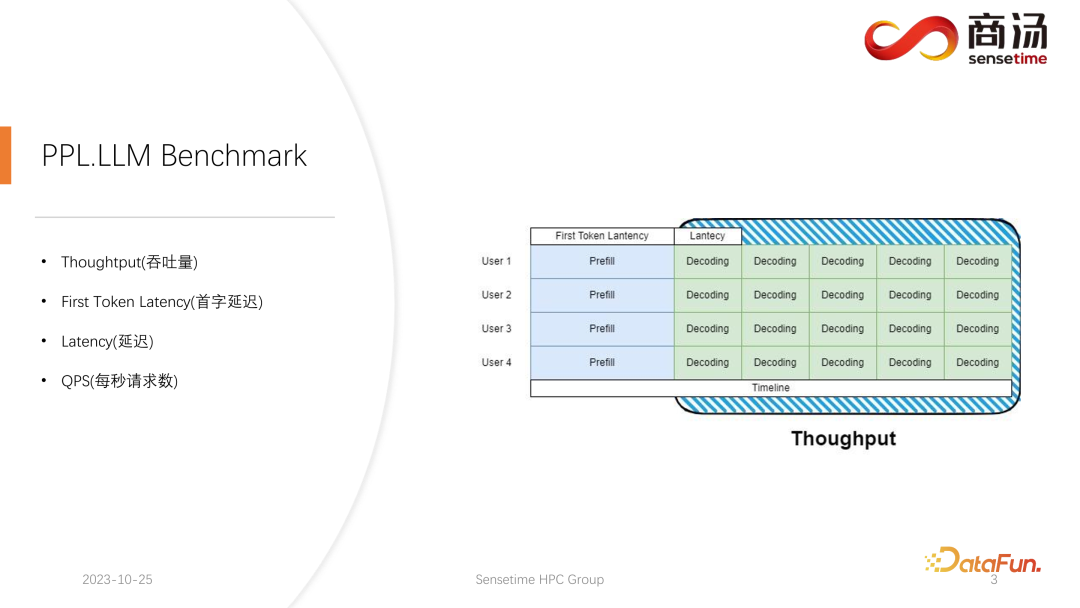

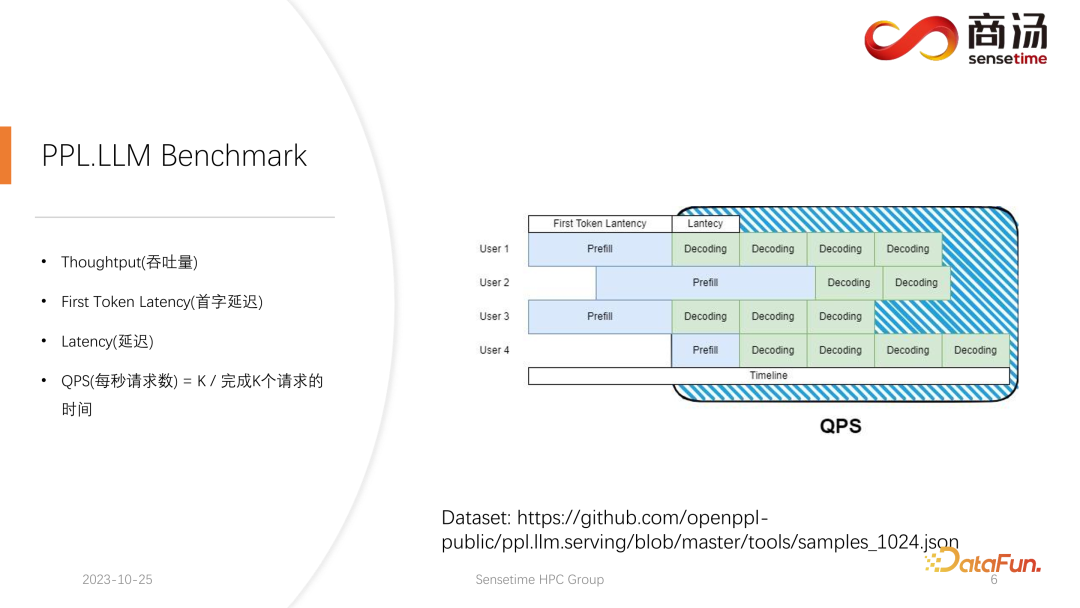

Large language model inference is typically evaluated on four key metrics: throughput, first token latency, latency, and QPS (requests per second). These metrics evaluate the serving capability of the system from different angles. Throughput measures how many tokens the system can generate per unit time, first token latency is the time the system takes to produce its first token, latency describes how long each subsequent token takes, and QPS is the number of requests the system handles per second. Together they guide both performance evaluation and system optimization.

First, throughput. At the model inference level, throughput is the first metric to look at. It is the number of decoding steps the system can perform per unit time at maximum load, that is, the number of tokens it generates. To test throughput we assume that all users arrive at the same time with the same question, start and finish together, and have identical input and output lengths. Identical inputs form one complete batch, and in this case system throughput is maximized. This situation is unrealistic, so the result is a theoretical maximum: we measure how many independent decoding steps the system can perform in one second.

Another key metric is first token latency: the time a request needs to complete the prefill stage after entering the inference system, in other words the response time until the first token is generated. Many users expect an answer to start appearing within 2 to 3 seconds of submitting a question.

Another important metric is latency, the time required for each decoding step. It reflects the interval between consecutive tokens during real-time generation and therefore how smooth the output feels. We typically want latency to stay under 50 milliseconds, which corresponds to at least 20 tokens per second, so that generation reads smoothly.

The last metric is QPS (requests per second). It reflects how many user requests an online service can process per second. Measuring it is relatively complex and is described later.
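As a small worked example of the per-request metrics, the sketch below derives first token latency and decoding latency from token arrival timestamps. The function names and the timeline are hypothetical; times are kept in integer milliseconds so the arithmetic stays exact.

```python
from typing import List

def first_token_latency_ms(request_ms: int, token_ms: List[int]) -> int:
    # Time from the request entering the system to the first generated token.
    return token_ms[0] - request_ms

def mean_decode_latency_ms(token_ms: List[int]) -> float:
    # Average gap between consecutive tokens after the first one.
    gaps = [later - earlier for earlier, later in zip(token_ms, token_ms[1:])]
    return sum(gaps) / len(gaps)

def tokens_per_second(latency_ms: float) -> float:
    return 1000.0 / latency_ms

# Hypothetical timeline: request at t=0 ms, first token at 500 ms,
# then one token every 50 ms.
token_times = [500, 550, 600, 650]
ttft = first_token_latency_ms(0, token_times)
latency = mean_decode_latency_ms(token_times)
rate = tokens_per_second(latency)
print(ttft, latency, rate)
```

A 50 ms decoding latency corresponds to 20 tokens per second, the smoothness target mentioned above.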

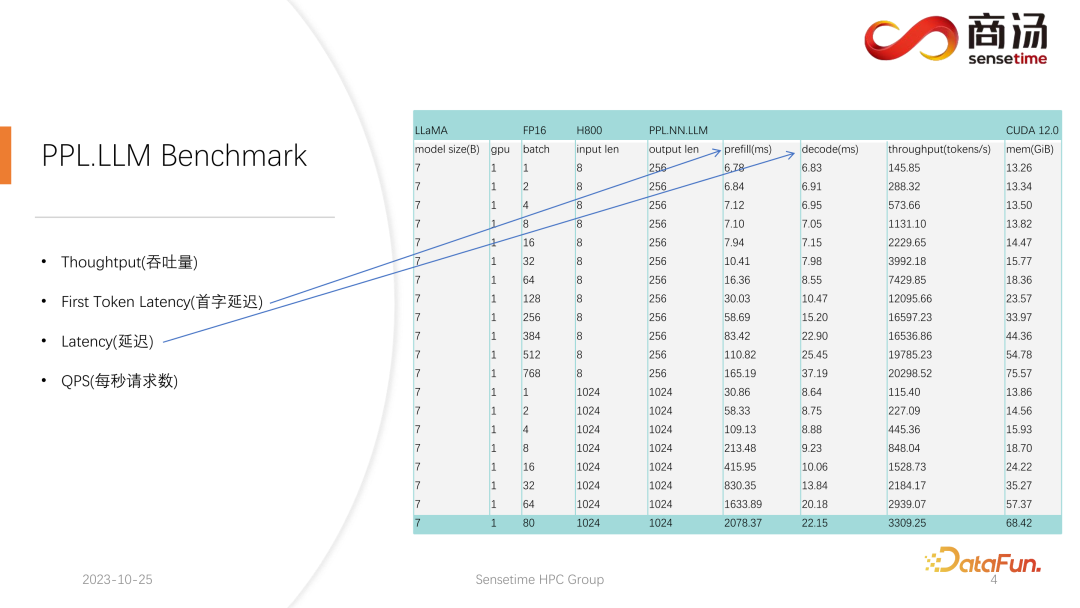

We ran fairly complete tests on first token latency and latency. Both vary considerably with the length of the user input and with the batch size.

As the table above shows, for the same 7B model, when the user input length grows from 8 to 2048 tokens the prefill time grows from 6.78 milliseconds to 2078 milliseconds, about 2 seconds. With 80 users each entering 1,024 tokens, prefill takes about 2 seconds on the server, which is beyond the acceptable range. If the input is very short, for example 8 tokens per request, the first token latency is only about 165 milliseconds even when 768 users arrive at the same time.

First token latency depends most strongly on the user's input length: the longer the input, the higher the first token latency. When inputs are short, first token latency is not a bottleneck for the inference process.

As for decoding latency, unless the model is at the 100-billion-parameter scale it usually stays within 50 milliseconds. It is mainly affected by batch size: the larger the batch, the higher the latency, although the increase is generally modest.

Throughput is affected by both factors. If the user input and the generated output are both long, system throughput will not be very high. If both are short, system throughput can reach extremely high levels.

Now for QPS. QPS is a very concrete metric: it indicates how many requests per second the system can handle. We run this test on real data. (We have sampled this data and published it on GitHub.)

QPS is not measured the same way as throughput, because in a real deployment the arrival time of each user is uncertain. Some users arrive early and some arrive late, and the generation length after prefill is also uncertain. Some requests finish after generating 4 tokens, while others need to generate more than 20.

In real online inference, generation lengths differ between users, which creates a problem: some requests finish early while others keep generating for much longer. During such a run the GPU sits idle in many places. As a result, QPS in practice cannot take full advantage of the throughput. Throughput may be high, yet the real processing capacity can be poor because the schedule is full of holes in which the GPU does no useful work. For QPS we therefore apply a number of specific optimizations that avoid these holes and keep the GPU utilized, so that throughput actually serves users.
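The "holes" can be quantified with a small sketch. It assumes a naive static batch that keeps running until its longest request finishes, so every request that ends early leaves its slot idle. The function name and the example lengths are hypothetical.

```python
from typing import List

def static_batch_utilization(gen_lengths: List[int]) -> float:
    """Fraction of decode slots doing useful work when the whole batch
    runs until its longest request has finished."""
    steps = max(gen_lengths)           # the batch runs this many decode steps
    useful = sum(gen_lengths)          # slots that actually produced a token
    return useful / (steps * len(gen_lengths))

# Throughput-test conditions: every request generates the same length.
ideal = static_batch_utilization([20, 20, 20, 20])
# Online conditions: lengths differ, so short requests leave holes.
mixed = static_batch_utilization([4, 20, 8, 20])
print(ideal, mixed)
```

With equal lengths every slot is used, while the mixed batch uses only 52 of its 80 slots. That gap is the difference between the theoretical throughput and what QPS can actually exploit.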

2. Optimizing the inference performance of large language models

Next, let's walk through the inference process and the optimizations that let the system achieve good results on QPS, throughput and the other metrics.

1. LLM inference process

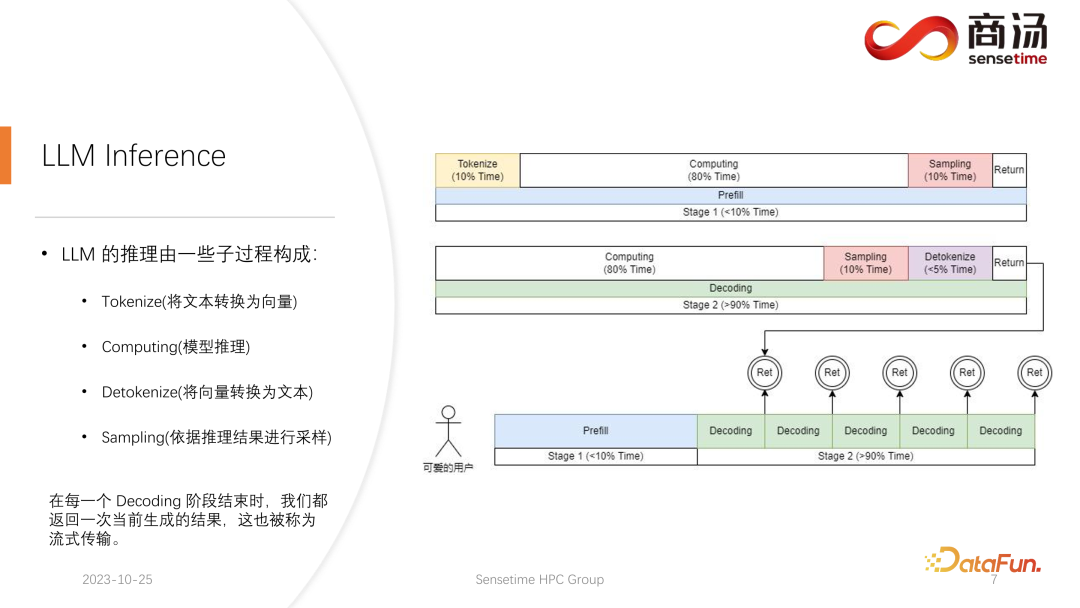

First, the inference process in detail. As mentioned above, each request goes through two stages, prefill and decoding. The prefill stage does at least four things:

The first is tokenization, which converts the text entered by the user into a vector of token IDs. It takes about 10% of the prefill stage, so it is not free.

Next comes the actual prefill computation, which takes about 80% of the time.

After the computation comes sampling. In PyTorch this is typically done with operations such as sample and top-p; argmax is also used in large language model inference. In every case the step picks the final token from the model's output, and it takes about 10% of the time.

Finally, the prefill result is returned to the client, which is relatively quick and accounts for about 2% to 5% of the time.

The decoding stage needs no tokenization: every decoding step starts directly with computation. The computation takes about 80% of the time, and the subsequent sampling, which generates the next token, takes about 10%. Decoding does, however, need detokenization: the generated token is an ID and has to be decoded back to text, which takes about 5% of the time. Finally, the generated text is returned to the user.

When a new request arrives, prefill runs once and decoding is then performed iteratively. After each decoding step the result is returned to the client immediately. This generation pattern is very common in large language models and is called streaming.

2. Optimization: pipeline pre- and post-processing and high-performance sampling

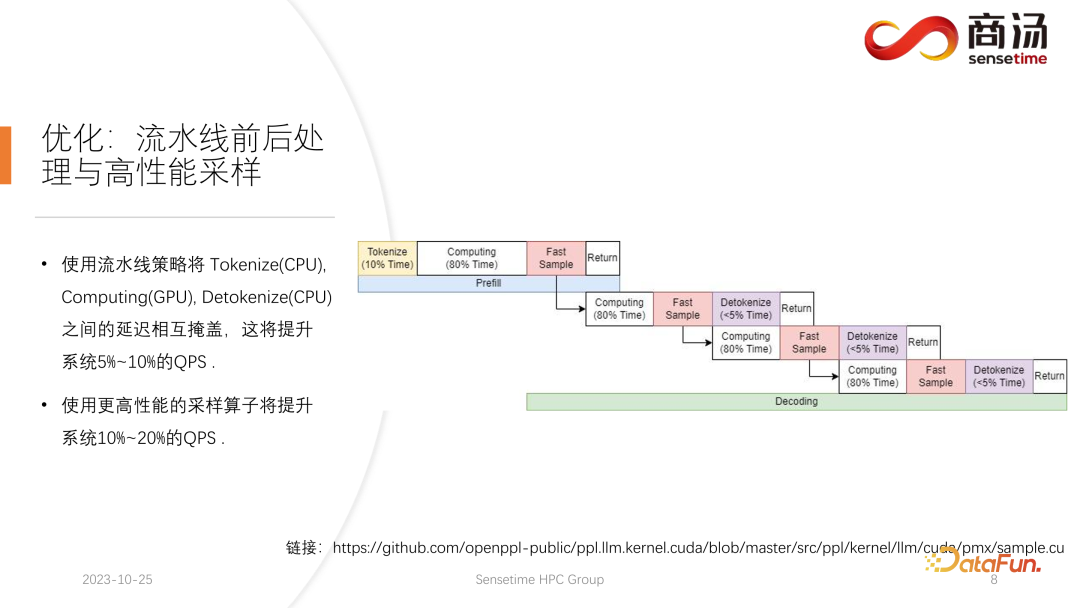

The first optimization is pipelining, whose purpose is to maximize GPU utilization.

In large language model inference, tokenization, fast sampling and detokenization are independent of the model computation. Picture the whole inference as a pipeline. During prefill, as soon as fast sampling has produced the next token, the next decoding step can start without waiting for the result to be returned, because the token is already on the GPU. Likewise, when a decoding step finishes, the next one can start immediately without waiting for detokenization. Detokenization is a CPU process, and the last two steps only return results to the user and involve no GPU work. Once sampling has run we already know the next token, so we have all the data needed to start the next step right away without waiting for those two steps to finish.

PPL.LLM implements this with three thread pools:

The first thread pool runs tokenization;

The middle thread pool runs the model computation;

The third thread pool runs the subsequent fast sampling, detokenization and returning of results.

The three thread pools run asynchronously and isolate these three sources of delay from one another, hiding as much of the delay as possible. This brings a 10% to 20% QPS improvement and is the first optimization we made.
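The three-stage structure can be sketched with Python's standard `queue` and `threading` modules. This is not PPL.LLM's implementation: one thread per stage stands in for each thread pool, and `tokenize`, `compute` and `sample_and_detokenize` are toy placeholders invented for the example.

```python
import queue
import threading
from typing import Callable, Dict, List

def tokenize(text: str) -> List[int]:
    # Toy tokenizer (assumption): lowercase letters map to IDs 0..25.
    return [ord(c) - 97 for c in text]

def compute(token_ids: List[int]) -> List[int]:
    # Stand-in for the GPU forward pass: "logits" are letter counts.
    logits = [0] * 26
    for t in token_ids:
        logits[t] += 1
    return logits

def sample_and_detokenize(logits: List[int]) -> str:
    # Argmax sampling followed by conversion back to text.
    best = max(range(len(logits)), key=logits.__getitem__)
    return chr(97 + best)

def stage(fn: Callable, inbox: queue.Queue, outbox: queue.Queue) -> None:
    # Each stage pulls work, processes it, and hands it on without waiting
    # for the downstream stage to finish.
    while True:
        item = inbox.get()
        if item is None:            # sentinel: propagate shutdown
            outbox.put(None)
            return
        request_id, payload = item
        outbox.put((request_id, fn(payload)))

def run_pipeline(prompts: List[str]) -> Dict[int, str]:
    q_in, q_tok, q_gpu, q_out = (queue.Queue() for _ in range(4))
    workers = [
        threading.Thread(target=stage, args=(tokenize, q_in, q_tok)),
        threading.Thread(target=stage, args=(compute, q_tok, q_gpu)),
        threading.Thread(target=stage, args=(sample_and_detokenize, q_gpu, q_out)),
    ]
    for w in workers:
        w.start()
    for i, prompt in enumerate(prompts):
        q_in.put((i, prompt))
    q_in.put(None)
    results: Dict[int, str] = {}
    while True:
        item = q_out.get()
        if item is None:
            break
        results[item[0]] = item[1]
    for w in workers:
        w.join()
    return results

results = run_pipeline(["abc", "hello", "xyz"])
print(results)
```

While the middle stage works on one request, the first stage can already tokenize the next and the last stage can still be post-processing the previous one, which is how the three delays overlap.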

3. Optimization: Dynamic batch processing

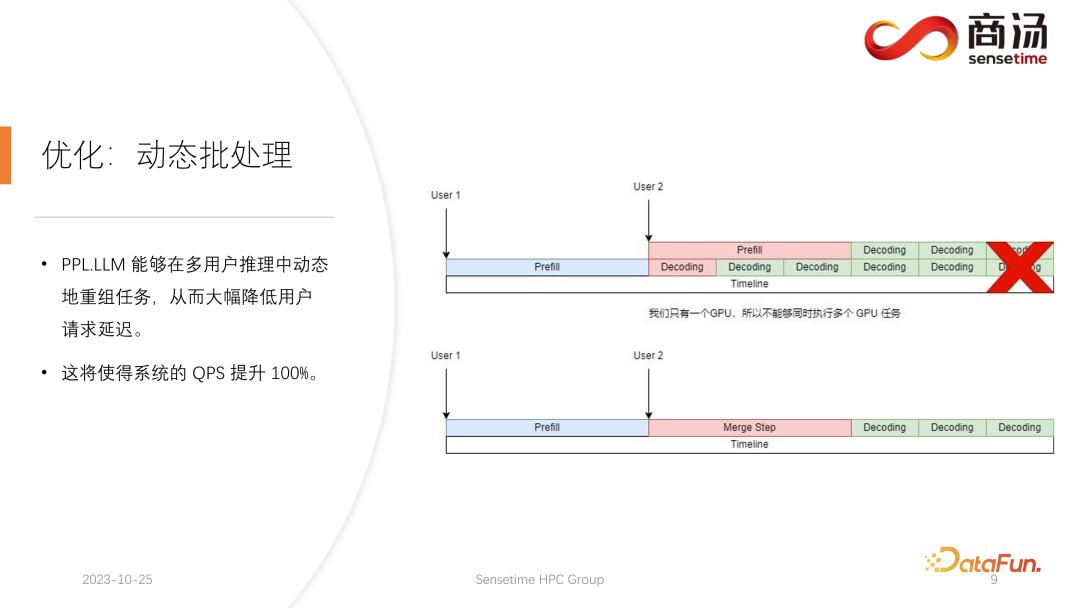

Beyond this, PPL.LLM applies another, more interesting optimization called dynamic batching.

As mentioned above, in real inference users differ in both generation length and arrival time. So the following situation arises: the GPU is already serving one request and, halfway through, a second request arrives. The generation process of the second request then conflicts with that of the first. With only one GPU, on which tasks run serially, the two cannot simply be run in parallel.

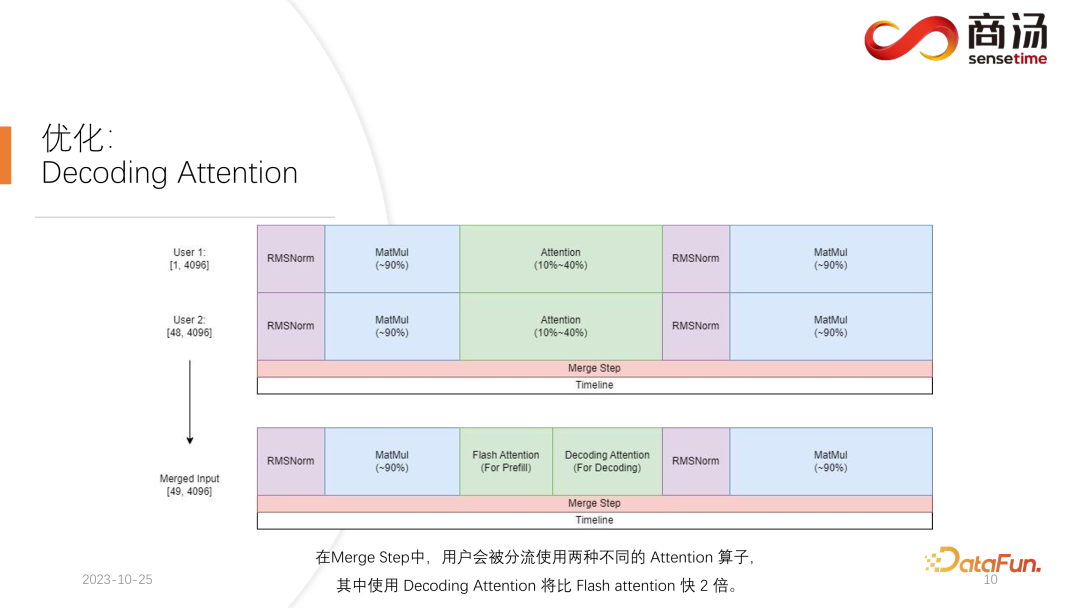

Our approach is to mix the prefill stage of the second request with the decoding step of the first request into a new kind of step called the Merge Step. A Merge Step performs both the decoding of the first request and the prefill of the second. Many large language model inference systems have this feature, and implementing it increased QPS by 100%.

Concretely, the first request is halfway through generation, so its decoding input has length 1. The second request is new, and its prefill input has length 48. Concatenating the two along the first dimension gives an input of length 49 with a hidden dimension of 4096. Within this input, the first token belongs to the first request and the remaining 48 tokens belong to the second.

The operators involved in large model inference, such as RMSNorm, matrix multiplication and attention, have the same structure whether they are used for decoding or for prefill, so the concatenated input can be fed through the whole network directly. Only one place needs special treatment: attention. In the self-attention operator the data is split, with all decoding requests routed into one group and all prefill requests into another, and each group runs a different operation. Prefill requests run Flash Attention, while decoding requests run a special operator called Decoding Attention. After attention has run separately, the results are concatenated again and the remaining operators run on the combined tensor.

In practice, whenever a request arrives, its input is concatenated with the inputs of all requests currently in the system to perform a Merge Step, after which decoding continues. This is how dynamic batching is implemented for large language models.
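The bookkeeping behind a Merge Step can be illustrated with plain Python lists. The helper names `merge_inputs` and `split_for_attention` are hypothetical, and the hidden size is reduced from 4096 to 8 to keep the example small.

```python
from typing import List, Tuple

Row = List[float]                       # one token's hidden vector
Span = Tuple[str, str, int, int]        # (request id, kind, start, end)

def merge_inputs(requests: List[Tuple[str, str, List[Row]]]) -> Tuple[List[Row], List[Span]]:
    """Concatenate all request inputs along the token dimension and
    remember which rows belong to which request."""
    merged: List[Row] = []
    spans: List[Span] = []
    for req_id, kind, rows in requests:
        if kind == "decode" and len(rows) != 1:
            raise ValueError("a decoding request contributes exactly one token")
        start = len(merged)
        merged.extend(rows)
        spans.append((req_id, kind, start, len(merged)))
    return merged, spans

def split_for_attention(merged: List[Row], spans: List[Span]):
    """At the attention operator only, route rows to two different kernels."""
    decode = [(rid, merged[s:e]) for rid, kind, s, e in spans if kind == "decode"]
    prefill = [(rid, merged[s:e]) for rid, kind, s, e in spans if kind == "prefill"]
    return decode, prefill

hidden = 8
req_a = [[0.0] * hidden]                        # mid-generation: 1 token
req_b = [[1.0] * hidden for _ in range(48)]     # new request: 48 prompt tokens
merged, spans = merge_inputs([("A", "decode", req_a), ("B", "prefill", req_b)])
decode_part, prefill_part = split_for_attention(merged, spans)
print(len(merged), spans)
```

Operators such as RMSNorm and matrix multiplication would run on all 49 rows of `merged` at once; only attention needs the two groups returned by `split_for_attention`.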

4. Optimization: Decoding Attention

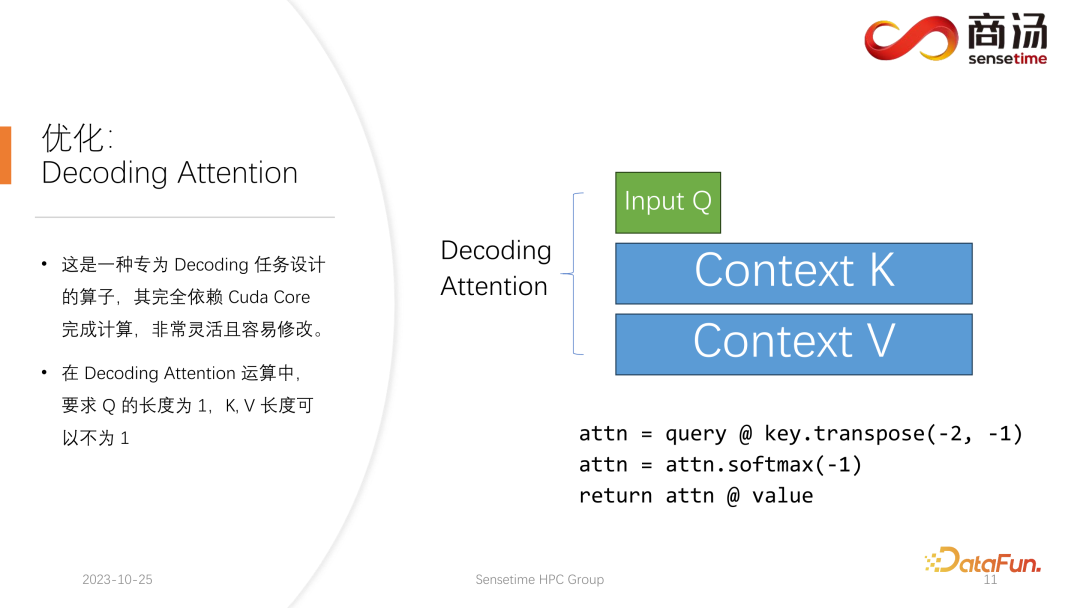

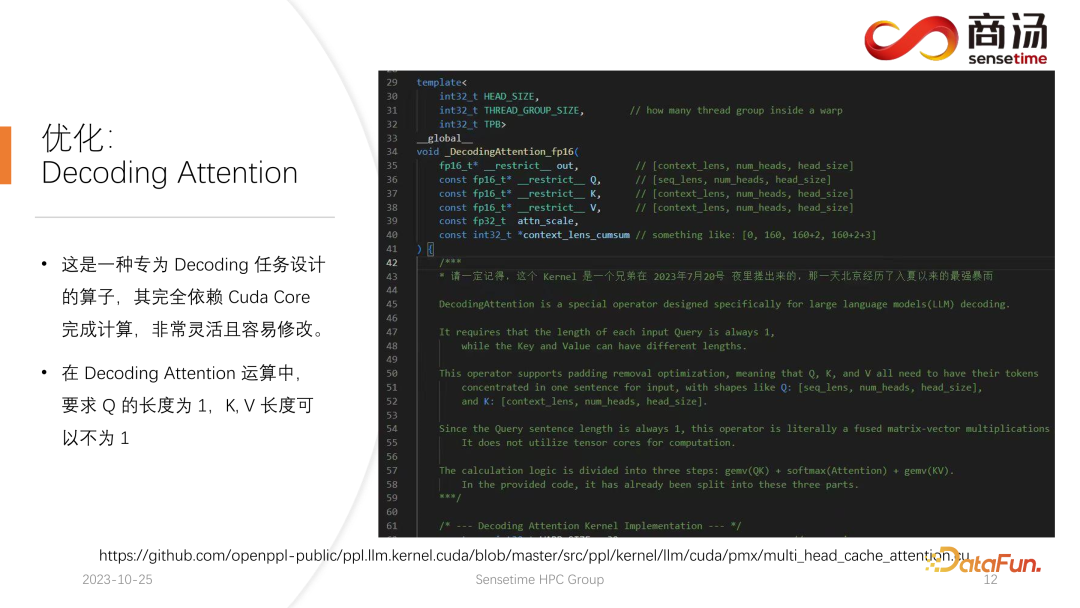

The Decoding Attention operator is not as well known as Flash Attention, but it is actually much faster than Flash Attention on decoding tasks.

It is an operator designed specifically for decoding. It relies entirely on CUDA cores and does not use Tensor Cores, which makes it flexible and easy to modify. It has one limitation: because it targets decoding tensors, the length of the input q must be 1, while the lengths of k and v are variable. Within this limitation we can apply some specific optimizations.

These optimizations make the attention operator in the decoding stage faster than Flash Attention. The implementation is open source and is available at the URL in the picture above.
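To make the q-length-1 constraint concrete, here is a reference computation of single-query attention in plain Python. It only shows the math that such an operator evaluates (scaled dot product, softmax, weighted sum of values); it says nothing about how the actual CUDA kernel is organized, and the function name is hypothetical.

```python
import math
from typing import List

def decoding_attention(q: List[float],
                       keys: List[List[float]],
                       values: List[List[float]]) -> List[float]:
    """Attention for one query vector against a variable-length KV cache."""
    scale = 1.0 / math.sqrt(len(q))
    # One score per cached key: the score "matrix" is a single row.
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    # Full softmax over that row, with max subtraction for stability.
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    out = [0.0] * len(values[0])
    for w, v in zip(weights, values):
        for i, vi in enumerate(v):
            out[i] += (w / total) * vi
    return out

# Two cached positions with identical keys receive equal weight.
result = decoding_attention(q=[1.0, 0.0],
                            keys=[[0.0, 0.0], [0.0, 0.0]],
                            values=[[1.0, 3.0], [3.0, 5.0]])
print(result)
```

Because there is only one query row, the score vector has just as many entries as there are cached tokens, so the whole softmax can be evaluated directly.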

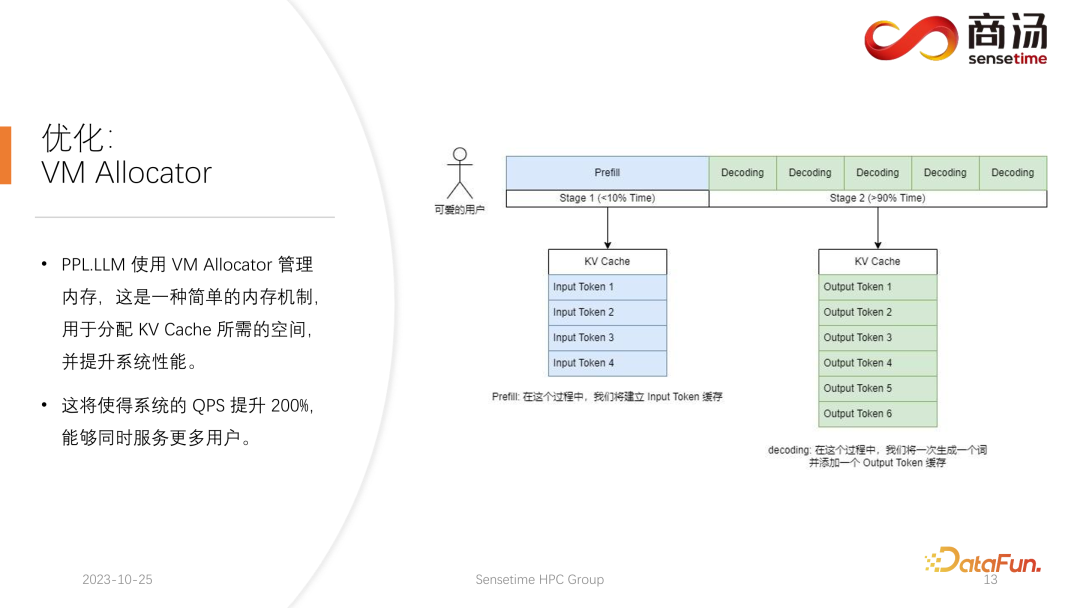

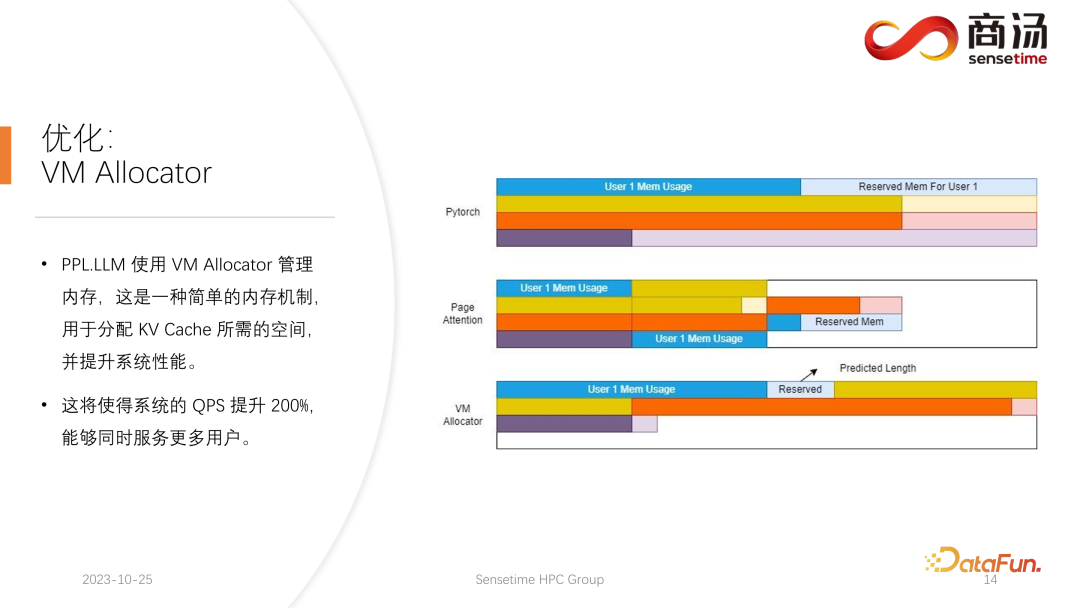

5. Optimization: VM Allocator

Another optimization is the Virtual Memory Allocator, which corresponds to the Page Attention optimization. When a request arrives it goes through the prefill stage and the decoding stage, and all of its tokens generate KV cache entries that record the full history of the request. How much KV cache space should be allocated to a request so that it can complete its generation task? Allocating too much wastes GPU memory. Allocating too little means the request hits the end of its KV cache during decoding and cannot continue generating.

There are three ways to solve this problem.

PyTorch-style memory management reserves a sufficiently long space for each request, usually 2048 or 4096 tokens, so that up to 4096 tokens can be generated. Most users generate far fewer tokens, so a lot of memory is wasted.

Page Attention manages GPU memory differently. It lets a request keep acquiring GPU memory during generation, similar to paging in an operating system. When a request arrives the system allocates it a small block of GPU memory, usually only enough for 8 tokens. Once the request has generated 8 tokens, the system appends another block and the results are written to it. The system maintains a linked list between the blocks so that operators can read and write them normally. As the generated length keeps growing, more blocks are allocated to the request and the block list is maintained dynamically, so the system neither wastes much memory nor needs to reserve a large space for the request.

PPL.LLM uses a Virtual Memory management mechanism that predicts the required generation length for each request. When a request arrives it is directly allocated a contiguous space whose length is the predicted one. This can be hard to achieve in practice, especially in online inference, where it is impossible to know exactly how much content each request will generate. We therefore recommend training a model to make the prediction. Even a scheme like Page Attention runs into a related problem. Suppose that at some point four requests are running and six GPU memory blocks remain unallocated. We cannot know whether a newly arriving request can be served, because the four current requests have not finished and may need additional blocks later. So even with Page Attention it is still necessary to predict the actual generation length of each user; only then can we know whether a new user can be admitted at a given point in time.

None of today's inference systems can do this, including PPL. Even so, the Virtual Memory management mechanism avoids most of the GPU memory waste and raises the overall QPS of the system to about 200%.
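The block-by-block scheme described for Page Attention can be sketched as a tiny allocator. The class `PagedKVAllocator` and its methods are hypothetical names for this illustration, and a per-request Python list plays the role of the linked list of blocks.

```python
from typing import Dict, List

class PagedKVAllocator:
    def __init__(self, num_blocks: int, block_tokens: int = 8) -> None:
        self.block_tokens = block_tokens
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_table: Dict[str, List[int]] = {}   # request -> its blocks
        self.num_tokens: Dict[str, int] = {}

    def append_token(self, req_id: str) -> bool:
        """Reserve room for one more token; False means memory is exhausted."""
        blocks = self.block_table.setdefault(req_id, [])
        used = self.num_tokens.get(req_id, 0)
        if used == len(blocks) * self.block_tokens:   # current blocks are full
            if not self.free_blocks:
                return False
            blocks.append(self.free_blocks.pop(0))
        self.num_tokens[req_id] = used + 1
        return True

    def reserved_tokens(self, req_id: str) -> int:
        return len(self.block_table.get(req_id, [])) * self.block_tokens

    def release(self, req_id: str) -> None:
        # Return every block of a finished request to the free list.
        self.free_blocks.extend(self.block_table.pop(req_id, []))
        self.num_tokens.pop(req_id, None)

alloc = PagedKVAllocator(num_blocks=4, block_tokens=8)
for _ in range(9):
    alloc.append_token("r1")
print(alloc.block_table["r1"], alloc.reserved_tokens("r1"))
```

After 9 tokens the request holds two blocks, that is room for 16 tokens, instead of a fixed 2048 or 4096 token reservation. The sketch also shows the admission problem from the text: the free list says how many blocks remain now, not how many the running requests will still ask for.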

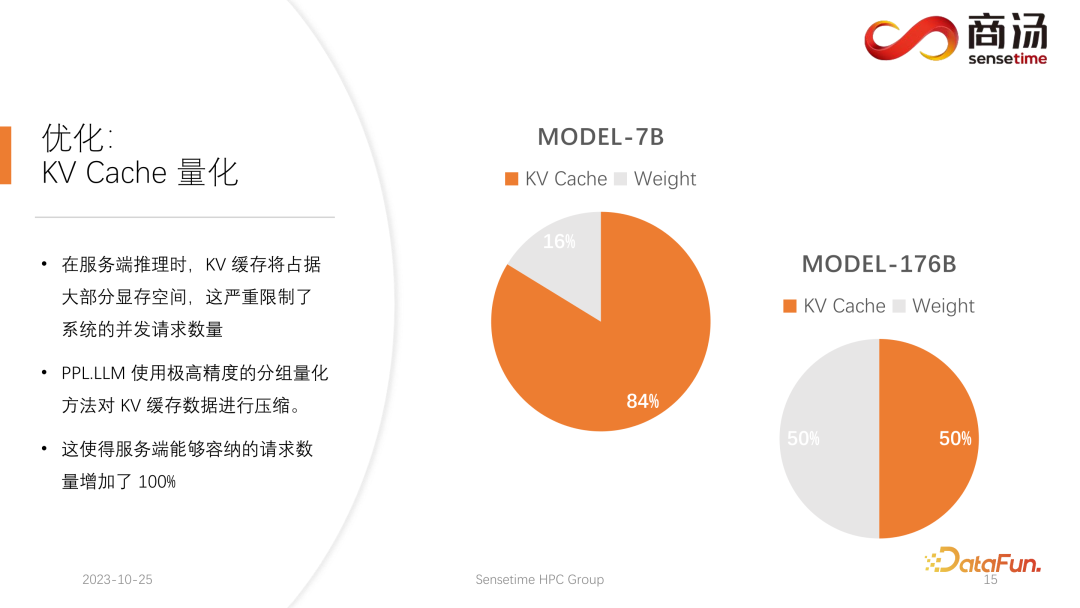

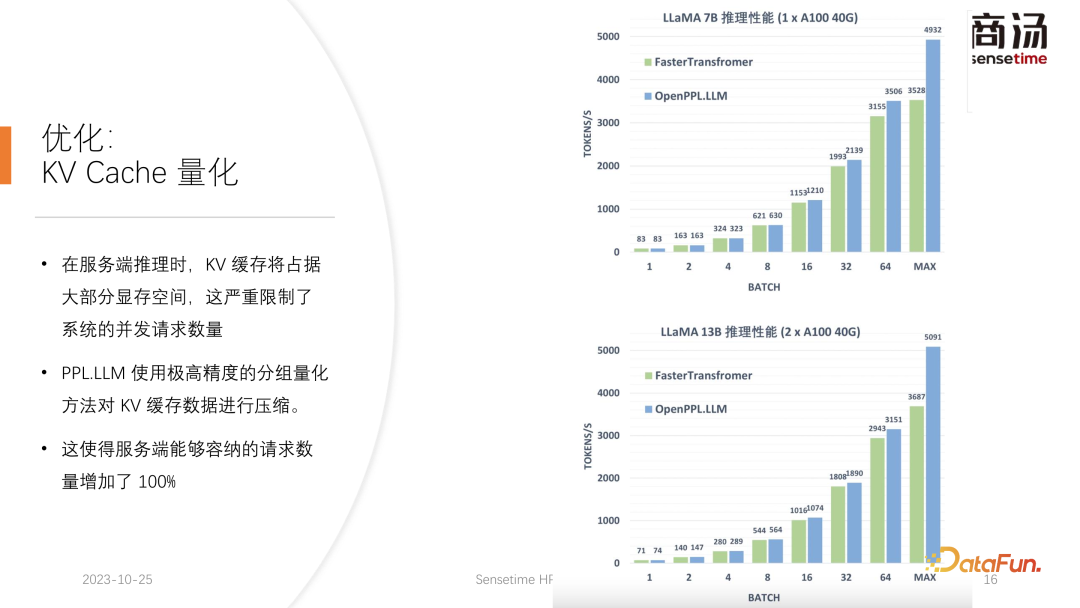

6. Optimization: KV cache quantization

Another optimization in PPL.LLM is quantization of the KV cache. During server-side inference the KV cache occupies most of the GPU memory, which severely limits the number of concurrent requests.

When a large language model such as a 7B model runs on the server side, especially on GPUs with large memory such as the A100 and H100, the KV cache occupies 84% of the GPU memory, and for a large model such as 176B it still occupies more than 50%. This severely limits concurrency: every arriving request needs a large allocation of GPU memory, so the number of requests cannot grow and neither QPS nor throughput can improve.

PPL.LLM compresses the data in the KV cache with a special quantization method, group quantization, which quantizes the original FP16 data to INT8. This reduces the size of the KV cache by 50% and increases the number of requests the server can hold by 100%.
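A minimal sketch of group quantization follows. It assumes a symmetric scheme with one shared scale per group and a hypothetical group size of 4; the source does not state which variant or group size PPL.LLM uses.

```python
from typing import List, Tuple

Group = Tuple[List[int], float]     # (INT8 values, shared scale)

def quantize_group(values: List[float]) -> Group:
    """Symmetric INT8 quantization of one group (assumed scheme)."""
    absmax = max(abs(v) for v in values)
    scale = absmax / 127.0 if absmax > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def quantize_kv(row: List[float], group_size: int) -> List[Group]:
    # Each group gets its own scale, so a large value only costs precision
    # inside its own group.
    return [quantize_group(row[i:i + group_size])
            for i in range(0, len(row), group_size)]

def dequantize_kv(groups: List[Group]) -> List[float]:
    return [q * scale for qs, scale in groups for q in qs]

row = [0.5, -1.0, 0.25, 0.0, 8.0, -4.0, 2.0, 1.0]
groups = quantize_kv(row, group_size=4)
restored = dequantize_kv(groups)
max_error = max(abs(a - b) for a, b in zip(row, restored))
print(groups, max_error)
```

Each value shrinks from 2 bytes to 1 byte, which is where the 50% saving comes from; the per-group scales add a small overhead that this sketch does not account for.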

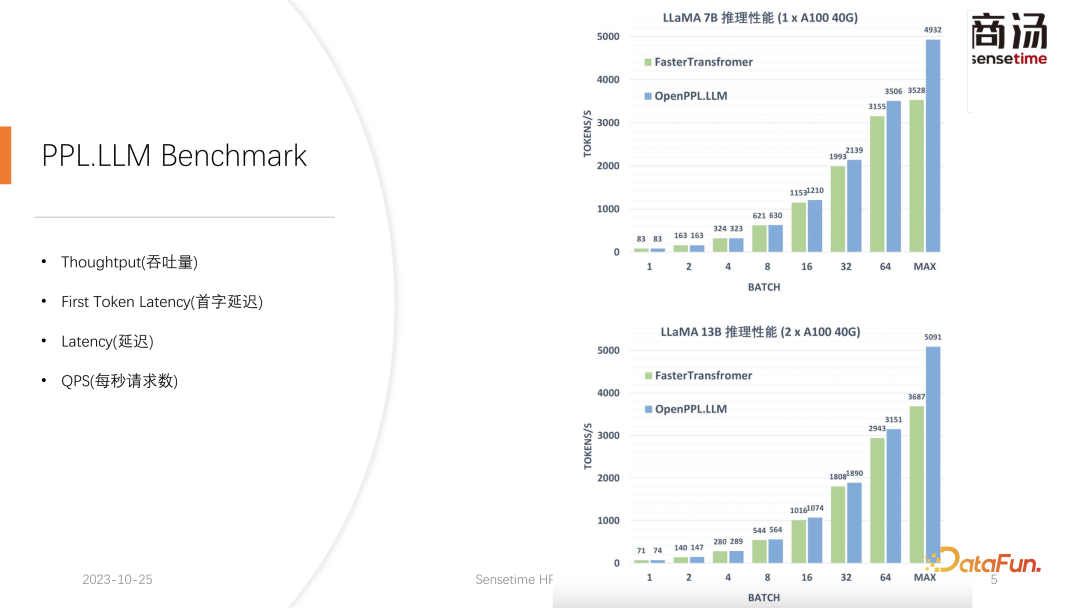

The roughly 50% throughput improvement over Faster Transformer comes precisely from the larger batch size made possible by KV cache quantization.

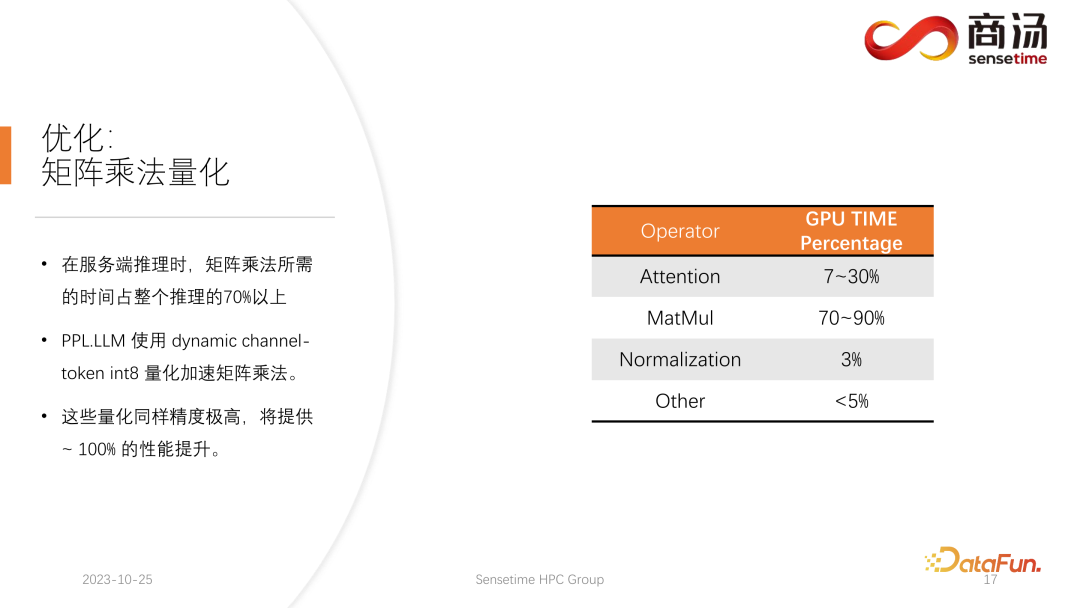

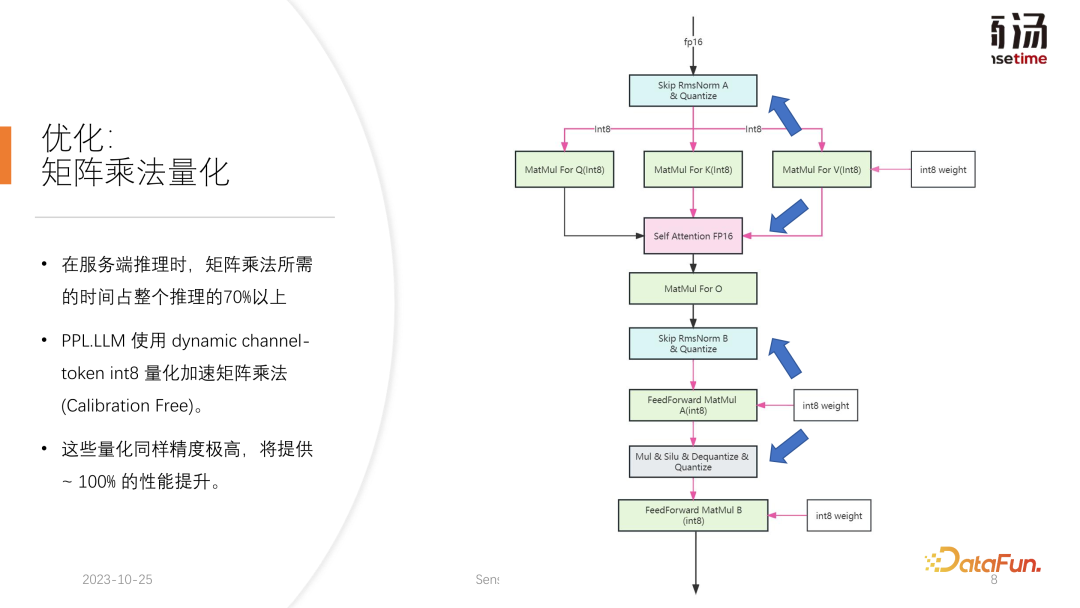

7. Optimization: Matrix multiplication quantization

After KV cache quantization, we applied a finer-grained quantization to matrix multiplication. Across server-side inference, matrix multiplication accounts for more than 70% of the total inference time. PPL.LLM accelerates it with a dynamic hybrid quantization scheme that alternates between per-channel and per-token quantization. The quantization remains highly accurate and improves performance by nearly 100%.

Concretely, a quantization operator is fused into the RMSNorm operator. On top of the RMSNorm function it collects per-token statistics, namely the maximum and minimum values of each token, and quantizes the data along the token dimension. The output of RMSNorm is thus converted from FP16 to INT8, and this quantization is fully dynamic and requires no calibration. The three Q, K and V matrix multiplications that follow are quantized per channel. Their inputs are INT8 and their weights are also INT8, so they run as full INT8 matrix multiplications. Their output feeds Soft Attention after a dequantization step, which is fused into the Soft Attention operator.

The subsequent O matrix multiplication is not quantized, and Soft Attention itself performs no quantized computation. In the FeedForward block, the two matrix multiplications are quantized in the same way, with the quantization fused into the preceding RMSNorm or into the preceding activation functions such as SiLU and Mul. Their dequantization operators are fused into the downstream operators.
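The combination of per-token activation scales and per-channel weight scales can be illustrated in plain Python. This sketch uses symmetric absmax scaling as a simplifying assumption (the text mentions per-token maximum and minimum statistics), and the function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def quantize_rows(m: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric INT8 quantization with one scale per row."""
    q_rows: List[List[int]] = []
    scales: List[float] = []
    for row in m:
        absmax = max(abs(v) for v in row)
        scale = absmax / 127.0 if absmax > 0 else 1.0
        q_rows.append([round(v / scale) for v in row])
        scales.append(scale)
    return q_rows, scales

def int8_matmul(x: Matrix, w_t: Matrix) -> Matrix:
    """x: [tokens, k]; w_t: [out_channels, k], i.e. weights stored transposed."""
    xq, x_scales = quantize_rows(x)      # per token, computed at run time
    wq, w_scales = quantize_rows(w_t)    # per output channel
    out: Matrix = []
    for xi, sx in zip(xq, x_scales):
        row = []
        for wj, sw in zip(wq, w_scales):
            acc = sum(a * b for a, b in zip(xi, wj))   # integer accumulation
            row.append(acc * sx * sw)                  # dequantize the result
        out.append(row)
    return out

x = [[1.0, 2.0], [-3.0, 0.5]]
w_t = [[1.0, 0.0], [0.5, -1.0]]
approx = int8_matmul(x, w_t)
exact = [[sum(a * b for a, b in zip(xi, wj)) for wj in w_t] for xi in x]
print(approx, exact)
```

Because each output element depends on exactly one token scale and one channel scale, dequantization is a single multiplication per output, which is what makes it cheap to fuse into the next operator.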

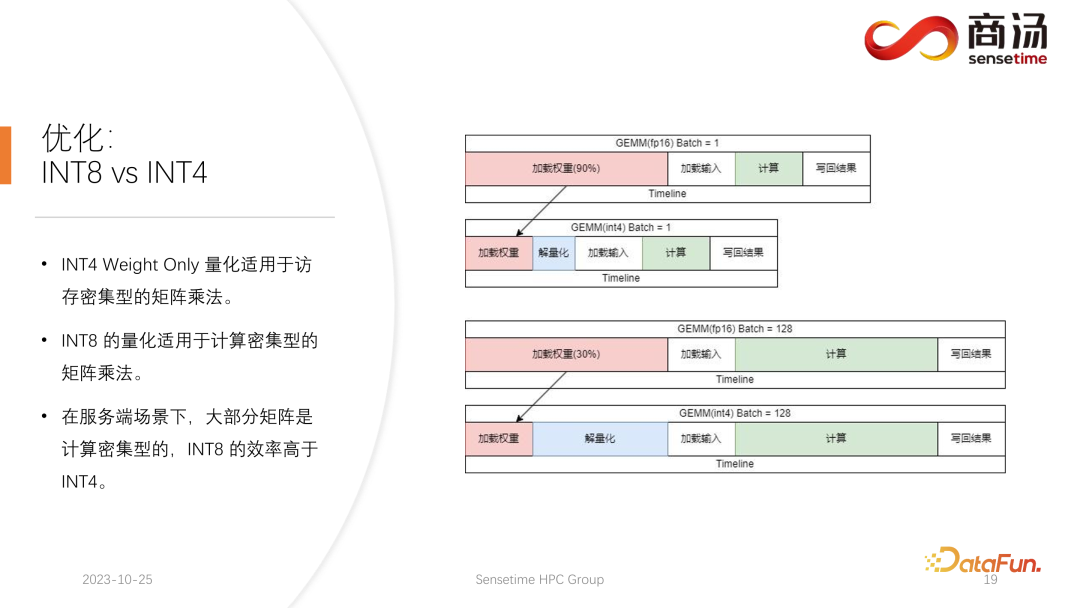

8. Optimization: INT8 vs INT4

Current academic work on quantizing large language models focuses mainly on INT4, but for server-side inference INT8 quantization is actually a better fit.

INT4 quantization is also called weight-only quantization. Its rationale is that when the batch size is relatively small, 90% of the time in a matrix multiplication is spent loading weights. The weights are very large, whereas the inputs (the activations) are small and quick to load, the computation is short, and writing back the results is also quick, so the operator is memory-bound. In this case, provided the batch is small enough, we choose INT4 quantization. Each time INT4 weights are loaded they are dequantized from INT4 to FP16, and after dequantization the computation is exactly the same as in FP16. In other words, INT4 weight-only quantization suits memory-bound matrix multiplication, and the computation itself is still carried out by FP16 compute units.
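The load-then-dequantize pattern can be shown with a small packing example. It assumes symmetric 4-bit quantization with a single scale and two values per byte; the layout and the function names are illustrative, not PPL.LLM's actual format.

```python
from typing import List, Tuple

def quantize_int4(weights: List[float]) -> Tuple[bytes, float]:
    """Symmetric 4-bit quantization; two values are packed into each byte."""
    absmax = max(abs(w) for w in weights)
    scale = absmax / 7.0 if absmax > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    if len(q) % 2:
        q.append(0)                                   # pad to an even count
    packed = bytes(((hi & 0xF) << 4) | (lo & 0xF)
                   for lo, hi in zip(q[0::2], q[1::2]))
    return packed, scale

def dequantize_int4(packed: bytes, scale: float, count: int) -> List[float]:
    def signed(nibble: int) -> int:
        # Interpret a 4-bit two's complement value.
        return nibble - 16 if nibble >= 8 else nibble
    out: List[float] = []
    for byte in packed:
        out.append(signed(byte & 0xF) * scale)        # low nibble first
        out.append(signed(byte >> 4) * scale)
    return out[:count]

weights = [0.7, -0.7, 0.1, 0.3]
packed, scale = quantize_int4(weights)
restored = dequantize_int4(packed, scale, len(weights))
print(len(packed), restored)
```

Four weights occupy 2 bytes instead of the 8 bytes they need in FP16, so the memory traffic for weights drops to a quarter, but every use of a weight first pays for the unpack and multiply in `dequantize_int4`.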

When the batch is large enough, such as 64 or 128, INT4 weight-only quantization brings little performance improvement, because the computation time becomes long. INT4 weight-only quantization also has a serious drawback: the amount of computation needed for dequantization grows with the GEMM batch, so as the input batch increases, dequantization takes longer and longer. By the time the batch size reaches 128, the time lost to dequantization has largely cancelled out the benefit of loading smaller weights, and an INT4 matrix multiplication is barely faster than an FP16 one. At a batch of about 64, INT4 weight-only quantization is only about 30% faster than FP16; at 128 it is only about 20% faster, or even less.

INT8 quantization differs from INT4 most in that it needs no dequantization step, and its computation also takes half the time. At a batch of 128, going from FP16 to INT8 halves both the weight loading time and the computation time, which yields a speedup of about 100%.

In a server-side scenario, with its constant stream of incoming requests, most matrix multiplications are compute-bound. If the goal is maximum throughput, INT8 is actually more efficient than INT4. This is one of the reasons why our implementation so far mainly promotes INT8 on the server side.

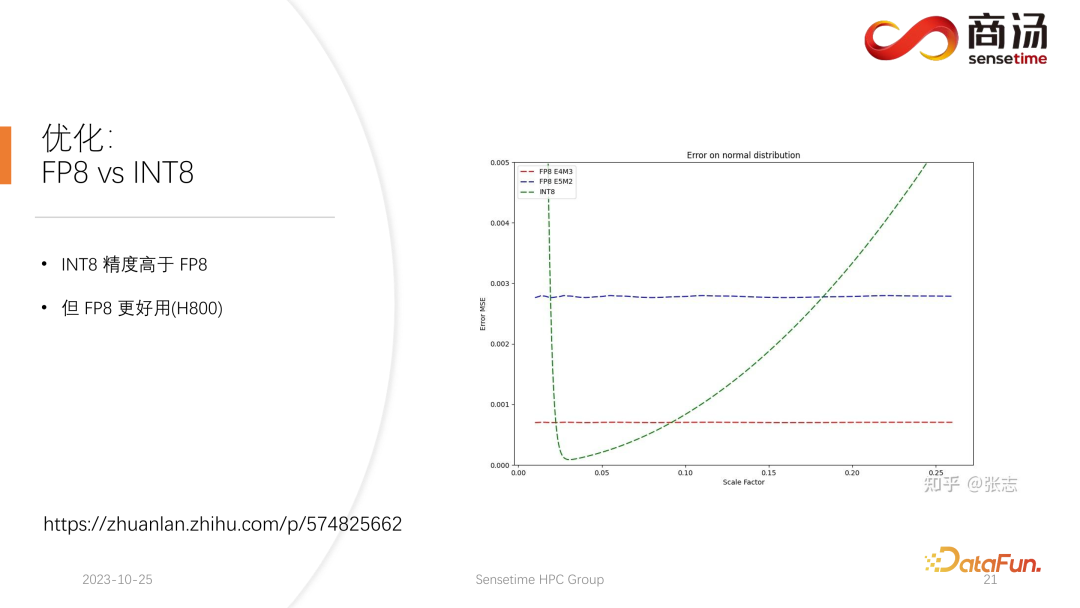

9. Optimization: FP8 vs INT8

On the H100, H800 and 4090 we may use FP8 quantization. FP8 data formats were introduced in Nvidia's latest generation of GPUs. INT8 is in theory more accurate than FP8, but FP8 is easier to use and performs better, and we will push FP8 in subsequent updates to server-side inference. As the figure above shows, the error of FP8 is about 10 times larger than that of INT8. INT8 has a quantization scale factor, and its quantization error can be reduced by tuning that scale factor. The quantization error of FP8 is essentially independent of the scale factor, which means it needs almost no calibration, but its error is generally higher than that of INT8.
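The different sensitivity to the scale factor can be illustrated with a simplified model. `round_to_fp` below rounds to a float with 3 explicit mantissa bits and deliberately ignores exponent range, subnormals and saturation, so it is not an exact FP8 implementation; all names and sample values are hypothetical.

```python
import math
from typing import Callable, List

def round_to_fp(x: float, mantissa_bits: int = 3) -> float:
    """Round x to a float with `mantissa_bits` explicit mantissa bits.
    Simplification: exponent limits and subnormals are ignored."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)                  # x = m * 2**e with 0.5 <= |m| < 1
    steps = 1 << (mantissa_bits + 1)      # implicit leading bit + explicit bits
    return math.ldexp(round(m * steps) / steps, e)

def quantize_int8(x: float, scale: float) -> float:
    q = max(-127, min(127, round(x / scale)))
    return q * scale

def mean_abs_error(values: List[float], fn: Callable[[float], float]) -> float:
    return sum(abs(v - fn(v)) for v in values) / len(values)

values = [0.1, -0.37, 0.52, 0.9, -1.0]

# INT8: the error depends on how well the scale matches the data range.
err_tight = mean_abs_error(values, lambda v: quantize_int8(v, 1.0 / 127.0))
err_loose = mean_abs_error(values, lambda v: quantize_int8(v, 8.0 / 127.0))

# Floating point: rescaling the data by a power of two rescales the rounded
# value by the same factor, so the relative error does not change.
scale_invariant = all(round_to_fp(4.0 * v) == 4.0 * round_to_fp(v) for v in values)
print(err_tight, err_loose, scale_invariant)
```

In this model a well-chosen INT8 scale gives a much smaller error than a poorly chosen one, while the floating-point rounding behaves the same at any power-of-two scale, which matches the observation that FP8 needs almost no calibration.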

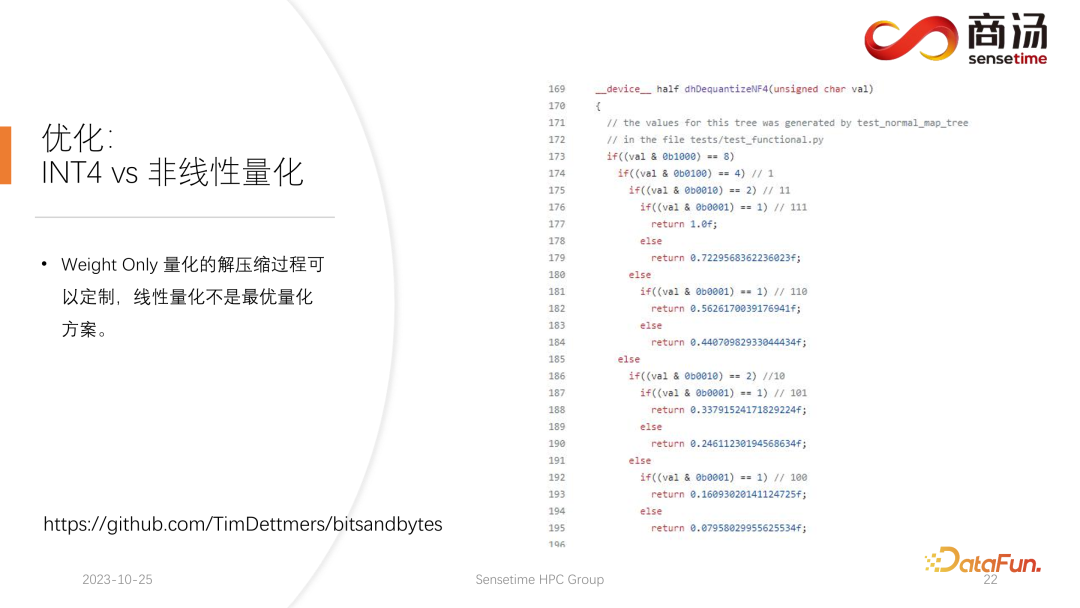

10. Optimization: INT4 vs non-linear quantization

In subsequent updates PPL.LLM will also update its INT4 matrix quantization. This weight-only quantization mainly serves the device side, for devices such as mobile terminals where the batch is fixed at 1. It will gradually move from INT4 to non-linear quantization. Weight-only computation includes a dequantization step, and this step is customizable: it does not have to be linear. Using other quantization and dequantization functions can make the computation more accurate.

A typical example is the NF4 quantization proposed in a paper. It quantizes and dequantizes through a lookup table and is a kind of non-linear quantization. In subsequent updates of PPL.LLM we will try such quantization to optimize device-side inference.
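Table-based quantization can be sketched as follows. The 16-entry `CODEBOOK` is a made-up example that is denser near zero; it is not the actual NF4 table, and the function names are hypothetical.

```python
from typing import List, Tuple

# Hypothetical 16-entry codebook (4-bit codes), denser near zero.
# These values are illustrative only, not the NF4 table from the paper.
CODEBOOK = [-1.0, -0.7, -0.5, -0.35, -0.25, -0.15, -0.08, 0.0,
            0.08, 0.15, 0.25, 0.35, 0.5, 0.7, 0.85, 1.0]

def nearest_code(x: float) -> int:
    # Quantization: pick the index of the closest table entry.
    return min(range(len(CODEBOOK)), key=lambda i: abs(CODEBOOK[i] - x))

def quantize(weights: List[float]) -> Tuple[List[int], float]:
    absmax = max(abs(w) for w in weights) or 1.0
    return [nearest_code(w / absmax) for w in weights], absmax

def dequantize(codes: List[int], absmax: float) -> List[float]:
    # Dequantization is a table lookup followed by rescaling.
    return [CODEBOOK[c] * absmax for c in codes]

weights = [2.0, -1.0, 0.3, 0.0]
codes, absmax = quantize(weights)
restored = dequantize(codes, absmax)
print(codes, restored)
```

Since dequantization is just a lookup, the table can place its 16 levels wherever the weight distribution needs them, instead of spacing them evenly as linear INT4 does.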

3. Hardware for large language model inference

Finally, let's look at the hardware for large language model inference.

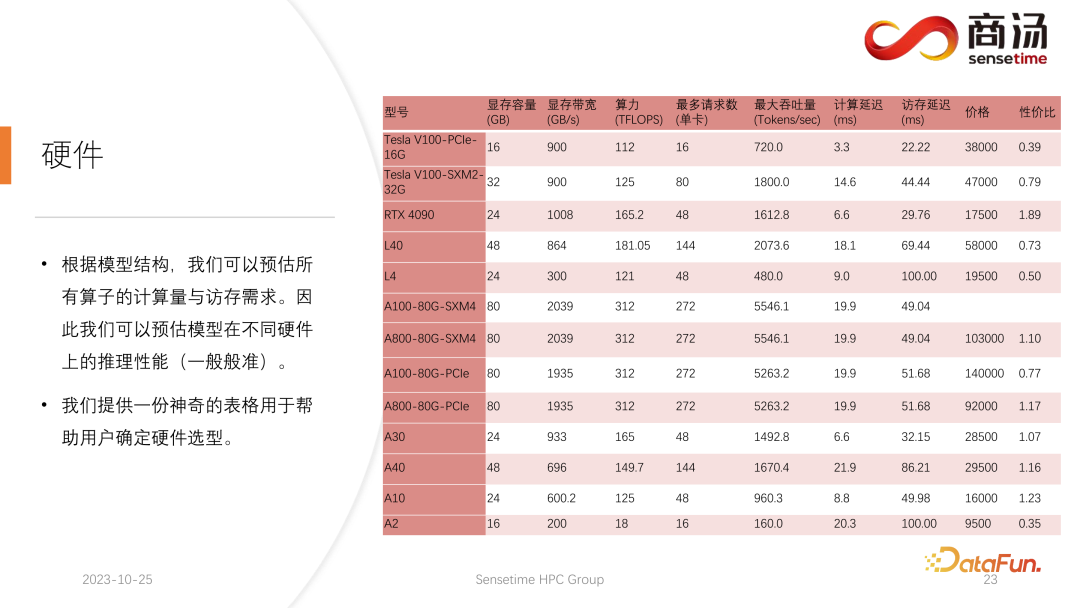

Once the model structure is determined, we know its exact amount of computation and how much memory access it requires. We also know the bandwidth, compute power, price and so on of each GPU. Given the model structure and the hardware specifications, we can calculate the maximum throughput of running a large model on a given GPU, its compute latency, and the memory access time it needs. From this a very specific table can be calculated. We will publish this table in follow-up material, where you can see which GPU models are best suited to large language model inference.

In large language model inference most operators are memory-bound, so memory access latency is always higher than compute latency. The parameter matrices of a large language model are simply very large: even on an A100 80G with the batch size raised to 272, compute latency stays small while memory access latency is higher. Many of our optimizations therefore start from memory access. When selecting hardware, we mainly look for devices with high bandwidth and large GPU memory, which lets the model support more requests and faster memory access during inference and yields higher throughput.
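A back-of-the-envelope version of such a calculation is sketched below. It assumes that one decoding step reads every weight once plus each request's KV cache, and costs about 2 FLOPs per parameter per token. The hardware and KV cache figures are round, hypothetical numbers, not measurements or datasheet values for any specific GPU.

```python
from typing import Tuple

def decode_step_times(params: float, weight_bytes: float,
                      kv_bytes_per_request: float, batch: int,
                      bandwidth_bytes_per_s: float,
                      flops_per_s: float) -> Tuple[float, float]:
    """Rough per-step estimate of (memory access time, compute time) in seconds."""
    memory_time = (weight_bytes + batch * kv_bytes_per_request) / bandwidth_bytes_per_s
    compute_time = 2.0 * params * batch / flops_per_s
    return memory_time, compute_time

# Hypothetical setup: a 7B-parameter model in FP16 (2 bytes per parameter),
# 100 MB of KV cache per request, 2 TB/s of bandwidth, 300 TFLOP/s of compute.
estimates = {
    batch: decode_step_times(params=7e9, weight_bytes=1.4e10,
                             kv_bytes_per_request=1.0e8, batch=batch,
                             bandwidth_bytes_per_s=2.0e12, flops_per_s=3.0e14)
    for batch in (1, 64, 272)
}
for batch, (mem_t, cmp_t) in estimates.items():
    print(batch, round(mem_t * 1e3, 3), "ms memory,", round(cmp_t * 1e3, 3), "ms compute")
```

Under these assumptions memory access time exceeds compute time at every batch size shown, because the KV cache traffic grows with the batch as well. This is the reasoning behind preferring GPUs with high bandwidth and large memory.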

That concludes this talk. All related material is on the network disk (see the link above), and all of our code is open source on GitHub. You are welcome to get in touch with us at any time.

4. Q & A

Q1: Does PPL.LLM optimize for the kind of Softmax memory access problem that Flash Attention addresses?

A1: Decoding Attention is a very special operator. Its Q length is always 1, so it does not face the very large Softmax memory traffic that Flash Attention has to deal with. Decoding Attention in fact executes the complete Softmax and does not need the kind of fast execution scheme used in Flash Attention.

Q2: Why is the cost of INT4 weight-only quantization linearly related to the batch? Is this a fixed number?

A2: Good question. Dequantization here is not what many people assume, namely simply converting the weights from INT4 back to FP16. If that were all, the cost would depend only on the number of weights. That is not the case, because the dequantization is fused into the matrix multiplication. You cannot dequantize all the weights before the matrix multiplication, store them, and read them back later, because then the INT4 quantization would be pointless. Dequantization happens continuously during execution. Because the matrix multiplication is blocked, each weight is read more than once and has to be dequantized each time, and that number depends on the batch. In other words, unlike earlier quantization approaches that insert separate quantization and dequantization operators, the dequantization is fused directly into the matrix multiplication operator, so each weight is dequantized more than once.

Q3: Can the dequantization computation for the KV cache be hidden behind memory access?

A3: According to our tests it can, with plenty of headroom. The quantization and dequantization of the KV cache are fused into the self-attention operator, specifically Decoding Attention. In our tests the computation could be hidden even at 10 times its current amount; it still would not exceed the memory access delay. The operator's main bottleneck is memory access, and its computation is far below the level needed to cover the memory access time. The dequantization computation for the KV cache is therefore well hidden in this operator.


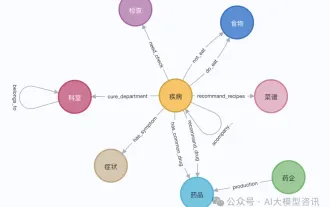

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

Graph Retrieval Enhanced Generation (GraphRAG) is gradually becoming popular and has become a powerful complement to traditional vector search methods. This method takes advantage of the structural characteristics of graph databases to organize data in the form of nodes and relationships, thereby enhancing the depth and contextual relevance of retrieved information. Graphs have natural advantages in representing and storing diverse and interrelated information, and can easily capture complex relationships and properties between different data types. Vector databases are unable to handle this type of structured information, and they focus more on processing unstructured data represented by high-dimensional vectors. In RAG applications, combining structured graph data and unstructured text vector search allows us to enjoy the advantages of both at the same time, which is what this article will discuss. structure

Google AI announces Gemini 1.5 Pro and Gemma 2 for developers

Jul 01, 2024 am 07:22 AM

Google AI announces Gemini 1.5 Pro and Gemma 2 for developers

Jul 01, 2024 am 07:22 AM

Google AI has started to provide developers with access to extended context windows and cost-saving features, starting with the Gemini 1.5 Pro large language model (LLM). Previously available through a waitlist, the full 2 million token context windo

How to create a scalable API gateway using NIO technology in Java functions?

May 04, 2024 pm 01:12 PM

How to create a scalable API gateway using NIO technology in Java functions?

May 04, 2024 pm 01:12 PM

Answer: Using NIO technology you can create a scalable API gateway in Java functions to handle a large number of concurrent requests. Steps: Create NIOChannel, register event handler, accept connection, register data, read and write handler, process request, send response

Deploy large language models locally in OpenHarmony

Jun 07, 2024 am 10:02 AM

Deploy large language models locally in OpenHarmony

Jun 07, 2024 am 10:02 AM

This article will open source the results of "Local Deployment of Large Language Models in OpenHarmony" demonstrated at the 2nd OpenHarmony Technology Conference. Open source address: https://gitee.com/openharmony-sig/tpc_c_cplusplus/blob/master/thirdparty/InferLLM/docs/ hap_integrate.md. The implementation ideas and steps are to transplant the lightweight LLM model inference framework InferLLM to the OpenHarmony standard system, and compile a binary product that can run on OpenHarmony. InferLLM is a simple and efficient L

Understanding GraphRAG (1): Challenges of RAG

Apr 30, 2024 pm 07:10 PM

Understanding GraphRAG (1): Challenges of RAG

Apr 30, 2024 pm 07:10 PM

RAG (RiskAssessmentGrid) is a method that enhances existing large language models (LLM) with external knowledge sources to provide more contextually relevant answers. In RAG, the retrieval component obtains additional information, the response is based on a specific source, and then feeds this information into the LLM prompt so that the LLM's response is based on this information (enhancement phase). RAG is more economical compared to other techniques such as trimming. It also has the advantage of reducing hallucinations by providing additional context based on this information (augmentation stage) - your RAG becomes the workflow method for today's LLM tasks (such as recommendation, text extraction, sentiment analysis, etc.). If we break this idea down further, based on user intent, we typically look at