Mastering Business AI: Building an Enterprise-Grade AI Platform with RAG and CRAG


This guide explains how to make the most of AI technology for your business. It covers RAG and CRAG integration, vector embeddings, large language models (LLMs), and prompt engineering, all of which matter for businesses looking to apply artificial intelligence responsibly.

Building an AI-ready platform for enterprises

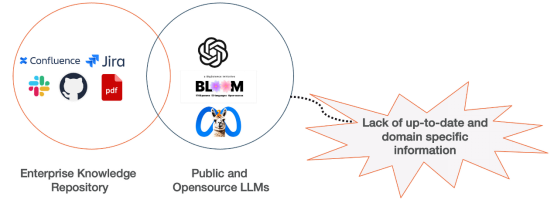

Enterprises introducing generative AI face many business risks that require strategic management. These risks are often interrelated and range from potential bias that leads to compliance issues to a lack of domain knowledge. Key concerns include reputational damage, compliance with legal and regulatory standards (especially in customer interactions), intellectual property infringement, ethical issues, and privacy issues (especially when processing personal or identifiable data).

To address these challenges, hybrid strategies such as retrieval-augmented generation (RAG) have been proposed. RAG can improve the quality of AI-generated content and make enterprise AI initiatives safer and more reliable. It helps address knowledge gaps and misinformation while supporting compliance with legal and ethical guidelines and reducing the risk of reputational damage.

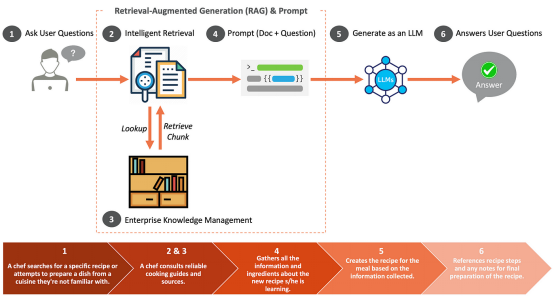

Retrieval-augmented generation (RAG) is an advanced method that improves the accuracy and reliability of AI content generation by integrating information from enterprise knowledge bases. Think of RAG as a master chef who relies on innate talent, thorough training, and creative flair, all backed by a solid understanding of the fundamentals of cooking. When it comes time to use unusual spices or fulfill requests for novel dishes, the chef consults reliable culinary references to make the best use of the ingredients.

Just as a chef can cook a variety of cuisines, AI systems such as GPT and LLaMA-2 can generate content on many topics. However, when they need to provide detailed and accurate information, especially when handling unfamiliar requests or working through large amounts of corporate data, they turn to specialized tools to ensure the accuracy and depth of the information.

What if the retrieval phase of RAG is insufficient?

CRAG (Corrective Retrieval-Augmented Generation) is a corrective intervention designed to make a RAG setup more robust. CRAG uses a T5-based evaluator to assess the relevance of retrieved documents. When documents from corporate sources are deemed irrelevant, a web search may be used to fill the information gaps.
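The corrective step can be illustrated with a minimal sketch. It is not the CRAG implementation: the word-overlap scorer stands in for the T5-based evaluator, and the names `overlap_score`, `corrective_retrieve`, `web_search`, and the `0.5` threshold are all assumptions made for illustration.

```python
from typing import Callable


def overlap_score(query: str, document: str) -> float:
    """Toy relevance score: fraction of query words that appear in the document.

    Assumption: this stands in for a learned relevance evaluator.
    """
    query_words = set(query.lower().split())
    if not query_words:
        return 0.0
    doc_words = set(document.lower().split())
    return len(query_words & doc_words) / len(query_words)


def corrective_retrieve(
    query: str,
    retrieved: list[str],
    web_search: Callable[[str], list[str]],
    score: Callable[[str, str], float] = overlap_score,
    threshold: float = 0.5,
) -> tuple[list[str], str]:
    """Keep retrieved documents that pass the relevance check.

    If none pass, fall back to the (hypothetical) web_search callable.
    Returns the documents to use and a label naming where they came from.
    """
    relevant = [doc for doc in retrieved if score(query, doc) >= threshold]
    if relevant:
        return relevant, "knowledge_base"
    return web_search(query), "web_search"


if __name__ == "__main__":
    docs = ["sear the salmon skin side down", "quarterly revenue report"]
    fake_search = lambda q: [f"web result for: {q}"]  # placeholder, no network call
    print(corrective_retrieve("how to sear salmon", docs, fake_search))
    print(corrective_retrieve("vacation policy", docs, fake_search))
```

The gate here is a simple two-way decision. A production evaluator would be a trained model, and the threshold would need tuning against real queries.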

Architectural considerations for building artificial intelligence solutions at the enterprise level

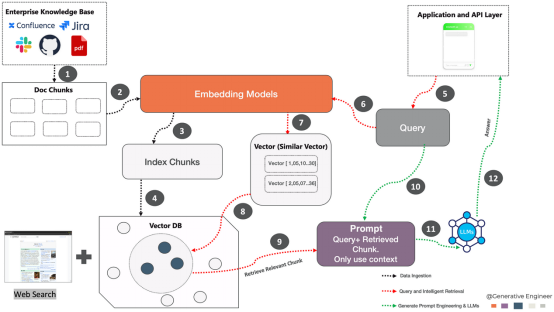

The architecture is built from the ground up around three core pillars: data ingestion, query and intelligent retrieval, and prompt engineering with large language models for generation.

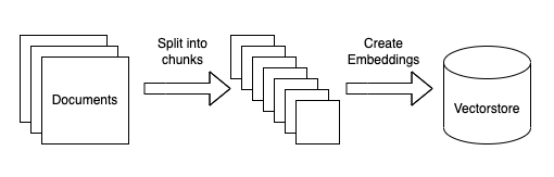

Data ingestion: The first step is to convert the content of company documents into an easy-to-query format. This transformation is done with an embedding model, in the following sequence of operations:

1. Data chunking: Documents from enterprise knowledge sources such as Confluence, Jira, and PDFs are extracted into the system. This step involves breaking each document into manageable parts, often called "chunks."

2. Embedding model: The document chunks are then passed to the embedding model. An embedding model is a neural network that converts text into a numerical form (a vector) that represents the semantics of the text, making it understandable by machines.

3. Indexing chunks: The vectors produced by the embedding model are then indexed. Indexing is the process of organizing data in a way that facilitates efficient retrieval.

4. Vector database: All vector embeddings are saved in a vector database. The text represented by each embedding is saved in a separate file, along with a reference to the corresponding embedding.
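The ingestion steps above can be sketched in a few lines of Python. This is a toy under stated assumptions: `toy_embed` is a hashed bag-of-words stand-in for a real neural embedding model, `VectorStore` is an in-memory stand-in for a vector database, and the chunk size and overlap values are arbitrary. All names are hypothetical.

```python
import hashlib
import math
from dataclasses import dataclass, field


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows ("chunks")."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hash each token into a bucket and L2-normalize the counts.

    Assumption: a placeholder for a real embedding model; it captures
    word overlap only, not semantics.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


@dataclass
class VectorStore:
    """In-memory stand-in for a vector database plus a separate text store."""
    vectors: list[list[float]] = field(default_factory=list)
    texts: dict[int, str] = field(default_factory=dict)

    def add(self, chunk: str) -> int:
        """Embed a chunk, store the vector, and keep the text under the same id."""
        chunk_id = len(self.vectors)
        self.vectors.append(toy_embed(chunk))
        self.texts[chunk_id] = chunk  # the id is the reference back to the embedding
        return chunk_id


if __name__ == "__main__":
    store = VectorStore()
    document = "Preheat the oven. Season the fish. Bake until flaky. " * 20
    for chunk in chunk_text(document):
        store.add(chunk)
    print(len(store.vectors), "chunks indexed")
```

Keeping the chunk text in `texts`, keyed by the same id as the vector, mirrors the idea of storing text separately from embeddings while preserving a reference between them.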

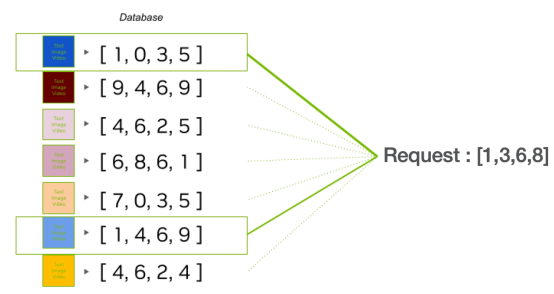

Query and intelligent retrieval: Once the inference server receives the user's question, it converts the question into a vector using the same embedding model that was used to embed the documents in the knowledge base. The vector database is then searched to identify vectors closely related to the user's intent, and the corresponding content is fed to a large language model (LLM) to enrich the context.

5. Query: Queries arrive from the application and API layers. A query is what a user or another application enters when searching for information.

6. Embedded query retrieval: The generated query embedding is used to start a search in the vector database index. Choose the number of vectors to retrieve from the vector database; this number is proportional to the amount of context you plan to compile and use to answer the question.

7. Similar vectors: This process identifies similar vectors, which represent the document chunks relevant to the query context.

8. Retrieve related vectors: The relevant vectors are retrieved from the vector database. In the chef analogy, this might amount to two related vectors: a recipe and a preparation step. The corresponding chunks are collected and provided with the prompt.

9. Retrieve related chunks: The system fetches the document parts that match the vectors identified as relevant to the query. Once the relevance of the information has been assessed, the system determines the next step. If the information is relevant, it is ranked by importance. If it is not, the system discards it and looks for better information online.
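The similarity search in these steps can be sketched as a brute-force top-k lookup by cosine similarity. The vectors below are hand-written placeholders rather than real embeddings, and the names `cosine_similarity` and `top_k` are illustrative; a real vector database would typically use an approximate index instead of scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 2):
    """Return the k (chunk_id, score) pairs most similar to the query vector."""
    scored = [
        (chunk_id, cosine_similarity(query_vec, vec))
        for chunk_id, vec in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical 3-dimensional embeddings for three stored chunks.
    index = {
        "recipe": [0.9, 0.1, 0.0],
        "prep_steps": [0.8, 0.3, 0.1],
        "invoice": [0.0, 0.1, 0.9],
    }
    query_vec = [1.0, 0.2, 0.0]
    for chunk_id, score in top_k(query_vec, index, k=2):
        print(chunk_id, round(score, 3))
```

With these placeholder vectors, the two cooking-related chunks rank above the unrelated one, which matches the chef example of retrieving a recipe and a preparation step.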

Prompt engineering and LLM generation: Prompt engineering is crucial for guiding the large language model to the right answer. It involves crafting clear and precise prompts that take any data gaps into account. The process is ongoing and requires regular adjustment to improve responses. It is also important to make sure prompts are ethical, free of bias, and avoid sensitive topics.

10. Prompt engineering: The retrieved chunks are combined with the original query to create the prompt. The prompt is designed to convey the query context effectively to the language model.

11. LLM (large language model): The engineered prompt is processed by a large language model. These models can generate human-like text based on the input they receive.

12. Answer: Finally, the language model uses the context provided by the prompt and the retrieved chunks to generate an answer to the query. That answer is then sent back to the user through the application and API layers.
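As a rough illustration of the prompt assembly step, the sketch below joins retrieved chunks and the user's question into a single prompt and passes it to a model. The template wording and the names `build_prompt` and `answer` are assumptions, and `llm` is any callable that maps a prompt string to a response string; no real model API is called here.

```python
from typing import Callable


def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine numbered context chunks and the question into one prompt."""
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    """Send the engineered prompt to the model and return its response."""
    return llm(build_prompt(question, chunks))


if __name__ == "__main__":
    chunks = ["Sear the salmon skin side down.", "Rest the fish before serving."]

    def echo_llm(prompt: str) -> str:
        # Placeholder model: reports the prompt length instead of generating text.
        return f"(model received {len(prompt)} characters)"

    print(build_prompt("How do I cook salmon?", chunks))
    print(answer("How do I cook salmon?", chunks, echo_llm))
```

Telling the model to admit when the context is insufficient is one way to account for data gaps; the exact instruction text is something to iterate on.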

Conclusion

This blog explores the complex process of integrating artificial intelligence into software development and highlights the transformative potential of building an enterprise generative AI platform inspired by CRAG. By addressing the complexities of prompt engineering, data management, and innovative retrieval-augmented generation (RAG) approaches, we outline ways to embed AI technology into the core of business operations. Future discussions will delve further into generative AI frameworks for intelligent development, examining specific tools, techniques, and strategies for maximizing the use of AI to ensure a smarter, more efficient development environment.

Source| https://www.php.cn/link/1f3e9145ab192941f32098750221c602

Author| Venkat Rangasamy
