As the media hypes Sora, OpenAI’s introductory material calls Sora a “world simulator.” The term “world model” is back in the spotlight, yet few articles explain what a world model actually is.

Here we review what a world model is and discuss whether Sora is a world simulator.

In AI, the terms “world” and “environment” are usually used to distinguish an agent's surroundings from the agent itself.

Agents are studied mainly in reinforcement learning and robotics.

Accordingly, the terms world models and world modeling appear earliest and most often in robotics papers.

Perhaps the most influential use of the term today is the paper “World Models” that Jurgen (Jürgen Schmidhuber, with David Ha) posted on arXiv in 2018. It was eventually published at NeurIPS'18 under the title “Recurrent World Models Facilitate Policy Evolution.”

The paper does not define world models. Instead, it draws an analogy to the mental model of the human brain from cognitive science, citing literature from 1971.

A mental model is the brain’s internal image of the surrounding world.

Wikipedia’s article on mental models states explicitly that they may take part in cognition, reasoning, and decision-making. A mental model mainly consists of two parts: mental representations and mental simulation.

An internal representation of external reality, hypothesized to play a major role in cognition, reasoning and decision-making. The term was coined by Kenneth Craik in 1943 who suggested that the mind constructs "small-scale models" of reality that it uses to anticipate events.

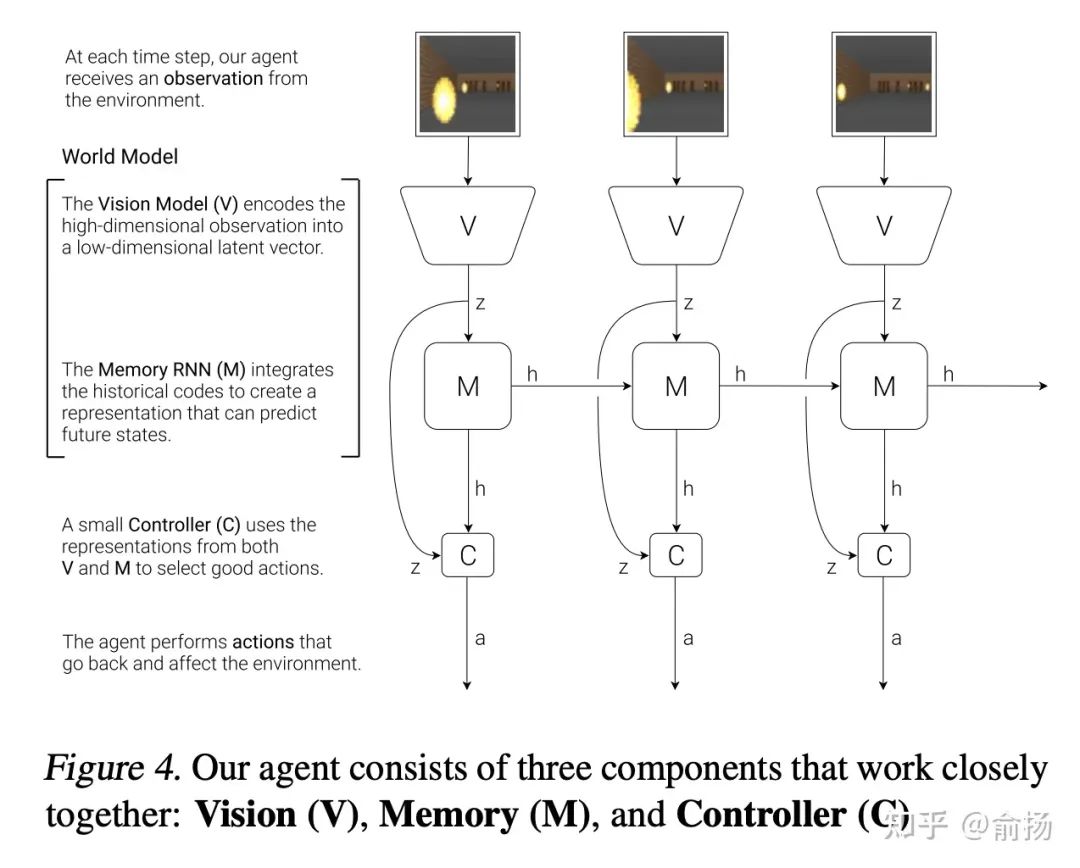

This is still somewhat abstract, but the architecture diagram in the paper makes clear what a world model is.

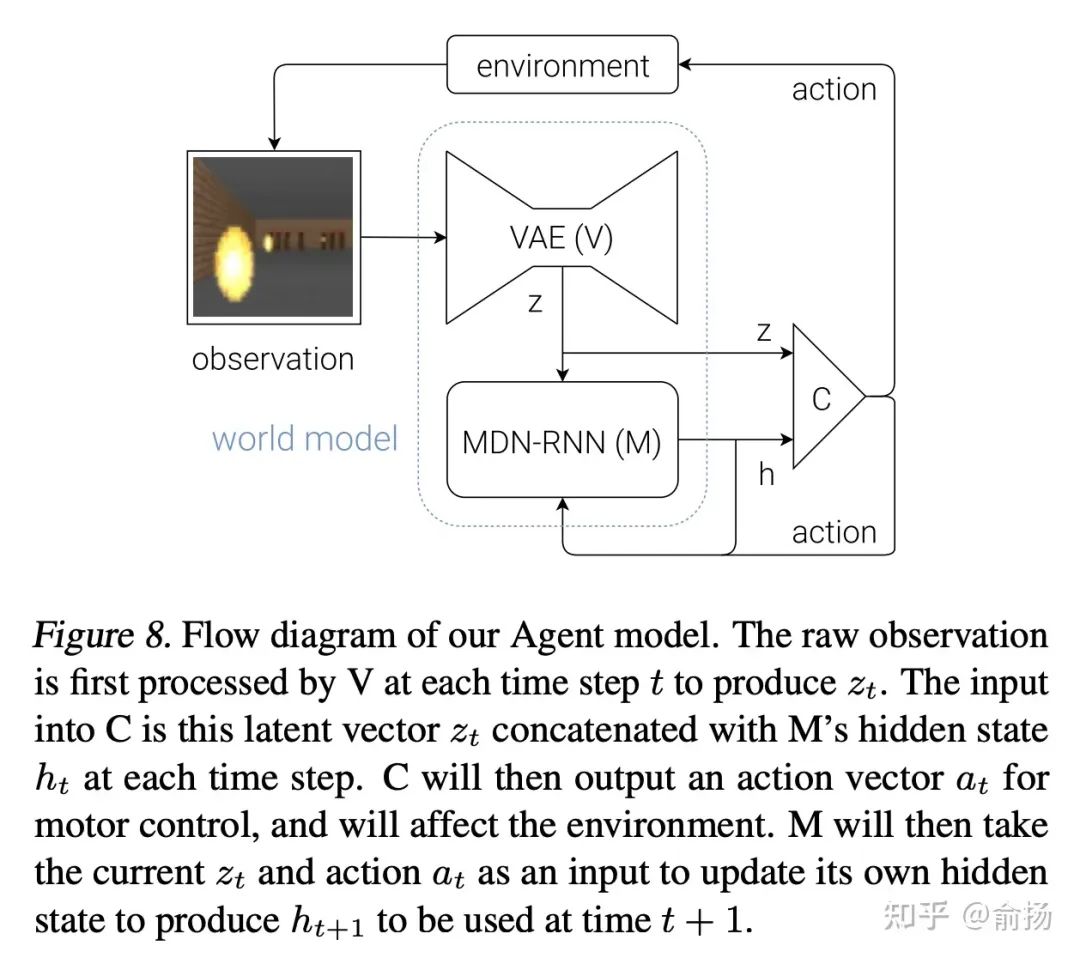

In the figure, the vertical path V -> z is a low-dimensional representation of the observation, implemented with a VAE; the horizontal chain M -> h -> M -> h is a sequence representation that predicts the next time step, implemented with an RNN. Together, the two parts form the world model.

In other words, a world model consists mainly of a state representation and a transition model, which correspond respectively to mental representations and mental simulation.
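To make the V/M split concrete, here is a minimal Python sketch of the simplified diagram (no action input). It is an illustration under stated assumptions, not the paper's implementation: `VisionEncoder` stands in for the VAE encoder using a fixed random linear map, and `MemoryRNN` is a plain RNN cell, whereas the paper's M is an MDN-RNN. All class names, sizes, and weights are hypothetical, and nothing is trained.

```python
import math
import random

# Hypothetical sizes, chosen only for illustration.
OBS_DIM, Z_DIM, H_DIM = 8, 2, 4


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]


class VisionEncoder:
    """Stand-in for V: maps an observation to a low-dimensional code z.

    A real world model would use a trained VAE encoder; here a fixed random
    linear map followed by tanh plays that role (an assumption for brevity).
    """

    def __init__(self, rng):
        self.w = rand_matrix(Z_DIM, OBS_DIM, rng)

    def encode(self, obs):
        return [math.tanh(v) for v in matvec(self.w, obs)]


class MemoryRNN:
    """Stand-in for M: a vanilla RNN cell that updates h from z and predicts the next z."""

    def __init__(self, rng):
        self.w_h = rand_matrix(H_DIM, H_DIM, rng)
        self.w_z = rand_matrix(H_DIM, Z_DIM, rng)
        self.w_out = rand_matrix(Z_DIM, H_DIM, rng)

    def step(self, h, z):
        # h_{t+1} = tanh(W_h h_t + W_z z_t): the transition part of the world model
        pre = [a + b for a, b in zip(matvec(self.w_h, h), matvec(self.w_z, z))]
        h_next = [math.tanh(v) for v in pre]
        z_pred = matvec(self.w_out, h_next)  # prediction of z_{t+1}
        return h_next, z_pred


rng = random.Random(0)
V, M = VisionEncoder(rng), MemoryRNN(rng)
h = [0.0] * H_DIM
for t in range(3):
    obs = [rng.random() for _ in range(OBS_DIM)]  # fake observation
    z = V.encode(obs)          # state representation
    h, z_pred = M.step(h, z)   # transition model
    print(t, [round(v, 3) for v in z_pred])
```

The encoder supplies the state representation and the RNN step supplies the transition model; training would fit V to reconstruct observations and M to predict the next z.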

Looking at the diagram above, you might wonder: isn’t every sequence-prediction model a world model, then?

In fact, readers familiar with reinforcement learning will see at a glance that this diagram is incomplete; the actual structure is shown in the figure below. The input to the RNN is not only z but also the action. This is not ordinary sequence prediction. Does adding an action really make such a difference? Yes: actions let the data distribution shift freely, which poses huge challenges.
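A toy example, with entirely hypothetical dynamics, of why the action input matters. Data are logged under a behaviour policy that moves right 80% of the time. A model that ignores actions can only learn the average drift of that policy, so its predictions break as soon as the policy changes; a model conditioned on the action still predicts correctly. The 1-D dynamics `true_step` and both estimators are illustrative assumptions.

```python
from collections import defaultdict


def true_step(s, a):
    """Hypothetical ground-truth dynamics: the position moves by the action."""
    return s + a


# Log data under a behaviour policy that pushes right 80% of the time.
actions = [1, 1, 1, 1, -1] * 20
log = []
s = 0
for a in actions:
    s_next = true_step(s, a)
    log.append((s, a, s_next))
    s = s_next

# Model 1: plain sequence prediction, s' = s + mean displacement (ignores a).
seq_delta = sum(s2 - s1 for s1, _, s2 in log) / len(log)

# Model 2: action-conditioned transition, s' = s + mean displacement given a.
sums, counts = defaultdict(float), defaultdict(int)
for s1, a, s2 in log:
    sums[a] += s2 - s1
    counts[a] += 1
act_delta = {a: sums[a] / counts[a] for a in sums}

# New policy: always push left. Roll the real system and both models 10 steps from s=0.
s_true = s_seq = s_act = 0
for _ in range(10):
    s_true = true_step(s_true, -1)
    s_seq = s_seq + seq_delta
    s_act = s_act + act_delta[-1]
print(s_true, round(s_seq, 6), s_act)
```

The sequence-only model predicts a drift to about +6, while the real system ends at -10; the action-conditioned model tracks the real outcome because the new policy only reuses an action that appears in the data.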

Jurgen’s paper belongs to the field of reinforcement learning.

But reinforcement learning already has plenty of model-based RL methods, doesn’t it? What is the difference between their model and a world model? The answer: there is none; they are the same thing. Jurgen opens with a paragraph on this.

The gist: however much model-based RL work exists, I am the RNN pioneer, using RNNs to build the model was my invention, and I intend to do it.

The early version of Jurgen’s paper also discussed a lot of model-based RL work, noting that although those methods learned a model, they did not train the RL agent entirely inside it.

Not training RL entirely inside the model is not really what separates a world model from the model in model-based RL. Rather, it reflects a long-standing frustration in the model-based RL direction: learned models are not accurate enough, so an RL agent trained entirely inside the model performs very poorly. This problem has only been solved in recent years.
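One commonly cited reason why training entirely inside a learned model goes wrong is compounding error: a model that is only slightly off per step drifts far from reality over a long imagined rollout. The linear dynamics and the 0.95 vs 0.9 coefficient mismatch below are hypothetical, chosen only to make the effect visible.

```python
def true_step(s, a):
    return 0.9 * s + a  # hypothetical real dynamics


def model_step(s, a):
    return 0.95 * s + a  # learned model with a small coefficient error (assumed)


def rollout(step, s0, actions):
    """Apply `step` repeatedly, feeding each prediction back in as the next state."""
    s, traj = s0, []
    for a in actions:
        s = step(s, a)
        traj.append(s)
    return traj


actions = [1.0] * 30
true_traj = rollout(true_step, 0.0, actions)
model_traj = rollout(model_step, 0.0, actions)

# One-step error: the model is re-fed the true state before every prediction.
prev_states = [0.0] + true_traj[:-1]
one_step_errors = [abs(model_step(s, a) - true_step(s, a)) for s, a in zip(prev_states, actions)]

for horizon in (1, 5, 10, 30):
    err = abs(model_traj[horizon - 1] - true_traj[horizon - 1])
    print(horizon, round(err, 3))
print("max one-step error:", round(max(one_step_errors), 3))
```

Each single-step prediction is off by less than 0.5, yet after 30 imagined steps the model state is more than 5 units from the true state, so a policy optimized purely against such rollouts would be optimizing the wrong thing.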

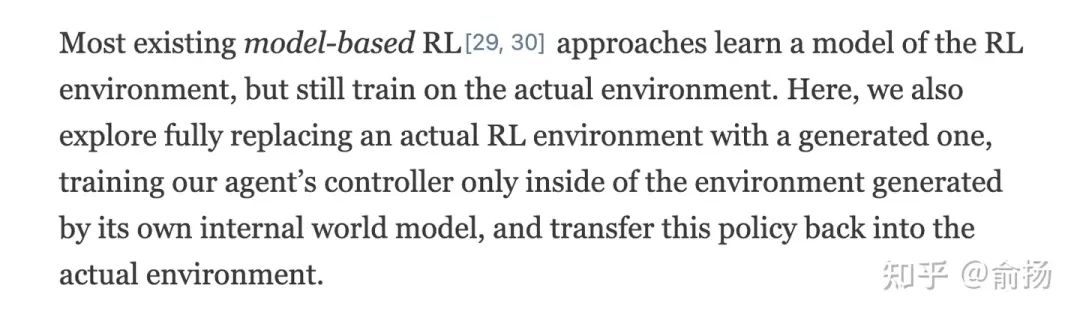

Sutton recognized the problem of inaccurate models long ago. His 1990 paper “Integrated Architectures for Learning, Planning, and Reacting Based on Approximating Dynamic Programming,” which proposed the Dyna framework (published at ICML, in its first edition after turning from a workshop into a conference), called this model an action model, emphasizing prediction of the results of executing actions.

In Dyna, the RL agent learns from real data (line 3 of the algorithm) while also learning from the model (line 5), so that an inaccurately learned model does not lead to a poor policy.
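The Dyna idea can be sketched as a tabular Dyna-Q loop in the style of Sutton and Barto's textbook; this is not a transcription of the 1990 paper's algorithm. Each real step updates Q from real experience, records the transition in a learned model, and then performs `n_planning` extra updates from transitions replayed out of that model. The six-state chain environment and all hyperparameters are hypothetical.

```python
import random

N_STATES, GOAL = 6, 5
ACTIONS = (-1, +1)


def env_step(s, a):
    """Hypothetical deterministic chain: move left/right, reward 1 on reaching the goal."""
    s2 = min(max(s + a, 0), N_STATES - 1)
    return s2, (1.0 if s2 == GOAL else 0.0)


def dyna_q(episodes=50, n_planning=10, alpha=0.5, gamma=0.95, eps=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    model = {}  # learned deterministic model: (s, a) -> (r, s2)

    def greedy(s):
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    def update(s, a, r, s2):
        # One-step Q-learning target; the goal is terminal.
        target = r if s2 == GOAL else r + gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        s = 0
        while s != GOAL:
            a = rng.choice(ACTIONS) if rng.random() < eps else greedy(s)
            s2, r = env_step(s, a)
            update(s, a, r, s2)           # learn from real experience
            model[(s, a)] = (r, s2)       # learn the model
            for _ in range(n_planning):   # learn from simulated experience
                ps, pa = rng.choice(list(model))
                pr, ps2 = model[(ps, pa)]
                update(ps, pa, pr, ps2)
            s = s2
    return q


q = dyna_q()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

With planning, reward information propagates back along the chain much faster than with real steps alone; setting `n_planning=0` reduces the loop to plain Q-learning.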

As this shows, the world model is crucial for decision-making. Given an accurate world model, you can find the optimal real-world decision through trial and error inside the world model.

This is the core function of a world model: counterfactual reasoning, that is, inferring in the world model the outcome of a decision even when that decision never appears in the data.
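Trial and error inside a world model, together with a query about a decision never seen in the data, can be sketched as follows. The logged data only contain actions 0 and 1; a linear model fitted to them is used to score every 3-step plan, including plans that use the unseen action -1, and only the winning plan is run in the real environment. The linear model form is a strong assumption: it is what lets the model extrapolate to -1, and a real world model would have to earn that generalization from data. The dynamics and all names are hypothetical.

```python
import itertools


def true_step(s, a):
    """Hypothetical real dynamics (unknown to the agent)."""
    return s + a


# Logged data only ever contain actions 0 and 1; action -1 was never tried.
log = []
s = 0
for a in [0, 1] * 10:
    log.append((a, true_step(s, a) - s))  # (action, observed displacement)
    s = true_step(s, a)

# Fit a linear world model s' = s + k*a + b by least squares (assumed model form).
n = len(log)
mean_a = sum(a for a, _ in log) / n
mean_d = sum(d for _, d in log) / n
cov = sum((a - mean_a) * (d - mean_d) for a, d in log) / n
var = sum((a - mean_a) ** 2 for a, _ in log) / n
k = cov / var
b = mean_d - k * mean_a


def model_step(s, a):
    return s + k * a + b


def imagine(s, plan):
    """Roll a plan forward inside the world model only."""
    for a in plan:
        s = model_step(s, a)
    return s


# Trial and error in imagination: score every 3-step plan, including the unseen action -1.
target = -2
best_plan = max(itertools.product([-1, 0, 1], repeat=3),
                key=lambda plan: -abs(imagine(0, plan) - target))

# Only the chosen plan is executed in the real environment.
s_real = 0
for a in best_plan:
    s_real = true_step(s_real, a)
print(best_plan, s_real)
```

Exhaustive search over plans stands in for whatever planner or RL algorithm would be used in practice.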

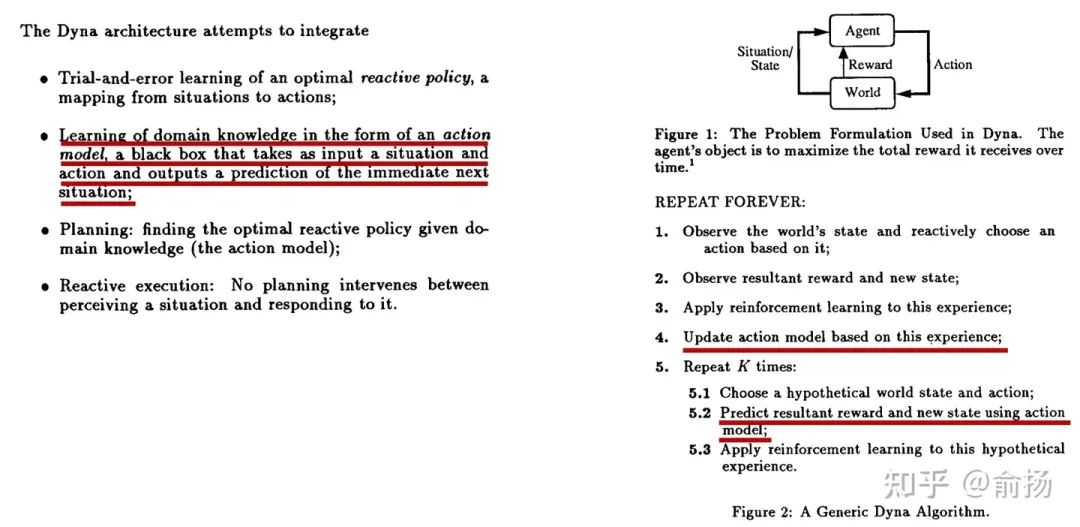

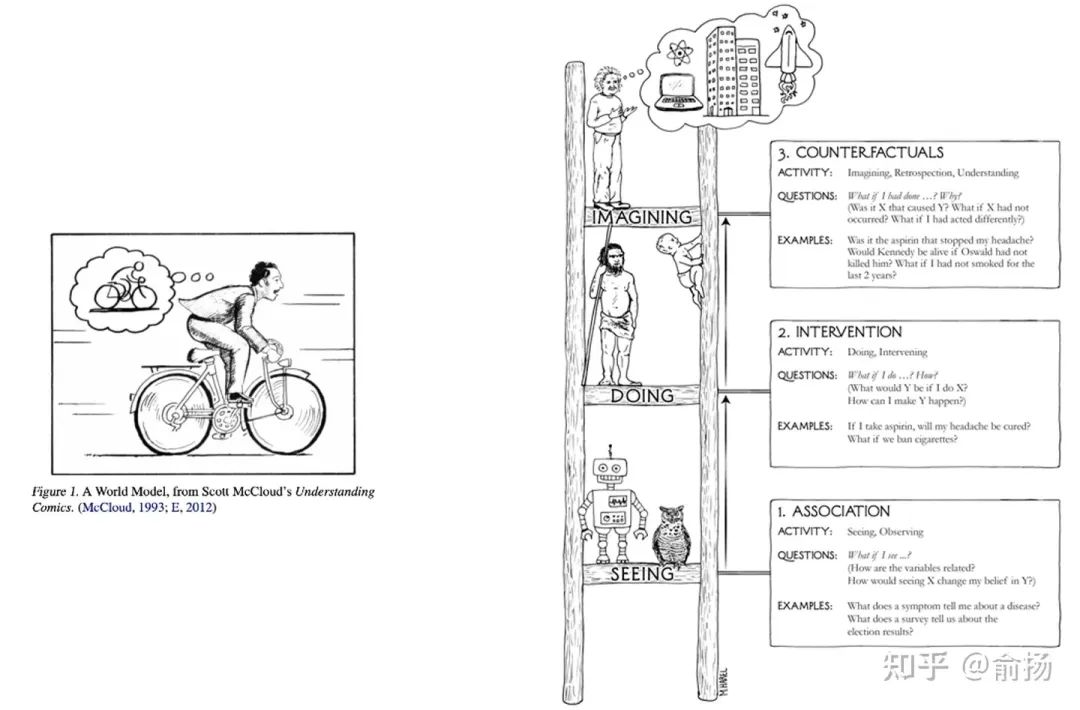

Readers who know causal inference will recognize the term counterfactual reasoning. In the popular-science book The Book of Why, Turing Award winner Judea Pearl draws a ladder of causation. The lowest rung is “association,” which is mainly what today’s prediction models do; the middle rung is “intervention,” of which exploration in reinforcement learning is a typical example; the top rung is counterfactuals, answering what-if questions through imagination. Pearl’s illustration of counterfactual reasoning, a scientist imagining in their mind, is similar to the schematic Jurgen used in his paper.

Left: Schematic diagram of the world model in Jurgen’s paper. Right: The ladder of causation in Judea Pearl’s book.
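The three rungs can be made concrete with a tiny structural causal model. The model below is hypothetical and chosen so that the rungs give different answers: a hidden confounder U drives both X and Y. Association conditions on observed worlds, intervention overrides X's equation, and the counterfactual follows Pearl's abduction, action, prediction steps.

```python
# Hypothetical structural causal model (SCM):
#   U ~ Bernoulli(0.5)   hidden confounder
#   X := U               treatment follows the confounder
#   Y := X + 2*U         outcome depends on both
P_U = {0: 0.5, 1: 0.5}


def f_x(u):
    return u


def f_y(x, u):
    return x + 2 * u


def association(x_obs):
    """Rung 1: E[Y | X = x_obs], averaging only over worlds where X = x_obs was observed."""
    worlds = [(u, p) for u, p in P_U.items() if f_x(u) == x_obs]
    total = sum(p for _, p in worlds)
    return sum(p * f_y(x_obs, u) for u, p in worlds) / total


def intervention(x_do):
    """Rung 2: E[Y | do(X = x_do)], overriding X's equation in every world."""
    return sum(p * f_y(x_do, u) for u, p in P_U.items())


def counterfactual(x_obs, y_obs, x_alt):
    """Rung 3: having seen (x_obs, y_obs), what would Y have been under x_alt?

    Abduction: keep only the u consistent with the evidence.
    Action: set X to x_alt. Prediction: recompute Y with the same u.
    """
    worlds = [(u, p) for u, p in P_U.items()
              if f_x(u) == x_obs and f_y(x_obs, u) == y_obs]
    total = sum(p for _, p in worlds)
    return sum(p * f_y(x_alt, u) for u, p in worlds) / total


print(association(1) - association(0))    # 3.0
print(intervention(1) - intervention(0))  # 1.0
print(counterfactual(0, 0, 1))            # 1.0
```

Association (3.0) overstates the effect of X because it also picks up U; the intervention isolates X's direct effect (1.0); the counterfactual answers a question about one specific observed case rather than the population.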

We can conclude that AI researchers’ pursuit of world models is an attempt to go beyond the data, perform counterfactual reasoning, and gain the ability to answer what-if questions. Humans have this ability naturally, but current AI is still very poor at it. Once a breakthrough is made, AI decision-making will improve greatly, enabling applications such as fully autonomous driving.

The word simulator is more common in engineering, and a simulator serves the same purpose as a world model: it allows trial and error that would be costly, risky, or difficult to carry out in the real world. OpenAI seems to want to coin a new phrase, but the meaning remains the same.

Sora’s video generation can only be guided by vague text prompts, which makes it hard to control precisely. It is therefore more of a video tool, and hard to use for counterfactual reasoning that precisely answers what-if questions.

It is even hard to evaluate how strong Sora’s generation ability is, because it is entirely unclear how much the demo videos differ from the training data.

Even more disappointing, these demos show that Sora has not accurately learned the laws of physics. Others have already pointed out violations of physical laws in videos generated by Sora [OpenAI releases text-to-video model Sora, AI can understand the physical world in motion. Is this a world model? What does it mean?]

I suspect these demos come from a model trained on abundant data, possibly including CG-rendered footage. Even so, it still has not grasped physical laws that can be described by equations with only a few variables.
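As an illustration of a physical law “described by equations with a few variables”: free fall, y(t) = y0 + v0·t - g·t²/2, has only three parameters, and it implies that the second differences of positions sampled every dt are constant and equal to -g·dt². The sketch below checks that property on a trajectory; the frame rate, both trajectories, and the idea of tracking an object's height across generated frames are hypothetical assumptions, not a method from the source.

```python
def second_differences(ys):
    """Discrete second derivative of positions sampled at a fixed time step."""
    return [ys[i + 1] - 2 * ys[i] + ys[i - 1] for i in range(1, len(ys) - 1)]


def fits_constant_gravity(ys, dt, tol):
    """Return (consistent, g_estimate): free fall requires constant second differences."""
    d2 = second_differences(ys)
    mean = sum(d2) / len(d2)
    consistent = max(abs(d - mean) for d in d2) < tol
    return consistent, -mean / dt ** 2


dt, g = 1 / 24, 9.81  # hypothetical 24 fps footage
physical = [10.0 + 2.0 * (k * dt) - 0.5 * g * (k * dt) ** 2 for k in range(24)]
# Hypothetical "generated" trajectory: the object hovers for 4 frames, then jumps.
glitchy = physical[:12] + [physical[11]] * 4 + physical[16:]

print(fits_constant_gravity(physical, dt, 1e-6))
print(fits_constant_gravity(glitchy, dt, 1e-6)[0])
```

Three numbers pin down the whole physical trajectory, which is why violations like the hovering segment are easy to detect even without knowing y0 or v0.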

OpenAI believes Sora demonstrates a route to simulators of the physical world, but simply piling up data does not seem to be the path to more advanced intelligence.

The above is Yu Yang (Nanjing University, “Nanda”)’s in-depth interpretation: What is a “world model”?