读书笔记 《MySQL技术内幕 InnoDB存储引擎》_MySQL

bitsCN.com

缘由

在微博上看到李嘉诚自述的视频中有这么一句话,大意是:我很喜欢读书,我通常读完一本书,把它记到脑子里,再去换另一本书。当时我突有感想,这些年工作,买过的书也不少,有80余本,基本上每本都是经典的好书,也算是有点收藏的味道吧。但是很多书我都是翻一翻,满足自己对某一方面知识的渴望,但自己真的能记在脑力里的却不多,于是在2012年的年尾,伴随着自己的失业,我也打算好好的选择一些书继续阅读,争取读完了,能记住一些,再换下一本。

状态

首读 —— 《MySQL技术内幕 InnoDB存储引擎》 At 2012/12/20

前言

我不是DBA,我是一名开发者,所以站在开发者的角度来读这本书对自己还是有不少收获的,至少以后在项目中设计和使用数据库的过程中,可以考虑到如何更好的和DBA进行有效的沟通。

获取的知识

InnoDB存储引擎 master thread 的问题?

InnoDB的主线程的代码,在每秒执行的任务中:存在固定的只刷新100个脏页到磁盘、合并20个插入缓冲。在写密集的App中,每秒中可以能产生大于100个的脏页,或是产生大于20个插入缓冲,此时的master thread似乎会忙不过来,或者说它总是做得很慢。即使磁盘能在1秒内处理多于100个页的写入和20个插入缓冲的合并,由于hard coding(硬编码)master thread也只会选择刷新100个脏页和合并20个插入缓冲。同时,当发生宕机需要恢复时,由于很多数据还没有刷新回磁盘,所以可能会导致恢复需要很快的时间,尤其是对于insert buffer。

解决办法

InnoDB Plugin提供了一个参数,用来表示磁盘IO的吞吐量,参数为 innodb_io_capacity,默认值为200。对于刷新到磁盘的数量,会按照 innodb_io_capacity的百分比来刷新相对数量的页。规则如下:

* 在合并插入缓冲时,合并插入缓冲的数量为 innodb_io_capacity 数值的5%。

* 在从缓冲区刷新脏页时,刷新脏页的数量为 innodb_io_capacity。

如果你使用了SSD类的磁盘,或者将几块磁盘做了RAID,当你的存储拥有更高的IO速度时,完全可以将 innodb_io_capacity 的值调得再高点,知道符合你的磁盘IO的吞吐量为止。

慢查询日志

MySQL允许用户通过 long_query_time 参数来设置,默认值是10,代表10秒。默认情况下,MySQL数据库并不启动慢查询日志,需要我们手工将这个参数(log_slow_queries)设为ON,然后启动。

* 注意1

当设置了long_query_time后,MySQL数据库会记录运行时间超过该值的所有SQL语句,但对于运行时间正好等于long_query_time的情况,并不会被记录下。

* 注意2

从MySQL5.1开始,long_query_time开始以微秒记录SQL语句运行时间。

另一个和慢查询日志有关的参数是 log_queries_not_using_indexes,如果运行的SQL语句没有使用索引,则MySQL数据库同样会将这条SQL语句记录到慢查询日志文件。

使用 mysqldumpslow 命令可以分析慢查询日志文件

mysqldumpslow nh122-190-slow.log

MySQL5.1开始可以将慢查询的日志记录放入一张表中,这使我们的查询更加直观。慢查询表在MySQL数据库中,名为slow_log。

参数log_output指定了慢查询输出的格式,默认为FILE,你可以将它设为TABLE,然后就可以查询mysql数据库的slow_log表了。

set global log_output='TABLE';

分区表

MySQL 5.1 后添加对表分区的支持,当然支持的分区类型为水平分区(一表中不同行的记录分配到不同的物理文件中)。此外,MySQL数据库的分区是局部分区索引,一个分区中既存放了数据又存放了索引。

show variables like '%partition%'/G;

MySQL目前支持的分区类型有:

* RANGE分区:行数据基于属于一个给定连续区间的列值放入分区。MySQL5.5开始支持RANGE COLUMNS的分区。

* LIST分区:和RANGE分区类似,只是LIST分区面向的是离散的值。MySQL5.5开始支持LIST COLUMNS的分区。

* HASH分区:根据用户自定义的表达式的返回值来进行分区,返回值不能为负数。

* KEY分区:根据MySQL数据库提供的哈希函数来进行分区。

* 不论创建何种类型的分区,如果表中存在主键或者是唯一索引时,分区别必须是唯一索引的一个组成部分。唯一索引可以是允许NULL值的,并且分区列只要是唯一索引的一个组成部分,不需要整个唯一索引列都是分区列。

* 当建表时没有指定主键,唯一索引时,可以指定任何一个列为分区列。

B+树索引

B+树索引其本质就是B+树在数据库中的实现,但是B+的索引在数据库中有一个特定就是高扇出性,因此在数据库中,B+树的高度一般都在2-3层,也就是对于查询某一键值的行记录,最多只需要2到3次IO,而对于当前的硬盘速度,2-3次IO也就意味着查询时间只需要0.02-0.03秒。

什么时候使用B+树索引

* 访问高选择性字段并从表中取出很少一部分行时,对这个字段添加B+树索引是非常有必要的。

聚集索引和辅助索引

InnoDB存储引擎是索引组织表,即表中数据按照主键顺序存放。而聚集索引就是按照每张表的主键构造一颗B+树,并且叶节点中存放着整张表的行记录数据,因此也让聚集索引的叶节点成为数据页。

每张表只能拥有一个聚集索引。

辅助索引(非聚集索引),叶级别不包含行的全部数据。叶节点除了包含键值以外,每个叶级别中的索引行还包含了一个书签,该书签用来告诉InnoDB存储引擎。

事务的隐式提交

不好的事务习惯

* 在循环中提交

create procedure load1(count int unsigned)begindeclare s int unsigned default 1;declare c char(80) default repreat('a',80);while s <p>* 使用自动提交</p><p>自动提交并不是好习惯,因为这对于初级DBA容易犯错,另外对于一些开发人员可能产生错误的理解,如我们在上面提到的循环提交问题。MySQL数据库默认设置使用自动提交。可以使用如下语句来改变当然自动提交的方式</p><pre class="brush:php;toolbar:false">set autocommit=0;* 使用自动回滚

create procedure sp_auto_rollback_demo()begindeclare exit handler for sqlexception rollback;start transaction;insert into b select 1;insert into c select 2;insert into b select 1;insert into b select 3;commit;end;

bitsCN.com

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

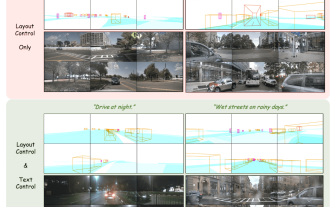

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

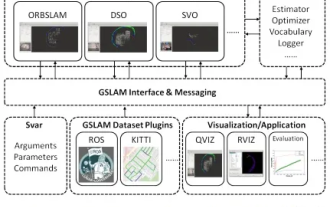

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

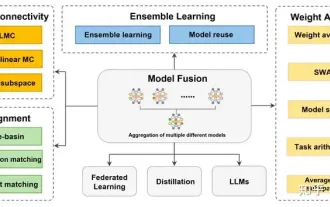

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion