Can generative AI save the telecom industry?

At the recent MWC 2024 conference, NVIDIA made a series of announcements, including partnerships with ARM, ServiceNow and SoftBank and the launch of the AI-RAN Alliance. It also struck a major agreement with Telenor that gives Telenor access to NVIDIA's latest hardware and enterprise AI software to support the many artificial intelligence use cases in its operations.

The broader relationship between the telecommunications industry and generative AI

In an interview with industry media, Chris Penrose, head of global telecommunications business development at NVIDIA, explored the deeper connections between the telecommunications industry and generative AI.

When asked about the biggest issue facing the telecom industry, he said: "I would say that telecom companies are investing heavily in 5G right now, but that doesn't necessarily translate into significant revenue growth. They need to find ways to ensure that they're getting a return on their investment. In the early days, a lot of people were excited about the killer apps that would capture that value, and I think we're actually at an interesting moment now, because I believe generative AI has the potential to become that killer app.

Existing applications are already using generative AI as part of their user interfaces. There is a real opportunity now: whether it is providing infrastructure as a service, training as a service, inference as a service, or even AI applications, these are all new opportunities driven by generative AI. Telecom companies hope to use generative AI to bring in additional revenue so that their investment pays off, and they all hope to use their 5G infrastructure in a way that attracts more attention." Telecom companies have an important role to play in generative AI, but they are not necessarily the ones driving it. For now, generative AI is primarily a business focus of large technology companies such as Google and Microsoft.

Penrose said: "We are seeing a lot of telecommunications companies starting to take action, because this is the next wave of technology. Many countries and regions are asking, 'What kind of infrastructure do we need to drive AI progress?' That's why we're talking about setting up AI factories or sovereign AI clouds in these countries.

So we are starting to see more and more telcos thinking about how to play in this space. Telcos typically own and operate their own data centers with enough space and power, which is exactly what users need, and they also know how to run large infrastructure projects.

This is a very specific type of build that has to be done to truly unlock generative AI. To get the performance, you need hundreds or thousands of GPUs to train AI models, which doesn't necessarily look like a standard deployment. So we need to encourage them to step forward; that is a decision the telcos have to make, and they have to embark on this journey. Telcos have many advantages: they are close to the government, they have good relationships with enterprise customers, and they are trusted partners in their own countries. So, can they really be at the forefront of this wave of technology?"

The above is the detailed content of "Can generative AI save the telecom industry?" For more information, please follow other related articles on the PHP Chinese website.

Hot Topics

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Can fine-tuning really allow LLM to learn new things: introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024 pm 03:57 PM

Large Language Models (LLMs) are trained on huge text databases, where they acquire large amounts of real-world knowledge. This knowledge is embedded into their parameters and can then be used when needed. The knowledge of these models is "reified" at the end of training. At the end of pre-training, the model actually stops learning. Align or fine-tune the model to learn how to leverage this knowledge and respond more naturally to user questions. But sometimes model knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input from human annotators or other LLM creations, where the model encounters additional real-world knowledge and integrates it

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

NVIDIA dialogue model ChatQA has evolved to version 2.0, with the context length mentioned at 128K

Jul 26, 2024 am 08:40 AM

The open LLM community is an era when a hundred flowers bloom and compete. You can see Llama-3-70B-Instruct, QWen2-72B-Instruct, Nemotron-4-340B-Instruct, Mixtral-8x22BInstruct-v0.1 and many other excellent performers. Model. However, compared with proprietary large models represented by GPT-4-Turbo, open models still have significant gaps in many fields. In addition to general models, some open models that specialize in key areas have been developed, such as DeepSeek-Coder-V2 for programming and mathematics, and InternVL for visual-language tasks.

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

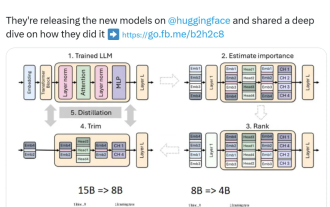

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

Compliant with NVIDIA SFF-Ready specification, ASUS launches Prime GeForce RTX 40 series graphics cards

Jun 15, 2024 pm 04:38 PM

According to news from this site on June 15, Asus has recently launched the Prime series GeForce RTX40 series "Ada" graphics card. Its size complies with Nvidia's latest SFF-Ready specification. This specification requires that the size of the graphics card does not exceed 304 mm x 151 mm x 50 mm (length x height x thickness). ). The Prime series GeForceRTX40 series launched by ASUS this time includes RTX4060Ti, RTX4070 and RTX4070SUPER, but it currently does not include RTX4070TiSUPER or RTX4080SUPER. This series of RTX40 graphics cards adopts a common circuit board design with dimensions of 269 mm x 120 mm x 50 mm. The main differences between the three graphics cards are

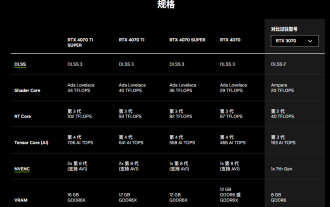

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

Nvidia releases GDDR6 memory version of GeForce RTX 4070 graphics card, available from September

Aug 21, 2024 am 07:31 AM

According to news from this site on August 20, multiple sources reported in July that Nvidia RTX4070 and above graphics cards will be in tight supply in August due to the shortage of GDDR6X video memory. Subsequently, speculation spread on the Internet about launching a GDDR6 memory version of the RTX4070 graphics card. As previously reported by this site, Nvidia today released the GameReady driver for "Black Myth: Wukong" and "Star Wars: Outlaws". At the same time, the press release also mentioned the release of the GDDR6 video memory version of GeForce RTX4070. Nvidia stated that the new RTX4070's specifications other than the video memory will remain unchanged (of course, it will also continue to maintain the price of 4,799 yuan), providing similar performance to the original version in games and applications, and related products will be launched from