As Kubernetes and container technology become increasingly mature, more and more enterprises are beginning to migrate their business applications to the cloud. By adopting cloud-native architecture, these enterprises can better support the rapid development and stable operation of their businesses.

As cloud computing becomes more deeply embedded in the development process, the maturing of Serverless architecture has brought cloud native technology to a new stage: Serverless takes the public cloud's elastic scalability, operations-free maintenance, and fast onboarding to the extreme. It greatly reduces the cost of use, allowing users and enterprises to focus only on their business logic and achieve truly agile development.

To better help enterprises put the new Serverless cloud native concept into practice in their business applications, the Volcano Engine cloud native team is comprehensively upgrading and innovating its products, covering product concept, system design, and architecture design. The team is committed to giving full play to the advantages and value of Serverless technology to help enterprises run business applications more efficiently.

From Node-Centric to Serverless Architecture

The traditional Kubernetes architecture usually takes the node as its core, which means that the team needs to build clusters on resource nodes such as cloud servers and perform operation and maintenance management around these nodes. The traditional Kubernetes cluster architecture requires managing the deployment, monitoring, and maintenance of container applications on different nodes. This approach requires considerable manpower and time to maintain the stability and reliability of the entire cluster. With the development of technology, a new type of Kubernetes architecture has also emerged, namely an architecture based on a service mesh, in which network functions are abstracted and unified through dedicated service mesh components. As the business scale expands and the number of nodes increases, this architecture becomes increasingly important for container applications. Deploying and operating applications on node-centric clusters usually brings some challenges, mainly including the following aspects:

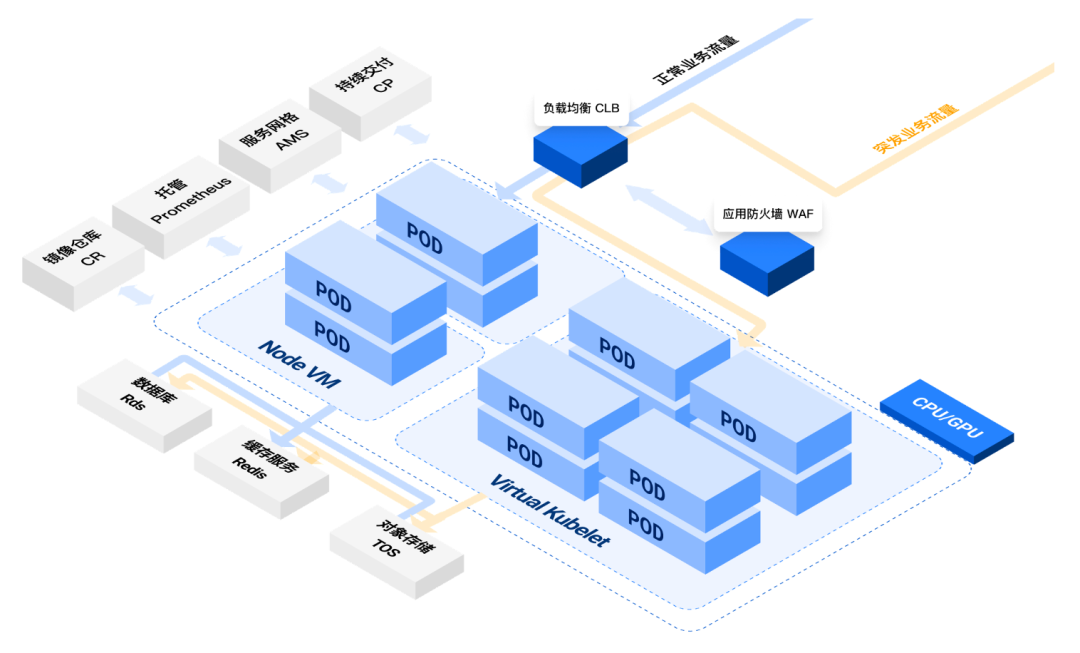

Elastic Container Instance (Volcengine Container Instance, referred to as VCI) is a new cloud native solution launched to address the above situation. It is a serverless, containerized computing service distilled from ByteDance's many years of internal cloud native technology accumulation. Through Virtual Kubelet technology and the Volcano Engine container service VKE, VCI provides users with elastic computing and Kubernetes orchestration capabilities, supporting fast startup, high-concurrency creation, and secure sandbox container isolation.

By combining the advantages of Serverless and containers, Elastic Container Instance (VCI) provides enterprises with an efficient, reliable, and secure operating environment. Users can focus on developing and running cloud-native applications without worrying about managing and maintaining the underlying infrastructure, and they pay only for the resources their business actually needs at runtime, which helps enterprises control cloud costs more reasonably.

No operation and maintenance of infrastructure, no planning of computing resources

In the traditional self-built Kubernetes cluster architecture, whether in the control plane or the data plane, nodes are the carriers on which container groups (Pods) run and sit at the core of the Kubernetes architecture. When a node's computing resources are insufficient, more nodes must be added to support the deployment or expansion of business workloads.

On the cloud, managed Kubernetes services, represented by Volcano Engine Container Service VKE, manage and operate the Kubernetes control plane on behalf of users and provide managed services with SLA guarantees. For the data plane, which runs users' actual business workloads, users usually still need to operate and maintain worker nodes themselves, and the computing resources provided by those nodes must be planned in advance according to business characteristics. For example, capacity must be expanded ahead of business peaks and scaled in during business troughs.

Therefore, in the traditional node-centric Kubernetes architecture, node operation and maintenance often consumes a great deal of the technical team's energy. At the same time, how to balance advance resource planning against resource cost has become a question that enterprises have to think about in the era of "refined cloud usage".

Elastic Container Instance VCI provides Serverless, containerized computing services. Each VCI provides the operating environment and computing resources for a single Pod only. Users do not need to care about the operation and maintenance or capacity planning of underlying nodes; they only need to deploy the container application. When deploying container applications on VCI, users can use native Kubernetes semantics, just as in the node-centric architecture.
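To illustrate what "native Kubernetes semantics" means in practice, here is a minimal sketch that builds an ordinary Pod manifest in Python and prints it as JSON, a format `kubectl apply -f` accepts. The Pod name, image, and resource sizes are placeholders chosen for illustration. The article does not describe how a cluster directs a Pod to VCI, so no VCI-specific settings are shown.

```python
import json


def pod_manifest(name: str, image: str, cpu: str, memory: str) -> dict:
    """Build a plain Kubernetes Pod manifest with equal requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        # Standard Kubernetes resource fields; nothing here is VCI-specific.
                        "requests": {"cpu": cpu, "memory": memory},
                        "limits": {"cpu": cpu, "memory": memory},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Placeholder values: a 0.5 vCPU / 1 GiB Pod running a public nginx image.
    print(json.dumps(pod_manifest("demo-app", "nginx:1.25", "500m", "1Gi"), indent=2))
```

The manifest is the same one a node-based cluster would accept, which is the point of the paragraph above: the workload definition does not change when the compute underneath does.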

At the resource level, Elastic Container Instance VCI uses the Volcano Engine computing infrastructure as its resource pool and provides container computing resources of multiple types, so that users can choose flexibly according to business needs. Users also do not need to worry about resource capacity planning: VCI provides massive computing resources and avoids the Pod drift and rescheduling that are common in traditional node-centric architectures, along with the problems they cause, such as service interruptions, performance fluctuations, data inconsistency, and scheduling delays.

Finally, the seamless integration of container service VKE and Elastic Container Instance VCI provides users not only with a fully managed Kubernetes control plane but also with a fully Serverless data plane (that is, Elastic Container Instance VCI). Being fully managed, operations-free, and securely isolated, it greatly reduces users' operation and maintenance costs for Kubernetes infrastructure and removes the complexity of capacity planning, allowing users to focus more on their business applications.

Ultimate elasticity, you get what you need

Flexibility and savings

According to survey data disclosed by Flexera in its "2022 State of the Cloud Report", the surveyed companies estimated that 32% of their cloud spend was wasted, and "cost" has been one of the cloud challenges enterprises care most about for three consecutive years. "Refined cloud usage" has begun to gain traction: more and more enterprises are paying attention to how to save cloud costs while meeting business development needs, and this has become an important challenge they must solve when using the cloud.

Elastic Container Instance VCI has focused on enterprises' need for cloud cost control from the beginning of its product design. It emphasizes fine-grained billing based on the resources actually used and supports multiple billing models, helping enterprises truly make good use of the cloud.

Refined billing: Elastic Container Instance VCI is billed according to the resources actually used by the user's container group (Pod). Billable resources include vCPU, memory, GPU, and so on. The billing duration of a VCI instance is its running time, that is, the time from when the user's Pod starts downloading the container image until it stops running, accurate to the second, so billing truly follows actual usage.
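The per-second billing rule above can be sketched as a small calculation. This is only an illustration under stated assumptions: the unit prices are made-up numbers in arbitrary price units (the article gives no prices), the names `PodResources`, `billable_seconds`, and `pod_cost` are hypothetical, and truncating any fractional second is an assumption rather than documented behavior.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class PodResources:
    vcpu: float
    memory_gib: float
    gpu: int = 0


# Hypothetical unit prices per second, in arbitrary price units (not real VCI prices).
HYPOTHETICAL_PRICES = {"vcpu": 2, "memory_gib": 1, "gpu": 10}


def billable_seconds(image_pull_started: datetime, stopped: datetime) -> int:
    """Whole seconds from the start of the image download until the Pod stops."""
    return max(0, int((stopped - image_pull_started).total_seconds()))


def pod_cost(resources: PodResources, image_pull_started: datetime,
             stopped: datetime, prices: dict = HYPOTHETICAL_PRICES) -> float:
    """Cost = sum of (resource amount x unit price) x billable seconds."""
    per_second = (
        resources.vcpu * prices["vcpu"]
        + resources.memory_gib * prices["memory_gib"]
        + resources.gpu * prices["gpu"]
    )
    return per_second * billable_seconds(image_pull_started, stopped)


if __name__ == "__main__":
    started = datetime(2024, 1, 1, 12, 0, 0)
    stopped = datetime(2024, 1, 1, 12, 1, 30)  # the Pod existed for 90 seconds
    print(pod_cost(PodResources(vcpu=2, memory_gib=4), started, stopped))
```

With these made-up prices, a Pod with 2 vCPU and 4 GiB costs 8 units per second, so 90 seconds of running time comes to 720 units, and nothing is charged once the Pod has stopped.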

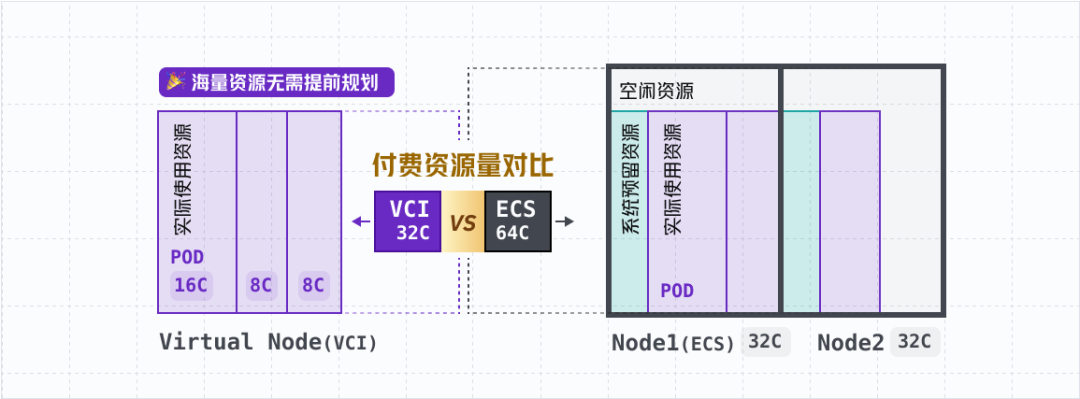

Improve the packing rate: Under the cloud native architecture, another advantage of elastic container instances over traditional computing resources is that they reduce idle resources and improve the packing rate, thereby lowering users' computing resource costs. Specifically, when a Kubernetes cluster uses cloud server ECS instances as worker nodes, the system components required by Kubernetes must run on each node, so some computing resources have to be reserved, which incurs additional cost. If Elastic Container Instance VCI is used as the computing resource, users only pay for the resources actually used by their business Pods.
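A rough arithmetic sketch makes the packing-rate argument concrete. The node size, the amount reserved for system components, and the Pod size below are invented for illustration, and the function names are hypothetical. The model also considers vCPU only, which simplifies how real scheduling works.

```python
def billed_vcpu_on_nodes(node_vcpu: int, reserved_vcpu: int,
                         pod_vcpu: int, pod_count: int) -> int:
    """vCPU paid for when Pods are packed onto worker nodes of a fixed size."""
    allocatable = node_vcpu - reserved_vcpu  # what is left after system components
    pods_per_node = allocatable // pod_vcpu
    if pods_per_node <= 0:
        raise ValueError("a single Pod does not fit on one node")
    nodes_needed = -(-pod_count // pods_per_node)  # ceiling division
    return nodes_needed * node_vcpu


def billed_vcpu_on_vci(pod_vcpu: int, pod_count: int) -> int:
    """vCPU paid for when every Pod gets its own container instance."""
    return pod_vcpu * pod_count


if __name__ == "__main__":
    # Assumed numbers: 8-vCPU nodes, 1 vCPU reserved per node, ten 2-vCPU Pods.
    on_nodes = billed_vcpu_on_nodes(node_vcpu=8, reserved_vcpu=1, pod_vcpu=2, pod_count=10)
    on_vci = billed_vcpu_on_vci(pod_vcpu=2, pod_count=10)
    print(on_nodes, on_vci, on_vci / on_nodes)
```

Under these assumptions only three Pods fit on each node, so four nodes (32 vCPU) are paid for to run 20 vCPU of workload, a packing rate of 62.5%. The per-Pod model pays for the 20 vCPU only.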

Rich billing methods: Elastic Container Instance VCI currently supports pay-as-you-go billing officially and will launch an invitation-only test of the preemptible instance (Spot) billing model. Prepaid models such as reserved instances and flexible reservation instances are also planned. By providing multiple billing models, we hope to help users further optimize cost management and budgeting for computing resources and choose different billing models for different business scenarios, thereby better aligning business resource needs with cloud cost planning.

Scaling out with ample supply

Elastic Container Instance VCI draws its computing power from the full Volcano Engine computing infrastructure and, based on different underlying hardware capabilities, offers a variety of instance specification families with differentiated computing, storage, and network performance, covering a wide range of business applications and service scenarios.

CPU general-purpose specification families: for example, general-purpose computing u1 and general-purpose n3i, which provide balanced vCPU, memory, and network capabilities and meet service requirements in most scenarios.

GPU computing specification families: for example, GPU computing gni2 (equipped with NVIDIA A10 GPUs) and GPU computing g1v (equipped with NVIDIA V100 GPUs), which offer a cost-effective experience in AI computing scenarios such as large model training, text and image generation, and inference tasks.

The latest general-purpose computing u1 instance specification family relies on Volcano Engine's resource pooling technology and intelligent scheduling algorithms for dynamic resource management. It provides enterprises with a stable supply of computing power and supports a variety of processors and flexible vCPU-to-memory ratios, ranging from fine-grained small specifications such as 0.25C-0.5Gi, 0.5C-1Gi, and 1C-2Gi to large specifications such as 24C-48Gi and 32C-256Gi, at a very competitive price-performance ratio.
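As a small illustration of why fine-grained specifications matter, the sketch below picks the smallest listed specification that covers a Pod's request. The specification list contains only the five sizes named above, not the full u1 catalog, and the "smallest that fits" rule and the function name are assumptions for illustration, not documented VCI behavior.

```python
# (vCPU, memory in GiB) pairs named in the article; the real u1 family has more.
U1_SPECS_FROM_ARTICLE = [(0.25, 0.5), (0.5, 1), (1, 2), (24, 48), (32, 256)]


def smallest_fitting_spec(vcpu: float, memory_gib: float,
                          specs=U1_SPECS_FROM_ARTICLE):
    """Return the smallest (vCPU, GiB) spec covering the request, or None."""
    for spec_vcpu, spec_mem in sorted(specs):
        if spec_vcpu >= vcpu and spec_mem >= memory_gib:
            return (spec_vcpu, spec_mem)
    return None


if __name__ == "__main__":
    print(smallest_fitting_spec(0.3, 0.8))  # a small sidecar-sized request
    print(smallest_fitting_spec(2, 4))      # falls in the gap of this partial list
```

With a 0.25C step at the low end, a request for 0.3 vCPU and 0.8 GiB lands on 0.5C-1Gi instead of being rounded up to a whole core. The second call jumps to 24C-48Gi only because this partial list omits the intermediate sizes.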

Backed by a huge supply of computing resources, Elastic Container Instance VCI also provides users with an industry-leading elastic resource priority scheduling strategy: combined with the container service VKE, users can define a resource policy (ResourcePolicy) to schedule workloads elastically across different types of computing resource pools, such as cloud server ECS and Elastic Container Instance VCI, and allocate them by percentage thresholds, priority control, and other strategies according to actual business scenarios.
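The idea of scheduling by priority and percentage threshold can be sketched as a toy allocation function. This does not reproduce the ResourcePolicy schema of VKE: the `Pool` fields, the "lower number is tried first" convention, and the rounding rule are all assumptions made purely to show the mechanism.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    priority: int     # hypothetical convention: lower number is tried first
    max_percent: int  # hypothetical cap: share of all replicas this pool may take


def allocate(replicas: int, pools: list[Pool]) -> dict[str, int]:
    """Place replicas pool by pool in priority order, respecting each pool's cap."""
    placement: dict[str, int] = {}
    remaining = replicas
    for pool in sorted(pools, key=lambda p: p.priority):
        cap = replicas * pool.max_percent // 100  # integer math, rounds down
        take = min(remaining, cap)
        placement[pool.name] = take
        remaining -= take
    if remaining:
        placement["unscheduled"] = remaining
    return placement


if __name__ == "__main__":
    pools = [Pool("vci", priority=2, max_percent=100),
             Pool("ecs", priority=1, max_percent=60)]
    print(allocate(10, pools))
```

In this example the ECS pool is preferred but capped at 60% of replicas, so 6 of 10 replicas land on ECS and the remaining 4 overflow to VCI. That is the kind of mixed placement the paragraph above describes.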

This means that users can combine the cloud server ECS resource pool with the Elastic Container Instance VCI resource pool to draw on Volcano Engine's massive computing resources. In addition, by combining the Cluster Autoscaler capability in the container cluster with VCI, users can obtain elastic resources in time to support business applications efficiently, even if computing resources were not requested for the cluster in advance.

Scaling out fast

Faced with unpredictable traffic peaks in online business, keeping the business stable and preserving user experience is crucial; at critical moments of business growth, quickly provisioning computing resources and quickly bringing business applications online is just as critical. Elastic container instances are a natural fit for these scenarios.

On the one hand, the elasticity of Volcano Engine Elastic Container Instance (VCI) can supply vCPU computing resources at a rate of tens of thousands of cores per minute, ensuring that sufficient computing resources are available quickly when the business needs them. When the traffic peak is over and the workload decreases, elastic computing resources can be released just as quickly, making cloud costs more economical.

On the other hand, pulling container images often takes a long time, and the problem becomes more serious when Pods are started concurrently at large scale. Elastic Container Instance VCI not only has the container image acceleration capabilities provided by VKE, such as lazy loading of container images and P2P container image distribution, but also has its own acceleration capabilities such as container image caching. According to actual test data, container image caching can keep the overall startup time of a Pod whose container image is within 100 G in size to the order of ten seconds, helping users greatly improve efficiency and reduce cloud costs.

Conclusion

Since its official launch in 2022, Elastic Container Instance VCI has served many large and medium-sized enterprise customers and has been thoroughly tested in practice across ByteDance's internal and external business scenarios. The Volcano Engine cloud native team also continuously polishes product capabilities based on customer service experience and keeps improving the quality and stability of its products and services.

The Volcano Engine cloud native team is mainly responsible for building the PaaS product system for Volcano Engine's public cloud and private deployment scenarios. Drawing on ByteDance's many years of cloud native technology stack experience and best practices, it helps enterprises accelerate digital transformation and innovation. Its products include container services, image registries, distributed cloud native platforms, function services, service meshes, continuous delivery, observability services, and more.

Looking ahead to 2024, Elastic Container Instance VCI will continue to improve and explore within the Serverless Kubernetes product architecture and form, providing more internal and external customers with Serverless containerized products and services that require no infrastructure operation and maintenance and no computing resource planning and that offer ultimate elasticity. This will better support customers' GPU and CPU computing power needs in AIGC, bioinformatics and scientific computing, social e-commerce, and other business scenarios.
