Understand in one article: the connections and differences between AI, machine learning and deep learning


In today’s wave of rapid technological change, Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL) are like bright stars leading the new tide of information technology. These three terms appear frequently in cutting-edge discussions and practical applications, but for many newcomers to the field, their specific meanings and the relationships among them may still be shrouded in mystery.

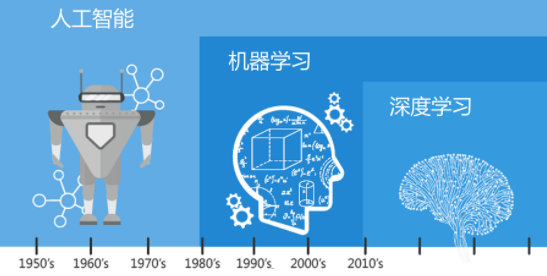

Let’s take a look at this picture first.

It can be seen that deep learning, machine learning, and artificial intelligence are closely related and nested inside one another: deep learning is a subfield of machine learning, and machine learning is in turn an important component of artificial intelligence. The connections among these fields, and the way progress in one promotes the others, drive the continuous development and improvement of artificial intelligence technology.

What is artificial intelligence?

Artificial Intelligence (AI) is a broad concept whose main goal is to develop computing systems that can simulate, extend or even surpass human intelligence. It has specific applications in many fields, such as:

- Image Recognition is an important branch of AI. It studies how to enable computers to acquire data through visual sensors and analyze that data to identify objects, scenes, behaviors, and other information in images, simulating the way the human eye and brain recognize and understand visual signals.

- Natural Language Processing (NLP) is the ability of computers to understand and generate human natural language. It covers a variety of tasks such as text classification, semantic analysis, and machine translation, and strives to simulate human intelligent behavior in listening, speaking, reading, and writing.

- Computer Vision (CV) includes image recognition in a broader sense, and also involves image analysis, video analysis, three-dimensional reconstruction, and other areas. It aims to let computers "see" and understand the world from two-dimensional or three-dimensional images, which is a deep imitation of the human visual system.

- Knowledge Graph (KG) is a structured data model used to store and represent entities and the complex relationships among them. It simulates how humans accumulate and use knowledge in the cognitive process, and how they reason and learn based on prior knowledge.
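To make the knowledge graph idea concrete, here is a minimal sketch that stores facts as (subject, relation, object) triples and infers broader categories by following `is_a` edges. The facts, the relation names, and the helper functions are all hypothetical and chosen only for illustration; real knowledge graph systems are far richer.

```python
from collections import defaultdict

# Hypothetical facts stored as (subject, relation, object) triples.
TRIPLES = [
    ("cat", "is_a", "mammal"),
    ("mammal", "is_a", "animal"),
    ("cat", "has_part", "whiskers"),
]

def build_index(triples):
    """Index triples by (subject, relation) so related objects are easy to look up."""
    index = defaultdict(set)
    for subject, relation, obj in triples:
        index[(subject, relation)].add(obj)
    return index

def ancestors(index, entity):
    """Follow 'is_a' edges transitively to infer every broader category."""
    found = set()
    frontier = [entity]
    while frontier:
        current = frontier.pop()
        for parent in index.get((current, "is_a"), ()):
            if parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found

index = build_index(TRIPLES)
print(sorted(ancestors(index, "cat")))  # ['animal', 'mammal']
```

The fact that a cat is an animal is never stored directly; it is derived from two stored facts, which is a small instance of reasoning based on prior knowledge.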

All of these technologies are researched and applied around the core concept of “simulating human intelligence”. They focus on different dimensions of perception and cognition (such as vision, hearing, and logical thinking), and jointly promote the continuous development and progress of artificial intelligence technology.

What is machine learning?

Machine Learning (ML) is a crucial branch of artificial intelligence (AI). It uses various algorithms to enable computer systems to automatically learn rules and patterns from data and use them to make predictions and decisions, thereby augmenting and extending human intelligence.

For example, when training a cat recognition model, the machine learning process is as follows:

- Data preprocessing: First, preprocess the large number of collected cat and non-cat pictures, including scaling, grayscale conversion, normalization, and other operations, and convert each image into a feature vector representation. These features may come from manually designed feature extraction techniques, such as Haar-like features, local binary patterns (LBP), or other feature descriptors commonly used in computer vision.

- Feature selection and dimensionality reduction: Select key features according to the characteristics of the problem, remove redundant and irrelevant information, and sometimes use PCA, LDA and other dimensionality reduction methods to further reduce feature dimensions and improve algorithm efficiency.

- Model training: Then use the preprocessed, labeled data set to train the selected machine learning model, and optimize its performance by adjusting the model parameters so that the model can distinguish pictures of cats from non-cats based on the given features.

- Model evaluation and verification: After training is completed, the model is evaluated using an independent test set to ensure that the model has good generalization ability and can be accurately applied to new unseen samples.
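The steps above can be sketched end to end in a few lines. This is a toy illustration under loud assumptions: the 2x2 "images", the two hand-designed features, and the nearest-centroid classifier are hypothetical stand-ins for real pictures, real descriptors such as LBP, and whichever model is actually selected. The feature selection and dimensionality reduction step is omitted because only two features are used.

```python
import math

def preprocess(image):
    """Scale 0-255 grayscale pixel values into the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]

def extract_features(image):
    """Hand-designed features: mean brightness and top-vs-bottom contrast."""
    half = len(image) // 2
    top = [p for row in image[:half] for p in row]
    bottom = [p for row in image[half:] for p in row]
    mean_brightness = (sum(top) + sum(bottom)) / (len(top) + len(bottom))
    contrast = sum(top) / len(top) - sum(bottom) / len(bottom)
    return [mean_brightness, contrast]

def featurize(images):
    """Preprocess every image and turn it into a feature vector."""
    return [extract_features(preprocess(image)) for image in images]

def train(features, labels):
    """Fit one centroid (mean feature vector) per class label."""
    centroids = {}
    for label in sorted(set(labels)):
        rows = [f for f, l in zip(features, labels) if l == label]
        centroids[label] = [sum(column) / len(rows) for column in zip(*rows)]
    return centroids

def predict(centroids, feature_vector):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feature_vector))

def evaluate(centroids, features, labels):
    """Fraction of samples classified correctly."""
    correct = sum(predict(centroids, f) == l for f, l in zip(features, labels))
    return correct / len(labels)

# Hypothetical toy data: "cats" are bright on top and dark below, "non-cats" are flat.
train_images = [[[200, 220], [20, 30]], [[180, 190], [40, 50]],
                [[100, 110], [105, 95]], [[30, 40], [35, 45]]]
train_labels = ["cat", "cat", "non-cat", "non-cat"]
test_images = [[[210, 200], [60, 40]], [[150, 160], [155, 145]]]
test_labels = ["cat", "non-cat"]

model = train(featurize(train_images), train_labels)
print("test accuracy:", evaluate(model, featurize(test_images), test_labels))
```

The point of the sketch is the division of labor: a person decides which features matter, and the algorithm only learns how to separate the classes in that feature space.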

Ten commonly used machine learning algorithms are: decision trees, random forests, logistic regression, SVM, naive Bayes, K-nearest neighbors, K-means, AdaBoost, neural networks, and Markov models.

What is deep learning?

Deep Learning (DL) is a special form of machine learning. It simulates the way the human brain processes information through a deep neural network structure, thereby automatically extracting complex feature representations from the data.

For example, when training a cat recognition model, the deep learning process is as follows:

(1) Data preprocessing and preparation:

- Collect a large dataset containing cat and non-cat images, then clean and annotate it to ensure that each image has a corresponding label (such as "cat" or "non-cat").

- Image preprocessing: Resize all images to a uniform size, then apply normalization, data augmentation, and other operations.

(2) Model design and construction:

- Choose a deep learning architecture. For image recognition tasks, Convolutional Neural Network (CNN) is usually used. CNN can effectively extract local features of images and abstract them through multi-layer structures.

- Build the model hierarchy, including convolutional layers (for feature extraction), pooling layers (to reduce the amount of computation and help prevent overfitting), fully connected layers (to integrate and classify features), and possibly batch normalization layers and activation functions (such as ReLU or sigmoid).
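As a rough illustration of what the first few layers compute, the sketch below implements a single convolution, a ReLU activation, and 2x2 max pooling in plain Python. The input image and the edge-detecting kernel are hypothetical; in a real CNN the kernel values are learned during training, and a framework would handle batching, channels, and padding.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Replace negative activations with zero."""
    return [[max(0.0, value) for value in row] for row in feature_map]

def max_pool2x2(feature_map):
    """Non-overlapping 2x2 max pooling; a trailing odd row or column is dropped."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]

# Hypothetical 5x5 image with a vertical edge between columns 1 and 2.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

pooled = max_pool2x2(relu(conv2d(image, kernel)))
print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
```

The 4x4 feature map is strong only where the edge is, and pooling halves each dimension while keeping that response, which is how pooling reduces computation without discarding the detected feature.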

(3) Initializing parameters and setting hyperparameters:

- Initialize the weights and biases of each layer in the model; random initialization or a specific initialization strategy can be used.

- Set hyperparameters such as the learning rate, optimizer (such as SGD or Adam), batch size, and number of training epochs.
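A minimal sketch of this step is shown below. It uses Xavier (Glorot) uniform initialization as one example of a "specific initialization strategy"; the article does not say which strategy to use, so that choice, the layer sizes, and every hyperparameter value here are assumptions made purely for illustration.

```python
import math
import random

# Hypothetical hyperparameter values, chosen only for illustration.
HYPERPARAMS = {
    "learning_rate": 0.01,
    "optimizer": "sgd",
    "batch_size": 32,
    "epochs": 10,
}

def init_layer(n_inputs, n_outputs, rng):
    """Xavier/Glorot uniform initialization for one fully connected layer.

    Weights are drawn from U(-limit, limit) with
    limit = sqrt(6 / (n_inputs + n_outputs)); biases start at zero.
    """
    limit = math.sqrt(6.0 / (n_inputs + n_outputs))
    weights = [[rng.uniform(-limit, limit) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases

rng = random.Random(0)  # fixed seed so repeated runs are reproducible
weights, biases = init_layer(n_inputs=8, n_outputs=2, rng=rng)
print(len(weights), "output units with", len(weights[0]), "weights each")
```

Scaling the random range by the layer size keeps activations from growing or shrinking too quickly as they pass through many layers, which is why such strategies are often preferred over an arbitrary fixed range.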

(4) Forward propagation:

- Input the preprocessed image into the model and perform operations such as convolution, pooling, and linear transformation at each layer, finally obtaining the predicted probability distribution at the output layer, that is, the probability the model assigns to the input picture being a cat.
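The last part of the forward pass, from extracted features to a probability distribution, can be sketched as a linear transformation followed by softmax. The feature values, weights, and the two-class ordering (`cat`, `non-cat`) are hypothetical; the convolution and pooling stages that would produce the features are left out.

```python
import math

def linear(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical features produced by earlier layers, and hypothetical weights.
features = [1.0, 2.0]
weights = [[0.5, 0.5],    # scores the "cat" class
           [-0.5, -0.5]]  # scores the "non-cat" class
biases = [0.0, 0.0]

logits = linear(features, weights, biases)
probabilities = softmax(logits)
print(dict(zip(["cat", "non-cat"], probabilities)))
```

With these numbers the logits are 1.5 and -1.5, so most of the probability mass goes to the first class.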

(5) Loss function and backpropagation:

- Use the cross-entropy loss function or another suitable loss function to measure the difference between the model's predictions and the true labels.

- After calculating the loss, execute the backpropagation algorithm to calculate the gradient of the loss with respect to the model parameters so that the parameters can be updated.
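For a single sigmoid output unit, both bullets fit in a few lines. With prediction p = sigmoid(w·x + b) and label y, the binary cross-entropy loss is -(y·log p + (1 - y)·log(1 - p)), and applying the chain rule gives the gradient (p - y)·x for the weights and (p - y) for the bias. The sketch below assumes this one-layer setting (a deep network repeats the same chain rule layer by layer) and checks the analytic gradient against a finite-difference estimate. All numbers are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def loss(weights, bias, x, y):
    """Binary cross-entropy for one example with label y in {0, 1}."""
    p = predict(weights, bias, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def gradients(weights, bias, x, y):
    """Analytic gradient from the chain rule: dL/dw_i = (p - y) * x_i, dL/db = p - y."""
    error = predict(weights, bias, x) - y
    return [error * xi for xi in x], error

def numerical_gradient(weights, bias, x, y, i, eps=1e-6):
    """Central finite-difference estimate of dL/dw_i, used only as a sanity check."""
    up = list(weights)
    down = list(weights)
    up[i] += eps
    down[i] -= eps
    return (loss(up, bias, x, y) - loss(down, bias, x, y)) / (2 * eps)

# Hypothetical parameters and one labeled example (y = 1 means "cat").
weights, bias = [0.2, -0.4], 0.1
x, y = [1.0, 2.0], 1

grad_w, grad_b = gradients(weights, bias, x, y)
print("analytic :", grad_w)
print("numerical:", [numerical_gradient(weights, bias, x, y, i) for i in range(2)])
```

The two printed gradients should agree to several decimal places, which is a common way to check a backpropagation implementation.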

(6) Optimization and parameter update:

- Use gradient descent or other optimization algorithms to adjust model parameters based on gradient information, with the purpose of minimizing the loss function.

- During each training iteration, the model will continue to learn and adjust parameters, gradually improving its ability to recognize cat images.
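The update rule itself is short: each parameter moves a small step against its gradient, scaled by the learning rate. The sketch below applies plain batch gradient descent to a one-feature logistic model on a tiny hypothetical dataset. The data, learning rate, and epoch count are assumptions for illustration, and optimizers such as Adam refine this same basic step.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean_loss(w, b, xs, ys):
    """Average binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(xs)

def train(xs, ys, learning_rate, epochs):
    """Batch gradient descent: repeatedly step each parameter against its gradient."""
    w, b = 0.0, 0.0
    losses = [mean_loss(w, b, xs, ys)]
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / len(xs)
        grad_b = sum(errors) / len(xs)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        losses.append(mean_loss(w, b, xs, ys))
    return w, b, losses

# Hypothetical one-dimensional feature; label 1 stands for "cat".
xs = [-1.5, -0.5, 0.5, 1.5]
ys = [0, 0, 1, 1]

w, b, losses = train(xs, ys, learning_rate=0.5, epochs=50)
print("loss before:", losses[0], "loss after:", losses[-1])
```

Printing the first and last loss shows the loss shrinking as the parameters are adjusted, which is what "continuing to learn" means in practice.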

(7) Validation and evaluation:

- Regularly evaluate the model's performance on the validation set and monitor changes in accuracy, precision, recall, and other metrics. This guides hyperparameter tuning and early stopping during model training.
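The metrics and the early stopping rule mentioned here can be sketched as follows. The labels, predictions, validation losses, and the `patience` value are hypothetical, and the stopping rule shown (stop after a fixed number of epochs without improvement) is one common variant rather than the only one.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = cat, 0 = non-cat)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

def early_stopping_epoch(val_losses, patience):
    """Index of the epoch at which training stops.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best:
            best = val_loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1

# Hypothetical validation labels, predictions, and per-epoch validation losses.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(classification_metrics(y_true, y_pred))

val_losses = [0.90, 0.70, 0.60, 0.65, 0.62, 0.61]
print(early_stopping_epoch(val_losses, patience=2))  # 4
```

In the example the best validation loss occurs at epoch 2, and two epochs without improvement trigger the stop at epoch 4, before the model has a chance to overfit further.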

(8) Training completion and testing:

- When the model’s performance on the validation set becomes stable or reaches the preset stopping conditions, stop training.

- Finally, evaluate the generalization ability of the model on an independent test set to ensure that the model can effectively identify cats on new unseen samples.

The difference between deep learning and machine learning

The difference between deep learning and machine learning is:

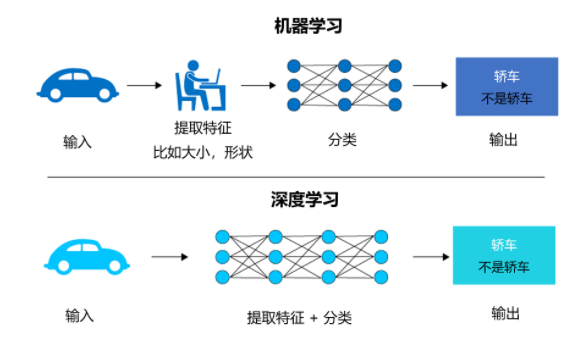

1. Method of solving problems

Machine learning algorithms usually rely on human-designed feature engineering: key features are extracted in advance based on background knowledge of the problem, and a model is then built on these features and optimized.

Deep learning adopts an end-to-end learning method, automatically generating high-level abstract features through multi-layer nonlinear transformations. These features are continuously optimized throughout training, without manual selection and construction of features, which is closer to the way the human brain processes information.

For example, suppose you want to write software that identifies cars. With machine learning, you need to manually extract the characteristics of a car, such as size and shape. With deep learning, the neural network extracts these features on its own, but it requires a large number of pictures labeled as cars to learn from.

2. Application Scenarios

Machine learning applications in fingerprint recognition, feature-based object detection, and similar fields have largely reached the level required for commercial use.

Deep learning is mainly used in text recognition, face recognition, semantic analysis, intelligent monitoring, and other fields. It is also being rapidly deployed in smart hardware, education, healthcare, and other industries.

3. Required amount of data

Machine learning algorithms can perform well even with small samples. For some simple tasks, or problems where features are easy to extract, a small amount of data can achieve satisfactory results.

Deep learning usually requires a large amount of annotated data to train deep neural networks. Its advantage is that it can learn complex patterns and representations directly from raw data, and as the data size increases, the performance gains of deep learning models become more significant.

4. Execution time

In the training phase, because a deep learning model has more layers and a large number of parameters, training is often time-consuming and requires the support of high-performance computing resources, such as GPU clusters.

In comparison, machine learning algorithms (especially lightweight models) usually need less training time and fewer computing resources, and are better suited to rapid iteration and experimental verification.
