Animate an image with a single sentence: Apple uses a large language model to generate animations, and the results can be edited directly

Large models continue to reshape creative work, and video generation technology such as Sora is a prominent example. While Sora has set off a new wave of interest, Apple's latest research is also worth attention.

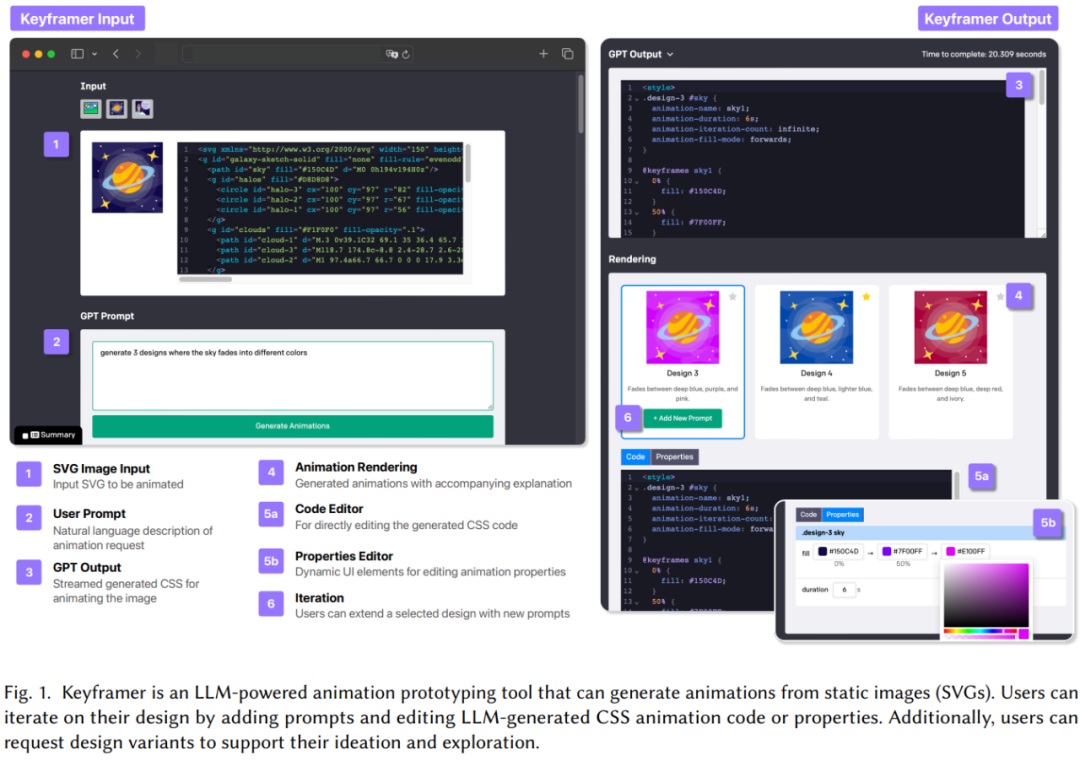

Apple researchers recently released a framework called "Keyframer" that can use large language models to generate animations. This framework allows users to easily create animations for static 2D images through natural language prompts. This research demonstrates the potential of language models in designing animations, providing animation designers with more efficient and intuitive tools.

Paper address: https://arxiv.org/pdf/2402.06071.pdf

Specifically, this research combines emerging design principles for language-based prompting of design artifacts with the code generation capabilities of LLMs to build Keyframer, a new AI-driven animation tool. Keyframer lets users create animated illustrations from static 2D images through natural language prompts. Using GPT-4, Keyframer generates CSS animation code to animate an input SVG (Scalable Vector Graphics) image.
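To make the idea of animating an SVG with CSS concrete, here is a minimal Python sketch. It is not taken from the paper: the `embed_css` helper, the one-star SVG, and the `twinkle` keyframes are all hypothetical, and the sketch simply assumes that generated CSS can be applied by placing it in a `<style>` element inside the SVG.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def embed_css(svg_text: str, css_text: str) -> str:
    """Return a copy of the SVG with css_text added in a <style> element.

    Hypothetical helper for illustration; not Keyframer's actual code.
    """
    ET.register_namespace("", SVG_NS)  # serialize without an ns0: prefix
    root = ET.fromstring(svg_text)
    style = ET.Element(f"{{{SVG_NS}}}style")
    style.text = css_text
    root.insert(0, style)  # place the stylesheet as the first child
    return ET.tostring(root, encoding="unicode")


# Hypothetical input: a single star identified by id="star".
svg = (
    '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 10 10">'
    '<circle id="star" cx="5" cy="5" r="1" fill="gold"/></svg>'
)
# Hypothetical CSS of the kind an LLM might return for "let the stars twinkle".
css = (
    "@keyframes twinkle { 0%, 100% { opacity: 1; } 50% { opacity: 0.2; } }\n"
    "#star { animation: twinkle 2s ease-in-out infinite; }"
)
animated_svg = embed_css(svg, css)
print(animated_svg)
```

Opening the resulting SVG in a browser should show the circle fading in and out, because the CSS rule targets the element by its `id`.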

In addition, Keyframer lets users directly edit the generated animation through multiple editor types.

Users can iteratively refine their designs through repeated prompting, and can request LLM-generated design variants that suggest new design directions. However, Keyframer has not yet been made publicly available.

In this research, Apple notes that the application of LLMs to animation has not been fully explored and brings new challenges, such as how users can effectively describe motion in natural language. While text-to-image tools such as DALL·E and Midjourney are already very capable, animation design requires more complex considerations, such as timing and coordination, that are difficult to fully specify in a single prompt.

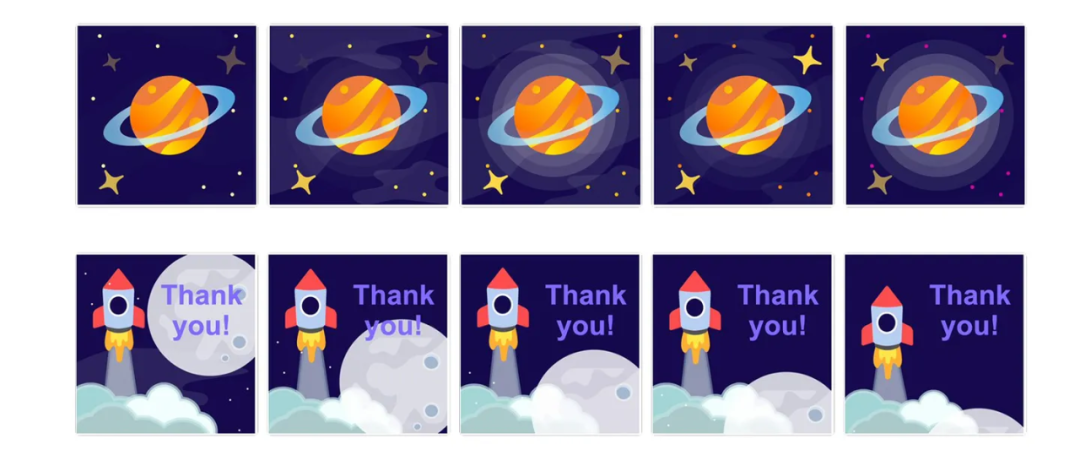

Users simply upload an image, enter something like "let the stars twinkle" into the prompt box, and click Generate to see the result.

Users can generate multiple animation designs in a batch and adjust properties such as color codes and animation duration in separate windows. No coding experience is required, because Keyframer automatically converts these changes to CSS, and the code itself remains fully editable. This description-based approach is much simpler than other forms of AI-generated animation, which often require several different applications and some coding experience.

Introduction to Keyframer

Keyframer is an LLM-powered application designed to create animations from static images. Keyframer leverages the code generation capabilities of LLMs and the semantic structure of static vector graphics (SVG) to generate animations based on natural language prompts provided by the user.

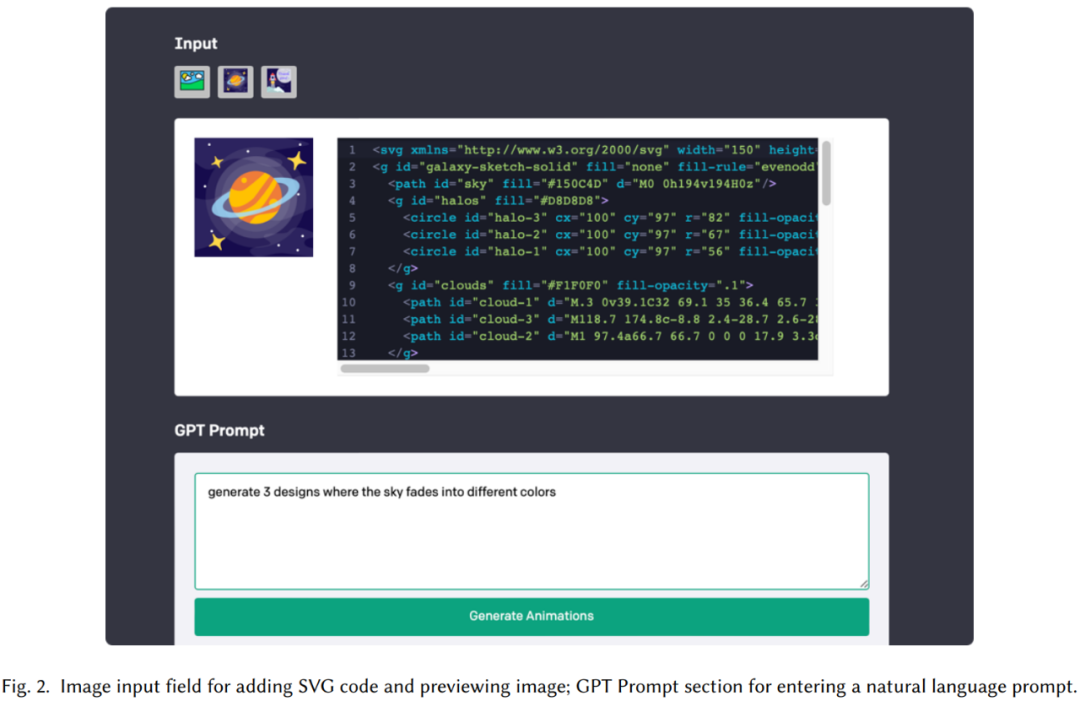

Input: The system provides an input area where users can paste the code of the SVG image they want to animate (SVG is a standard and popular image format, commonly used for illustrations because of its scalability and compatibility across platforms). In Keyframer, a rendering of the SVG is displayed next to the code editor so that the user can preview the visual design of the image. As shown in Figure 2, the SVG code for the Saturn illustration contains identifiers such as sky, rings, etc.
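The identifiers mentioned above are ordinary `id` attributes in the SVG markup, which is what gives the image a semantic structure that CSS selectors can target. A minimal sketch of listing them in Python follows; the `list_ids` helper and the simplified Saturn markup are hypothetical (only the `sky` and `rings` names come from the article, and `planet` is an assumed extra).

```python
import xml.etree.ElementTree as ET


def list_ids(svg_text: str) -> list[str]:
    """Return the id of every element that has one, in document order."""
    root = ET.fromstring(svg_text)
    return [el.get("id") for el in root.iter() if el.get("id")]


# Hypothetical, heavily simplified stand-in for the Saturn illustration.
saturn_svg = (
    '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 100 100">'
    '<rect id="sky" width="100" height="100"/>'
    '<ellipse id="rings" cx="50" cy="50" rx="40" ry="10"/>'
    '<circle id="planet" cx="50" cy="50" r="20"/>'
    "</svg>"
)
print(list_ids(saturn_svg))  # ['sky', 'rings', 'planet']
```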

GPT Prompts: The system lets users enter natural language prompts to create animations. Users can request a single design ("make the planet rotate") or multiple design variations ("create 3 designs of twinkling stars"), and then click the Generate Animation button to start the request. Before passing the user's request to GPT, the system augments the prompt with the complete raw SVG XML and specifies the format of the LLM response.
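The prompt assembly described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the `build_prompt` function and all of the instruction wording are hypothetical, since the article does not reproduce Keyframer's actual prompt text.

```python
def build_prompt(user_request: str, svg_xml: str) -> str:
    """Combine the user's request, the raw SVG XML, and a format instruction.

    All instruction wording here is assumed, not Keyframer's real prompt.
    """
    sections = [
        "Animate the SVG below using CSS only.",
        f"Request: {user_request.strip()}",
        "SVG:\n" + svg_xml.strip(),
        "Respond with CSS only. If several designs are requested, "
        "return one CSS snippet per design.",
    ]
    return "\n\n".join(sections)


# Hypothetical one-element SVG used as the image to animate.
example_svg = (
    '<svg xmlns="http://www.w3.org/2000/svg">'
    '<circle id="planet" r="5"/></svg>'
)
prompt = build_prompt("make the planet rotate", example_svg)
print(prompt)
```

Including the full SVG in the prompt is what lets the model refer to elements by their identifiers when it writes selectors.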

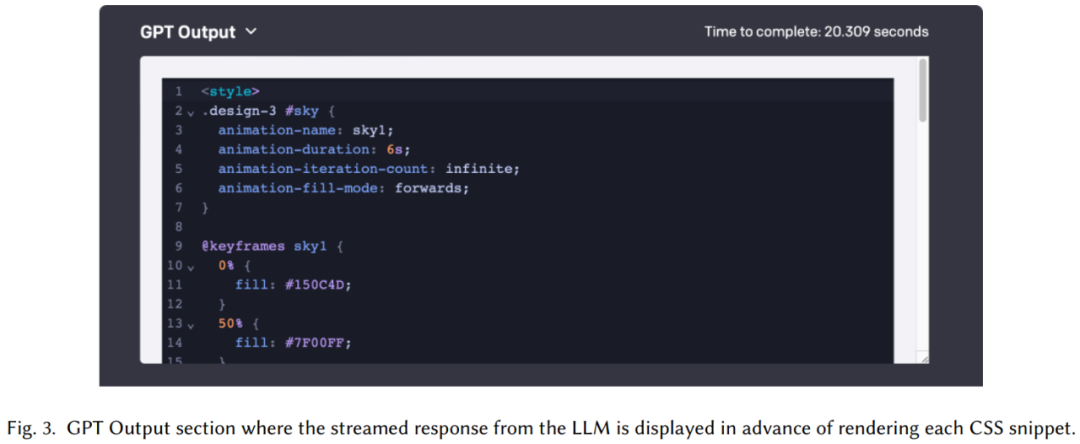

GPT Output: Once a prompt request is submitted, GPT streams its response, which consists of one or more CSS snippets, as shown in Figure 3.
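A response containing several CSS snippets has to be split into individual designs before each one can be rendered. The sketch below assumes, purely for illustration, that each snippet arrives in a fenced block labeled `css`; the article does not state the actual response format, and `extract_css_snippets` is a hypothetical helper.

```python
import re

FENCE = "`" * 3  # three backticks, built this way to keep the listing readable
# Assumed format: each design is wrapped in a fenced block labeled "css".
CSS_BLOCK = re.compile(FENCE + r"css[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_css_snippets(response_text: str) -> list[str]:
    """Return the body of every css-labeled fenced block, in order."""
    return [body.strip() for body in CSS_BLOCK.findall(response_text)]


# Hypothetical model response with two design variants.
response = (
    "Design 1: the stars fade in and out.\n"
    f"{FENCE}css\n#star {{ animation: twinkle 2s infinite; }}\n{FENCE}\n"
    "Design 2: the stars pulse in size.\n"
    f"{FENCE}css\n#star {{ animation: pulse 1s infinite; }}\n{FENCE}\n"
)
snippets = extract_css_snippets(response)
print(len(snippets))  # 2
```

With a streamed response, a function like this would be applied once the full text has arrived, or re-applied as each closing fence appears.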

Rendering: The rendering section includes (1) a visual rendering of each animation together with a one-sentence explanation generated by the LLM, and (2) a series of editors for modifying the design.

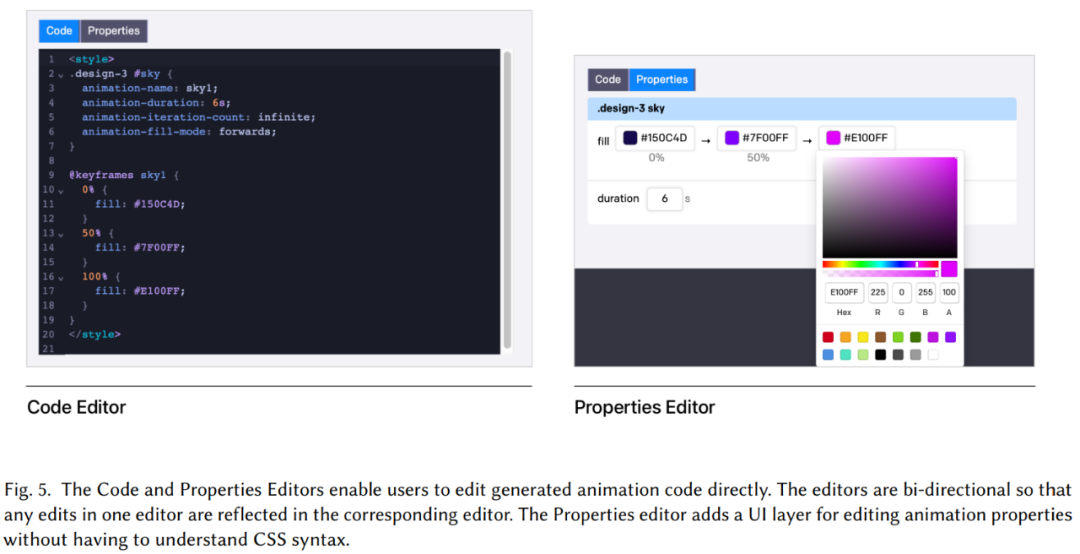

The code editor is implemented using CodeMirror. The property editor provides property-specific UI for editing code; for example, a color picker is provided for editing colors. Figure 5 shows the code editor and property editor icons.
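One way to picture what a property editor does is as a targeted rewrite of a single CSS declaration, which is then reflected in the code editor. The following is a minimal sketch under that assumption; `set_property` is a hypothetical helper and not how Keyframer is actually implemented.

```python
import re


def set_property(css: str, prop: str, value: str) -> str:
    """Replace the value of every `prop: ...` declaration in css.

    The lookbehind keeps "color" from matching inside "background-color".
    """
    pattern = re.compile(rf"(?<![\w-])({re.escape(prop)}\s*:\s*)[^;}}]+")
    return pattern.sub(lambda m: m.group(1) + value, css)


# Hypothetical generated rule, edited as a color picker or a
# duration field might edit it.
css = "#star { fill: #ffd700; animation-duration: 2s; }"
css = set_property(css, "fill", "#ffffff")
css = set_property(css, "animation-duration", "4s")
print(css)  # #star { fill: #ffffff; animation-duration: 4s; }
```

A regular expression is enough for a sketch like this, but a real editor would more likely work on a parsed representation of the stylesheet so that comments and nested rules are handled safely.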

Iteration: To support deeper exploration during the animation creation process (DG1), the system also lets users iteratively build on a generated animation using further prompts. Below each generated design there is an "Add New Prompt" button; clicking it opens a new form at the bottom of the page where the user can extend the design with a new prompt.

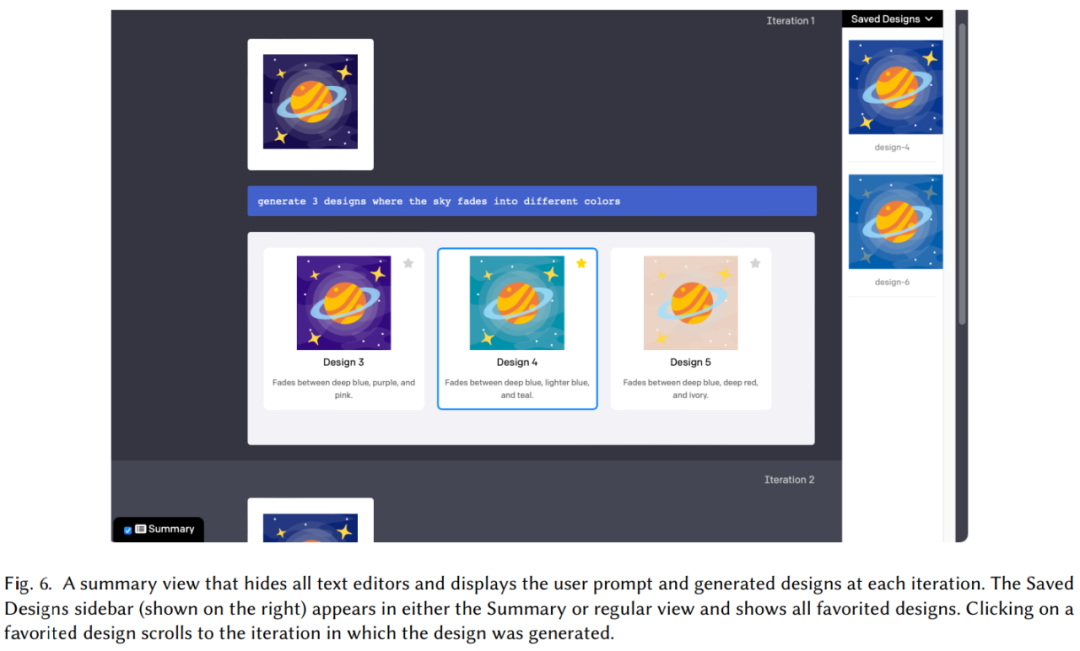

Saved designs sidebar and summary mode: The system allows users to star designs and add them to a sidebar, as shown on the right side of Figure 6. In addition, the system has a summary mode that hides all text editors and displays only the animations and their prompts, allowing users to quickly revisit previous prompts and designs.

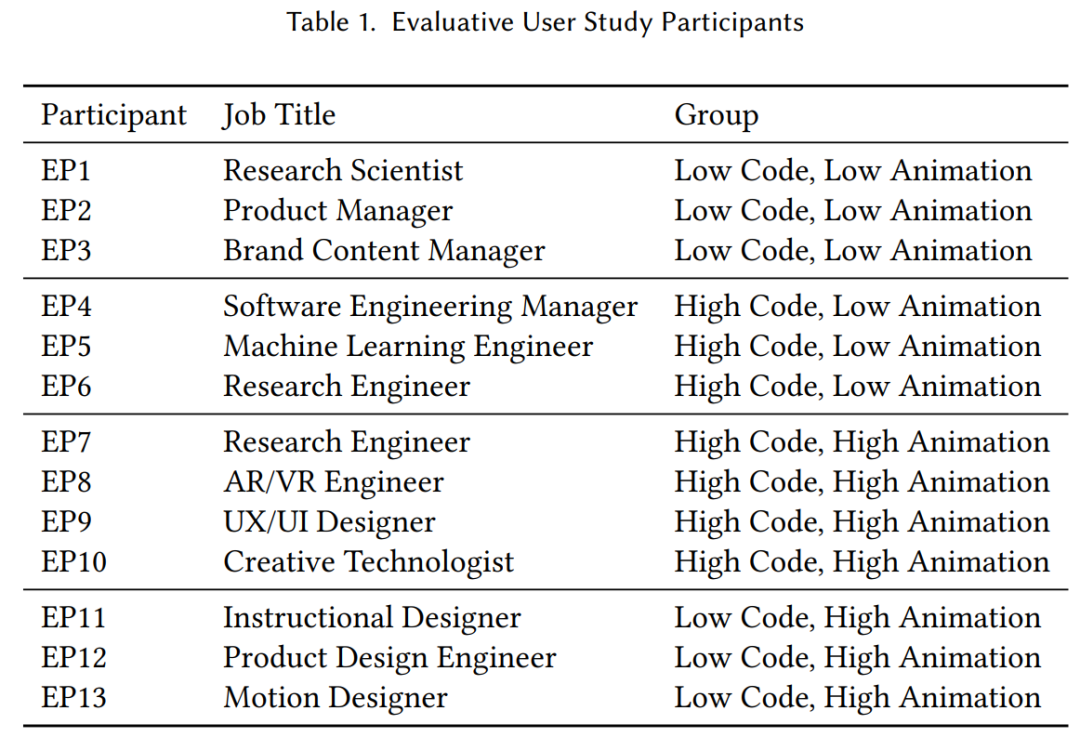

During the experiment, the Apple team selected 13 participants (6 women, 7 men) to try out Keyframer. Table 1 provides some information about the participants and the skills they mastered.

Even professional motion designer "EP13" sees Keyframer's potential to expand designers' capabilities: "I'm a little worried that these tools will replace our work because its potential is so great. But if you think about it carefully, this research will only improve our skills. It should be something to be happy about."

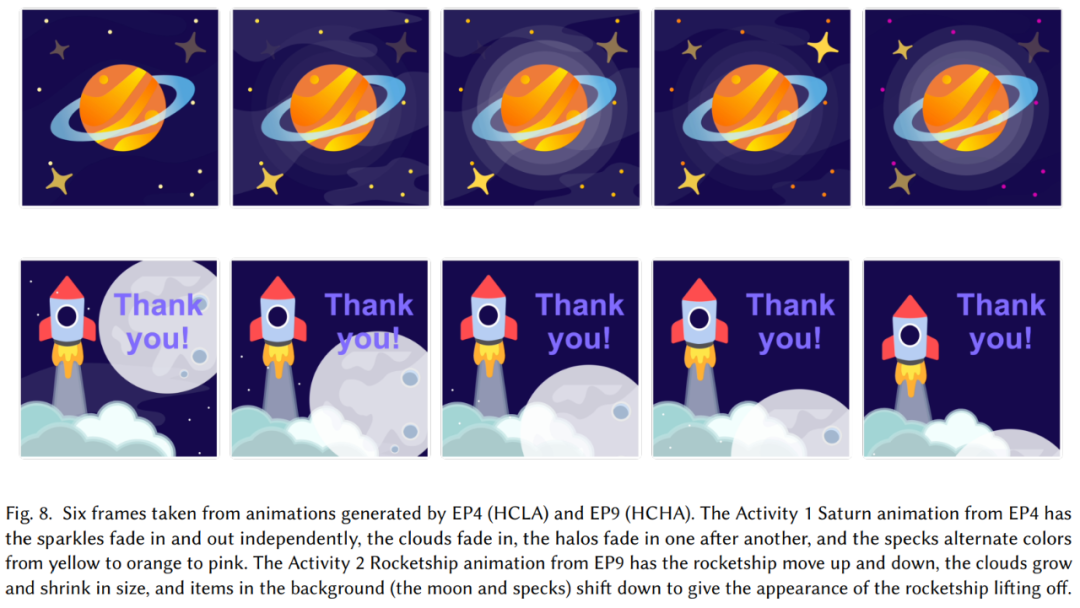

Overall, participants were satisfied with their Keyframer experience. They gave an average satisfaction score of 3.9, which falls between neutral (3) and satisfied (4). In total, participants generated 223 designs, an average of 17.2 designs per participant. Figure 8 shows examples of the final animations from two participants.

Please refer to the original paper for more technical details.
