Detecting AI-generated images using texture contrast detection

In this article we will introduce how to develop a deep learning model to detect images generated by artificial intelligence.

Many deep learning methods for detecting AI-generated images rely on how the image was generated or on the characteristics/semantics of the image. Such models can usually recognize only specific kinds of AI-generated objects, such as people, faces, or cars.

However, the method proposed in the paper "Rich and Poor Texture Contrast: A Simple yet Effective Approach for AI-generated Image Detection" overcomes these challenges and has broader applicability. We’ll dive into this research paper to illustrate how it solves the problems faced by other methods of detecting AI-generated images.

Generalization problem

When we use a model (such as ResNet-50) to recognize AI-generated images, the model learns from the semantics of the images. If we train a model to recognize AI-generated car images, using real car images and various AI-generated car images, it will only learn about cars from this data and will not be able to accurately identify other objects.

Training can be performed on data covering many kinds of objects, but this takes a long time and achieves an accuracy of only approximately 72% on unseen data. Accuracy can be improved by training for longer and using more data, but we cannot obtain unlimited training data.

In other words, current detection models have a serious generalization problem. To address it, the paper proposes the following method.

Smash&Reconstruction

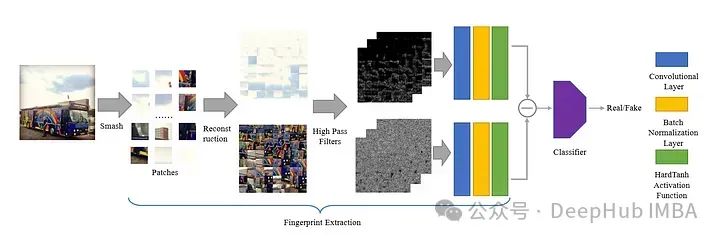

The paper introduces a way to prevent the model from learning AI-generated features from the shapes in an image during training. The authors call this method Smash&Reconstruction.

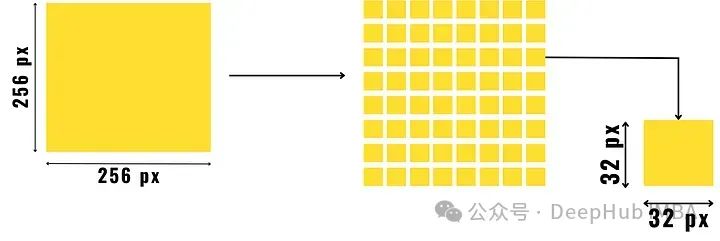

In this method, the image is divided into small patches of a predetermined size, which are then rearranged to form a new image. This is only a brief overview, as additional steps are required before the final input image for the detection model is formed.
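The "smash" step can be sketched in a few lines of NumPy. This is a minimal illustration only, assuming a 2-D grayscale image and a regular non-overlapping grid; the function name `smash` and the grid layout are our own choices here, not taken from the paper or its code.

```python
import numpy as np

def smash(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split a 2-D grayscale image into non-overlapping square patches.

    Returns an array of shape (num_patches, patch_size, patch_size).
    Rows and columns that do not fill a whole patch are cropped away.
    """
    h, w = image.shape
    rows, cols = h // patch_size, w // patch_size
    cropped = image[: rows * patch_size, : cols * patch_size]
    # Reshape to (rows, patch, cols, patch), then bring the two grid axes together.
    grid = cropped.reshape(rows, patch_size, cols, patch_size).swapaxes(1, 2)
    return grid.reshape(rows * cols, patch_size, patch_size)

image = np.arange(16).reshape(4, 4)
patches = smash(image, 2)  # four 2x2 patches, in row-major grid order
```

Patches come out in row-major order, so `patches[0]` is the top-left corner of the image and `patches[1]` is the patch to its right.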

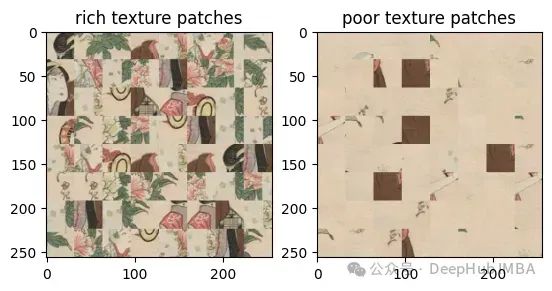

After dividing the image into small patches, we split the patches into two groups: one with rich texture and one with poor texture.

A detailed area in an image, such as an object or the boundary between two areas of contrasting color, becomes a rich-texture patch. Richly textured areas show large variation between pixels, compared with poorly textured areas that are primarily background, such as the sky or still water.

Calculate texture richness metrics

Start by dividing the image into small patches of a predetermined size, as shown in the image above. Then compute the pixel gradient of each patch (i.e. sum the absolute differences between neighbouring pixel values in the horizontal, vertical, diagonal and anti-diagonal directions) and use it to separate the patches into rich-texture and poor-texture groups.
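As a rough sketch of this measure, the function below sums absolute differences between neighbouring pixels of one patch. The name `texture_richness` is hypothetical, and including a vertical term follows the paper's formula as best I recall it, so treat the exact set of directions as an assumption.

```python
import numpy as np

def texture_richness(patch: np.ndarray) -> float:
    """Sum of absolute neighbour differences in four directions."""
    p = patch.astype(np.float64)  # avoid uint8 wrap-around when subtracting
    horizontal = np.abs(p[:, :-1] - p[:, 1:]).sum()
    vertical = np.abs(p[:-1, :] - p[1:, :]).sum()
    diagonal = np.abs(p[:-1, :-1] - p[1:, 1:]).sum()
    anti_diagonal = np.abs(p[1:, :-1] - p[:-1, 1:]).sum()
    return float(horizontal + vertical + diagonal + anti_diagonal)

flat = np.full((4, 4), 7, dtype=np.uint8)           # e.g. clear sky
busy = np.array([[0, 1], [2, 3]], dtype=np.uint8)   # every pixel differs
flat_score = texture_richness(flat)
busy_score = texture_richness(busy)
```

A perfectly flat patch scores zero, while any patch with edges or fine detail scores higher, which is what lets us rank patches from poor to rich texture.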

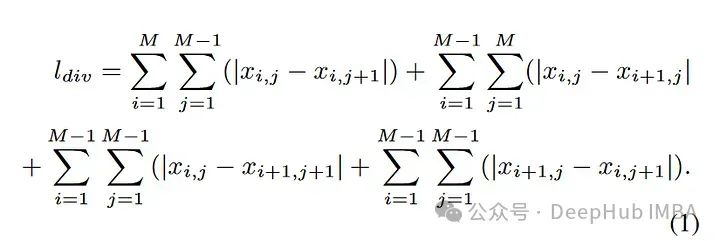

Compared with blocks with poor texture, texture-rich blocks have higher pixel gradient values. The formula for calculating the image gradient value is as follows:
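The formula can be reconstructed from the description of the gradient sum. This is a sketch under assumptions: an $M \times M$ patch with pixel values $x_{i,j}$, the symbol $l_{div}$ as our name for the richness value, and a vertical term included as in the paper's formula as best I recall it.

```latex
l_{div} = \sum_{i=1}^{M} \sum_{j=1}^{M-1} \left| x_{i,j} - x_{i,j+1} \right|
        + \sum_{i=1}^{M-1} \sum_{j=1}^{M} \left| x_{i,j} - x_{i+1,j} \right|
        + \sum_{i=1}^{M-1} \sum_{j=1}^{M-1} \left| x_{i,j} - x_{i+1,j+1} \right|
        + \sum_{i=1}^{M-1} \sum_{j=1}^{M-1} \left| x_{i+1,j} - x_{i,j+1} \right|
```

The four sums are the horizontal, vertical, diagonal and anti-diagonal differences, in that order.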

The patches are then separated by pixel gradient into the two groups, and each group is assembled into a composite image, giving two composite images. This complete process is what the paper calls "Smash&Reconstruction".

This allows the model to learn the details of the texture instead of the content representation of the object.
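The reconstruction step can be sketched as follows, assuming the patches have already been cut out and scored. The function name `reconstruct` and the `grid` parameter (how many patches per side go into each composite) are hypothetical choices for illustration; how many patches the paper keeps per composite is not stated here.

```python
import numpy as np

def reconstruct(patches: np.ndarray, scores: np.ndarray, grid: int):
    """Build rich- and poor-texture composites from scored patches.

    patches: (n, p, p) array; scores: (n,) richness values.
    Each composite is grid x grid patches; use grid * grid <= n // 2
    so that the two composites share no patch.
    """
    k = grid * grid
    order = np.argsort(scores)                 # ascending: poorest texture first
    poor_ids, rich_ids = order[:k], order[::-1][:k]

    def tile(ids: np.ndarray) -> np.ndarray:
        p = patches.shape[1]
        block = patches[ids].reshape(grid, grid, p, p).swapaxes(1, 2)
        return block.reshape(grid * p, grid * p)

    return tile(rich_ids), tile(poor_ids)

# Eight constant 2x2 patches whose value equals their index, with made-up scores.
patches = np.stack([np.full((2, 2), v) for v in range(8)])
scores = np.array([5, 1, 7, 3, 0, 6, 2, 4])
rich, poor = reconstruct(patches, scores, grid=2)
```

In the rich composite the highest-scoring patch lands in the top-left corner, and in the poor composite the lowest-scoring one does; neither composite keeps the original spatial layout, so object shapes are destroyed.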

Fingerprint

Most fingerprint-based methods are limited by the image generation technology: these models/algorithms can only detect images produced by a specific method or by similar methods (such as diffusion, GANs or other CNN-based image generation methods).

It is precisely to solve this problem that the paper divides the image patches into rich-texture and poor-texture groups. The authors then propose a new way of identifying the fingerprint in AI-generated images, which gives the paper its title: find the contrast between the rich-texture and poor-texture patches of the image after applying 30 high-pass filters.
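To make the idea concrete, here is a small sketch that filters a rich and a poor composite and takes the difference. It rests on several assumptions: two first-order difference filters stand in for the 30 high-pass filters, the "contrast" is taken to be a plain subtraction of the filtered outputs, and any learnable layers are left out. The function names are hypothetical.

```python
import numpy as np

def high_pass_residuals(image: np.ndarray) -> np.ndarray:
    """Stand-in high-pass bank: first-order differences in two directions."""
    img = image.astype(np.float64)
    dx = img[:-1, 1:] - img[:-1, :-1]   # horizontal difference
    dy = img[1:, :-1] - img[:-1, :-1]   # vertical difference
    return np.stack([dx, dy])           # shape (2, H-1, W-1)

def texture_contrast(rich: np.ndarray, poor: np.ndarray) -> np.ndarray:
    """Per-filter residual difference between the rich and poor composites."""
    return high_pass_residuals(rich) - high_pass_residuals(poor)

rich = (np.indices((4, 4)).sum(axis=0) % 2).astype(np.float64)  # checkerboard
poor = np.zeros((4, 4))                                         # flat background
contrast = texture_contrast(rich, poor)
```

The resulting array has one channel per filter and is the kind of input a classifier could be trained on, rather than the raw image content.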

How does the contrast between rich and poor texture patches help?

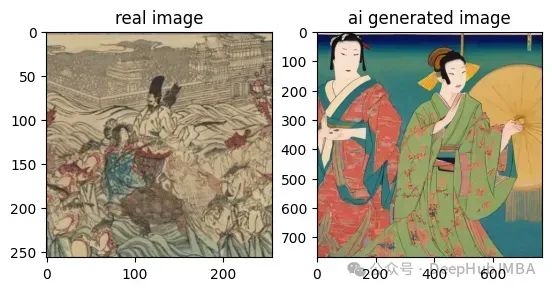

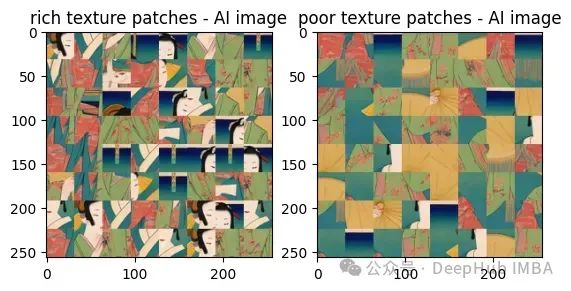

For a better understanding, we compare a real image and an AI-generated image side by side.

It is difficult to tell these two images apart with the naked eye, right?

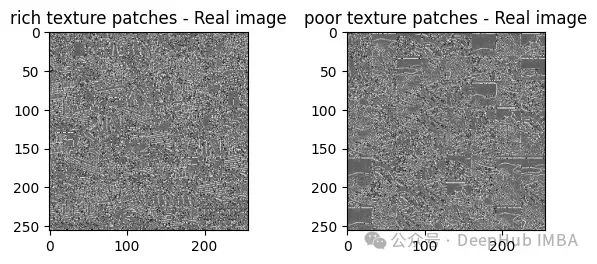

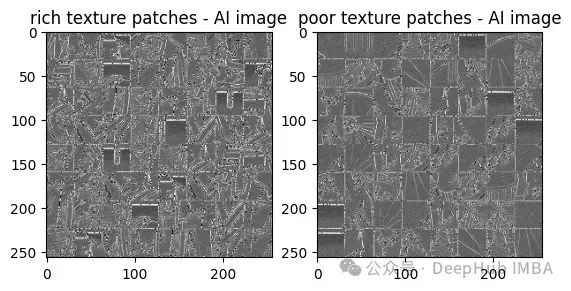

The paper first applies the Smash&Reconstruction process:

The contrast for each image after applying the 30 high-pass filters:

From these results we can see that, compared with the real image, the AI-generated image shows a much higher contrast between its rich and poor texture patches.

In this way, the difference becomes visible to the naked eye, so we can feed the contrast results into a trainable model and pass its output to a classifier. This is the aim of the paper. The model architecture is as follows:

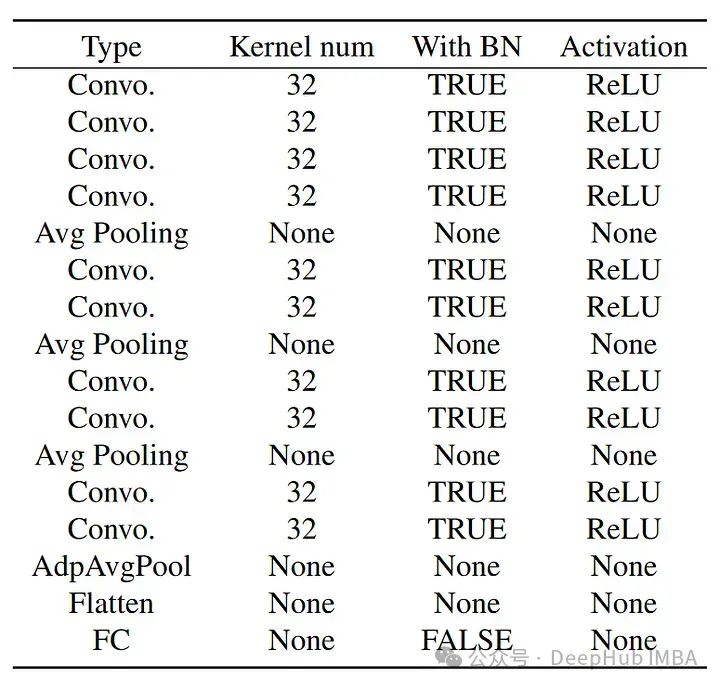

The structure of the classifier is as follows:

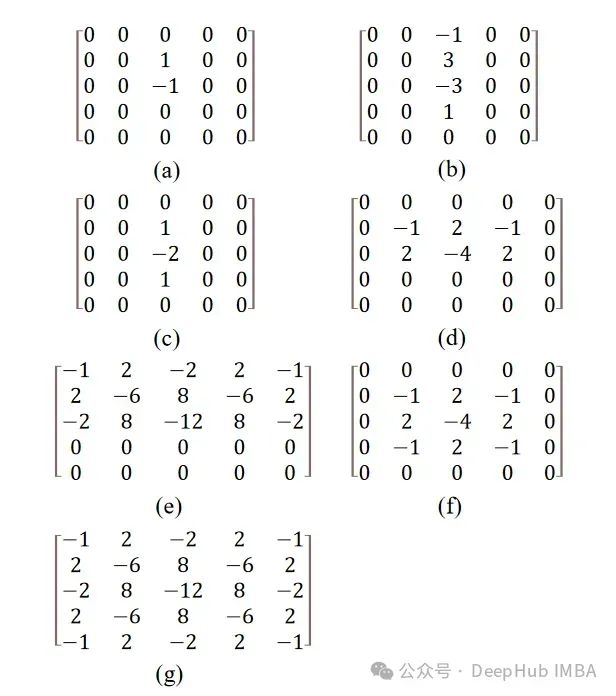

The paper mentions 30 high-pass filters, which were originally introduced for steganalysis.

Note: There are many ways to perform image steganography. Broadly speaking, any technique that hides information in a picture so that it is difficult to discover by ordinary means can be called image steganography. There is a lot of related research on steganalysis, and those who are interested can look up the relevant material.

Each filter here is a matrix of values applied to the image by convolution. The filters used are high-pass filters, which let only the high-frequency features of the image pass through. High-frequency features typically include edges, fine details, and rapid changes in intensity or color.
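The effect of a high-pass filter is easy to see on a toy image. The sketch below writes the convolution out by hand in NumPy so the operation is visible, and uses the discrete Laplacian as an example kernel. That kernel is a generic high-pass filter chosen for illustration and is not claimed to be one of the 30 filters from the paper; `convolve_valid` is a hypothetical helper name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# A common second-order high-pass kernel (discrete Laplacian). Its entries sum
# to zero, so perfectly flat regions produce a zero response.
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=np.float64)

def convolve_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2-D convolution without padding (only positions fully inside the image)."""
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    windows = sliding_window_view(image.astype(np.float64), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, flipped)

flat = np.full((5, 5), 9.0)      # no detail at all
edge = np.zeros((5, 6))
edge[:, 3:] = 10.0               # vertical edge between columns 2 and 3

flat_response = convolve_valid(flat, LAPLACIAN)
edge_response = convolve_valid(edge, LAPLACIAN)
```

The flat image is suppressed completely, while the edge image responds only in the two columns next to the edge. This is why the filtered output carries texture information rather than content.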

All filters except (f) and (g) are rotated by some angle and applied to the image again, giving a total of 30 filters. The rotation of these matrices is done with affine transformations, using SciPy.
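A minimal sketch of rotating a filter matrix with `scipy.ndimage.affine_transform` is shown below. It is restricted to 90-degree steps so that nearest-neighbour sampling is exact; the function name `rotate_kernel_90` and the example kernel are our own, and this is not the code from the linked repository.

```python
import numpy as np
from scipy.ndimage import affine_transform

def rotate_kernel_90(kernel: np.ndarray, quarter_turns: int = 1) -> np.ndarray:
    """Rotate a square kernel about its centre in 90-degree steps."""
    angle = np.pi / 2 * quarter_turns
    # Rounding keeps the matrix entries exactly 0 or +/-1 for 90-degree steps.
    rotation = np.round([[np.cos(angle), -np.sin(angle)],
                         [np.sin(angle), np.cos(angle)]])
    centre = (np.array(kernel.shape) - 1) / 2
    # affine_transform maps each output index o to input index rotation @ o + offset.
    offset = centre - rotation @ centre
    # order=0 picks the nearest input value, so no interpolation blurs the kernel.
    return affine_transform(kernel, rotation, offset=offset, order=0)

# A first-order difference kernel pointing right (illustrative only).
kernel = np.array([[0.0, 0.0, 0.0],
                   [0.0, -1.0, 1.0],
                   [0.0, 0.0, 0.0]])
rotated = [rotate_kernel_90(kernel, k) for k in range(4)]
```

Each quarter turn yields a kernel that responds to differences in a new direction, so one base filter produces several directional variants. Rotations by other angles would need interpolation or a hand-defined kernel and are not covered by this sketch.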

Summary

The reported results reach a validation accuracy of 92%, and it is said that more training would give even better results. This is very interesting research. I also found the training code; if you are interested, you can study it in depth:

Paper: https://arxiv.org/abs/2311.12397

Code: https://github.com/hridayK/Detection-of-AI-generated-images
