Is the self-vehicle state everything you need for open-loop end-to-end autonomous driving?

Original title: Is Ego Status All You Need for Open-Loop End-to-End Autonomous Driving?

Paper link: https://arxiv.org/abs/2312.03031

Code link: https://github.com/NVlabs/BEV-Planner

Author affiliations: Nanjing University, NVIDIA

Paper Idea:

End-to-end autonomous driving has recently emerged as a promising research direction that pursues automation from a full-stack perspective. Along this line, much recent work follows an open-loop evaluation setting to study planning behavior on nuScenes. This paper examines that setting in depth through a thorough analysis. It first observes that the nuScenes dataset, which features relatively simple driving scenes, leads to underutilization of perception information in end-to-end models that incorporate ego status, such as the ego vehicle's speed. These models tend to rely primarily on ego status for future path planning. Beyond the limitations of the dataset, the paper notes that current metrics do not comprehensively assess planning quality, so conclusions drawn from existing benchmarks may be biased. To address this, the paper introduces a new metric that evaluates whether the predicted trajectory follows the road. It further proposes a simple baseline that achieves competitive results without relying on perception annotations. Given the limitations of existing benchmarks and metrics, the authors recommend that the community reassess the relevant prevailing research and consider carefully whether the continued pursuit of state-of-the-art results yields convincing, general conclusions.

Main contributions:

Open-loop autonomous driving models evaluated on nuScenes are significantly affected by ego status (speed, acceleration, yaw angle), which plays a key role in planning performance. When ego status is used, the model's trajectory prediction is dominated by it, which may reduce the use of sensory information.

Existing planning metrics may not fully capture a model's actual performance, and evaluation results can differ significantly across metrics. The paper therefore recommends more diverse and comprehensive metrics, so that a model cannot perform well on some metrics while other potential risks go unnoticed.

Compared with pushing state-of-the-art performance on the existing nuScenes dataset, developing more suitable datasets and metrics is a more critical and urgent challenge.

Paper design:

The goal of end-to-end autonomous driving is to consider perception and planning jointly and implement them in a full-stack manner [1, 5, 32, 35]. The underlying motivation is to treat the perception of autonomous vehicles (AVs) as a means to an end (planning), rather than overfitting to some perception metric.

Unlike perception, planning is usually more open-ended and difficult to quantify [6, 7]. Ideally, the open-ended nature of planning calls for a closed-loop evaluation setup in which other agents can react to the ego vehicle's behavior and the raw sensor data changes accordingly. However, agent behavior modeling and real-world data simulation [8, 19] in closed-loop simulators remain open problems. Closed-loop evaluation therefore inevitably introduces a considerable domain gap from the real world.

Open-loop evaluation, on the other hand, treats human driving as the ground truth and formulates planning as imitation learning [13]. This formulation allows direct use of real-world datasets through simple log replay, avoiding the domain gap of simulation. It also offers other advantages, such as the ability to train and validate models in complex and diverse traffic scenarios that are often difficult to generate with high fidelity in simulation [5]. Because of these benefits, an established line of research focuses on open-loop end-to-end autonomous driving using real-world datasets [2, 12, 13, 16, 43].

Currently popular end-to-end autonomous driving methods [12, 13, 16, 43] usually use nuScenes [2] for open-loop evaluation of their planning behavior. For example, UniAD [13] studied the impact of different perception task modules on the final planning behavior. However, AD-MLP [45] recently pointed out that a simple MLP network can also achieve state-of-the-art planning results using only ego status information. This motivates the paper to raise an important question:

Does open-loop end-to-end autonomous driving only require ego status information?

The paper's answer is both yes and no, taking into account the pros and cons of using ego status information in current benchmarks:

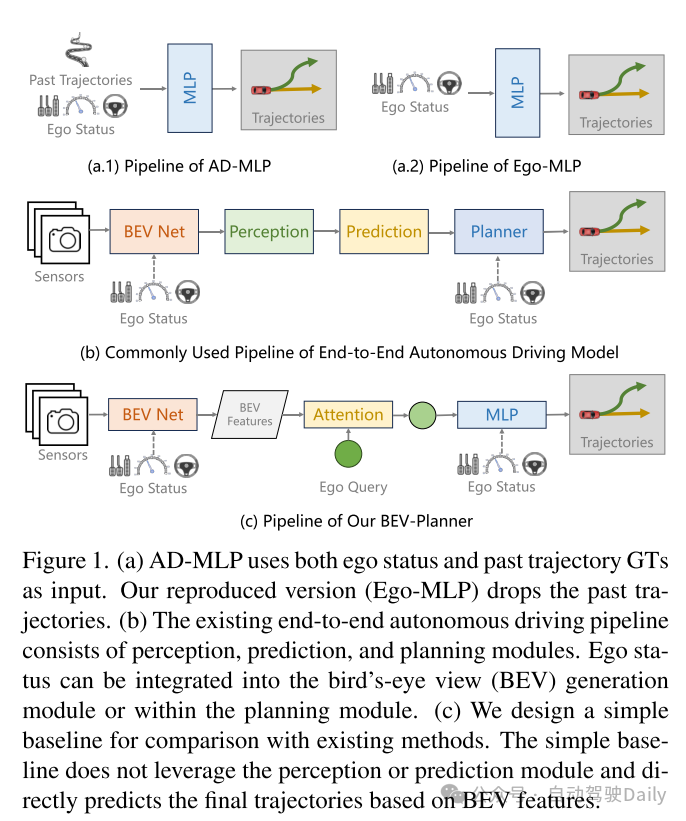

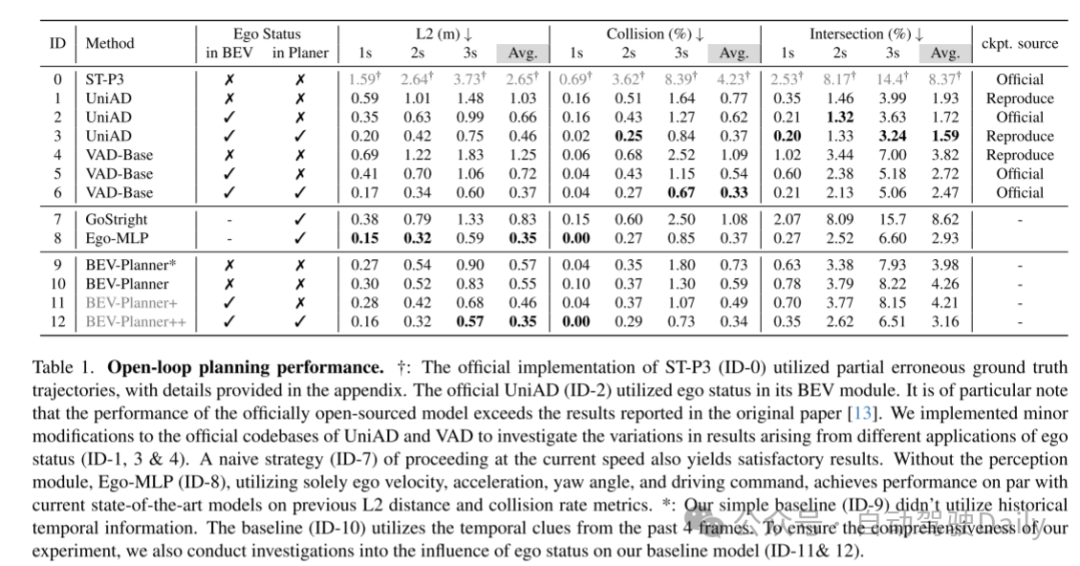

Yes. The information in ego status, such as speed, acceleration, and yaw angle, should clearly benefit the planning task. To verify this, the paper fixes an open issue in AD-MLP by removing the use of historical trajectory ground truths (GTs) to prevent potential label leakage. The resulting reproduction, Ego-MLP (Figure 1 a.2), relies only on ego status and is comparable to state-of-the-art methods on the existing L2 distance and collision rate metrics. Another observation is that only existing methods [13, 16, 43] that incorporate ego status into the planning module achieve results comparable to Ego-MLP. Although these methods use additional perception information (tracking, HD maps, etc.), they have not been shown to outperform Ego-MLP. These observations confirm the dominant role of ego status in open-loop evaluation of end-to-end autonomous driving.

No. As a safety-critical application, autonomous driving should clearly not rely solely on ego status when making decisions. So why can state-of-the-art planning results be achieved using ego status alone? To answer this question, the paper presents a comprehensive set of analyses covering existing open-loop end-to-end autonomous driving approaches. It identifies major shortcomings in existing research related to datasets, evaluation metrics, and specific model implementations, which are enumerated and detailed in the remainder of this section:

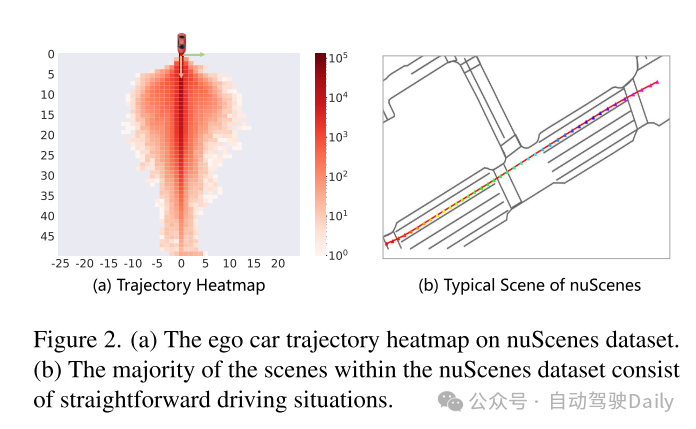

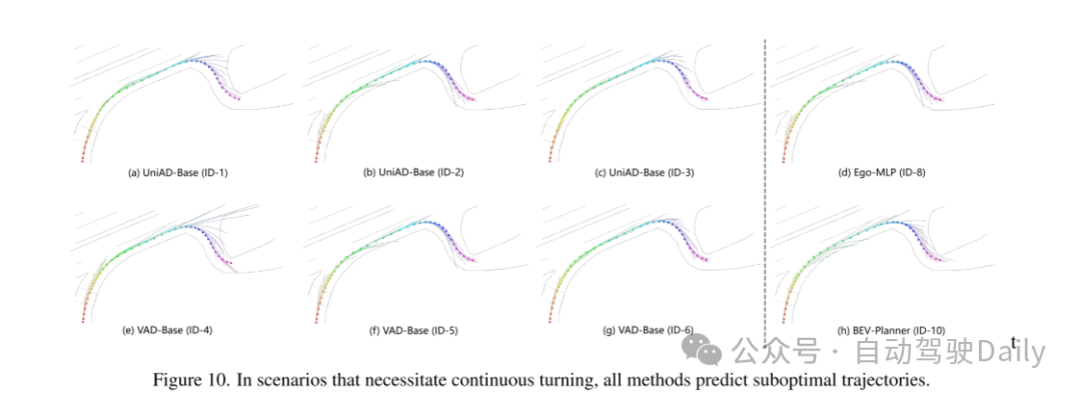

Dataset imbalance. nuScenes is a commonly used benchmark for open-loop evaluation [11–13, 16, 17, 43]. However, the paper's analysis shows that 73.9% of the nuScenes data consists of straight-driving scenarios, as reflected by the trajectory distribution shown in Figure 2. In these scenarios, maintaining the current speed, direction, or steering rate is sufficient most of the time. Ego status can therefore easily be exploited as a shortcut to fit the planning task, which explains the strong performance of Ego-MLP on nuScenes.
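To make the shortcut concrete, the following minimal sketch extrapolates future waypoints from the current speed and yaw rate alone, with no perception input. It is an illustration only, not the paper's Ego-MLP: the function name, the 0.5 s step, the 6-waypoint horizon, and the ego-frame convention (x forward, y left) are all assumptions made here.

```python
import numpy as np

def extrapolate_from_ego_status(speed, yaw_rate, num_steps=6, dt=0.5):
    """Roll out a constant-speed, constant-yaw-rate motion model.

    Hypothetical helper for illustration. Returns an array of shape
    (num_steps, 2) holding (x, y) waypoints in the ego frame, where
    x points forward and y points left.
    """
    x, y, yaw = 0.0, 0.0, 0.0
    waypoints = []
    for _ in range(num_steps):
        yaw += yaw_rate * dt               # heading changes at a fixed rate
        x += speed * np.cos(yaw) * dt      # advance along the current heading
        y += speed * np.sin(yaw) * dt
        waypoints.append((x, y))
    return np.array(waypoints)

# Straight driving at 5 m/s: the rollout simply continues forward.
straight = extrapolate_from_ego_status(speed=5.0, yaw_rate=0.0)
# Gentle left turn at the same speed.
left_turn = extrapolate_from_ego_status(speed=5.0, yaw_rate=0.1)
```

When most samples are straight driving, a rollout like `straight` already lies close to the human trajectory, which is why a planner can score well on such data while ignoring its cameras.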

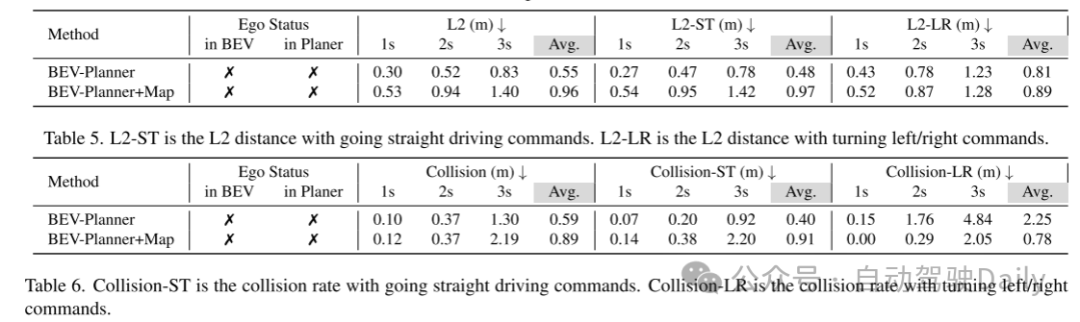

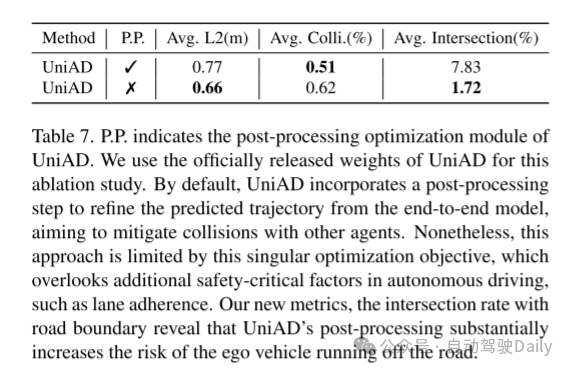

The existing evaluation metrics are not comprehensive. The remaining 26.1% of the nuScenes data involves more challenging driving scenarios and may be a better benchmark for planning behavior. However, the paper argues that the widely used evaluation metrics, such as the L2 distance between the predicted and ground-truth trajectories and the collision rate between the ego vehicle and surrounding obstacles, cannot accurately measure the quality of a model's planning behavior. By visualizing numerous predicted trajectories generated by various methods, the paper finds that some high-risk trajectories, such as those that drive off the road, are not severely penalized by existing metrics. To address this, the paper introduces a new evaluation metric that computes the intersection rate between the predicted trajectory and the road boundary. The benchmark results shift substantially under this metric: Ego-MLP tends to predict trajectories that leave the road more often than UniAD's.
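The gap between L2 distance and a road-boundary check can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's metric implementation: the trajectory and the boundary are both treated as 2D polylines, the trajectory includes the starting position, only strict crossings are counted (touching or collinear segments are ignored), and all function names are hypothetical.

```python
import numpy as np

def l2_error(pred, gt):
    """Mean Euclidean distance between predicted and ground-truth waypoints."""
    pred, gt = np.asarray(pred, float), np.asarray(gt, float)
    return float(np.linalg.norm(pred - gt, axis=1).mean())

def _orient(a, b, c):
    """Sign of the 2D cross product (b - a) x (c - a)."""
    return np.sign((b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]))

def segments_cross(p1, p2, q1, q2):
    """True if segment p1-p2 strictly crosses segment q1-q2."""
    return bool(_orient(p1, p2, q1) * _orient(p1, p2, q2) < 0
                and _orient(q1, q2, p1) * _orient(q1, q2, p2) < 0)

def crosses_boundary(traj, boundary):
    """True if any trajectory segment crosses any boundary segment."""
    traj, boundary = np.asarray(traj, float), np.asarray(boundary, float)
    return any(
        segments_cross(traj[i], traj[i + 1], boundary[j], boundary[j + 1])
        for i in range(len(traj) - 1)
        for j in range(len(boundary) - 1)
    )

# Toy scene: the human drives straight; a road boundary runs 1 m to the left.
gt = [(0, 0), (2, 0), (4, 0), (6, 0)]
boundary = [(0, 1), (10, 1)]
pred_right = [(0, 0), (2, -0.4), (4, -0.8), (6, -1.2)]  # drifts right
pred_left = [(0, 0), (2, 0.4), (4, 0.8), (6, 1.2)]      # drifts left, over the boundary

same_l2 = np.isclose(l2_error(pred_right, gt), l2_error(pred_left, gt))
right_off_road = crosses_boundary(pred_right, boundary)
left_off_road = crosses_boundary(pred_left, boundary)
```

In this toy scene the two predictions have the same L2 error, yet only `pred_left` crosses the boundary. Averaging such a flag over all evaluated samples would give an intersection rate in the spirit of the metric described above.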

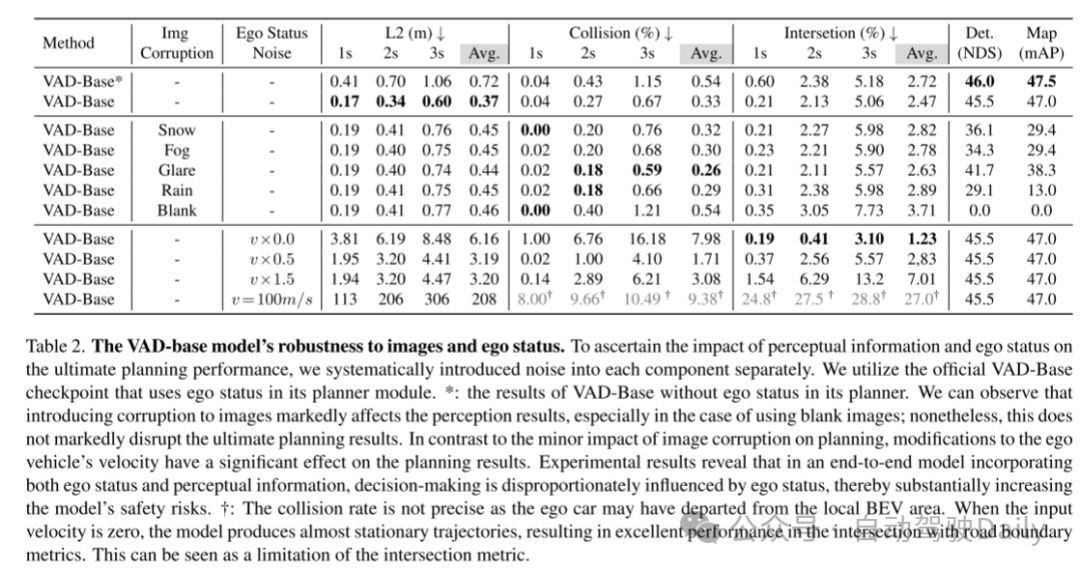

Ego status bias contradicts driving logic. Because ego status can lead to overfitting, the paper further observes an interesting phenomenon: in some cases, completely removing visual input from existing end-to-end autonomous driving frameworks does not significantly degrade planning quality. This contradicts basic driving logic, since perception is expected to provide useful information for planning. For example, blanking all camera inputs in VAD [16] causes the perception module to fail completely, yet planning degrades only minimally as long as ego status is available. In contrast, changing the input ego velocity significantly affects the final predicted trajectory.

In short, the paper conjectures that recent progress in end-to-end autonomous driving and its state-of-the-art results on nuScenes are likely due to over-reliance on ego status, coupled with the dominance of simple driving scenarios. Furthermore, current evaluation metrics are insufficient for comprehensively assessing the quality of predicted trajectories. These open problems may lead researchers to underestimate the complexity of the planning task and create the misleading impression that ego status is all you need for open-loop end-to-end autonomous driving.

The potential interference of ego status in current open-loop end-to-end autonomous driving research raises another question: can this impact be offset by removing ego status from the entire model? It is worth noting, however, that even when the influence of ego status is excluded, the reliability of open-loop autonomous driving research based on the nuScenes dataset remains questionable.

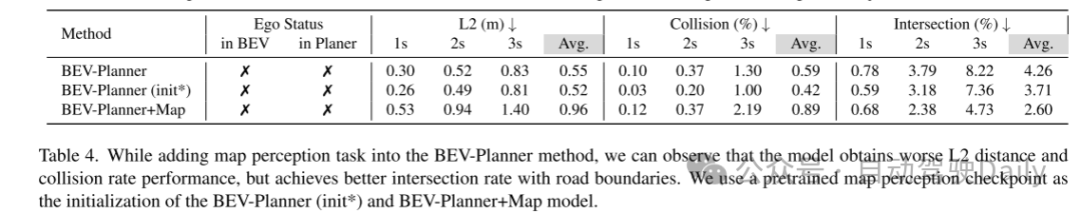

Figure 1. (a) AD-MLP uses both ego status and the ground truth of past trajectories as input. The version reproduced in this paper (Ego-MLP) removes the past trajectory. (b) The existing end-to-end autonomous driving pipeline includes perception, prediction, and planning modules. Ego status can be integrated into the bird's-eye-view (BEV) generation module or the planning module. (c) This paper designs a simple baseline for comparison with existing methods. The baseline uses no perception or prediction modules and predicts the final trajectory directly from BEV features.

Figure 2. (a) Heat map of vehicle trajectories in the nuScenes dataset. (b) Most scenes in the nuScenes dataset consist of straight driving.

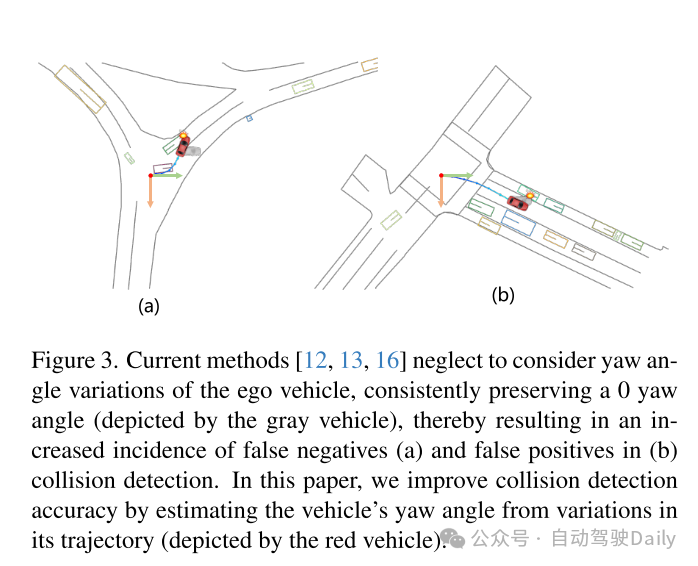

Figure 3. Current methods [12, 13, 16] neglect the yaw angle change of the ego vehicle and always assume a yaw angle of 0 (represented by the gray vehicle), which increases false negative (a) and false positive (b) collision detections. This paper estimates the vehicle's yaw angle (represented by the red vehicle) from changes in the vehicle's trajectory to improve the accuracy of collision detection.
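One simple way to estimate yaw from trajectory changes, as the caption for Figure 3 describes, is to take the direction of travel between consecutive waypoints. The sketch below is an assumption about how this could be done and is not taken from the paper's code: the function name, the `min_step` threshold, and the convention that a yaw of 0 means facing the +x direction are all hypothetical.

```python
import numpy as np

def estimate_yaw(traj, min_step=1e-2):
    """Estimate a heading angle in radians for each waypoint of a trajectory.

    traj: array of shape (T, 2) with future (x, y) waypoints in the ego
    frame; the starting position (0, 0) is not included.
    The heading at step t is the direction of travel from the previous
    point. If the vehicle moved less than min_step metres, the previous
    heading is reused, because the direction of a near-zero displacement
    is dominated by noise.
    """
    traj = np.asarray(traj, float)
    points = np.vstack([[0.0, 0.0], traj])   # prepend the starting position
    deltas = np.diff(points, axis=0)         # per-step displacement
    yaws = np.zeros(len(traj))
    prev = 0.0                               # initial heading: facing +x
    for t, (dx, dy) in enumerate(deltas):
        if np.hypot(dx, dy) >= min_step:
            prev = np.arctan2(dy, dx)
        yaws[t] = prev
    return yaws

# Two steps straight ahead, one diagonal step to the left, then a stop.
yaws = estimate_yaw([(1, 0), (2, 0), (3, 1), (3, 1)])
```

A collision check can then rotate the ego bounding box by the estimated yaw at each step instead of keeping it axis-aligned, which is the difference between the gray and red vehicles in Figure 3.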

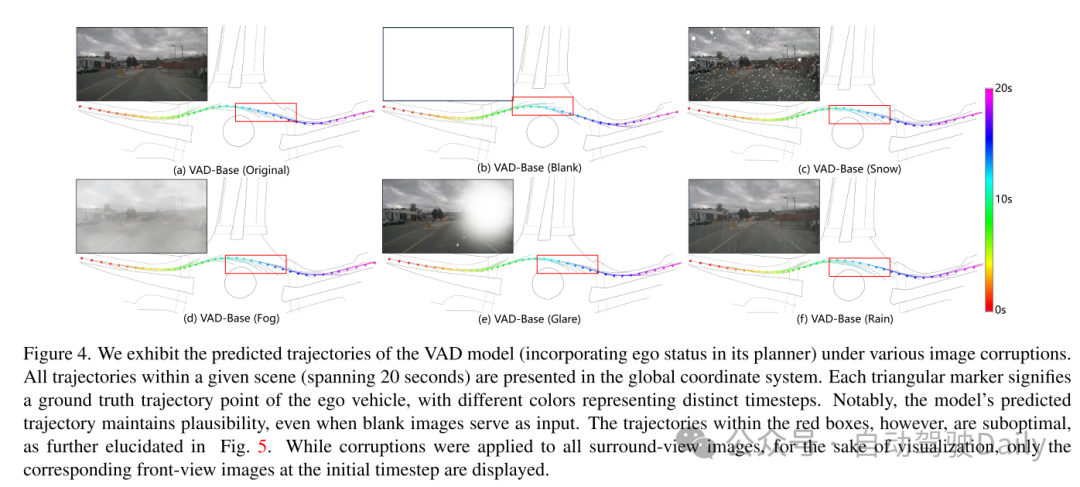

Figure 4. Predicted trajectories of a VAD model (which incorporates ego status in its planner) under various image corruption scenarios. All trajectories in a given scene (spanning 20 seconds) are presented in a global coordinate system. Each triangle marks a ground-truth trajectory point of the ego vehicle, and different colors represent different time steps. Notably, even when the input is a blank image, the model's predicted trajectory remains reasonable. However, the trajectories within the red box are suboptimal, as further elaborated in Figure 5. Although all surround-view images are corrupted, only the front-view image corresponding to the initial time step is shown for ease of visualization.

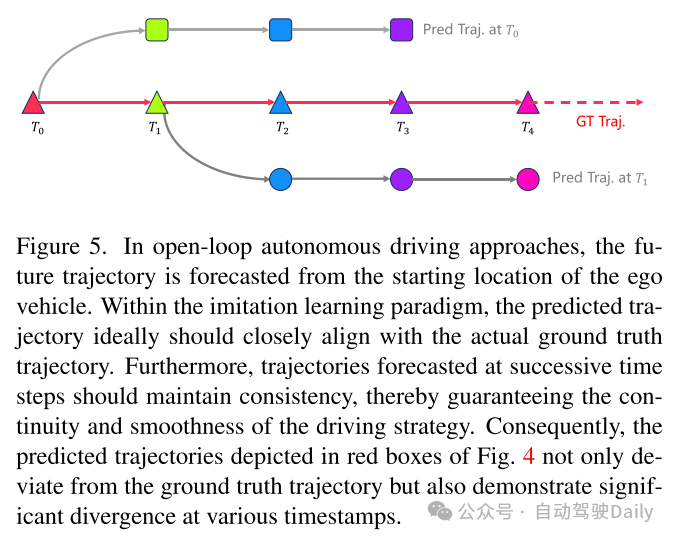

Figure 5. In open-loop autonomous driving methods, future trajectories are predicted from the starting position of the ego vehicle. Within the imitation learning paradigm, the predicted trajectories should ideally align closely with the ground-truth trajectories. In addition, the trajectories predicted at consecutive time steps should remain consistent to ensure the continuity and smoothness of the driving strategy. The predicted trajectories shown in the red box in Figure 4 not only deviate from the ground-truth trajectory but also diverge significantly across timestamps.

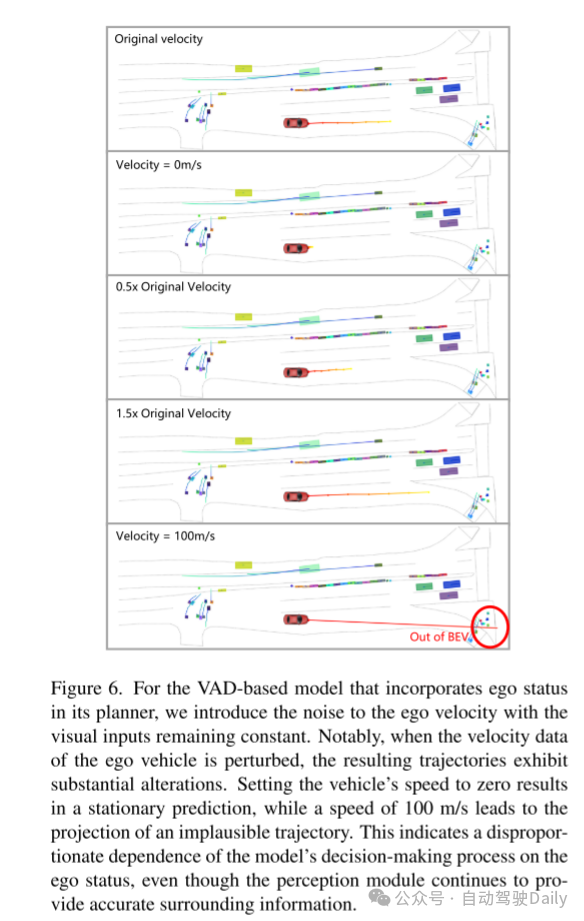

Figure 6. For a VAD-based model that incorporates ego status in its planner, this paper adds noise to the ego speed while keeping the visual input constant. When the ego vehicle's speed is perturbed, the resulting trajectory changes significantly. Setting the speed to zero leads the model to predict that the vehicle stays stationary, while a speed of 100 m/s leads to unrealistic trajectories. This indicates that although the perception module continues to provide accurate information about the surroundings, the model's decision-making relies too heavily on ego status.

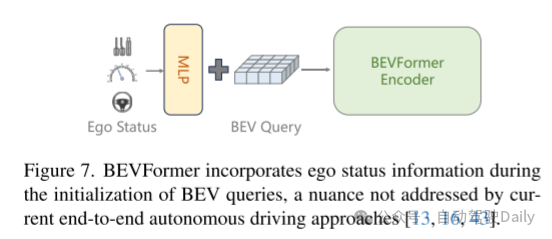

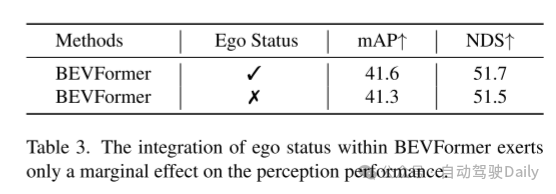

Figure 7. BEVFormer combines ego status information in the initialization process of BEV query, which is a detail not covered by current end-to-end autonomous driving methods [13, 16, 43].

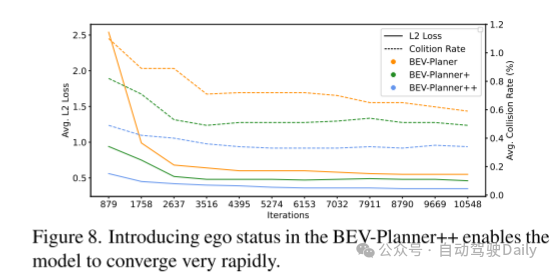

Figure 8. The introduction of ego status information into BEV-Planner enables the model to converge very quickly.

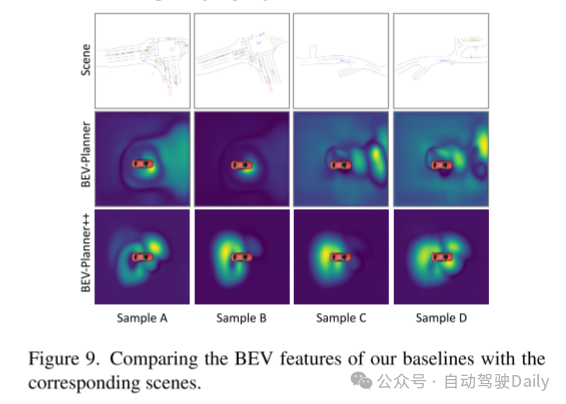

Figure 9. Comparison of the BEV features of this paper's baseline with the corresponding scenes.

Experimental results:

Summary of the paper:

This paper provides an in-depth analysis of the inherent shortcomings of current open-loop end-to-end autonomous driving methods. Its goal is to contribute research findings and promote the progressive development of end-to-end autonomous driving.

Citation:

Li Z, Yu Z, Lan S, et al. Is Ego Status All You Need for Open-Loop End-to-End Autonomous Driving? [J]. arXiv preprint arXiv:2312.03031, 2023.
