Comprehensively surpassing ViT: Meituan, Zhejiang University, and others propose VisionLLaMA, a unified architecture for vision tasks

For more than half a year, Meta's open-source LLaMA architecture has been put to the test in LLMs with great success: training is stable and the architecture scales easily.

Following the research ideas behind ViT, can the innovative LLaMA architecture truly unify the architectures used for language and images?

On this question, a recent study, VisionLLaMA, has made progress. VisionLLaMA improves significantly on the original ViT-style methods in many mainstream tasks, including image generation (covering DiT, the underlying architecture that Sora relies on) and image understanding (classification, segmentation, detection, and self-supervised learning).

- Paper title: VisionLLaMA: A Unified LLaMA Interface for Vision Tasks

- Paper address: https://arxiv.org/abs/2403.00522

- Code address: https://github.com/Meituan-AutoML/VisionLLaMA

Research background

Large language models are a hot topic in current academic research, and LLaMA is one of the most influential and representative works among them. Many of the latest research works are built on this architecture, and most solutions for various applications are built on this series of open-source models. In multimodal models, many methods rely on LLaMA for text processing and on vision transformers such as CLIP for visual perception. At the same time, many efforts are devoted to speeding up LLaMA inference and reducing its storage cost. All in all, LLaMA is now the de facto most versatile and important large language model architecture.

The success of the LLaMA architecture led the authors to a simple and interesting question: can this architecture be equally successful in the visual modality? If the answer is yes, then both vision and language models can use the same unified architecture and benefit from the various dynamic deployment techniques designed for LLaMA. However, this is a complex issue because there are some obvious differences between the two modalities.

Text sequences and vision tasks process data in significantly different ways. On the one hand, text sequences are one-dimensional data, while vision tasks require processing more complex two-dimensional or multi-dimensional data. On the other hand, vision tasks usually need a pyramid-structured backbone network to improve performance, while the LLaMA encoder has a relatively simple, plain structure. Additionally, efficiently processing image and video inputs of different resolutions is a challenge. These differences need to be fully considered in research that crosses the textual and visual domains.

The purpose of this paper is to address these challenges and bridge the architectural gap between modalities by proposing a LLaMA architecture adapted to vision tasks. With this architecture, issues related to modality differences can be addressed and visual and linguistic data can be processed in a uniform way, leading to better results.

The main contributions of this article are as follows:

1. This article proposes VisionLLaMA, a vision transformer architecture similar to LLaMA, to reduce the architectural differences between language and vision.

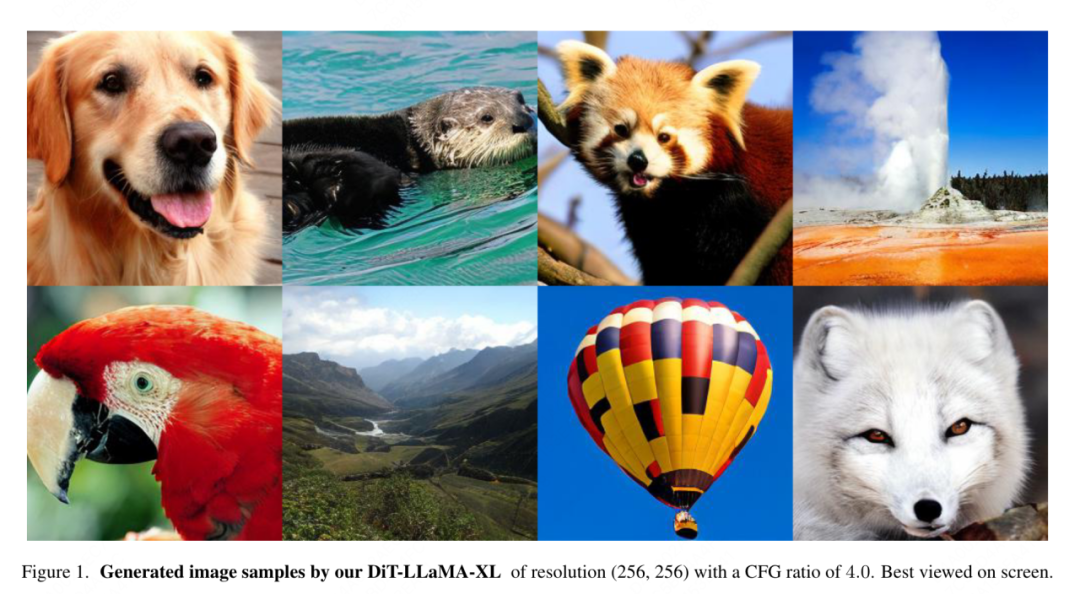

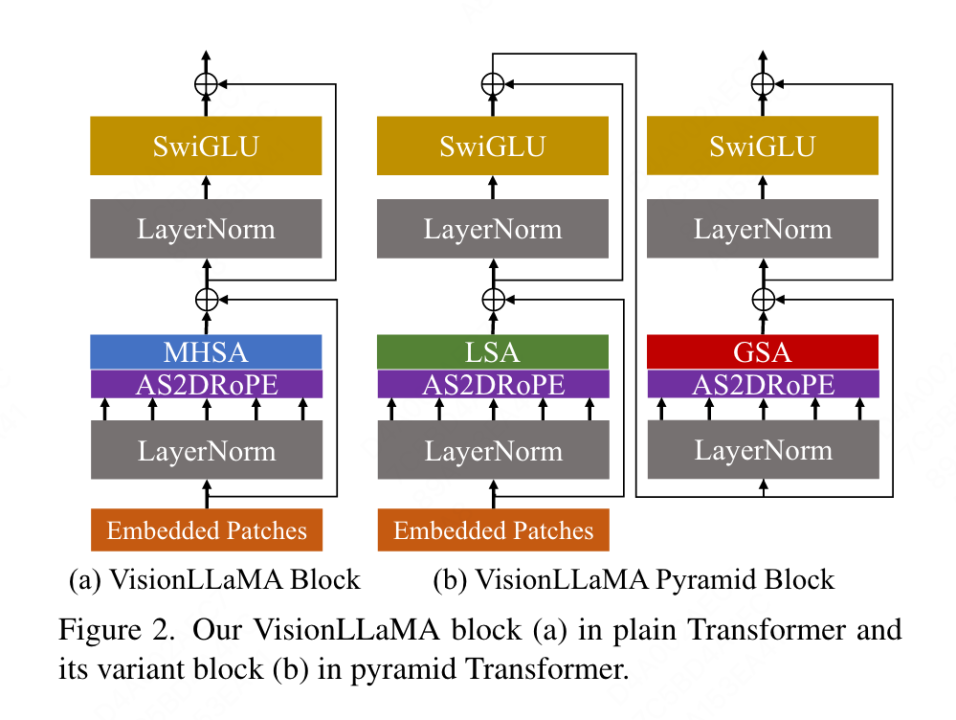

2. This paper examines ways to adapt VisionLLaMA to common vision tasks, including image understanding and generation (Figure 1). It investigates two well-known vision architecture schemes (conventional structure and pyramid structure) and evaluates their performance in supervised and self-supervised learning scenarios. Additionally, this paper proposes AS2DRoPE (auto-scaled 2D RoPE), which extends rotary positional encoding from 1D to 2D and uses interpolation scaling to accommodate arbitrary resolutions.

3. Under careful evaluation, VisionLLaMA significantly outperforms current mainstream, carefully tuned vision transformers in many representative tasks such as image generation, classification, semantic segmentation, and object detection. Extensive experiments show that VisionLLaMA converges faster and performs better than existing vision transformers.

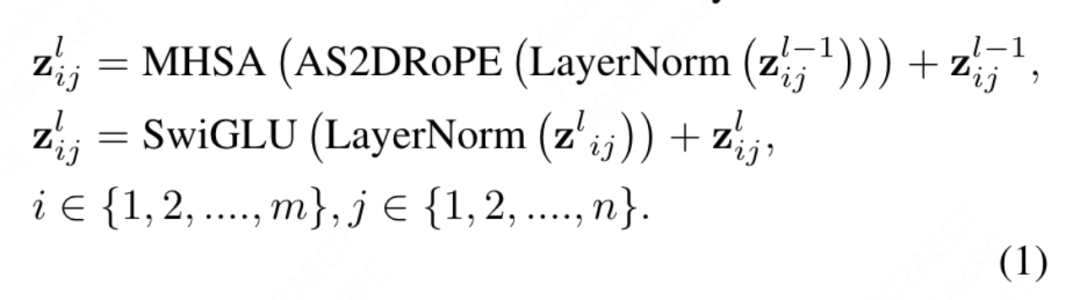

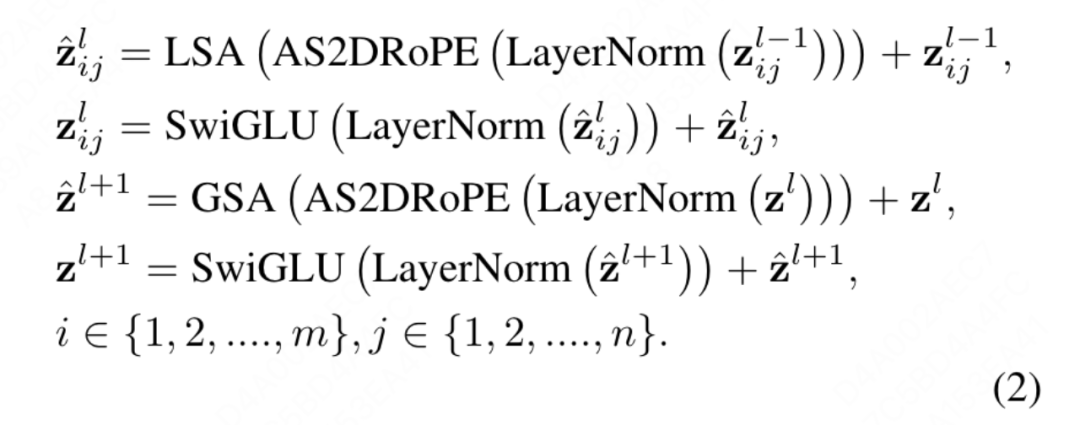

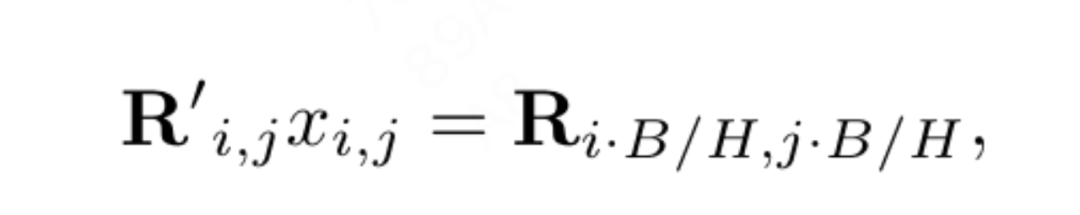

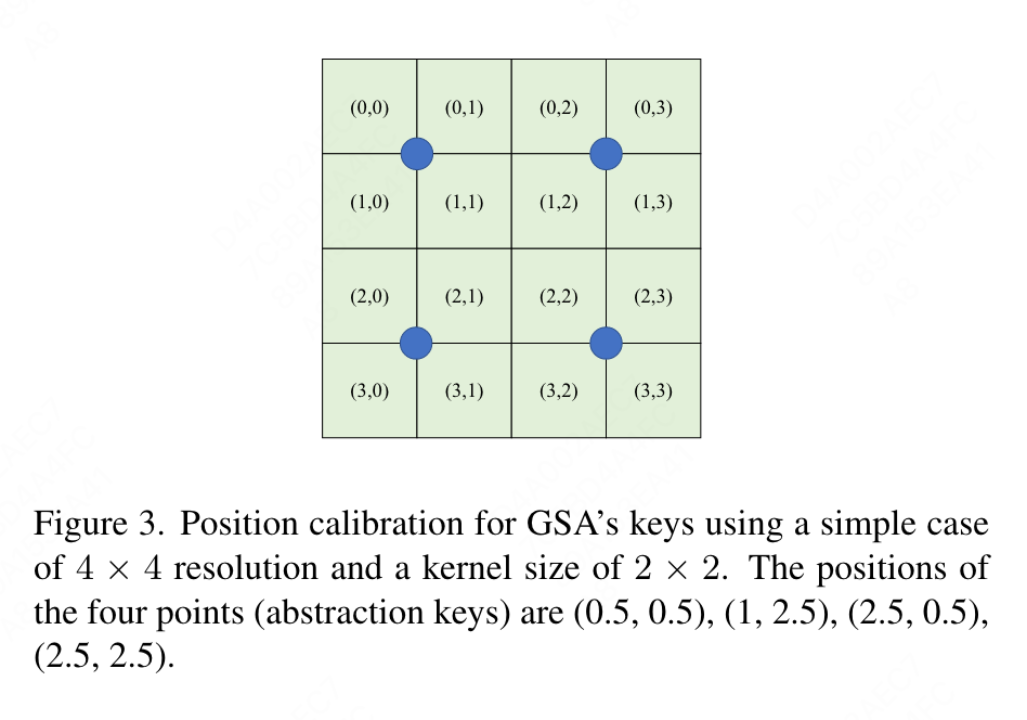

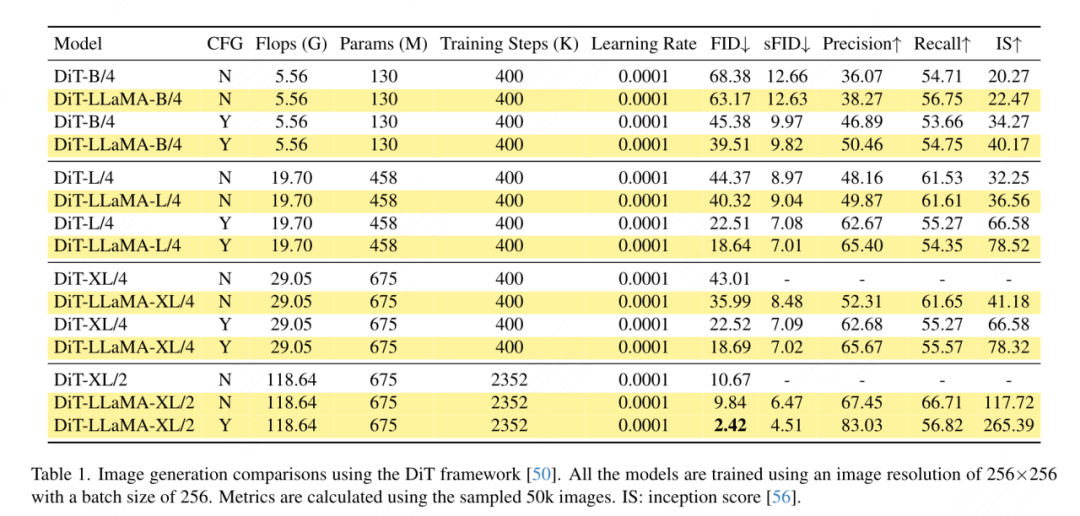

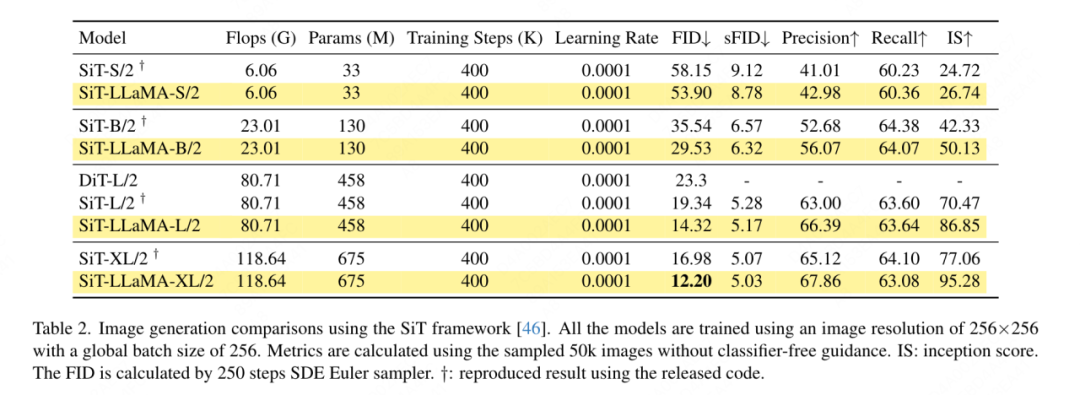

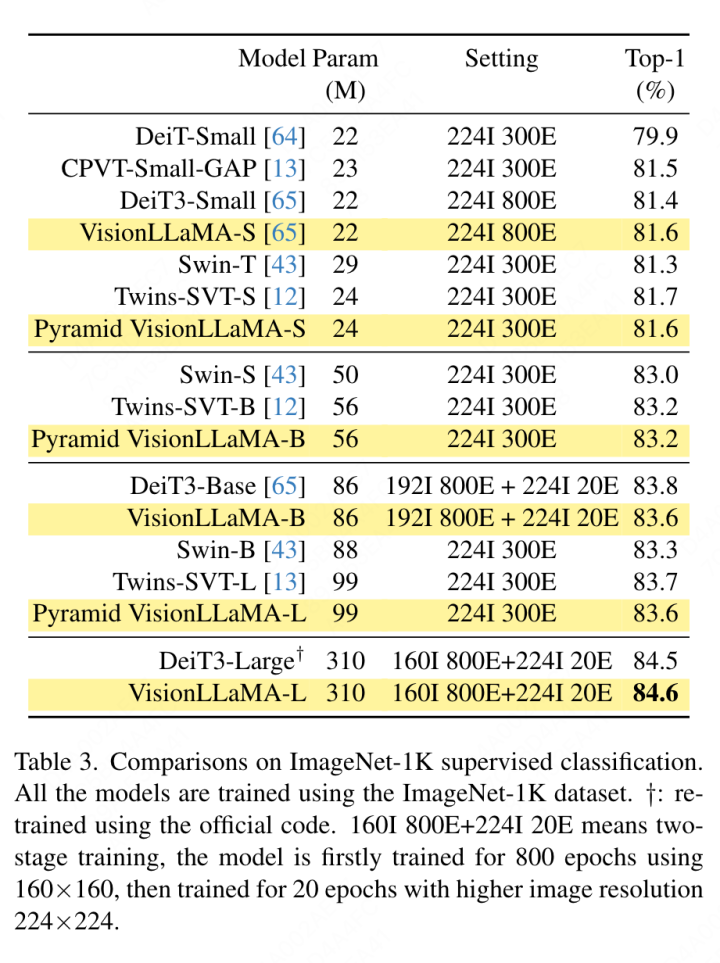

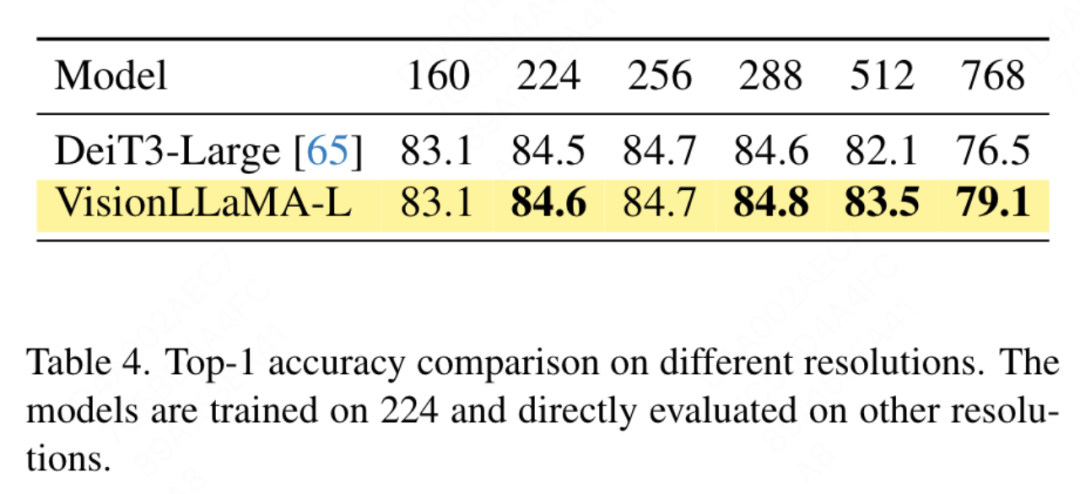

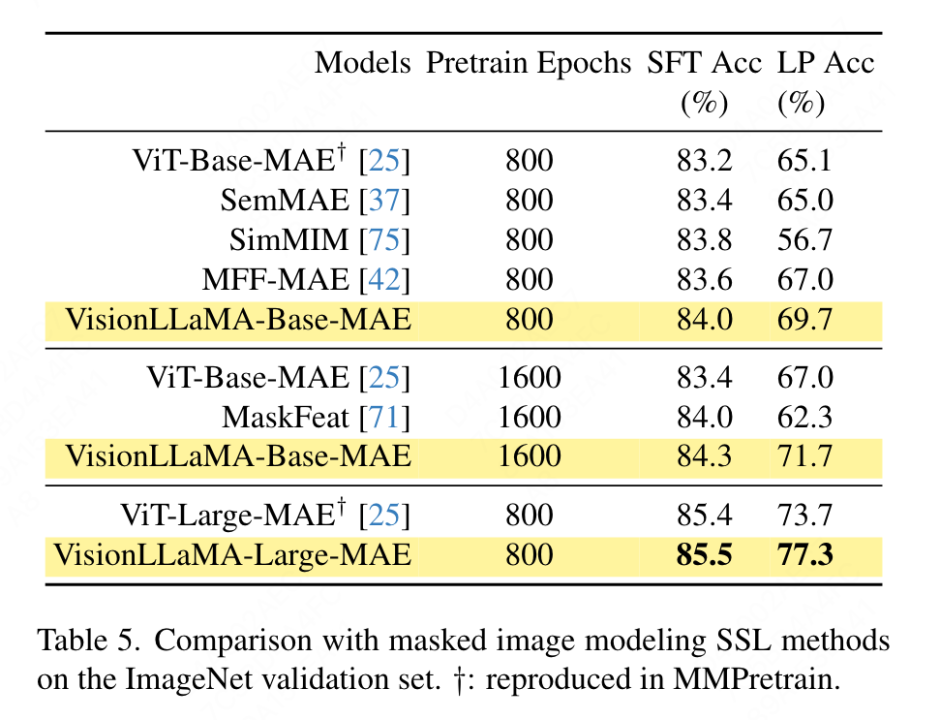

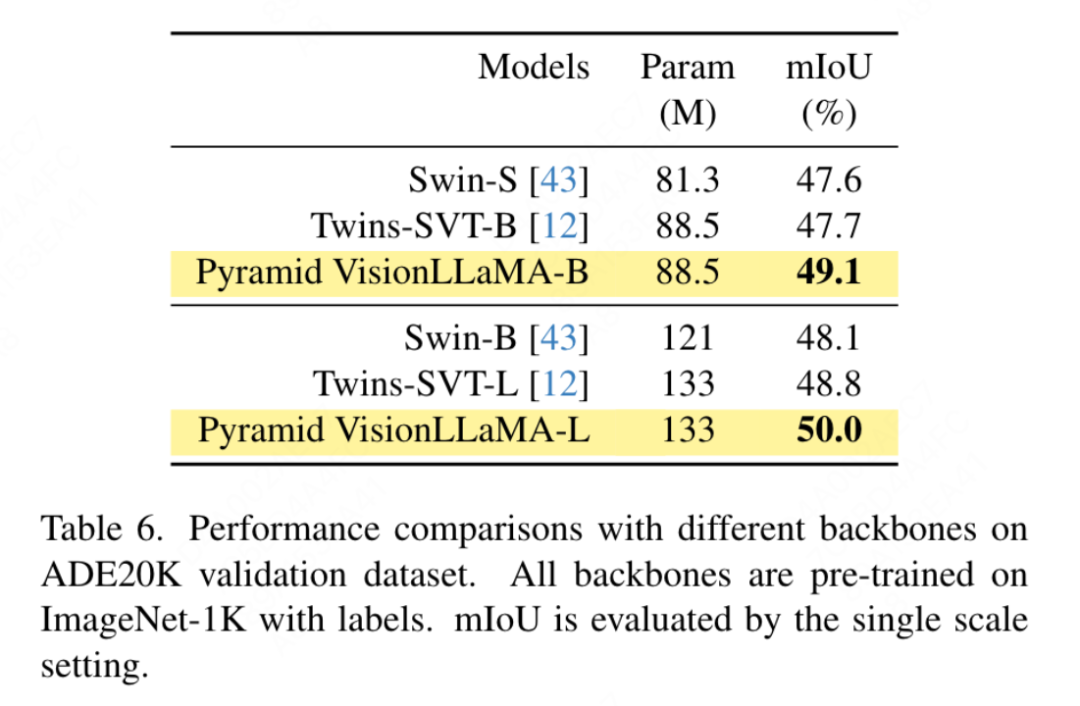

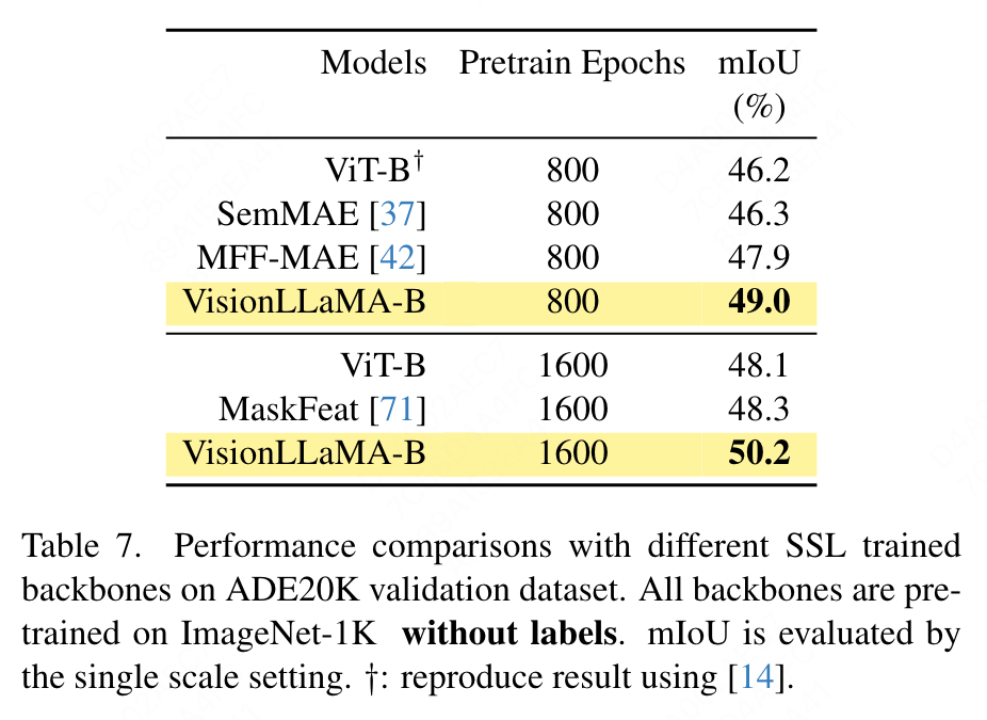

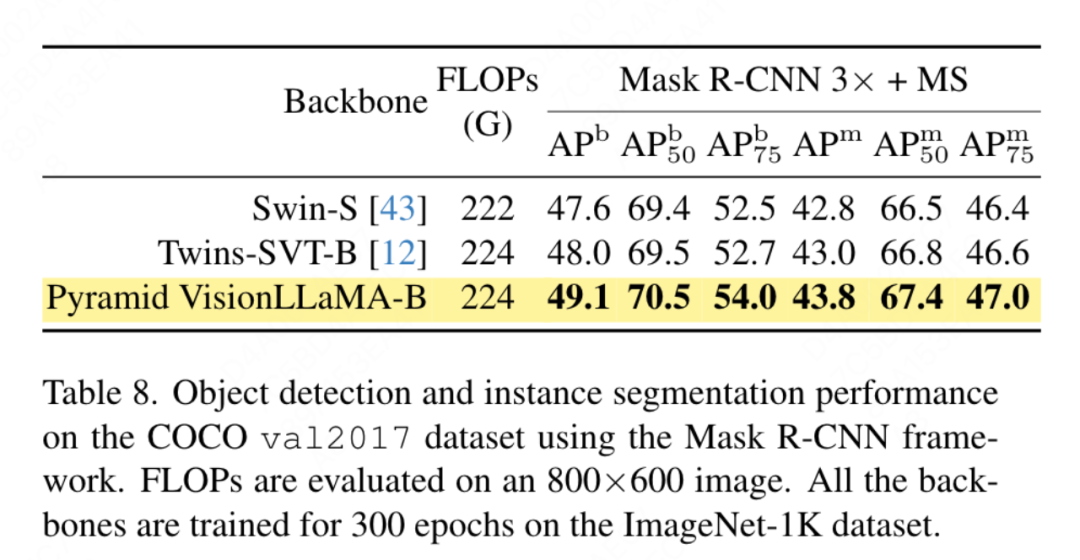

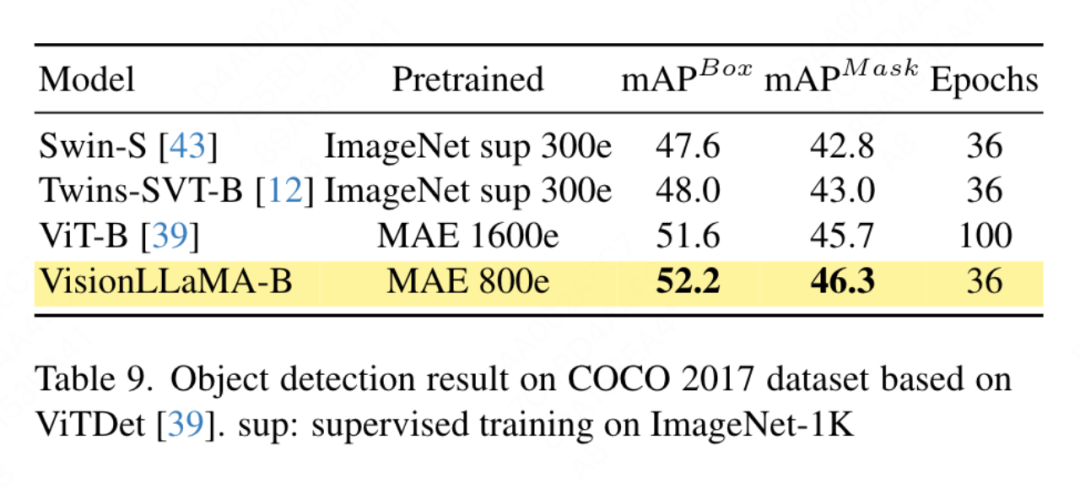

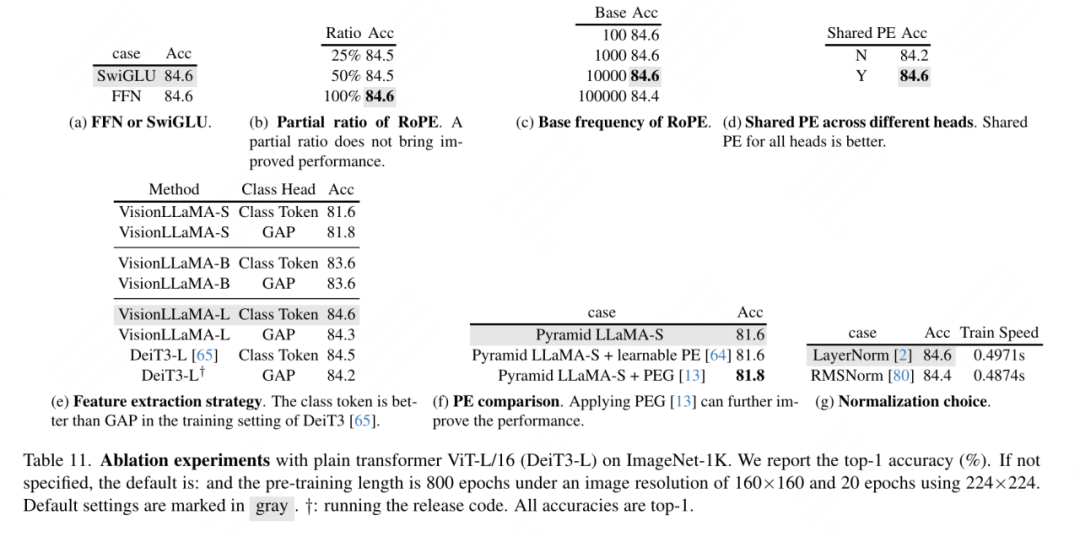

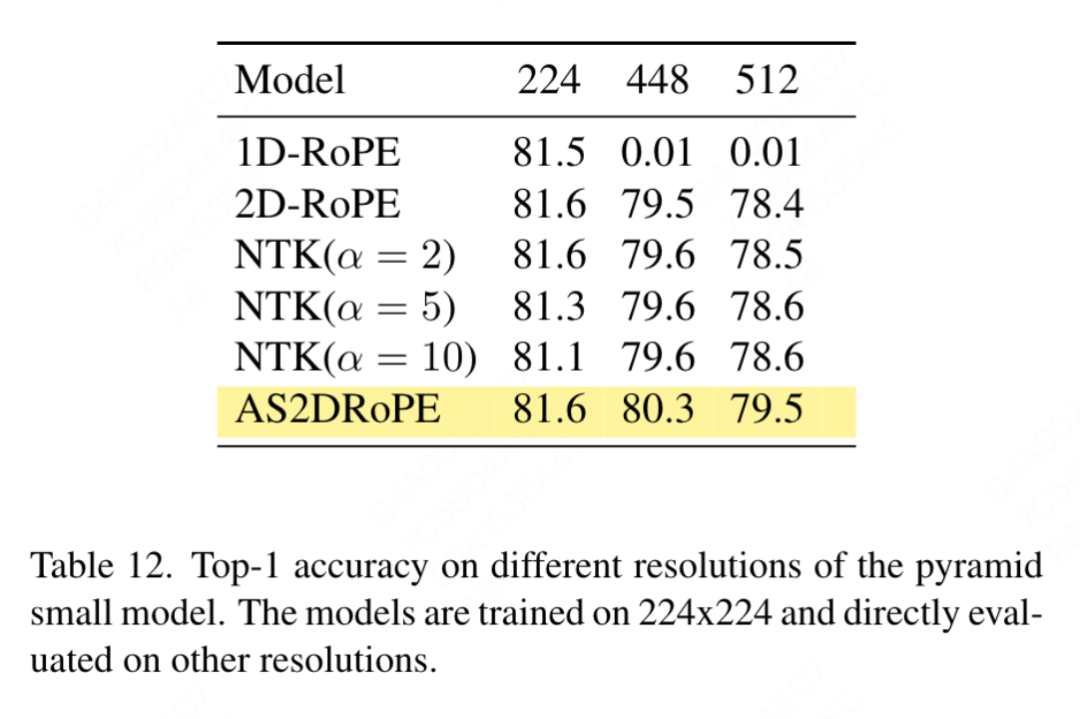

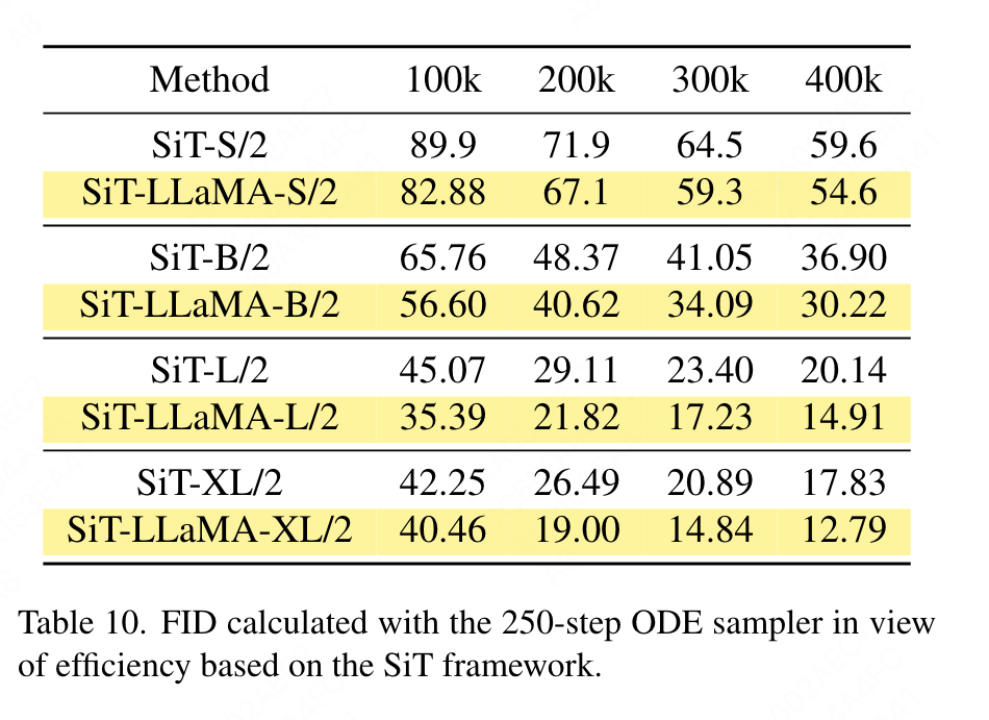

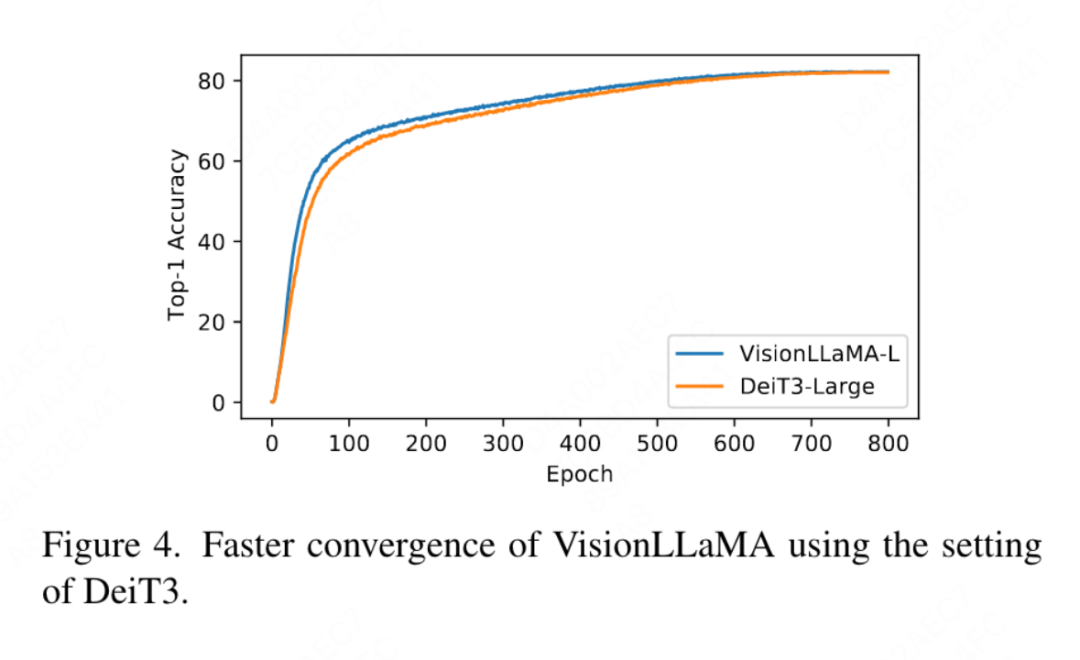

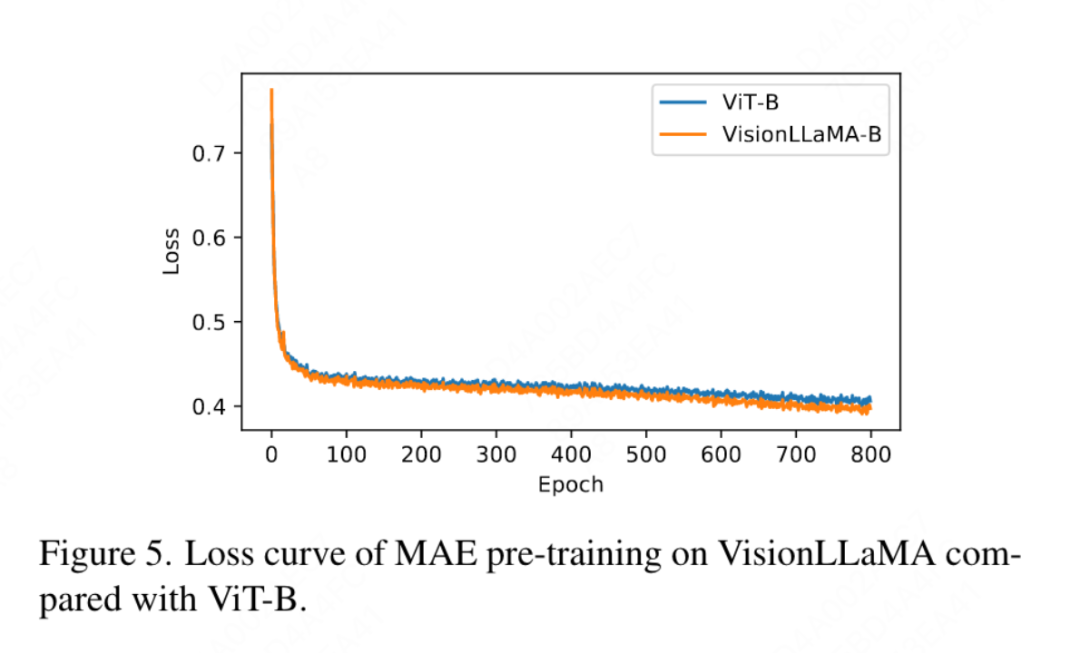

VisionLLaMA overall architecture design

General Transformer

The conventional VisionLLaMA proposed in this article follows the ViT pipeline and retains the architectural design of LLaMA as much as possible. An image is first transformed and flattened into a sequence, a category token is added at the beginning of the sequence, and the entire sequence is processed through L VisionLLaMA blocks. Unlike ViT, VisionLLaMA does not add positional encoding to the input sequence because the VisionLLaMA blocks themselves contain positional encoding. Specifically, the block differs from the standard ViT block in two ways: self-attention with rotary positional encoding (RoPE) and SwiGLU activation. This article still uses LayerNorm instead of RMSNorm because experiments found that the former performs better (see Table 11g). The structure of the block is shown in Figure 2(a). This paper finds that directly applying 1D RoPE to vision tasks does not generalize well to different resolutions, so it is extended to a two-dimensional form.

Pyramid Structure Transformer

Applying VisionLLaMA to a Swin-like window-based transformer is very simple, so this article instead explores how to build a powerful pyramid-structured transformer on a stronger baseline, Twins. The original Twins architecture leverages conditional positional encoding and interleaved local-global information exchange in the form of local-global attention. These components are common across transformers, which means it is not difficult to apply VisionLLaMA to various transformer variants. The goal of this article is not to invent a new pyramid-structured vision transformer, but to show how to adapt the basic design of VisionLLaMA to an existing design. Therefore, this article follows the principle of minimal modification to the architecture and hyperparameters.
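The feed-forward half of the block described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a pre-norm residual layout and bias-free projections; the names `swiglu_ffn`, `w_gate`, `w_up`, `w_down` and all sizes are hypothetical and are not taken from the paper or its code.

```python
import numpy as np

def silu(x):
    # SiLU (swish) activation: x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def swiglu_ffn(x, w_gate, w_up, w_down):
    # SwiGLU feed-forward: a SiLU-gated branch multiplied by a linear branch,
    # then projected back to the model width.
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down

def layer_norm(x, eps=1e-6):
    # LayerNorm over the channel axis, without learnable scale and shift.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
dim, hidden, n_tokens = 8, 16, 5            # illustrative sizes only
x = rng.normal(size=(n_tokens, dim))
w_gate = rng.normal(size=(dim, hidden)) * 0.1
w_up = rng.normal(size=(dim, hidden)) * 0.1
w_down = rng.normal(size=(hidden, dim)) * 0.1

# Assumed pre-norm residual sub-block: x + SwiGLU(LayerNorm(x))
y = x + swiglu_ffn(layer_norm(x), w_gate, w_up, w_down)
```

Compared with a standard two-layer MLP, the gated form uses three projection matrices instead of two, which is why LLaMA-style models usually shrink the hidden width to keep the parameter count similar.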
Following the naming of ViT, two consecutive blocks interleave LSA and GSA, where LSA is the local self-attention operation within a group, and GSA is global subsampled attention, which interacts with representative key values from each subwindow. This article removes the conditional positional encoding in the pyramid-structured VisionLLaMA because the position information is already included in AS2DRoPE. In addition, the category token is removed and GAP (global average pooling) is used before the classification head. The block structure under this setting is shown in Figure 2(b).

Training or inference beyond sequence length limitations

Extending one-dimensional RoPE to two dimensions: Handling different input resolutions is a common requirement in vision tasks. Convolutional neural networks use a sliding-window mechanism to handle variable lengths. In contrast, most vision transformers apply local window operations or interpolation. For example, DeiT uses bicubic interpolation when trained on different resolutions, and CPVT uses convolution-based positional encoding. This paper evaluates 1D RoPE and finds that it has the highest accuracy at 224×224 resolution. However, when the resolution increases to 448×448, the accuracy drops sharply, even to 0. Therefore, this paper extends one-dimensional RoPE to two dimensions. In the multi-head self-attention mechanism, the 2D RoPE is shared among different heads.

Positional interpolation helps 2D RoPE generalize better: Inspired by work that uses interpolation to extend the context window of LLaMA, VisionLLaMA expands the 2D context window in a similar manner when higher resolutions are involved. Unlike language tasks, which enlarge a fixed context length, vision tasks such as object detection often handle different sampling resolutions in different iterations.
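To make the idea of a two-dimensional RoPE concrete, here is a minimal NumPy sketch. It assumes one common channel layout (the first half of the channels rotates with the row index, the second half with the column index); the paper's exact layout may differ, and the function names and the `scale` argument are hypothetical. The `scale` argument only illustrates how coordinates could be compressed for positional interpolation.

```python
import numpy as np

def rope_1d(x, pos, base=10000.0):
    # Rotate consecutive channel pairs of x (n, d) by angles pos * theta_k.
    n, d = x.shape                                   # d must be even
    theta = base ** (-np.arange(0, d, 2) / d)        # (d/2,) frequencies
    ang = pos[:, None] * theta[None, :]              # (n, d/2) angles
    cos, sin = np.cos(ang), np.sin(ang)
    x1, x2 = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x1 * cos - x2 * sin
    out[:, 1::2] = x1 * sin + x2 * cos
    return out

def rope_2d(x, rows, cols, scale=1.0, base=10000.0):
    # Assumed layout: first half of channels encodes rows, second half columns.
    half = x.shape[1] // 2                           # channel count divisible by 4
    return np.concatenate(
        [rope_1d(x[:, :half], rows * scale, base),
         rope_1d(x[:, half:], cols * scale, base)],
        axis=1,
    )

# Usage on a small 4 x 4 token grid with 8 channels (illustrative sizes).
rng = np.random.default_rng(0)
side = 4
rr, cc = np.meshgrid(np.arange(side), np.arange(side), indexing="ij")
rows, cols = rr.ravel().astype(float), cc.ravel().astype(float)
q = rng.normal(size=(side * side, 8))
q_rot = rope_2d(q, rows, cols)
```

Because each channel pair is only rotated, token norms are unchanged, and the dot product between a rotated query and a rotated key depends only on their relative row and column offsets.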
This article trains a small model at an input resolution of 224×224 and evaluates it at larger resolutions without retraining, which guides the choice between interpolation and extrapolation strategies. After experiments, this article chose auto-scaled interpolation (AS2DRoPE) based on an "anchor resolution". The calculation for a square H × H image with an anchor resolution of B × B is highly efficient and introduces no additional cost. If the training resolution remains unchanged, AS2DRoPE degenerates into 2D RoPE.

Because location information must be added to the summarized key values, this article gives GSA special treatment under the pyramid structure setting. These subsampled keys are generated through abstraction on the feature map, using a convolution with kernel size k×k and stride k. As shown in Figure 3, the coordinates of the generated key values can be expressed as the average of the sampled features.

Experimental results

This paper comprehensively evaluates the effectiveness of VisionLLaMA on image generation, classification, segmentation, and detection. By default, all models are trained on 8 NVIDIA Tesla A100 GPUs.

Image generation

Image generation based on the DiT framework: This article applies VisionLLaMA within the DiT framework because DiT is a representative work that uses a vision transformer and DDPM for image generation. This article replaces DiT's original vision transformer with VisionLLaMA while keeping other components and hyperparameters unchanged. This experiment demonstrates the versatility of VisionLLaMA on image generation tasks. As in DiT, the number of DDPM sampling steps is set to 250, and the experimental results are shown in Table 1.
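Returning to the AS2DRoPE description above, the following NumPy sketch shows one plausible reading of the two coordinate rules: token coordinates are rescaled by the ratio of anchor size to current size, and the coordinate of each subsampled GSA key is taken as the mean coordinate of its k×k window. Both rules are assumptions made for illustration, and `as2d_coords` and `pooled_key_coords` are hypothetical names; sizes are given in tokens per side rather than pixels.

```python
import numpy as np

def as2d_coords(h, anchor):
    # Coordinates for an h x h token grid, rescaled to the anchor grid's range.
    scale = anchor / h                       # equals 1.0 when h == anchor
    idx = np.arange(h, dtype=float) * scale
    rows, cols = np.meshgrid(idx, idx, indexing="ij")
    return rows, cols

def pooled_key_coords(rows, cols, k):
    # Coordinates of keys summarized by a k x k, stride-k operation:
    # the mean coordinate of the positions inside each window.
    h, w = rows.shape
    r = rows.reshape(h // k, k, w // k, k).mean(axis=(1, 3))
    c = cols.reshape(h // k, k, w // k, k).mean(axis=(1, 3))
    return r, c

# 224 x 224 and 448 x 448 inputs with 16 x 16 patches give 14 and 28 tokens per side.
rows14, cols14 = as2d_coords(14, anchor=14)   # unchanged: plain 2D RoPE coordinates
rows28, cols28 = as2d_coords(28, anchor=14)   # compressed into the anchor range
key_rows, key_cols = pooled_key_coords(*as2d_coords(4, anchor=4), k=2)
```

The scaling is a single multiplication per coordinate, which is consistent with the statement that the calculation adds no meaningful cost, and it reduces to the identity when the resolution equals the anchor.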
In keeping with most methods, FID is treated as the primary metric, and models are also evaluated on secondary metrics such as sFID, Precision/Recall, and Inception Score. The results show that VisionLLaMA significantly outperforms DiT across model sizes. This article also extends the training of the XL model to 2352k steps to evaluate whether the model only converges faster or still performs better under longer training. The FID of DiT-LLaMA-XL/2 is 0.83 lower than that of DiT-XL/2, indicating that VisionLLaMA not only has better computational efficiency but also higher performance than DiT. Some examples generated using XL models are shown in Figure 1.

Image generation based on the SiT framework: The SiT framework significantly improves the performance of image generation with vision transformers. This article replaces the vision transformer in SiT with VisionLLaMA to evaluate the benefit of a better model architecture, and calls the result SiT-LLaMA. The experiments retain all remaining settings and hyperparameters of SiT, all models are trained for the same number of steps, and the linear interpolant and velocity model are used in all experiments. For a fair comparison, the authors also rerun the published code and sample 50k 256×256 images using an SDE sampler (Euler) with 250 steps. The results are shown in Table 2. SiT-LLaMA outperforms SiT at every capacity level. Compared to SiT-L/2, SiT-LLaMA-L/2 lowers FID by 5.0, which is larger than the improvement brought by the new framework (4.0 FID). This paper also reports results with the more efficient ODE sampler (dopri5) in Table 13, where the performance gap still exists. As in the SiT paper, SDE samplers perform better than their ODE counterparts.
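Since FID is the primary metric in the generation experiments above, a short sketch of the underlying Fréchet distance between two Gaussian fits may help. This is a minimal NumPy illustration of the standard formula, not the evaluation code used by the paper: in practice the features come from an Inception network, whereas here they are random stand-ins, and `frechet_distance` is a hypothetical name.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    # ||mu_a - mu_b||^2 + Tr(C_a) + Tr(C_b) - 2 Tr(sqrt(C_a C_b))
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr(sqrt(C_a C_b)) equals the sum of square roots of the eigenvalues of C_a C_b.
    eig = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eig.real, 0.0, None)).sum()
    mean_term = ((mu_a - mu_b) ** 2).sum()
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)

rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))              # stand-in for real-image features
fake = real + np.array([1.0, 0.0, 0.0, 0.0])  # same covariance, shifted mean

same = frechet_distance(real, real)
shifted = frechet_distance(real, fake)
```

Lower is better: identical feature sets give a distance of approximately zero, and shifting every feature by a fixed vector adds that vector's squared length.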
Image Classification on ImageNet

This section focuses on fully supervised training on the ImageNet-1K dataset. To exclude the influence of other datasets or distillation techniques, all models are trained using only the ImageNet-1K training set, and the accuracy on the validation set is shown in Table 3.

Comparison with conventional vision transformers: DeiT3 is the current state-of-the-art conventional vision transformer. It proposes a special data augmentation and performs an extensive hyperparameter search to improve performance. DeiT3 is sensitive to hyperparameters and prone to overfitting. Replacing the category token with GAP (global average pooling) causes the accuracy of the DeiT3-Large model to drop by 0.7% after 800 epochs of training. Therefore, this article uses the category token instead of GAP in conventional transformers. The results are shown in Table 3, where VisionLLaMA achieves top-1 accuracy comparable to DeiT3. Accuracy at a single resolution does not provide a comprehensive comparison, so this paper also evaluates performance at different image resolutions, with the results shown in Table 4. For DeiT3, bicubic interpolation is used for the learnable positional encoding. Although the two models have comparable performance at 224×224 resolution, the gap widens as the resolution increases, which means that VisionLLaMA generalizes better across resolutions, a property that benefits object detection and many other downstream tasks.

Comparison with pyramid-structured vision transformers: This article uses the same architecture as Twins-SVT, and the detailed configuration is listed in Table 17. The conditional positional encoding is removed because VisionLLaMA already includes rotary positional encoding. VisionLLaMA is therefore a convolution-free architecture. All settings, including hyperparameters, follow Twins-SVT.
This article does not use category tokens, but applies GAP. The results are shown in Table 3. The method achieves performance comparable to Twins at all model levels and is consistently better than Swin.

This article evaluates two common approaches to self-supervised vision transformers on ImageNet. The training data is limited to ImageNet-1K, and any components that could improve performance using CLIP, DALLE, or distillation are removed. The implementation is based on the MMPretrain framework, using the MAE framework with VisionLLaMA replacing the encoder and all other components unchanged. This controlled experiment isolates the effectiveness of the method. Furthermore, the same hyperparameter settings as the compared methods are used, and significant improvements over strong baselines are still achieved.

Full fine-tuning setup: In this setup, the model is first initialized with pre-trained weights and then trained further with all parameters trainable. VisionLLaMA-Base, trained on ImageNet for 800 epochs, achieves a top-1 accuracy of 84.0%, which is 0.8% higher than ViT-Base. The method trains about 3 times faster than SimMIM. This paper also increases the training period to 1600 epochs to verify whether VisionLLaMA keeps its advantage given sufficient training resources. VisionLLaMA-Base achieves new SOTA results among MAE variants, with a top-1 accuracy of 84.3%, a 0.9% improvement over ViT-Base. Considering that full fine-tuning carries a risk of performance saturation, this improvement is significant.

Linear probing: A recent work suggests that the linear probing metric is a more reliable assessment of representation learning. In this setup, the model is initialized with pre-trained weights from the SSL stage. Then, during training, the entire backbone network is frozen except for the classifier head.
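The linear probing protocol described above (frozen backbone, trainable classifier head) can be sketched with a toy NumPy example. Everything here is a stand-in chosen for illustration: the "backbone" is a fixed random projection rather than a pre-trained VisionLLaMA, the data is synthetic, and the learning rate and step count are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained backbone: fixed random projection + ReLU.
w_backbone = rng.normal(size=(10, 32)) / np.sqrt(10)
w_backbone_before = w_backbone.copy()

def backbone(x):
    return np.maximum(x @ w_backbone, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(p, y):
    return float(-np.log(p[np.arange(len(y)), y]).mean())

# Toy two-class data (illustrative only).
x = rng.normal(size=(200, 10))
y = (x[:, 0] > 0).astype(int)

feats = backbone(x)                  # frozen features, computed once
w_head = np.zeros((32, 2))           # only the linear head is trained
b_head = np.zeros(2)
initial_loss = cross_entropy(softmax(feats @ w_head + b_head), y)

lr = 0.1
for _ in range(300):
    p = softmax(feats @ w_head + b_head)
    grad_logits = p.copy()
    grad_logits[np.arange(len(y)), y] -= 1.0   # softmax cross-entropy gradient
    grad_logits /= len(y)
    w_head -= lr * feats.T @ grad_logits
    b_head -= lr * grad_logits.sum(axis=0)

final_loss = cross_entropy(softmax(feats @ w_head + b_head), y)
accuracy = float(((feats @ w_head + b_head).argmax(axis=1) == y).mean())
```

Because no gradient ever reaches the backbone, the resulting accuracy reflects how linearly separable the frozen features are, which is the reason linear probing is used to judge representation quality.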
The results are shown in Table 5: at a training cost of 800 epochs, VisionLLaMA-Base outperforms ViT-Base-MAE by 4.6%. It also outperforms ViT-Base-MAE trained for 1600 epochs. When trained for 1600 epochs, VisionLLaMA-Base achieves a top-1 accuracy of 71.7%. The method is also extended to VisionLLaMA-Large, which improves by 3.6% over ViT-Large.

Semantic Segmentation on ADE20K Dataset

Following Swin's settings, this article evaluates the method with semantic segmentation on the ADE20K dataset. For a fair comparison, the baseline models are restricted to ImageNet-1K pre-training only. This article uses the UperNet framework and replaces the backbone network with the pyramid-structured VisionLLaMA. The implementation is based on the MMSegmentation framework. The number of training steps is set to 160k, and the global batch size is 16. The results are shown in Table 6. At similar FLOPs, the method outperforms Swin and Twins by more than 1.2% mIoU.

This article also uses the UperNet framework for semantic segmentation on ADE20K with VisionLLaMA replacing the ViT backbone, while keeping other components and hyperparameters unchanged. The implementation is based on MMSegmentation, and the results are shown in Table 7. With 800 epochs of pre-training, VisionLLaMA-B improves on ViT-Base by 2.8% mIoU. The method is also significantly better than some other improvements, such as introducing additional training objectives or features, which add overhead to the training process and reduce training speed. In contrast, VisionLLaMA only replaces the base model and trains quickly. This paper further evaluates a longer pre-training schedule of 1600 epochs, where VisionLLaMA-B achieves 50.2% mIoU on the ADE20K validation set, improving on ViT-B by 2.1% mIoU.
Object detection on the COCO dataset

This paper evaluates the pyramid-structured VisionLLaMA on object detection on the COCO dataset. It uses the Mask RCNN framework and replaces the backbone network with a pyramid-structured VisionLLaMA pre-trained on ImageNet-1K for 300 epochs, similar to Swin's setup. The model therefore has the same number of parameters and FLOPs as Twins. This experiment verifies the effectiveness of the method on object detection. The implementation is based on the MMDetection framework. Table 8 shows the results of the standard 36-epoch training schedule (3×). The model outperforms both Swin and Twins. Specifically, VisionLLaMA-B outperforms Swin-S by 1.5% box mAP and 1.0% mask mAP. Compared with the stronger baseline Twins-B, the method is 1.1% higher in box mAP and 0.8% higher in mask mAP.

This article also applies VisionLLaMA within the ViTDet framework, which leverages conventional vision transformers to achieve performance comparable to the corresponding pyramid-structured vision transformers. This paper uses the Mask RCNN detector and replaces the ViT-Base backbone network with the VisionLLaMA-Base model, which is pre-trained with MAE for 800 epochs. The original ViTDet converges slowly and requires specialized training strategies, such as longer training schedules, to achieve optimal performance. During training, this paper found that VisionLLaMA achieved similar performance after 30 epochs, so the standard 3× training strategy was applied directly. The training cost of the method is only 36% of the baseline. Unlike the compared methods, the method does not perform a search for optimal hyperparameters. The results are shown in Table 9. VisionLLaMA outperforms ViT-B by 0.6% on box mAP and 0.8% on mask mAP.
Ablation experiment and discussion

Ablation experiment

By default, this article conducts ablation experiments on the ViT-Large model, because the variance this model produces across multiple runs is observed to be small.

Ablation of FFN and SwiGLU: This paper replaces FFN with SwiGLU, and the results are shown in Table 11a. Due to the obvious performance gap, this paper chooses to use SwiGLU to avoid introducing additional modifications to the LLaMA architecture.

Ablation of normalization strategies: This paper compares two widely used normalization methods in transformers, RMSNorm and LayerNorm, and the results are shown in Table 11g. The latter has better final performance, suggesting that re-centering invariance is also important in vision tasks. This article also measures the average time spent per iteration as an indicator of training speed, where LayerNorm is only 2% slower than RMSNorm. Therefore, this article chooses LayerNorm instead of RMSNorm for more balanced performance.

Partial positional encoding: This paper varies the ratio of channels to which RoPE is applied. The results are shown in Table 11b. Setting the ratio above a small threshold yields good performance, and no significant performance difference is observed between settings. Therefore, this article retains the default setting in LLaMA.

Base frequency: This paper varies and compares the base frequency, and the results are shown in Table 11c. The results show that performance is robust across a wide range of frequencies. Therefore, this article retains the default value in LLaMA to avoid additional special handling at deployment time.

Shared positional encoding across attention heads: This paper finds that sharing the same PE between different heads (the frequency in each head varies from 1 to 10000) is better than independent PE (the frequency varies from 1 to 10000 across all channels). The results are shown in Table 11d.
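The re-centering invariance mentioned in the normalization ablation above can be checked directly. The sketch below uses the standard definitions of LayerNorm and RMSNorm without learnable parameters; the input values are arbitrary and chosen only for illustration.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Subtract the per-token mean, then divide by the standard deviation.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def rms_norm(x, eps=1e-6):
    # Divide by the root mean square only; the mean is not removed.
    return x / np.sqrt((x ** 2).mean(axis=-1, keepdims=True) + eps)

x = np.array([[1.0, 2.0, 3.0, 4.0]])
shifted = x + 10.0                      # same token with a constant offset added

ln_same = np.allclose(layer_norm(x), layer_norm(shifted))
rms_same = np.allclose(rms_norm(x), rms_norm(shifted))
```

Here `ln_same` is true and `rms_same` is false: LayerNorm output is unchanged when a constant is added to every channel, while RMSNorm output is not. RMSNorm skips the mean computation, which is where its small speed advantage comes from.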
Feature abstraction strategy: This paper compares two common feature abstraction strategies on a large model (-L): the category token and GAP. The results are shown in Table 11e. Using the category token is better than GAP, which differs from the conclusion obtained in PEG [13]. However, the training settings for the two methods are quite different. This paper also conducted additional experiments using DeiT3-L and reached similar conclusions. This article further evaluates the "small" (-S) and "base" (-B) models. Interestingly, the opposite conclusion is observed in small models, and there is reason to suspect that the higher drop-path rate used in DeiT3 makes it difficult for parameter-free abstraction methods such as GAP to achieve the desired effect.

Positional encoding strategy: This paper also evaluates other absolute positional encoding strategies, such as learnable positional encoding and PEG, on the pyramid-structured VisionLLaMA-S. Because a strong baseline exists, this paper uses the "small" model, and the results are shown in Table 11f: learnable PE does not improve performance, while PEG slightly improves the baseline from 81.6% to 81.8%. This article does not include PEG as an essential component for three reasons. First, this paper attempts to make minimal modifications to LLaMA. Second, the goal of this paper is to propose an approach that is as general across tasks as ViT. For masked image frameworks like MAE, PEG increases training costs and may hurt performance on downstream tasks. In principle, sparse PEG can be applied under the MAE framework, but it introduces deployment-unfriendly operators. Whether sparse convolutions contain as much positional information as their dense versions remains an open question. Third, the modality-free design paves the way for further research covering modalities beyond text and vision.
Sensitivity to input size: Without additional training, this article compares performance at increased resolutions with performance at the common resolution, and the results are shown in Table 12. The pyramid-structured transformer is used here because it is more popular for downstream tasks than the corresponding non-hierarchical version. Unsurprisingly, the performance of 1D-RoPE is severely affected by resolution changes. NTK-Aware interpolation with α = 2 achieves performance similar to 2D-RoPE, which is in effect NTK-Aware interpolation with α = 1. AS2DRoPE demonstrates the best performance at larger resolutions.

Discussion

Convergence speed: For image generation, this paper studies performance over the course of training, saving weights to compute fidelity metrics at 100k, 200k, 300k, and 400k iterations. Since the SDE sampler is significantly slower than the ODE sampler, the ODE sampler is used here. The results in Table 10 show that VisionLLaMA converges much faster than ViT on all models. SiT-LLaMA with 300k training iterations even outperforms the baseline model with 400k training iterations. Figure 4 compares top-1 accuracy on ImageNet over 800 epochs of fully supervised training against DeiT3-Large, showing that VisionLLaMA converges faster than DeiT3-L. Figure 5 further compares the training loss over 800 epochs of the ViT-Base model under the MAE framework. VisionLLaMA has a lower training loss at the beginning and maintains this trend until the end.
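For the NTK-Aware interpolation mentioned in the input-size comparison above, the commonly used formulation rescales the RoPE base rather than the positions. The sketch below assumes that formulation (base multiplied by α raised to d/(d−2)); it is not confirmed to be the exact variant used in the paper, and `ntk_scaled_frequencies` is a hypothetical name.

```python
import numpy as np

def ntk_scaled_frequencies(dim, alpha=1.0, base=10000.0):
    # Commonly used NTK-aware rule: enlarge the base so that the lowest
    # frequency is divided by alpha while the highest stays unchanged.
    scaled_base = base * alpha ** (dim / (dim - 2))
    return scaled_base ** (-np.arange(0, dim, 2) / dim)

plain = ntk_scaled_frequencies(64, alpha=1.0)     # identical to ordinary RoPE
stretched = ntk_scaled_frequencies(64, alpha=2.0)
```

With α = 1 the frequencies are exactly those of ordinary RoPE, which matches the remark that 2D-RoPE corresponds to the α = 1 case. With α = 2 the highest frequency is untouched and the lowest is halved, so fine positional detail is preserved while the longest wavelengths are stretched.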
