OccFusion: A simple and effective multi-sensor fusion framework for Occ (Performance SOTA)

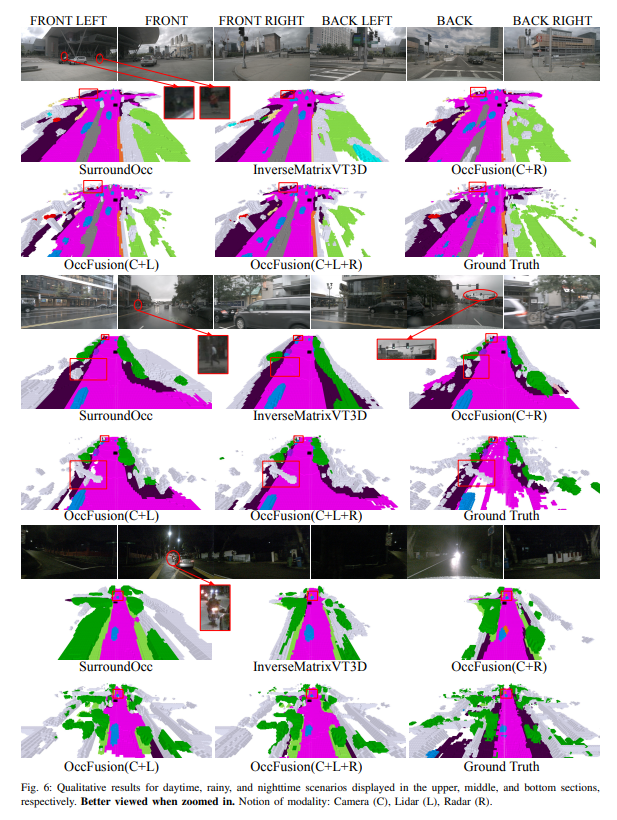

A comprehensive understanding of 3D scenes is crucial in autonomous driving, and recent 3D semantic occupancy prediction models have successfully addressed the challenge of describing real-world objects of different shapes and categories. However, existing 3D occupancy prediction methods rely heavily on surround-view camera images, which makes them susceptible to changes in lighting and weather conditions. By integrating additional sensors such as lidar and surround-view radar, our framework improves the accuracy and robustness of occupancy prediction and achieves top performance on the nuScenes benchmark. Furthermore, extensive experiments on the nuScenes dataset, including challenging nighttime and rainy scenes, confirm the superior performance of our sensor fusion strategy across various sensing ranges.

Paper link: https://arxiv.org/pdf/2403.01644.pdf

Paper name: OccFusion: A Straightforward and Effective Multi-Sensor Fusion Framework for 3D Occupancy Prediction

The main contributions of this paper are summarized as follows:

- A multi-sensor fusion framework is proposed to integrate camera, lidar and radar information to perform 3D semantic occupancy prediction tasks.

- In the 3D semantic occupancy prediction task, our method is compared with other state-of-the-art (SOTA) algorithms to demonstrate the advantages of multi-sensor fusion.

- Thorough ablation studies were conducted to evaluate the performance gains achieved by different sensor combinations under challenging lighting and weather conditions, such as night and rain.

- A comprehensive study was conducted, across various sensor combinations and challenging scenarios, to analyze how perception range affects the performance of our framework in the 3D semantic occupancy prediction task.

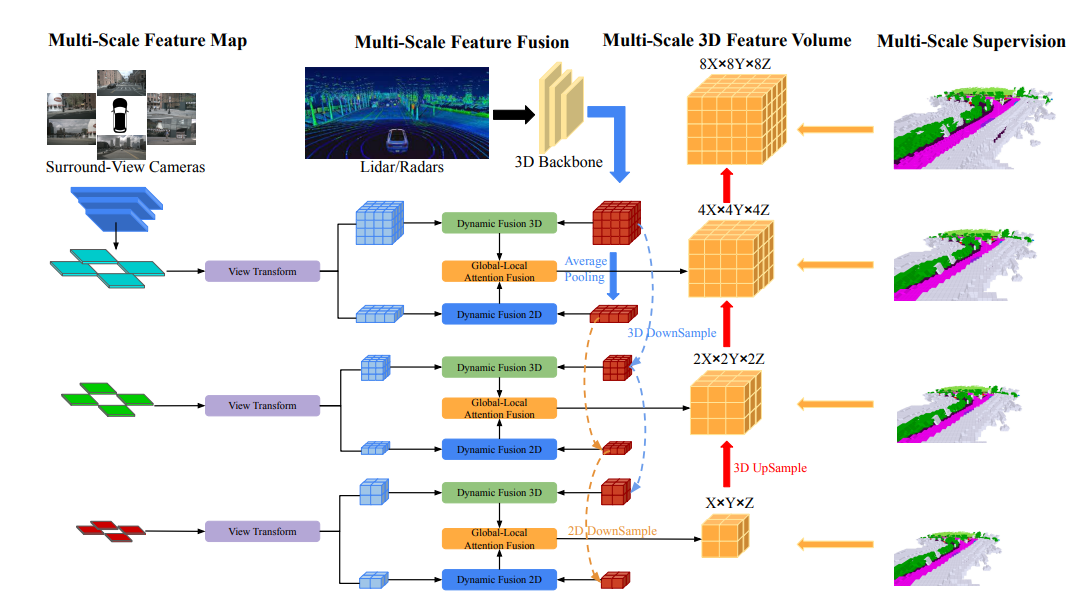

Network structure overview

The overall architecture of OccFusion is as follows. First, surround-view images are fed into a 2D backbone to extract multi-scale features. A view transformation is then applied at each scale to obtain global BEV features and a local 3D feature volume at each level. The 3D point clouds produced by the lidar and surround-view radar are fed into a 3D backbone, which likewise generates multi-scale local 3D feature volumes and global BEV features. At each level, dynamic fusion 3D/2D modules combine the camera features with the lidar/radar features. The merged global BEV features and local 3D feature volume at each level are then passed to the global-local attention fusion module to produce the final 3D volume at each scale. Finally, the 3D volume at each level is upsampled and joined through skip connections, and a multi-scale supervision mechanism is applied.

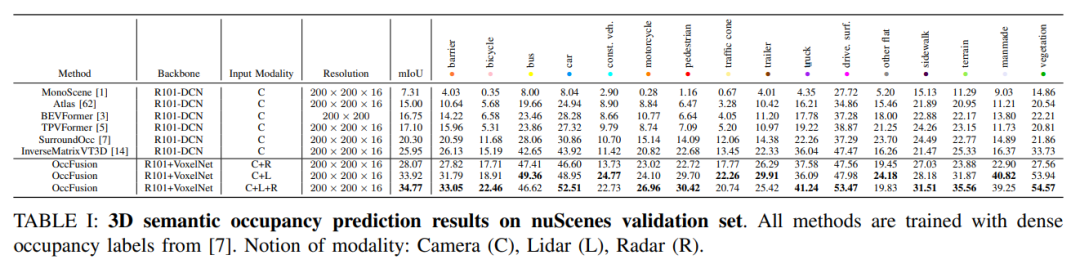
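To make the fusion step more concrete, here is a toy sketch of channel-gated feature fusion. It is not the paper's dynamic fusion module, whose details are not given in this article. It assumes that camera and lidar/radar features for the same voxels are concatenated, that a per-channel gate in (0, 1) is computed from the globally averaged features, and that the gate rescales each channel. The names `dynamic_fuse` and `gate_weights` are hypothetical, and the fixed gate weights stand in for a layer that a real network would learn.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dynamic_fuse(cam_voxels, lidar_voxels, gate_weights):
    """Fuse per-voxel features from two sensors with channel gating.

    cam_voxels, lidar_voxels: lists of feature vectors, one per voxel,
        in the same voxel order.
    gate_weights: one scalar per concatenated channel (hypothetical
        stand-in for a learned gating layer).
    """
    if len(cam_voxels) != len(lidar_voxels):
        raise ValueError("both sensors must cover the same voxels")
    if not cam_voxels:
        return []

    # 1. Concatenate the two feature vectors of every voxel.
    concat = [c + l for c, l in zip(cam_voxels, lidar_voxels)]
    n_channels = len(concat[0])
    if len(gate_weights) != n_channels:
        raise ValueError("need one gate weight per concatenated channel")

    # 2. Global average pooling: one summary value per channel.
    pooled = [
        sum(voxel[ch] for voxel in concat) / len(concat)
        for ch in range(n_channels)
    ]

    # 3. Per-channel gate in (0, 1) computed from the pooled summary.
    gates = [sigmoid(w * p) for w, p in zip(gate_weights, pooled)]

    # 4. Rescale every voxel's channels by the gates.
    return [[g * x for g, x in zip(gates, voxel)] for voxel in concat]


if __name__ == "__main__":
    cam = [[1.0, 0.0], [3.0, 0.0]]
    lidar = [[0.0, 2.0], [0.0, 2.0]]
    # Zero gate weights give a neutral gate of 0.5 on every channel.
    print(dynamic_fuse(cam, lidar, [0.0, 0.0, 0.0, 0.0]))
```

The gate lets the fused representation lean on whichever channels are informative in the current scene, which is the general idea behind weighting camera features less when lighting is poor.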

Experimental comparative analysis

3D semantic occupancy prediction results on the nuScenes validation set. All methods are trained with dense occupancy labels. Modality notation: camera (C), lidar (L), radar (R).

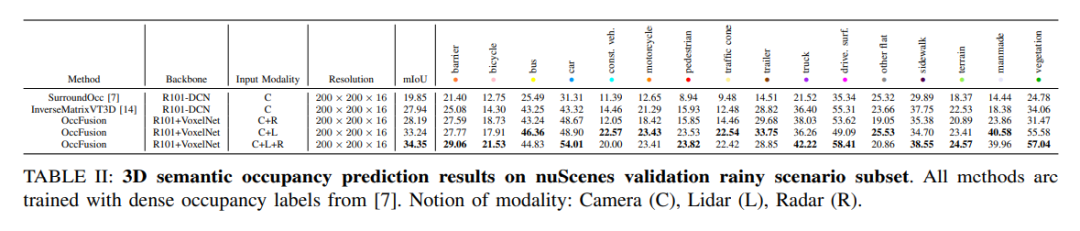
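The result tables are only available as images here, so the exact metrics are not visible in the text. Semantic occupancy benchmarks commonly report mean intersection over union (mIoU) across classes, and the sketch below assumes that metric. It works on flattened lists of per-voxel class labels; the function name `mean_iou` and the `ignore_label` parameter are illustrative, not taken from the paper or from any benchmark toolkit.

```python
def mean_iou(pred, target, num_classes, ignore_label=None):
    """Mean IoU over classes for flattened per-voxel class labels.

    pred, target: sequences of integer class ids of equal length.
    Classes that appear in neither pred nor target are skipped.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    intersection = [0] * num_classes
    union = [0] * num_classes

    for p, t in zip(pred, target):
        if t == ignore_label:
            continue  # unlabeled voxel, excluded from the metric
        if p == t:
            intersection[t] += 1
            union[t] += 1
        else:
            # A mismatch grows the union of both classes involved.
            union[p] += 1
            union[t] += 1

    ious = [
        intersection[c] / union[c]
        for c in range(num_classes)
        if union[c] > 0
    ]
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    pred = [0, 1, 1, 2]
    target = [0, 1, 2, 2]
    # Per-class IoU: class 0 = 1/1, class 1 = 1/2, class 2 = 1/2.
    print(mean_iou(pred, target, num_classes=3))
```

Restricting `pred` and `target` to the voxels of a scene subset (rain, night) or of a given distance band before calling the function gives the kind of per-subset comparison discussed in this section.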

3D semantic occupancy prediction results on the rainy scene subset of the nuScenes validation set. All methods are trained with dense occupancy labels. Modality notation: camera (C), lidar (L), radar (R). Fusing these modalities helps the model understand and predict rainy scenes better, which is an important reference for the development of autonomous driving systems.

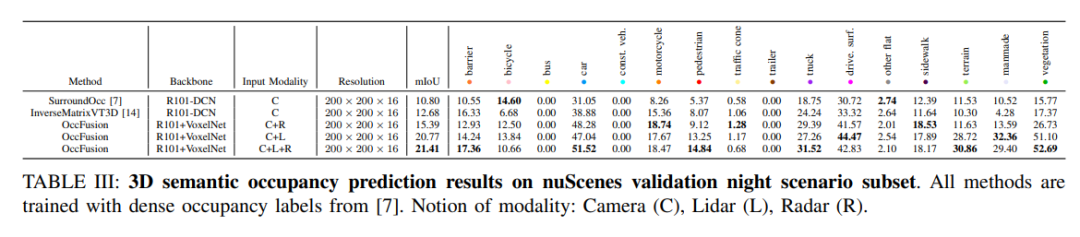

3D semantic occupancy prediction results on the nighttime scene subset of the nuScenes validation set. All methods are trained with dense occupancy labels. Modality notation: camera (C), lidar (L), radar (R).

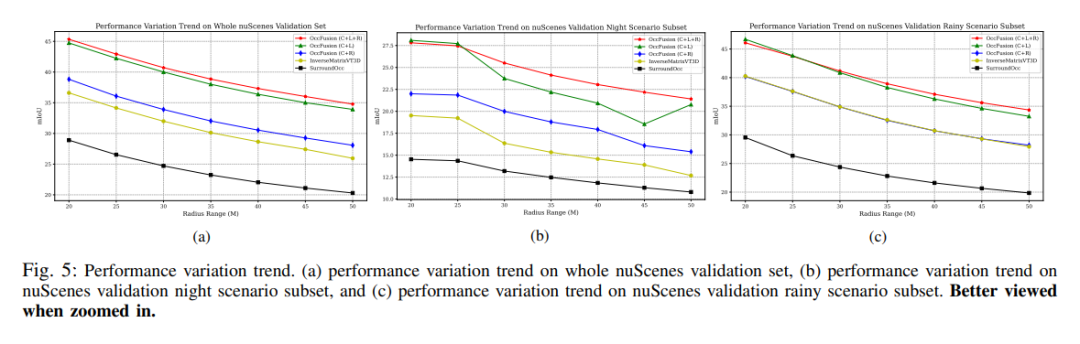

Performance trends on (a) the entire nuScenes validation set, (b) the night scene subset of the nuScenes validation set, and (c) the rainy scene subset of the nuScenes validation set.

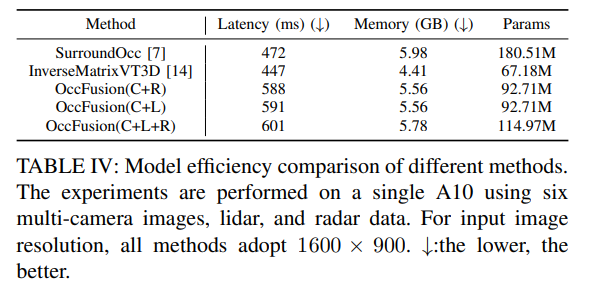

Table 4: Comparison of model efficiency across methods. Experiments were conducted on an A10 using six surround-view camera images together with lidar and radar data. The input image resolution is 1600×900 for all methods. ↓: lower is better.

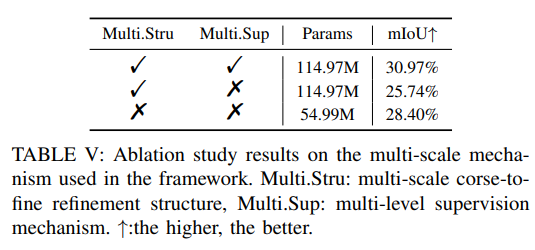

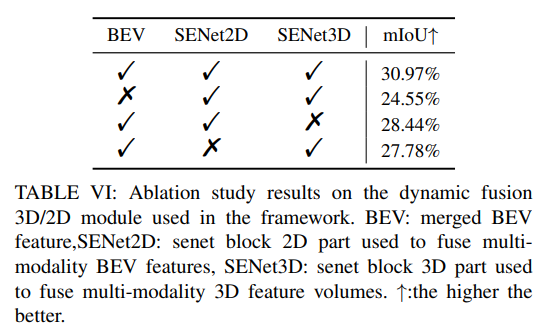

More ablation experiments:
