New OpenAI challenger is now free to use, approaching GPT-4 performance with 40% of the computing power

On Thursday, American AI startup Inflection AI officially released its new-generation large language model, Inflection-2.5.

According to the company, Inflection-2.5 combines powerful LLM technology with Inflection's distinctive "empathy fine-tuning," pairing high emotional intelligence with high IQ. It can retrieve factual information from the web, and its performance is comparable to leading large models such as GPT-4 and Gemini.

Inflection-2.5 is now available free of charge to all Pi users on PC and in the iOS and Android apps. In a brief hands-on test, Heart of the Machine found that it still falls somewhat short of GPT-4, but it is worth trying. Interested readers can try it for themselves.

Link: https://pi.ai/talk

Notably, Inflection-2.5 achieves performance close to GPT-4 while using only 40% of the compute that GPT-4 used for training.

Inflection AI says the new model makes significant progress in areas such as coding and mathematics. These advances translate into concrete improvements on key industry benchmarks, keeping Pi at the technological frontier. Pi also integrates world-class real-time web search, so users can get high-quality breaking news and up-to-date information.

Inflection-2.5 vs GPT-4

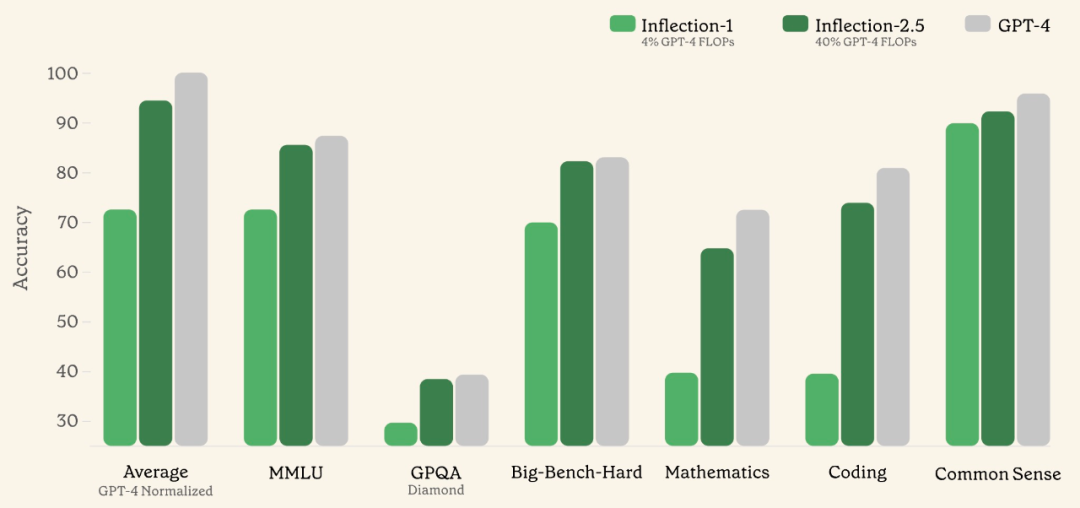

Inflection-1 was trained with about 4% of GPT-4's training FLOPs and, across a range of "IQ-oriented" tasks, averaged about 72% of GPT-4's performance. Inflection-2.5 now averages over 94% of GPT-4's performance while using only 40% of GPT-4's training FLOPs. As the figure below shows, Inflection-2.5 improves across the board, with the largest gains in STEM knowledge.
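A quick back-of-the-envelope comparison, using only the averages quoted above (all expressed relative to GPT-4), puts the generational jump in perspective. This is simple arithmetic on the vendor's reported figures, not an independent measurement, and it assumes nothing about how performance scales with compute:

$$
\frac{0.40\,\mathrm{FLOP}_{\text{GPT-4}}}{0.04\,\mathrm{FLOP}_{\text{GPT-4}}} = 10
\qquad\text{and}\qquad
\Delta_{\text{perf}} > 94\% - 72\% = 22\ \text{percentage points}
$$

In other words, roughly ten times the training compute of Inflection-1 bought an average gain of more than 22 percentage points relative to GPT-4.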

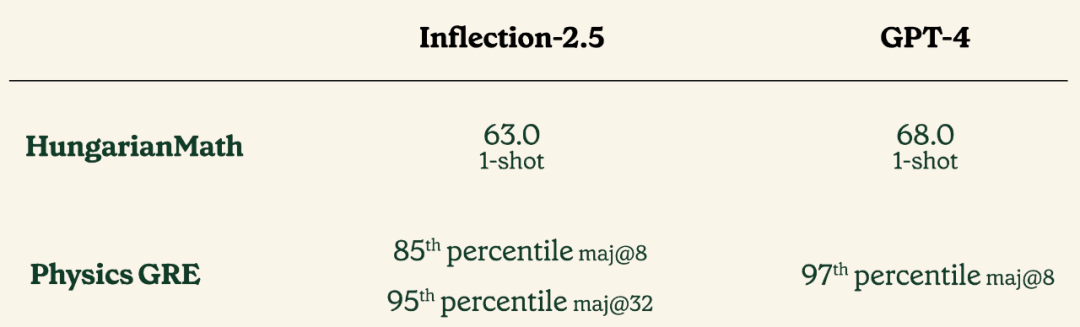

Inflection-2.5's results on two STEM exams, the Hungarian Mathematics Examination and the Physics Graduate Record Examination (GRE), are as follows:

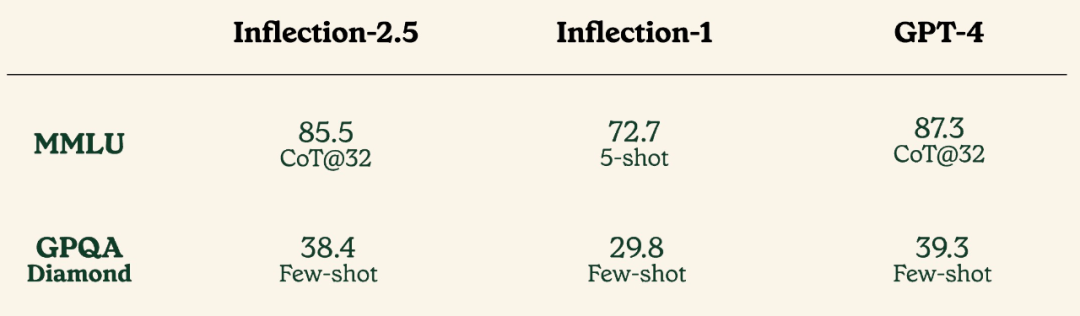

As the table below shows, the team also evaluated Inflection-2.5 on the MMLU and GPQA Diamond benchmarks. MMLU covers 57 subjects spanning STEM, the humanities, the social sciences, and more, making it a broad test of an LLM's knowledge, while GPQA Diamond is an extremely difficult expert-level benchmark.

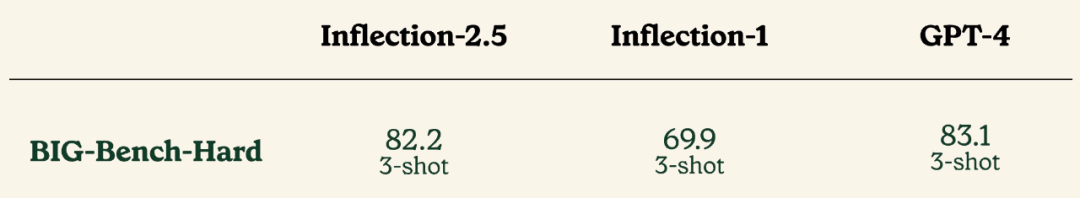

On BIG-Bench-Hard, Inflection-2.5 improves on Inflection-1 by more than 10% and is comparable to GPT-4. BIG-Bench-Hard consists mainly of problems that large language models find especially difficult.

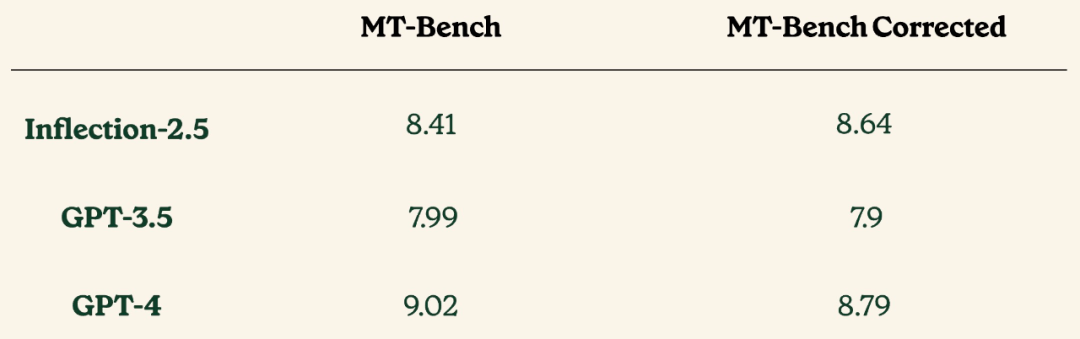

The team also evaluated the model on MT-Bench. However, they found that a large share (nearly 25%) of the examples in its Reasoning, Math, and Coding categories had incorrect reference solutions or flawed premises. They therefore corrected these examples and reran the evaluation; the results are shown in the following table:

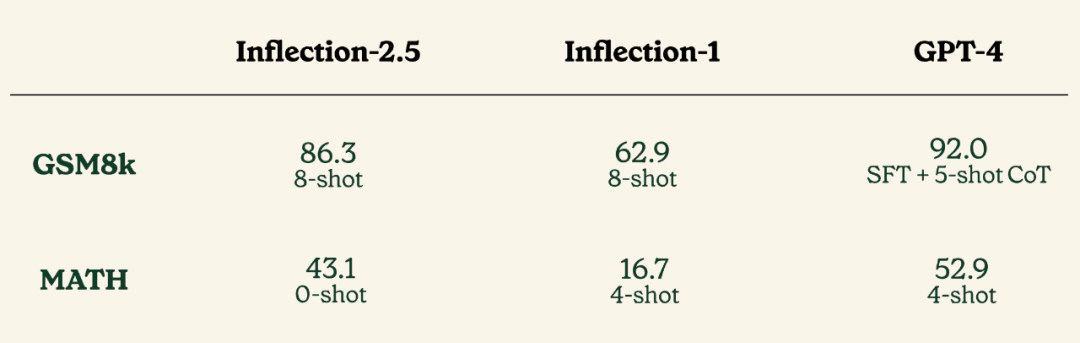

Evaluations on the GSM8K and MATH benchmarks show that Inflection-2.5 is a significant improvement over Inflection-1 in math and coding.

To further test Inflection-2.5's coding ability, the team evaluated it on two coding benchmarks, MBPP and HumanEval; the results are shown in the following table:

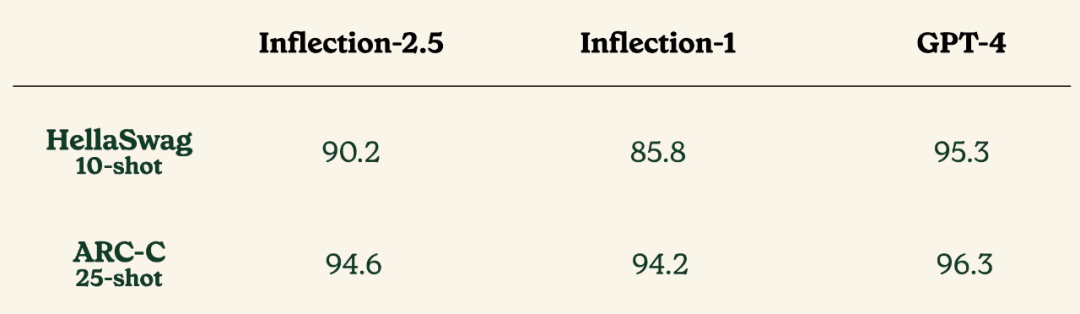

The team also evaluated Inflection-2.5, alongside various other models, on the common-sense and science benchmarks HellaSwag and ARC-C. The results show that Inflection-2.5 performs strongly on these benchmarks.

All of the above evaluations were run on the model that now powers Pi. Note, however, that the user experience may differ slightly because of web retrieval (the benchmarks above did not use it), the structure of few-shot prompts, and other production factors.

Overall, Inflection-2.5 retains Pi's "heart-centered" character and very high safety standards while becoming a more capable and useful model.

Competition among large language models has recently become fierce. Among the many companies involved, Mistral AI (Mistral Large) and Anthropic (Claude 3) stand out, with new models whose capabilities approach GPT-4 and Gemini Ultra. Inflection-2.5, released yesterday, appears to be joining this top tier.

A star Silicon Valley startup, Inflection AI has an impressive pedigree despite being founded only in 2022. Its three co-founders are Mustafa Suleyman, a co-founder of DeepMind; Reid Hoffman, a co-founder of LinkedIn; and Karen Simonyan, former chief scientist at DeepMind.

In June last year, Inflection AI announced a US$1.3 billion funding round led by Microsoft, Nvidia, Reid Hoffman, Bill Gates, and former Google CEO Eric Schmidt. Inflection AI is now the fourth-largest generative AI startup in the world.
