Another dark horse has emerged in the large-model race:

Inflection-2.5, built by the large-model startup of DeepMind co-founder Mustafa Suleyman.

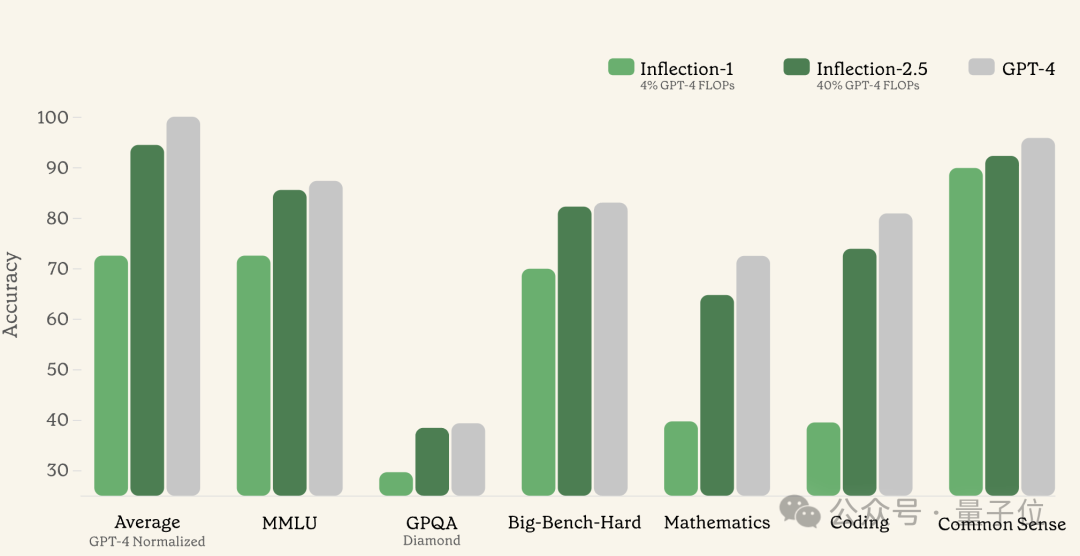

Trained with only 40% of the compute used for GPT-4, it reaches more than 90% of GPT-4's performance, and it is especially strong at coding and mathematics.

The earlier Inflection model used only 4% of that training compute and reached 72% of GPT-4's performance.

Built on the Inflection model, the company also launched Pi, a web-based chatbot that emphasizes "high emotional intelligence" and "personalization" and also supports Chinese.

Since launch, Pi has peaked at 1 million daily active users, exchanged a total of 4 billion messages, and reached an average conversation length of 33 minutes.

With this upgrade to the underlying model, Pi has also received a new version.

So are Inflection and Pi really that strong? QbitAI ran some hands-on tests.

Open Pi's page and you will see a minimalist interface; you can click Next right away without logging in.

After clicking Next several times, enter the name you want Pi to call you.

Next come the voice and recommended-topic selections; you can simply skip them to reach the main chat interface.

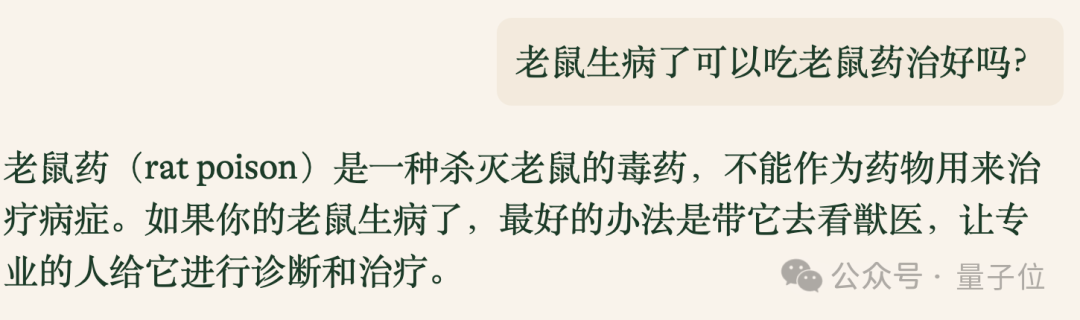

A quick test confirmed that Pi supports Chinese conversation, so we started with the "Ruozhiba" benchmark, a set of deliberately absurd trick questions.

The first question: can a sick mouse be cured by taking rat poison? Pi answered it correctly.

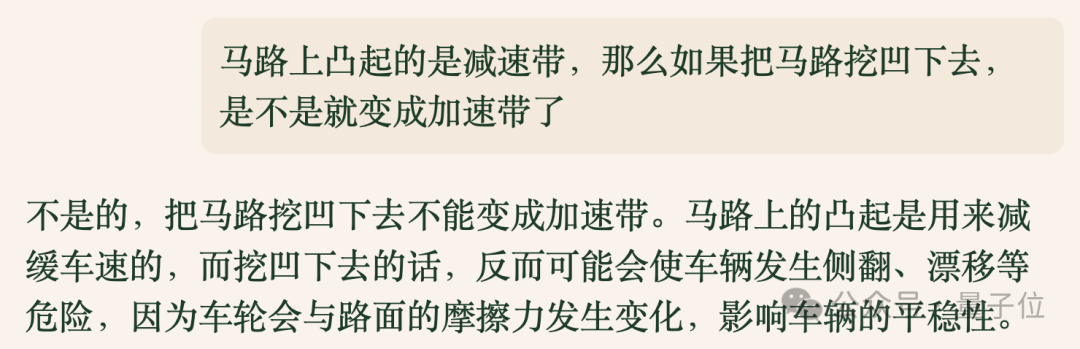

Next came another "trap" question, and Pi did not fall for it this time either.

The two questions produced nothing dramatic, but Pi does seem to have a reasonable grasp of Chinese.

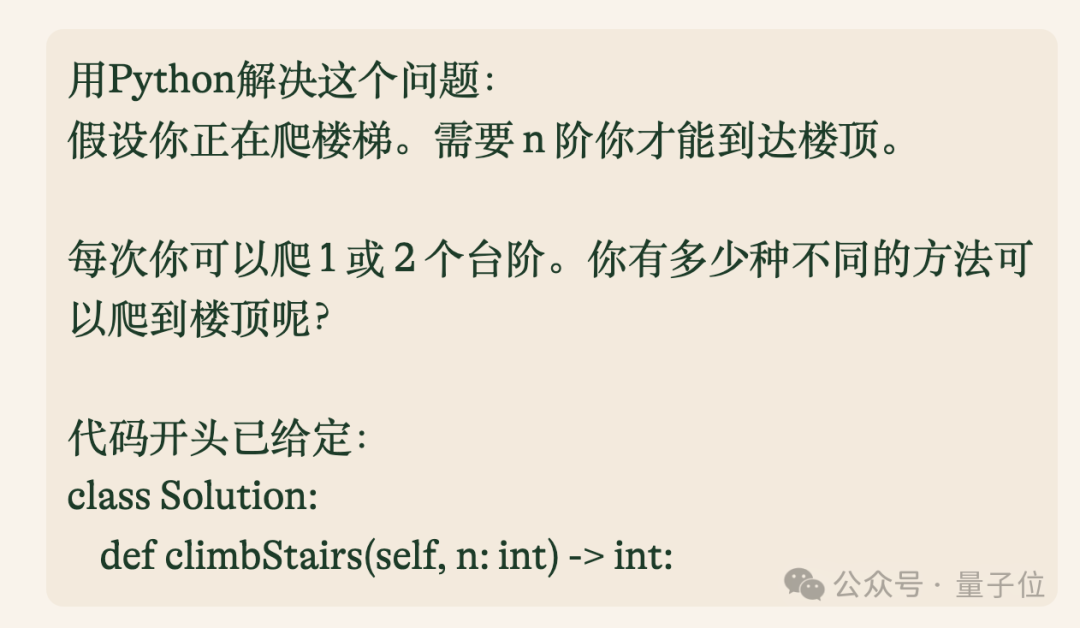

Next, let's focus on the math and coding abilities that the company says the model is "particularly good at".

The first is a coding question that calls for dynamic programming.

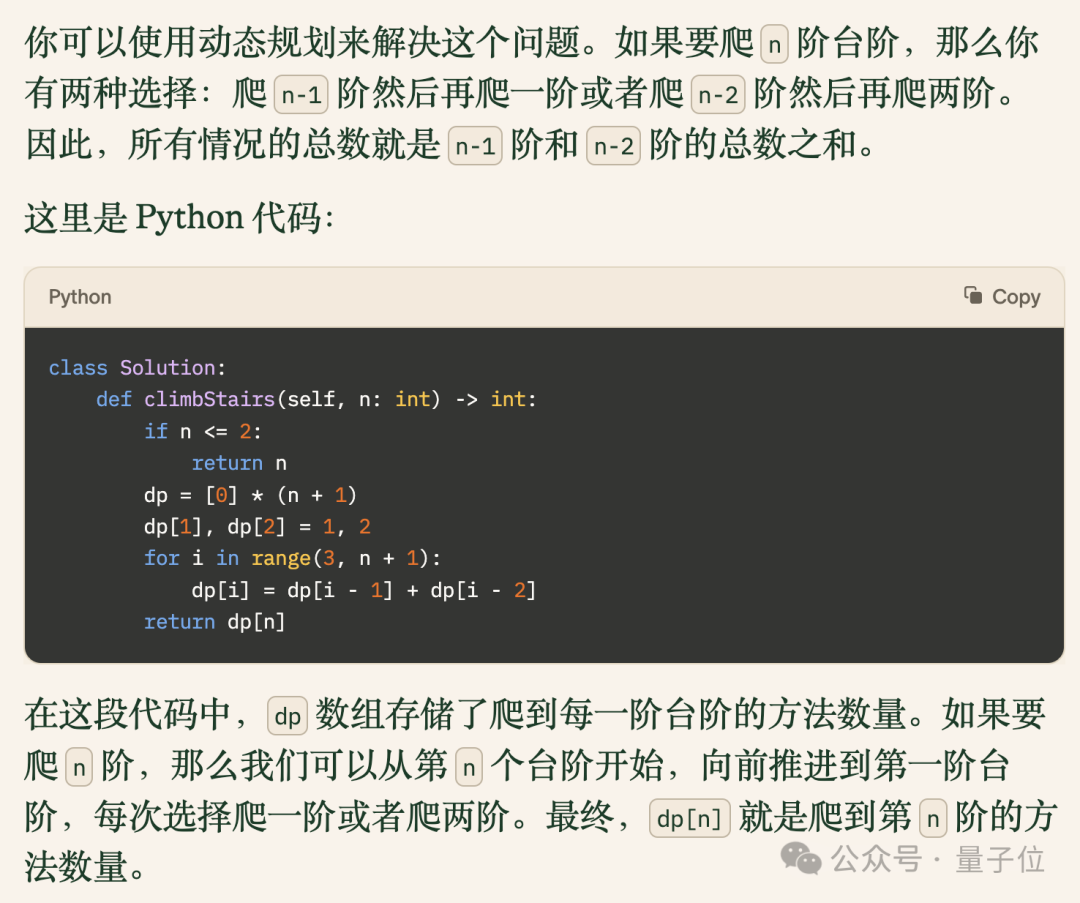

The code given by Pi successfully solves this problem and comes with a clear explanation.

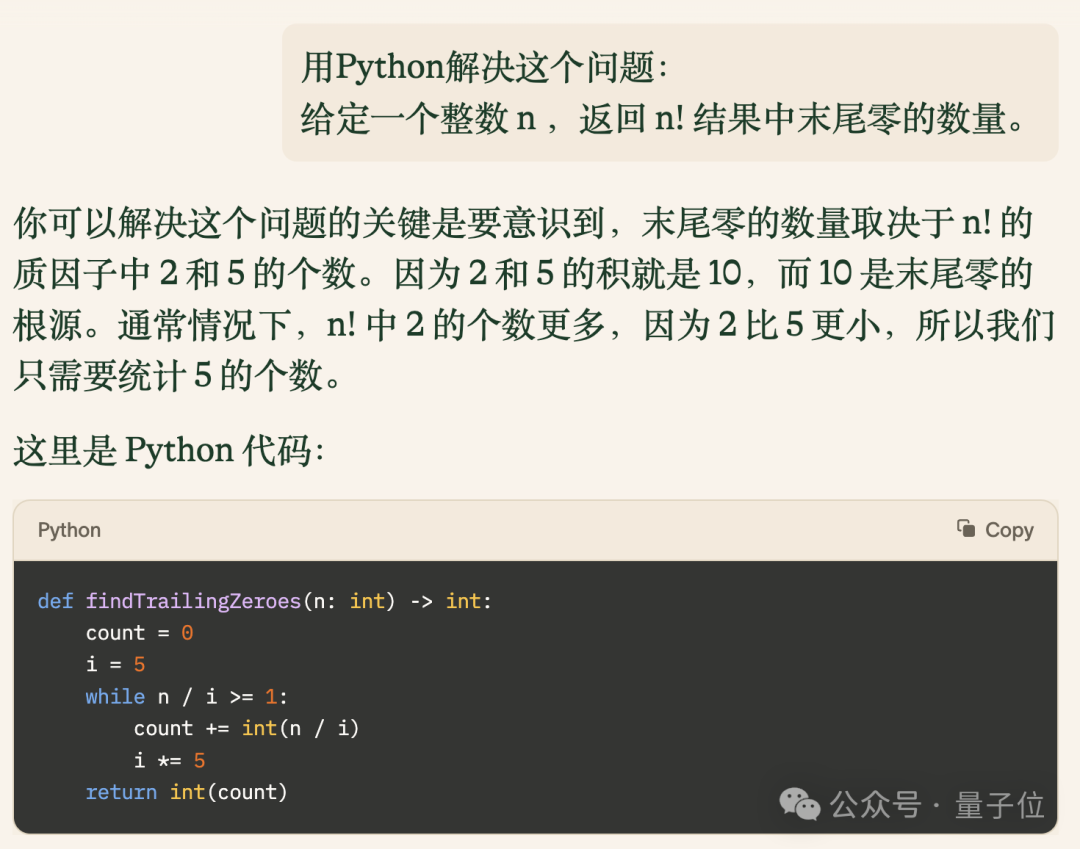

Next, we raised the difficulty and asked Pi to work out how many trailing zeros the factorial of a number has.

The code given by Pi is not only correct, but also concise and efficient, running faster than 73.8% of users on LeetCode.

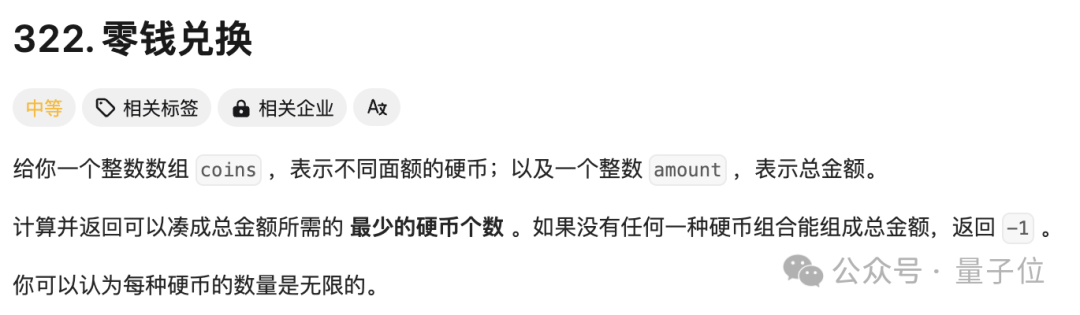
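
Pi's own answer appears only in the screenshot, so the snippet below is not a reproduction of it. As a minimal sketch of the standard approach to this kind of question: each trailing zero comes from a factor of 10 = 2 × 5, and factors of 2 are always more plentiful than factors of 5, so it is enough to count the factors of 5 in 1..n. The function name `trailing_zeroes` is our own choice for illustration.

```python
def trailing_zeroes(n: int) -> int:
    """Return the number of trailing zeros in n! (n >= 0).

    Counts the factors of 5 among 1..n: multiples of 5 contribute one,
    multiples of 25 contribute a second, multiples of 125 a third, etc.
    """
    count = 0
    while n >= 5:
        n //= 5        # how many multiples of the current power of 5 exist
        count += n
    return count


if __name__ == "__main__":
    for n in (3, 5, 10, 25, 100):
        print(n, trailing_zeroes(n))
```

For example, `trailing_zeroes(25)` gives 6: five multiples of 5, plus one extra factor from 25 itself. The loop runs in O(log n) time and never computes the factorial.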

Finally, we raised the difficulty once more and closed the coding part of the test with a question that has a 47.5% pass rate.

With coding covered, we tested Pi's mathematical ability with a question on derivatives:

Find the extreme points of the function f(x) = x³ + 2x² − 1

The answer is completely correct and very detailed.
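
Pi's step-by-step answer is not reproduced in the text, so here is a short worked version for reference. It assumes the intended function is f(x) = x³ + 2x² − 1, that is, with a plus sign between the first two terms; if the original question used a different sign, the numbers below change accordingly.

$$
\begin{aligned}
f(x) &= x^3 + 2x^2 - 1 \\
f'(x) &= 3x^2 + 4x = x(3x + 4) = 0 \;\Longrightarrow\; x = 0 \ \text{or}\ x = -\tfrac{4}{3} \\
f''(x) &= 6x + 4, \qquad f''(0) = 4 > 0, \qquad f''\!\left(-\tfrac{4}{3}\right) = -4 < 0
\end{aligned}
$$

Under that assumption, x = −4/3 is a local maximum with f(−4/3) = 5/27, and x = 0 is a local minimum with f(0) = −1.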

Of course, being good at mathematics also requires logical thinking, so in addition to the regular math question we used a classic puzzle to test Pi's reasoning, and the result was not bad.

Pi's performance shows that the Inflection-2.5 model behind it is indeed impressive.

According to the benchmark data the company itself has published, Inflection-2.5 trails GPT-4 closely both in overall capability and on individual tasks.

Taking mathematics and code as examples, Inflection-2.5 makes a significant leap over version 1.0 on tests such as MATH and HumanEval.

Beyond these conventional datasets, Inflection also took on the Hungarian college entrance examination in mathematics and the GRE physics test, and its results were almost level with GPT-4's.

Trickier still is BIG-Bench, a dataset built from problems that large models find hard to handle. Inflection-2.5 took on its Hard subset and finished less than one point behind GPT-4.

So, what kind of company is behind Inflection-2.5?

The company is called Inflection AI. It was founded in 2022 by DeepMind co-founder Mustafa Suleyman and others, and currently has more than 70 employees.

Also from DeepMind is senior researcher Karen Simonyan, who is now chief scientist at Inflection AI.

In addition, LinkedIn co-founder Reid Hoffman took part in founding Inflection AI.

Since its founding, Inflection AI has raised a total of US$1.5 billion from investors including NVIDIA, Microsoft, and Bill Gates.

For now, Pi remains free to use, but CEO Suleyman has said it is unrealistic to run on goodwill alone forever, and that in the long run there will be a charge.

Anyone who wants to try it may want to hurry~

Link: https://pi.ai
