MIT nuclear fusion milestone breaks world record! High-temperature superconducting magnets unlock stellar energy. Is an artificial sun about to be born?

Has the holy grail of clean energy been captured?

"Overnight, the MIT team cut the cost per watt of a fusion reactor to almost 1/40 of its previous level, making commercial use of nuclear fusion technology possible!"

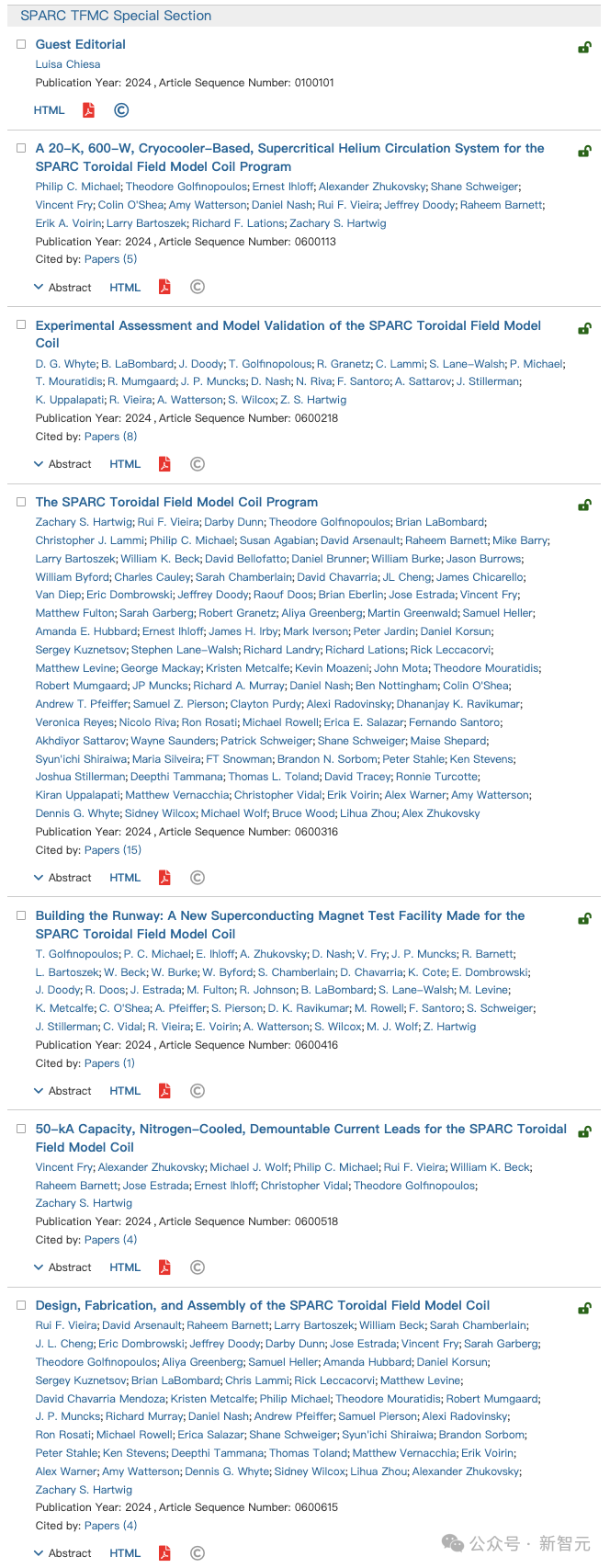

Recently, the MIT Plasma Science and Fusion Center (PSFC) and Commonwealth Fusion Systems (CFS) published a comprehensive report.

This report cites six independent research papers published in the March special issue of "IEEE Transactions on Applied Superconductivity", proving:

The "high-temperature superconducting magnets" and no-insulation design that MIT used in its 2021 experiment are feasible and reliable.

It also verified that the unique superconducting magnet used by the team in the experiment is sufficient as the basis for a nuclear fusion power plant.

This suggests that nuclear fusion could soon move from a laboratory research project to a commercial technology.

Paper address:

https://ieeexplore.ieee.org/xpl/tocresult.jsp?isnumber=10348035&punumber=77

And it all starts with the world-record nuclear fusion experiment at MIT in 2021.

"Superconducting Magnet" sets a world record for magnetic field strength

In the early morning of September 5, 2021, in the laboratories of the MIT Plasma Science and Fusion Center (PSFC), engineers achieved a major milestone:

A new type of magnet made of high-temperature superconducting material reached a magnetic field strength of 20 tesla, a world record for a magnet of this scale.

A field of 20 tesla is exactly the strength required to build a nuclear fusion power plant.

Scientists predict that such a plant could produce a net power output and potentially usher in an era of virtually unlimited power generation.

The experiment proved successful while meeting all the criteria set for the design of a new fusion device, known as SPARC, for which magnets are a key enabling technology.

The exhausted engineers opened champagne to celebrate an achievement that had taken long and arduous effort.

But the scientists' work did not stop there.

Over the next few months, the team disassembled and examined the magnet's components, poring over and analyzing data from hundreds of instruments that recorded the details of the tests.

They also conducted two other tests on the same magnet, ultimately testing it to its limits to learn the details of any possible failure modes.

The purpose is to further verify whether the superconducting magnet in their experiment can work stably in various extreme scenarios.

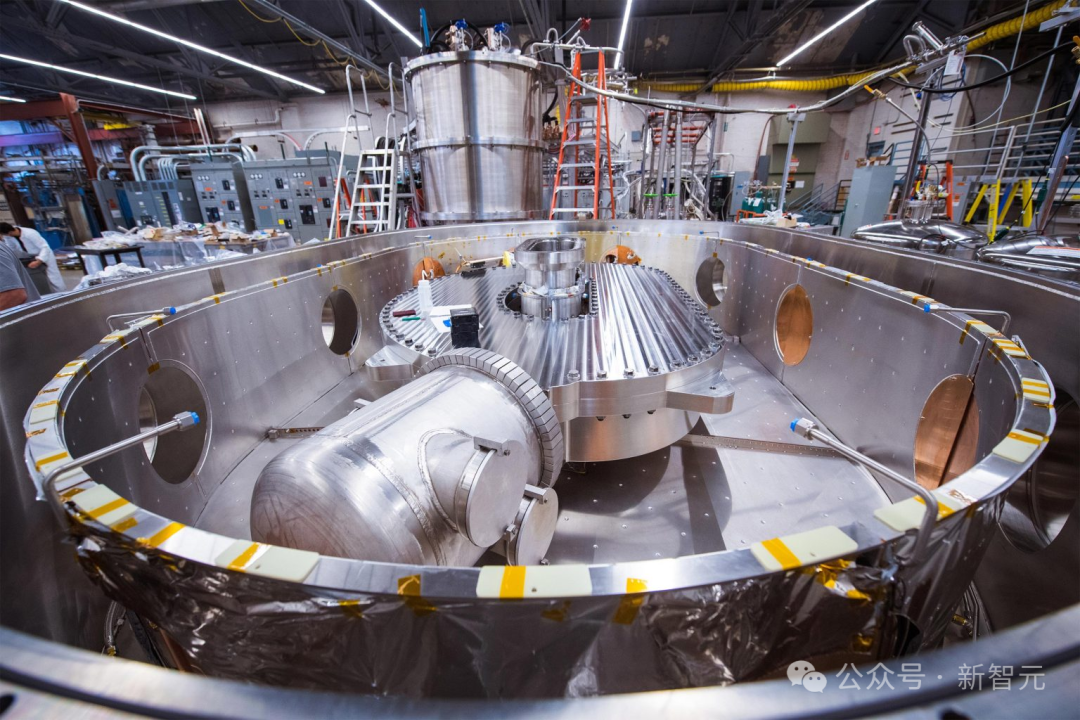

The team lowers the magnet into its cryostat container

Fusion power generation: cost reduced by a factor of nearly 40

Dennis Whyte, the Hitachi America Professor of Engineering, who recently stepped down as PSFC director, said: "In my opinion, the successful test of the magnet is the most important thing to happen in fusion research in the past 30 years."

Before this demonstration, existing superconducting magnets were already powerful enough to potentially achieve fusion energy.

Their only drawback was that, at the size and cost required, they would never be practical or economically feasible.

Subsequent tests by the researchers showed that such a powerful magnet remains practical even at a greatly reduced size.

"Overnight, the cost per watt of a fusion reactor changed by a factor of almost 40."

Now nuclear fusion has a chance. The tokamak is currently the most widely used design for experimental fusion devices.

"In my opinion, the tokamak has a chance of becoming affordable because, given the known physical constraints, we can drastically reduce the size and cost of the device required to achieve fusion. This is a qualitative leap."

Six papers detail comprehensive data from MIT magnet testing.

The analysis shows that the new generation of fusion devices designed by MIT and CFS, as well as similar designs from other commercial fusion companies, is scientifically feasible.

A fusion milestone and a superconductivity breakthrough

Nuclear fusion is the process of combining light atoms into heavier ones. It is what powers the sun and the stars.

But exploiting this process on Earth has proven to be a difficult challenge.

Over the past few decades, enormous effort and billions of dollars have gone into research on experimental devices.

The goal that has been pursued but never achieved is to build a fusion power plant that produces more energy than it consumes.

During operation, such a power plant can generate electricity without emitting greenhouse gases and will not produce large amounts of radioactive waste.

The fuel for nuclear fusion, a form of hydrogen that can be extracted from seawater, is virtually limitless.

However, for nuclear fusion to succeed, the fuel must be compressed at extremely high temperatures and pressures.

Since no known material can withstand such temperatures, extremely powerful magnetic fields must be used to confine the fuel.

Generating such a strong magnetic field requires a "superconducting magnet." However, all previous fusion magnets were made of superconducting materials that require a temperature of about 4 degrees above absolute zero (4 kelvins, or about -270 degrees Celsius).

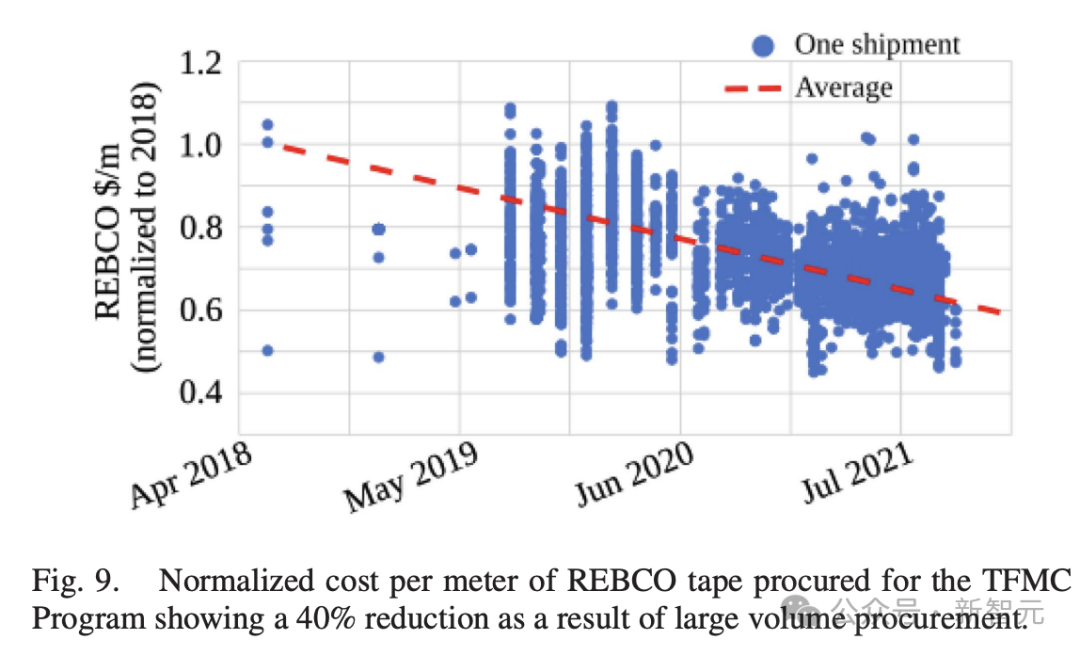

In recent years, a new material called REBCO (rare earth barium copper oxide) has begun to be used in nuclear fusion magnets.

It allows fusion magnets to operate at 20 kelvins. Although that is only 16 kelvins warmer than 4 kelvins, it brings significant advantages in material properties and practical engineering.
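As a quick check on the temperatures quoted here, the following minimal Python sketch converts kelvins to degrees Celsius using the standard offset of 273.15. The helper name `kelvin_to_celsius` is hypothetical and introduced only for this illustration; it is not taken from the report or the papers.

```python
# Illustrative helper (an assumption for this example, not from the article):
# convert a temperature in kelvins to degrees Celsius.
ZERO_CELSIUS_IN_KELVIN = 273.15  # 0 degrees Celsius expressed in kelvins


def kelvin_to_celsius(kelvin: float) -> float:
    """Return the temperature in degrees Celsius for a value in kelvins."""
    if kelvin < 0:
        raise ValueError("a temperature in kelvins cannot be negative")
    return kelvin - ZERO_CELSIUS_IN_KELVIN


# Operating temperatures mentioned in the article.
low_temp_magnet_k = 4.0   # earlier low-temperature superconducting magnets
rebco_magnet_k = 20.0     # REBCO-based magnets

for label, kelvin in [("earlier magnets", low_temp_magnet_k),
                      ("REBCO magnets", rebco_magnet_k)]:
    print(f"{label}: {kelvin:.0f} K = {kelvin_to_celsius(kelvin):.2f} °C")

# Gap between the two operating points, in kelvins.
print(f"difference: {rebco_magnet_k - low_temp_magnet_k:.0f} K")
```

Run as written, this shows that 4 kelvins is roughly -269 degrees Celsius (which the article rounds to -270) and that 20 kelvins is roughly -253 degrees Celsius, so both operating points are still extremely cold in everyday terms.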

Using the new high-temperature superconducting material meant reworking almost all the principles used to build superconducting magnets.

Building magnets from this material is not an incremental improvement on earlier designs; it requires innovation and development from scratch.

A new paper in IEEE Transactions on Applied Superconductivity describes the details of this redesign process, and patent protection is already in place.

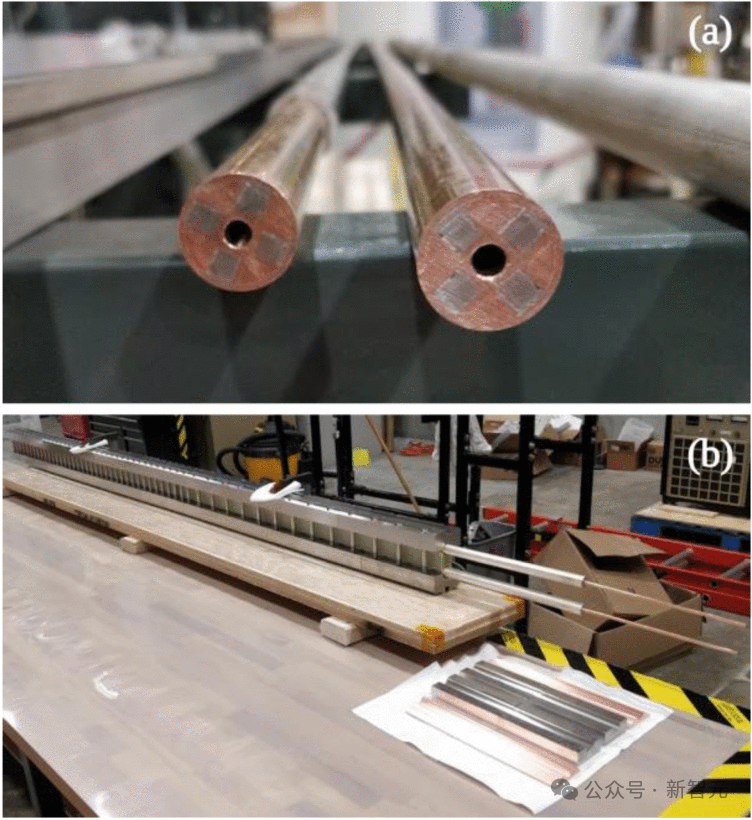

To make full use of REBCO, researchers designed an industrially scalable, high-current "VIPER REBCO" cable based on the TSTC (twisted stacked-tape cable) architecture.

The VIPER REBCO cable has these clear advantages:

- Stable critical-current degradation of less than 5%;

- Rugged demountable joints with resistance in the 2-5 nΩ range;

- For the first time, two different cable quench detection technologies demonstrated on full-size conductors under fusion-relevant conditions, suited to REBCO's low normal zone propagation velocities.

Key innovation: No insulation layer design

Another remarkable design choice in this magnet is the removal of the insulation that would normally surround the thin, flat superconducting tape.

In traditional designs, superconducting magnets are surrounded by insulating materials to protect them from short circuits.

In this new superconducting magnet, the superconducting tape is completely exposed.

The scientists rely on REBCO's much greater conductivity to keep the electrical current flowing through the material.

Zach Hartwig, a professor in MIT's Department of Nuclear Science and Engineering who is responsible for developing the superconducting magnets, said: "When we began this project in 2018, the technology for building large-scale high-field magnets from high-temperature superconductors was still at a very early stage, and only small experiments could be carried out."

"Our magnet research and development project started from that scale and reached a full-scale magnet in a very short period of time."

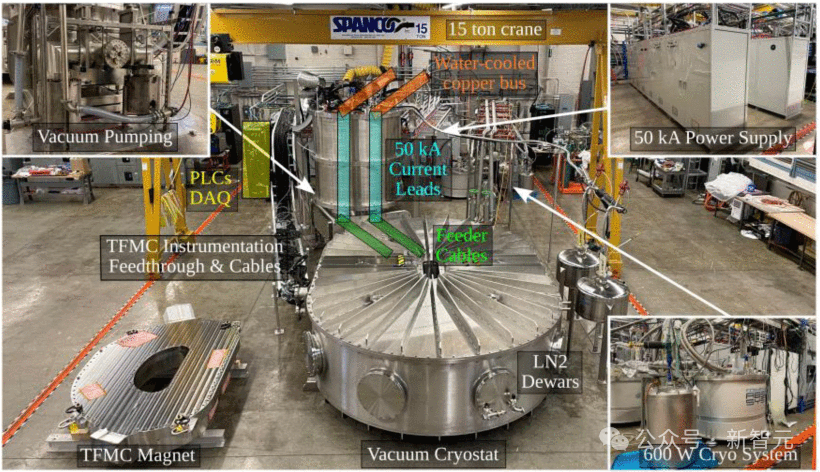

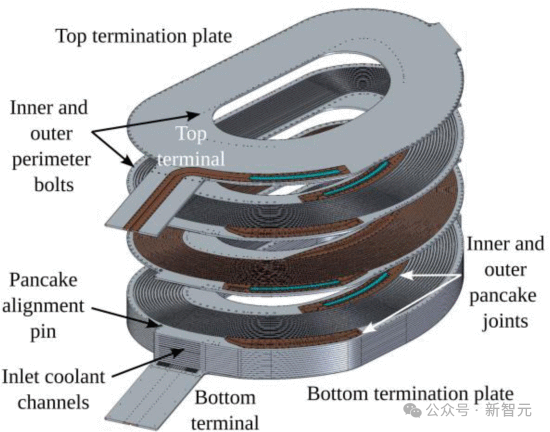

The team ultimately built a magnet weighing nearly 10 tons that produced a stable, uniform magnetic field of more than 20 tesla.

"The standard way to make these magnets is to wind the conductor and put an insulation layer between the windings. You need the insulation to handle the high voltages generated during unexpected events such as a shutdown."

"The advantage of removing this layer of insulation is that it is a low-voltage system. It greatly simplifies the manufacturing process and schedule."

It also leaves more room for cooling or for additional structural support.

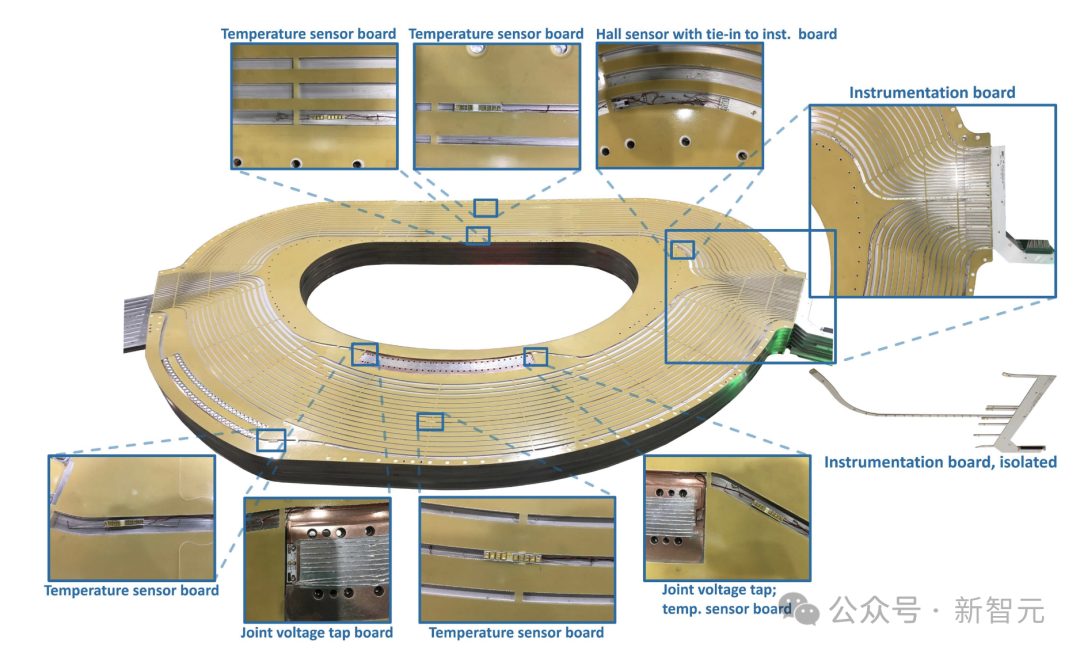

The magnet assembly is a slightly smaller version of the ones that will form the donut-shaped chamber of the SPARC fusion device that CFS is building.

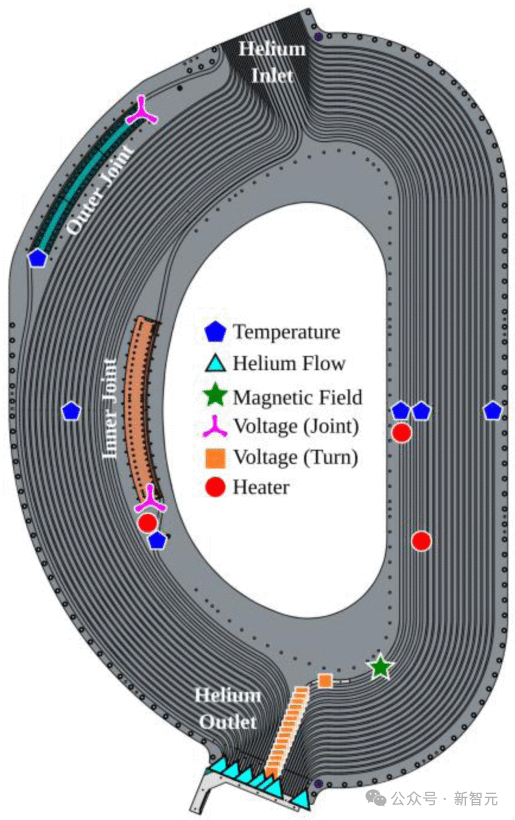

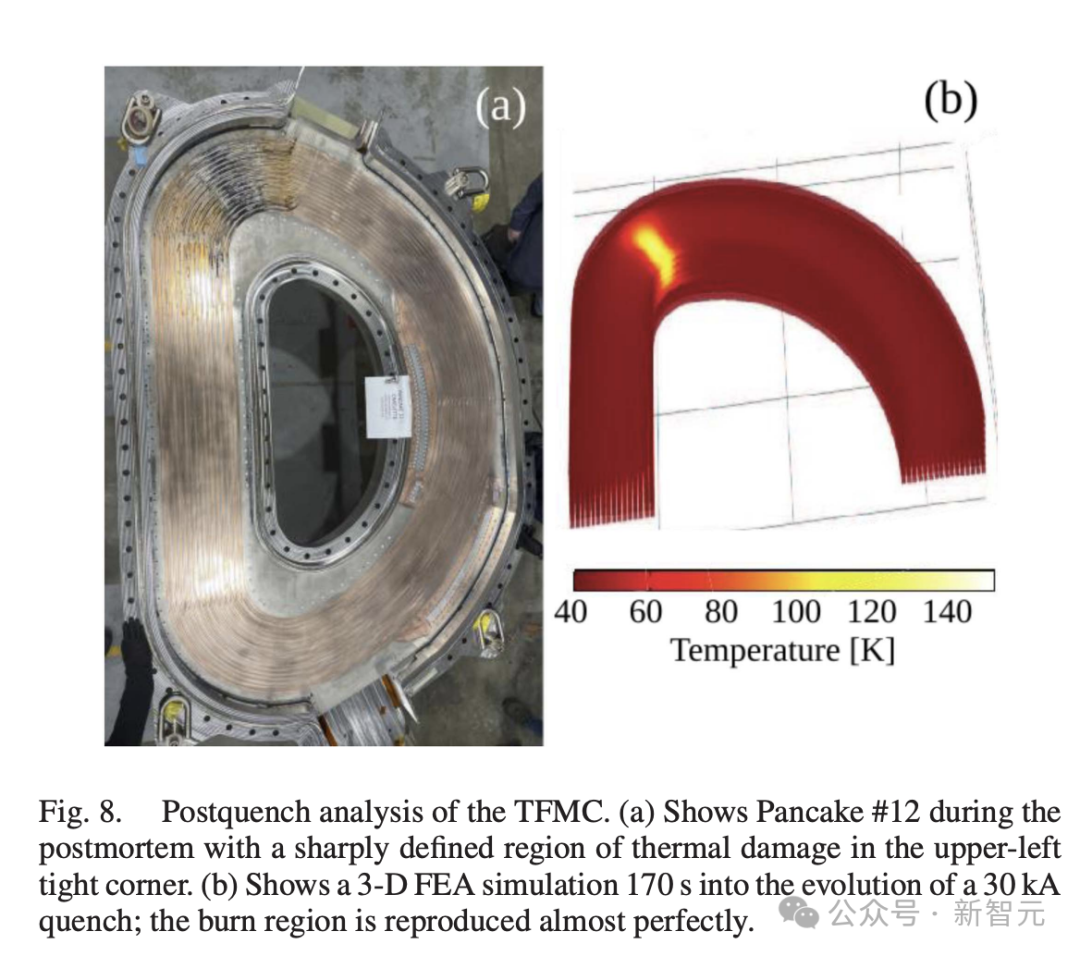

The magnet is composed of 16 plates called "pancakes". One side of each plate carries a spiral winding of superconducting tape, and the other side carries the helium cooling channels.

"However, most people regarded the no-insulation design as very risky, and even the testing stage carried great risk."

Professor Hartwig said: "This is the first magnet of sufficient scale to explore the issues involved in designing, manufacturing and testing magnets using this no-insulation, no-twist technology."

"The entire community was surprised when the team announced that this was a no-insulation coil."

Extreme testing is complete. Is large-scale commercial use imminent?

The first experiments described in a previous paper have demonstrated that such a design and manufacturing process is not only feasible but also very stable, although some researchers had expressed doubts.

The next two tests, also conducted at the end of 2021, pushed the equipment to its limits by deliberately creating unstable conditions, including completely turning off the input power, which can cause catastrophic overheating.

This event is called a "quench" and is considered the worst-case scenario in the operation of such magnets, because it can destroy the equipment outright.

Part of the test plan, Hartwig said, was "to actually go out and intentionally quench a full-size magnet so that we can get critical data at the right scale and under the right conditions to advance the science and validate the design codes."

"Then take the magnet apart and see what went wrong, why it went wrong, and how we can do the next iteration to fix it... The end result turned out to be a very successful experiment."

The final test ended with one corner of one of the 16 "pancakes" melting, but it produced a wealth of new information, Hartwig said.

First, the researchers had been using several different computational models to design the magnet and predict aspects of its performance. In most cases the models agreed in their overall predictions and were well validated by a series of tests and measurements.

However, the models' predictions diverged when it came to the quench, so experimental data were needed to evaluate their validity.

The models developed by the researchers predicted almost exactly how the magnet would heat up, how far its temperature would rise once the quench began, and where the resulting damage to the magnet would occur.

The experiments accurately captured the physics at play and let the scientists see which models will be useful in the future and which are inaccurate.

After testing every other aspect of the coil's performance, the scientists deliberately subjected the coil to the worst possible conditions.

They found that the damaged area accounted for only a few percent of the coil's volume.

Based on this result, they continued to modify the design, and they expect to be able to prevent damage on this scale in the magnets of an actual fusion device, even under the most extreme conditions.

Professor Hartwig emphasized that the team was able to complete such a record-breaking new magnet design so quickly, and to get it right the first time, mainly because of the deep knowledge, expertise and equipment accumulated over decades of work on the Alcator C-Mod tokamak, at the Francis Bitter Magnet Laboratory, and in other projects carried out by PSFC.

Going forward, the work will continue toward large-scale commercial use of clean electricity.

The above is the detailed content of MIT Genesis nuclear fusion breaks world record! High-temperature superconducting magnets unlock stellar energy, is artificial sun about to be born?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

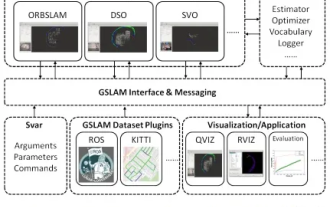

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

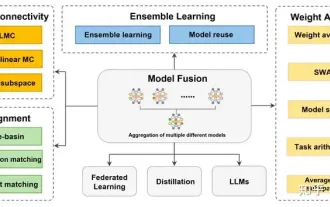

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion