How do diffusion models build a new generation of decision-making agents? Going beyond autoregression to generate long-horizon planning trajectories all at once


Imagine you are standing in a room and about to walk toward the door. Do you plan the path step by step, autoregressively? In practice, your path is generated as a whole, in one go.

Recent research points out that a planning module based on a diffusion model can generate a long trajectory plan all at once, which is closer to how humans make decisions. In addition, diffusion models can offer better solutions for existing decision-making algorithms in terms of policy representation and data synthesis.

A review paper written by a team from Shanghai Jiao Tong University, "Diffusion Models for Reinforcement Learning: A Survey", surveys the applications of diffusion models in fields related to reinforcement learning. The review points out that existing reinforcement learning algorithms face challenges such as error accumulation in long-sequence planning, limited policy expressiveness, and insufficient interaction data, while diffusion models have demonstrated advantages in solving reinforcement learning problems and bring new ideas for addressing these long-standing challenges. Paper link: https://arxiv.org/abs/2311.01223

The review classifies the roles of diffusion models in reinforcement learning and summarizes successful cases of diffusion models in different reinforcement learning scenarios. Finally, it looks ahead to future directions for using diffusion models to solve reinforcement learning problems.

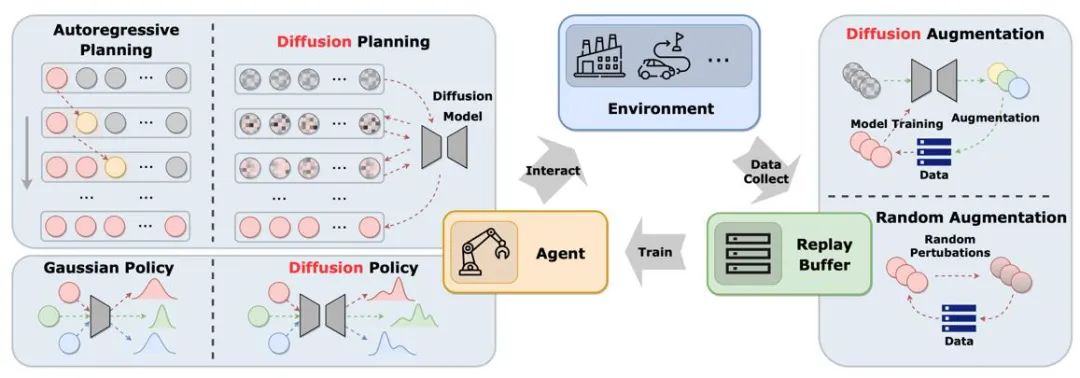

Figure 1 shows the role of diffusion models in the classic agent-environment-experience replay buffer loop. Compared with traditional approaches, diffusion models add new components to this loop and provide richer opportunities for information exchange and learning, which helps the agent adapt to changes in the environment and optimize its decisions.

The article classifies and compares the application methods and characteristics of diffusion models based on the different roles they play in reinforcement learning.

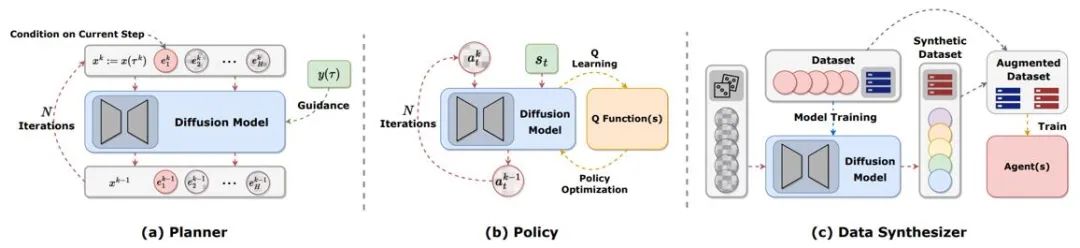

Figure 2: The different roles that diffusion models play in reinforcement learning.

Trajectory planning

Planning in reinforcement learning refers to making decisions in imagination using a dynamics model and then choosing appropriate actions to maximize cumulative reward. Planning typically explores sequences of actions and states to improve the long-term effectiveness of decisions. In model-based reinforcement learning (MBRL) frameworks, planning sequences are often simulated autoregressively, which leads to accumulated errors. Diffusion models can instead generate multi-step planning sequences simultaneously. Existing work generates a wide variety of targets with diffusion models, including (s,a,r), (s,a), s only, and a only. To generate high-reward trajectories during online evaluation, many works use classifier-guided or classifier-free guided sampling.
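To make the idea of denoising a whole trajectory under reward guidance concrete, here is a minimal toy sketch. It is not taken from the survey or from any specific planner. The denoiser `toy_eps_model` is a hypothetical stand-in (exact only if clean trajectories were standard normal), the reward `J` simply pulls the final state toward a goal, and all names and hyperparameters are assumptions for illustration.

```python
import math
import random


def linear_betas(num_steps, beta_min=1e-4, beta_max=0.05):
    """Linearly spaced noise variances, as in a basic DDPM-style schedule."""
    step = (beta_max - beta_min) / (num_steps - 1)
    return [beta_min + i * step for i in range(num_steps)]


def toy_eps_model(traj, alpha_bar):
    """Hypothetical stand-in for a trained denoiser.

    If clean trajectories were standard normal, the exact noise
    prediction would be sqrt(1 - alpha_bar) * x, which is used here.
    """
    c = math.sqrt(1.0 - alpha_bar)
    return [[c * v for v in state] for state in traj]


def reward_grad(traj, goal):
    """Gradient of J(traj) = -||traj[-1] - goal||^2 with respect to traj."""
    grad = [[0.0] * len(state) for state in traj]
    grad[-1] = [-2.0 * (v - g) for v, g in zip(traj[-1], goal)]
    return grad


def guided_sample(horizon, dim, goal, scale, num_steps=50, seed=0):
    """Denoise all `horizon` states together, nudging each step's mean by the reward gradient."""
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    # Start from pure noise over the WHOLE trajectory, not one state at a time.
    traj = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(horizon)]
    for t in reversed(range(num_steps)):
        beta, a_bar = betas[t], alpha_bars[t]
        eps = toy_eps_model(traj, a_bar)
        coef = beta / math.sqrt(1.0 - a_bar)
        # Standard DDPM posterior mean computed from the predicted noise.
        mean = [[(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(s, es)]
                for s, es in zip(traj, eps)]
        grad = reward_grad(mean, goal)
        sigma = math.sqrt(beta) if t > 0 else 0.0
        # Guidance: shift the mean by scale * variance * grad J, then add noise.
        traj = [[m + scale * beta * g + sigma * rng.gauss(0.0, 1.0)
                 for m, g in zip(ms, gs)]
                for ms, gs in zip(mean, grad)]
    return traj
```

Calling `guided_sample(horizon=8, dim=2, goal=[3.0, 3.0], scale=5.0)` returns eight 2-D states produced jointly; with `scale=0.0` the same loop reduces to unguided sampling. A real planner would replace `toy_eps_model` with a trained network and `reward_grad` with the gradient of a learned return predictor.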

Policy representation

Diffusion planners are closer to MBRL in traditional reinforcement learning; in contrast, using diffusion models as policies is closer to model-free reinforcement learning. Diffusion-QL was the first to combine a diffusion policy with the Q-learning framework. Because diffusion models fit multimodal distributions far better than traditional models, diffusion policies perform well on multimodal data sets sampled by multiple behavior policies. Like an ordinary policy, a diffusion policy usually generates actions conditioned on the state while also seeking to maximize the Q(s,a) function. Methods such as Diffusion-QL add a weighted value-function term when training the diffusion model, while CEP constructs a weighted regression target from an energy perspective, using the value function as a factor to adjust the action distribution learned by the diffusion model.
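The "weighted value-function term" can be written out as a worked objective. The form below is one common way to express a Diffusion-QL-style loss; the symbols are assumptions introduced here for illustration and do not appear in the article: $\epsilon_\theta$ is the noise-prediction network, $\bar\alpha_i$ the cumulative noise schedule at diffusion step $i$, $Q_\phi$ a learned critic, $\eta$ the weighting coefficient, and $a^0$ an action obtained by running the reverse diffusion chain from the policy.

$$
\mathcal{L}(\theta) =
\underbrace{\mathbb{E}_{i,\,\epsilon,\,(s,a)\sim\mathcal{D}}
\left[\left\lVert \epsilon - \epsilon_\theta\!\left(\sqrt{\bar\alpha_i}\,a + \sqrt{1-\bar\alpha_i}\,\epsilon,\; s,\; i\right) \right\rVert^2\right]}_{\text{diffusion (behavior-cloning) term}}
\;-\;
\eta\,\underbrace{\mathbb{E}_{s\sim\mathcal{D},\; a^0\sim\pi_\theta(\cdot\mid s)}
\left[Q_\phi(s, a^0)\right]}_{\text{value term}}
$$

The first term keeps generated actions close to the data distribution, and the second pushes them toward actions the critic rates highly; $\eta$ trades the two off.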

Data synthesis

Diffusion models can be used as data synthesizers to alleviate data scarcity in offline or online reinforcement learning. Traditional data augmentation methods for reinforcement learning can usually only perturb the original data slightly, whereas the strong distribution-fitting ability of diffusion models lets them learn the distribution of the entire data set directly and then sample new high-quality data.
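The contrast between perturbing existing samples and sampling from a learned distribution can be sketched as follows. This is only an illustration of the data flow: `GaussianGenerator` is a deliberately simple stand-in (one independent Gaussian per feature) for a trained diffusion model, and the class, function names, and `synthetic_ratio` parameter are hypothetical.

```python
import random
import statistics


class GaussianGenerator:
    """Stand-in for a diffusion model: one independent Gaussian per feature."""

    def fit(self, rows):
        cols = list(zip(*rows))
        self.means = [statistics.fmean(c) for c in cols]
        self.stds = [statistics.pstdev(c) for c in cols]
        return self

    def sample(self, n, rng):
        # Draw brand-new rows from the fitted distribution.
        return [tuple(rng.gauss(m, s) for m, s in zip(self.means, self.stds))
                for _ in range(n)]


def jitter(rows, noise, rng):
    """Traditional augmentation: small perturbations around existing rows."""
    return [tuple(v + rng.gauss(0.0, noise) for v in row) for row in rows]


def upsample_buffer(real_rows, generator, synthetic_ratio, rng):
    """Return the real rows followed by generated rows.

    synthetic_ratio is the number of synthetic rows per real row.
    """
    n_syn = int(len(real_rows) * synthetic_ratio)
    return list(real_rows) + generator.sample(n_syn, rng)
```

In this sketch each row stands for a flattened transition; `jitter` can only stay near rows that already exist, while `upsample_buffer` mixes in rows drawn from the fitted distribution. With a real diffusion model in place of the Gaussian stand-in, the generated rows could capture correlations between state, action, and reward that this toy ignores.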

Other types

In addition to the above categories, some scattered works use diffusion models in other ways. For example, DVF estimates a value function using a diffusion model. LDCQ first encodes trajectories into a latent space and then applies a diffusion model in that latent space. PolyGRAD uses a diffusion model to learn the environment's dynamics (state transitions), allowing the policy and the model to interact in order to improve policy learning efficiency.

Applications in different reinforcement learning related problems

Offline reinforcement learning

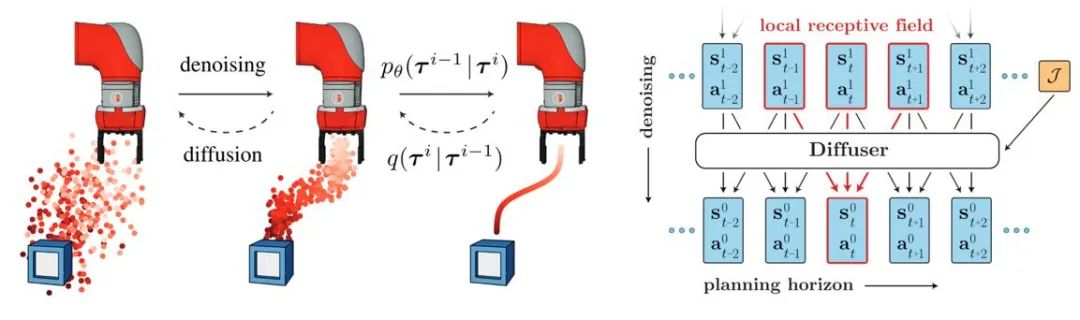

Introducing diffusion models helps offline reinforcement learning policies fit multimodal data distributions and expands the representational capacity of the policy. Diffuser first proposed a high-reward trajectory generation algorithm based on classifier guidance and inspired much follow-up work. Diffusion models can also be applied in multi-task and multi-agent reinforcement learning.

Figure 3: Diffuser trajectory generation process and model diagram

Online reinforcement learning

Researchers have shown that diffusion models can also optimize value functions and policies in online reinforcement learning. For example, DIPO relabels action data and trains a diffusion model on it, avoiding the instability of value-guided training; CPQL verifies that a single-step-sampling diffusion model used as the policy can balance exploration and exploitation during interaction.

Imitation learning

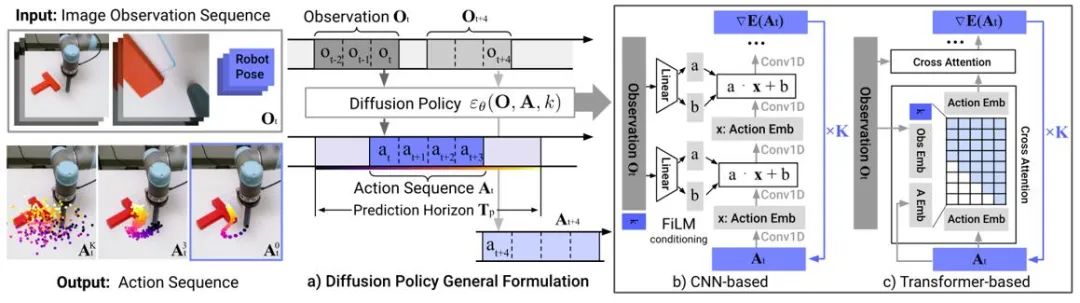

Imitation learning reconstructs expert behavior by learning from expert demonstration data. Diffusion models help improve policy representation capabilities and learn diverse task skills. In robot control, Diffusion Policy uses an image-conditioned diffusion model to generate robot action sequences, and experiments show that diffusion models can predict effective closed-loop action sequences while maintaining temporal consistency.

Figure 4: Diffusion Policy model diagram

Trajectory generation

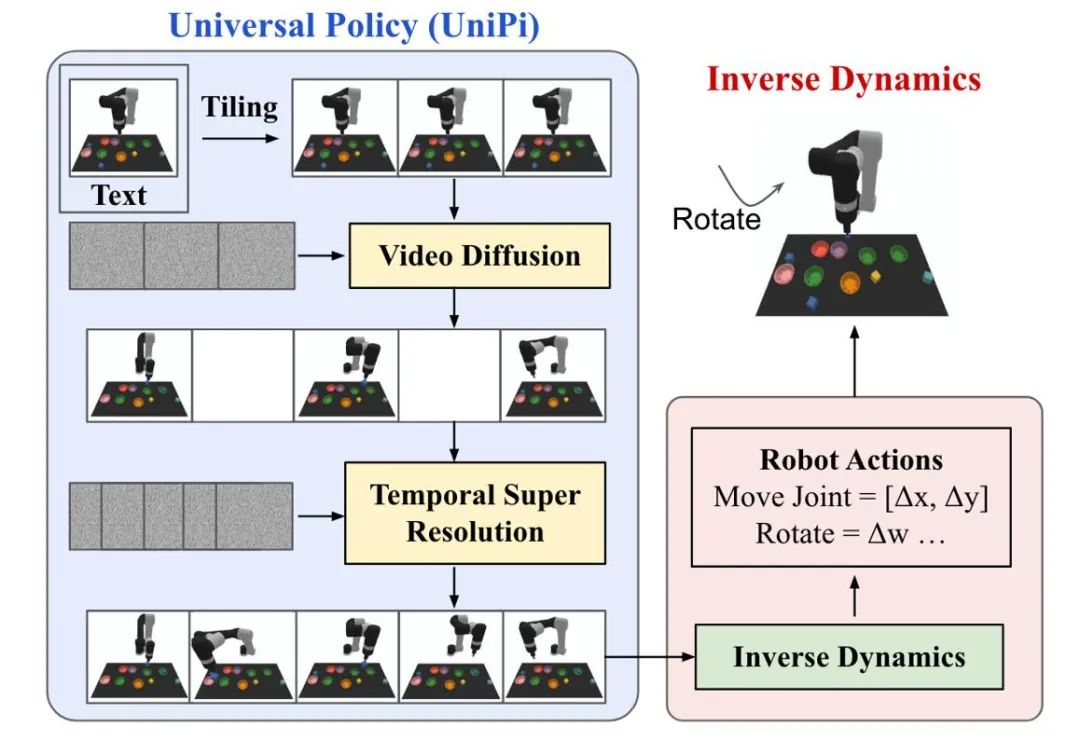

Trajectory generation with diffusion models in reinforcement learning mainly focuses on two types of tasks: human motion generation and robot control. The action or video data generated by diffusion models are used to build simulators or to train downstream decision-making models. UniPi trains a video-generation diffusion model as a general policy and achieves cross-embodiment robot control by plugging in different inverse dynamics models to obtain low-level control commands.

Figure 5: Schematic diagram of UniPi’s decision-making process.

Data augmentation

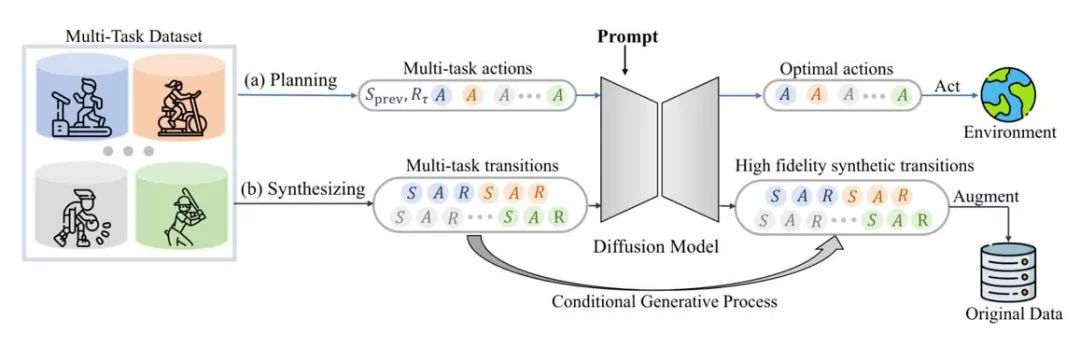

Diffusion models can also fit the original data distribution directly and provide diverse augmented data while remaining faithful to the original data. For example, SynthER and MTDiff-s use a diffusion model to generate complete environment transitions for the training tasks and apply them to policy improvement; the results show that the diversity and accuracy of the generated data are better than those of previous methods.

Figure 6: Schematic diagram of MTDiff for multi-task planning and data augmentation

Future Outlook

Generative simulation environment

As shown in Figure 1, existing research mainly uses diffusion models to overcome the limitations of the agent and the experience replay buffer; relatively few studies use diffusion models to enhance simulation environments. Gen2Sim uses a text-to-image diffusion model to generate diverse manipulable objects in simulation, improving the generalization of fine-grained robotic manipulation. Diffusion models also have the potential to generate state transition functions, reward functions, or opponent behavior in multi-agent interactions within a simulation environment.

Adding safety constraints

By using safety constraints as sampling conditions, agents based on diffusion models can make decisions that satisfy specific constraints. Guided sampling also allows new safety constraints to be added continually by learning additional classifiers while the parameters of the original model remain unchanged, which saves additional training overhead.
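The reason new constraints need no retraining is that guidance only shifts the mean of each denoising step. A minimal sketch of that composition follows. The function names are hypothetical, and the hand-written gradient functions stand in for gradients of learned classifiers, which is an assumption made to keep the example self-contained.

```python
def upper_bound_grad(limit):
    """Gradient of log p(x) = -sum(max(0, x_i - limit)^2): soft 'stay below limit'."""
    def grad(x):
        return [-2.0 * max(0.0, v - limit) for v in x]
    return grad


def lower_bound_grad(limit):
    """Gradient of log p(x) = -sum(max(0, limit - x_i)^2): soft 'stay above limit'."""
    def grad(x):
        return [2.0 * max(0.0, limit - v) for v in x]
    return grad


def guided_mean(base_mean, variance, guides):
    """Shift the base model's denoising mean by the weighted sum of guide gradients.

    guides is a list of (weight, grad_fn) pairs. The base model that produced
    base_mean is never modified; adding a constraint only appends to this list.
    """
    total = [0.0] * len(base_mean)
    for weight, grad_fn in guides:
        g = grad_fn(base_mean)
        total = [t + weight * gi for t, gi in zip(total, g)]
    return [m + variance * t for m, t in zip(base_mean, total)]
```

For example, starting with `guides = [(1.0, upper_bound_grad(1.0))]` and later appending `(1.0, lower_bound_grad(-1.0))` adds a second constraint at sampling time while whatever produced `base_mean` stays untouched.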

Retrieval-augmented generation

Retrieval-augmented generation enhances model capabilities by accessing external data sets and has been widely used in large language models. Retrieving trajectories related to the agent's current state and feeding them into the model may likewise improve the performance of diffusion-based decision models in those states. If the retrieval data set is constantly updated, the agent may exhibit new behaviors without being retrained.
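The retrieval step itself can be as simple as a nearest-neighbor lookup keyed on the current state. The sketch below assumes each stored trajectory is a list of state tuples and that similarity is Euclidean distance to the trajectory's first state; both choices, and the function name `retrieve`, are illustrative assumptions rather than a method described in the survey.

```python
import math


def retrieve(dataset, state, k):
    """Return the k stored trajectories whose first state is closest to `state`."""
    return sorted(dataset, key=lambda traj: math.dist(traj[0], state))[:k]


# A tiny trajectory store; each trajectory is a list of 2-D states.
dataset = [
    [(0.0, 0.0), (1.0, 0.0)],
    [(5.0, 5.0), (6.0, 5.0)],
    [(0.5, 0.0), (1.0, 1.0)],
]
current_state = (0.4, 0.0)
context = retrieve(dataset, current_state, k=1)

# Appending a new trajectory changes what is retrieved, with no retraining.
dataset.append([(0.4, 0.0), (0.4, 1.0)])
updated_context = retrieve(dataset, current_state, k=1)
```

The retrieved trajectories would then be passed to the diffusion model as extra conditioning; how that conditioning is encoded is left open here.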

Combining multiple skills

With classifier guidance or classifier-free guidance, diffusion models can combine a variety of simple skills to complete complex tasks. Early results in offline reinforcement learning also indicate that diffusion models can share knowledge between different skills, making it possible to achieve zero-shot transfer or continual learning by combining different skills.
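One widely used way to combine skills under classifier-free guidance is to add each skill's conditional correction to the unconditional noise prediction. The sketch below shows only that arithmetic; the function name and the plain-list representation of noise predictions are assumptions for illustration, and in practice the inputs would come from a single trained network evaluated with different conditions.

```python
def compose_noise(eps_uncond, eps_per_skill, weights):
    """Combine noise predictions: eps_uncond + sum_i w_i * (eps_skill_i - eps_uncond)."""
    out = list(eps_uncond)
    for eps_c, w in zip(eps_per_skill, weights):
        # Add this skill's correction relative to the unconditional prediction.
        out = [o + w * (c - u) for o, c, u in zip(out, eps_c, eps_uncond)]
    return out
```

With all weights set to zero the result is the unconditional prediction, and raising one weight emphasizes the corresponding skill. The combined prediction is then used in the usual denoising update.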

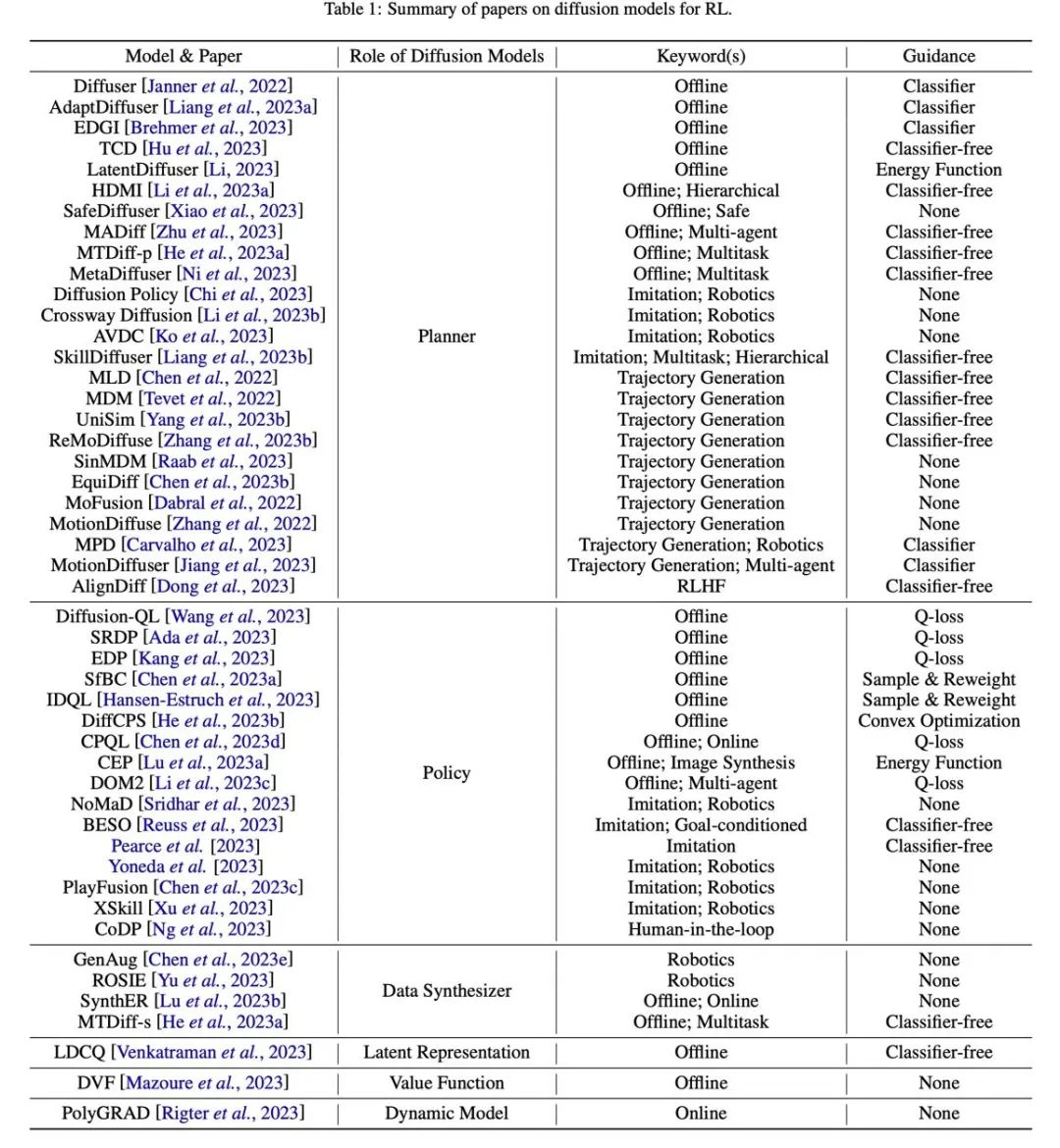

Figure 7: Summary and classification table of related papers.
