Discussion on the underlying implementation of the Go language: an in-depth understanding of how Go operates

Go is an efficient, concise programming language that has long been popular with programmers. Its concurrency model, garbage collector, and compact syntax help developers write code efficiently. Understanding how Go is implemented under the hood is key to understanding how the language behaves at run time. This article explores two parts of that implementation, goroutines and garbage collection, through concrete code examples.

Concurrency model of Go language

The concurrency model is one of Go's most distinctive features. By combining goroutines and channels, it makes concurrent programming simple and efficient. The following simple example illustrates how goroutines work:

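    package main

    import (
        "fmt"
        "time"
    )

    func main() {
        // Start a child goroutine that runs an anonymous function.
        go func() {
            fmt.Println("Hello, goroutine!")
        }()

        // Give the child goroutine time to run before main returns.
        time.Sleep(time.Second)
        fmt.Println("Main goroutine exits.")
    }

In the above code, we start a goroutine to execute an anonymous function, and then print a line of text from the main goroutine. The main goroutine calls time.Sleep(time.Second) to wait for one second so that the child goroutine has time to run; without this pause, main could return, and the program exit, before the child goroutine printed anything. Running this code will normally print the child goroutine's text first, followed by the main goroutine's text. Note that sleeping does not strictly guarantee this order: explicit synchronization, such as sync.WaitGroup or a channel, is the reliable way to wait for a goroutine.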
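Sleeping for a fixed interval only makes it likely that the child goroutine runs first. The following is a minimal sketch of explicit synchronization using sync.WaitGroup from the standard library. The helper name runAndWait is an assumption made for this illustration and is not part of the original example.

```go
package main

import (
	"fmt"
	"sync"
)

// runAndWait (hypothetical helper) starts one goroutine, blocks until it
// has finished, and returns the messages in the order they were recorded.
func runAndWait() []string {
	var (
		wg   sync.WaitGroup
		msgs []string
	)

	wg.Add(1)
	go func() {
		defer wg.Done()
		msgs = append(msgs, "Hello, goroutine!")
	}()

	// Wait returns only after the goroutine has called Done, so the
	// write to msgs above is visible here and no sleep is needed.
	wg.Wait()
	msgs = append(msgs, "Main goroutine exits.")
	return msgs
}

func main() {
	for _, m := range runAndWait() {
		fmt.Println(m)
	}
}
```

Because the main goroutine blocks in wg.Wait() until the child has finished, the order of the two messages no longer depends on timing.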

A goroutine is a lightweight thread of execution that is scheduled and managed by the Go runtime rather than directly by the operating system. Each goroutine is created with its own small stack (a few kilobytes), which grows or shrinks dynamically at run time. The runtime scheduler multiplexes goroutines onto a smaller number of operating-system threads and switches between them automatically, so developers do not need to handle the details of thread management.
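To illustrate how cheap goroutines are and how they combine with channels, the sketch below starts one goroutine per number and collects the results over a channel. The function name sumTo and the count of 10,000 goroutines are illustrative assumptions, not figures from the original article.

```go
package main

import (
	"fmt"
	"sync"
)

// sumTo (hypothetical helper) starts one goroutine for each number in
// 1..n. Each goroutine sends its number on a channel, and the caller
// adds up everything it receives.
func sumTo(n int) int {
	results := make(chan int)
	var wg sync.WaitGroup

	for i := 1; i <= n; i++ {
		wg.Add(1)
		go func(v int) {
			defer wg.Done()
			results <- v
		}(i)
	}

	// Close the channel once every worker has sent its value, so the
	// range loop below terminates.
	go func() {
		wg.Wait()
		close(results)
	}()

	total := 0
	for v := range results {
		total += v
	}
	return total
}

func main() {
	// Ten thousand goroutines are created and scheduled by the runtime.
	fmt.Println(sumTo(10000))
}
```

Creating the same number of operating-system threads would typically be far more expensive; here the runtime schedules all of the goroutines onto a small pool of threads.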

Garbage collection mechanism of Go language

Go uses a garbage collector based on a concurrent mark-and-sweep algorithm, which lets programmers focus on business logic without paying much attention to memory management. The following simple example illustrates garbage collection in Go:

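    package main

    import (
        "fmt"
        "runtime"
    )

    func main() {
        var a []int
        for i := 0; i < 1000000; i++ {
            a = append(a, i)
        }
        fmt.Println("Allocated memory for a")

        // Drop the only reference so the backing array becomes
        // unreachable, then explicitly request a collection.
        a = nil
        runtime.GC()
        fmt.Println("Force garbage collection")
    }

In the above code, we repeatedly append elements to the slice a in a loop. Once a is no longer needed, we set it to nil, which removes the last reference to its backing array and makes that memory eligible for collection. Setting a to nil does not trigger a collection by itself, so the code calls runtime.GC() to force one. The program does not print memory statistics, so the release is only observable by measuring heap usage, for example with runtime.ReadMemStats.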
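One way to observe the release of memory is to compare heap usage before and after the reference is dropped. The sketch below does this with runtime.ReadMemStats. The helper names heapAlloc and measure are assumptions for this illustration, and the exact numbers vary by Go version and platform.

```go
package main

import (
	"fmt"
	"runtime"
)

// heapAlloc (hypothetical helper) forces a collection and returns the
// number of bytes currently allocated to heap objects.
func heapAlloc() uint64 {
	runtime.GC()
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return m.HeapAlloc
}

// measure (hypothetical helper) returns the heap size while a large
// slice is still reachable and again after the reference is dropped.
func measure() (live, released uint64) {
	a := make([]int, 0)
	for i := 0; i < 1000000; i++ {
		a = append(a, i)
	}

	live = heapAlloc()
	// Keep the slice reachable until after the first measurement.
	runtime.KeepAlive(a)

	a = nil
	released = heapAlloc()
	return live, released
}

func main() {
	live, released := measure()
	fmt.Printf("heap while slice is reachable: %d bytes\n", live)
	fmt.Printf("heap after dropping the slice: %d bytes\n", released)
}
```

The second figure should be lower than the first by roughly the size of the slice's backing array, since the array can only be reclaimed once nothing refers to it.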

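Go's garbage collector runs concurrently with the program: most of the marking and sweeping work happens while goroutines continue to execute, with only brief pauses. It automatically reclaims memory that is no longer reachable, which removes a large class of manual memory-management errors and lets programmers focus on business logic. It cannot reclaim memory that is still referenced, however, so leaks remain possible, for example through a goroutine that never exits or a map that grows without bound.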
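The collector normally runs on its own, without any call to runtime.GC(). The sketch below counts completed collection cycles while the program produces short-lived garbage. The names sink and gcCyclesDuring are assumptions for this illustration, and the result assumes the default GOGC setting (collection is not disabled).

```go
package main

import (
	"fmt"
	"runtime"
)

// sink holds the most recent buffer in a package-level variable so the
// allocation escapes to the heap.
var sink []byte

// gcCyclesDuring (hypothetical helper) allocates `rounds` one-megabyte
// buffers, each replacing the previous one, and reports how many
// collection cycles the runtime completed on its own in the meantime.
func gcCyclesDuring(rounds int) uint32 {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)

	for i := 0; i < rounds; i++ {
		sink = make([]byte, 1<<20) // the previous buffer becomes garbage
	}

	runtime.ReadMemStats(&after)
	return after.NumGC - before.NumGC
}

func main() {
	fmt.Println("automatic GC cycles:", gcCyclesDuring(200))
}
```

The exact count depends on the Go version and heap settings, but a non-zero value shows that collection happened while the program was running, without being requested explicitly.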

Conclusion

This article has looked at two parts of Go's underlying implementation: how goroutines are implemented and scheduled, and how the garbage collector manages memory. Understanding these mechanisms is important for reasoning about how Go programs behave and for optimizing their performance. I hope this introduction gives readers a deeper understanding of Go's internals and helps them apply the language more effectively.

That is all for this article. Thank you for reading!

About the author:

The author is a senior Go developer with extensive project experience and experience sharing technical knowledge. The author is committed to promoting the use of Go and to exploring its underlying implementation in depth. Readers are welcome to follow the author's other technical articles.

The above is the detailed content of Discussion on the underlying implementation of Go language. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1389

1389

52

52

What libraries are used for floating point number operations in Go?

Apr 02, 2025 pm 02:06 PM

What libraries are used for floating point number operations in Go?

Apr 02, 2025 pm 02:06 PM

The library used for floating-point number operation in Go language introduces how to ensure the accuracy is...

What is the problem with Queue thread in Go's crawler Colly?

Apr 02, 2025 pm 02:09 PM

What is the problem with Queue thread in Go's crawler Colly?

Apr 02, 2025 pm 02:09 PM

Queue threading problem in Go crawler Colly explores the problem of using the Colly crawler library in Go language, developers often encounter problems with threads and request queues. �...

How to solve the user_id type conversion problem when using Redis Stream to implement message queues in Go language?

Apr 02, 2025 pm 04:54 PM

How to solve the user_id type conversion problem when using Redis Stream to implement message queues in Go language?

Apr 02, 2025 pm 04:54 PM

The problem of using RedisStream to implement message queues in Go language is using Go language and Redis...

In Go, why does printing strings with Println and string() functions have different effects?

Apr 02, 2025 pm 02:03 PM

In Go, why does printing strings with Println and string() functions have different effects?

Apr 02, 2025 pm 02:03 PM

The difference between string printing in Go language: The difference in the effect of using Println and string() functions is in Go...

What should I do if the custom structure labels in GoLand are not displayed?

Apr 02, 2025 pm 05:09 PM

What should I do if the custom structure labels in GoLand are not displayed?

Apr 02, 2025 pm 05:09 PM

What should I do if the custom structure labels in GoLand are not displayed? When using GoLand for Go language development, many developers will encounter custom structure tags...

What is the difference between `var` and `type` keyword definition structure in Go language?

Apr 02, 2025 pm 12:57 PM

What is the difference between `var` and `type` keyword definition structure in Go language?

Apr 02, 2025 pm 12:57 PM

Two ways to define structures in Go language: the difference between var and type keywords. When defining structures, Go language often sees two different ways of writing: First...

CS-Week 3

Apr 04, 2025 am 06:06 AM

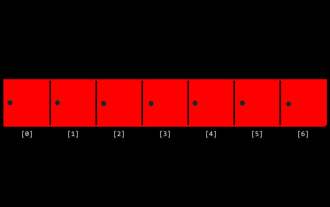

CS-Week 3

Apr 04, 2025 am 06:06 AM

Algorithms are the set of instructions to solve problems, and their execution speed and memory usage vary. In programming, many algorithms are based on data search and sorting. This article will introduce several data retrieval and sorting algorithms. Linear search assumes that there is an array [20,500,10,5,100,1,50] and needs to find the number 50. The linear search algorithm checks each element in the array one by one until the target value is found or the complete array is traversed. The algorithm flowchart is as follows: The pseudo-code for linear search is as follows: Check each element: If the target value is found: Return true Return false C language implementation: #include#includeintmain(void){i

Which libraries in Go are developed by large companies or provided by well-known open source projects?

Apr 02, 2025 pm 04:12 PM

Which libraries in Go are developed by large companies or provided by well-known open source projects?

Apr 02, 2025 pm 04:12 PM

Which libraries in Go are developed by large companies or well-known open source projects? When programming in Go, developers often encounter some common needs, ...