The 2024 Apple Scholars have been announced, and half of them are Chinese! A Penn PhD student once worked with Jim Fan to create Nvidia's most popular robot


The latest list of annual “Apple Scholars” has been announced!

Apple Machine Learning Research has just announced the list of "Apple Scholars" who will receive doctoral scholarships in 2024. The program supports and encourages talented students doing research in artificial intelligence and machine learning.

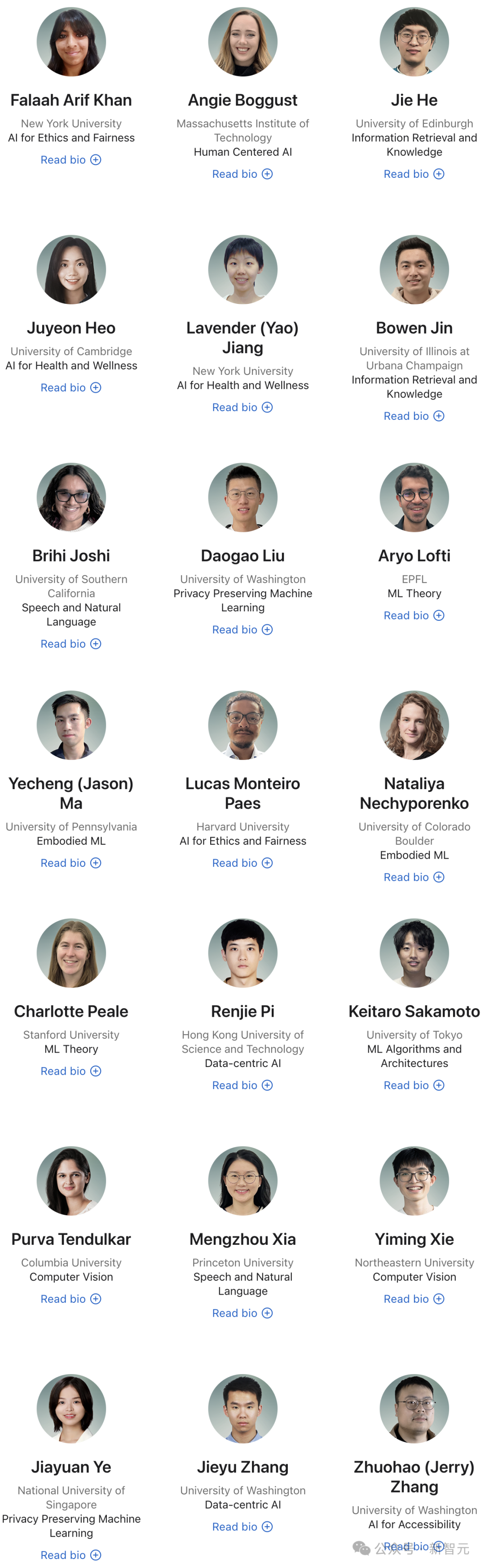

Notably, a total of 21 scholars won the award this year, and 11 of them, about half, are Chinese.

The Apple Scholars PhD Scholarship recognizes researchers, from the graduate to the postdoctoral level, who have made outstanding contributions in computer science and engineering. It aims to support and encourage innovative work in artificial intelligence and machine learning.

Each scholar will receive financial support and internship opportunities while pursuing a doctoral degree, and will also be mentored by an Apple researcher working in the same field.

Each Apple Scholar is selected based on his or her innovative research, leadership, record of collaboration, and commitment to advancing the field.

So who are the award-winning Chinese scholars? Let's take a look.

11 Chinese scholars selected

Jie He

University of Edinburgh, Information Retrieval and Knowledge

Jie He is a PhD student at the University of Edinburgh, advised by Jeff Pan. He works on developing more reliable and accurate generative models, especially by diagnosing and evaluating model weaknesses so that targeted improvements can be made. His recent research centers on commonsense reasoning and retrieval-augmented language models.

He received a master's degree from the Department of Computer Science of Tianjin University in 2022 and a bachelor's degree from the School of Software of Shandong University in 2019.

Lavender (Yao) Jiang

New York University, AI Health & Health

Lavender Jiang is a third-year Ph.D. student at NYU's Center for Data Science, advised by Eric Oermann and Kyunghyun Cho. Her research focuses on the safe and efficient integration of large language models (LLMs) into healthcare, exploring their utility, privacy implications, and computational efficiency.

She earned a bachelor's degree in electrical and computer engineering and mathematical sciences from Carnegie Mellon University (CMU).

Bowen Jin

University of Illinois at Urbana-Champaign (UIUC), Information Retrieval and Knowledge

Bowen Jin is a doctoral student at the University of Illinois at Urbana-Champaign, advised by the renowned computer scientist Jiawei Han.

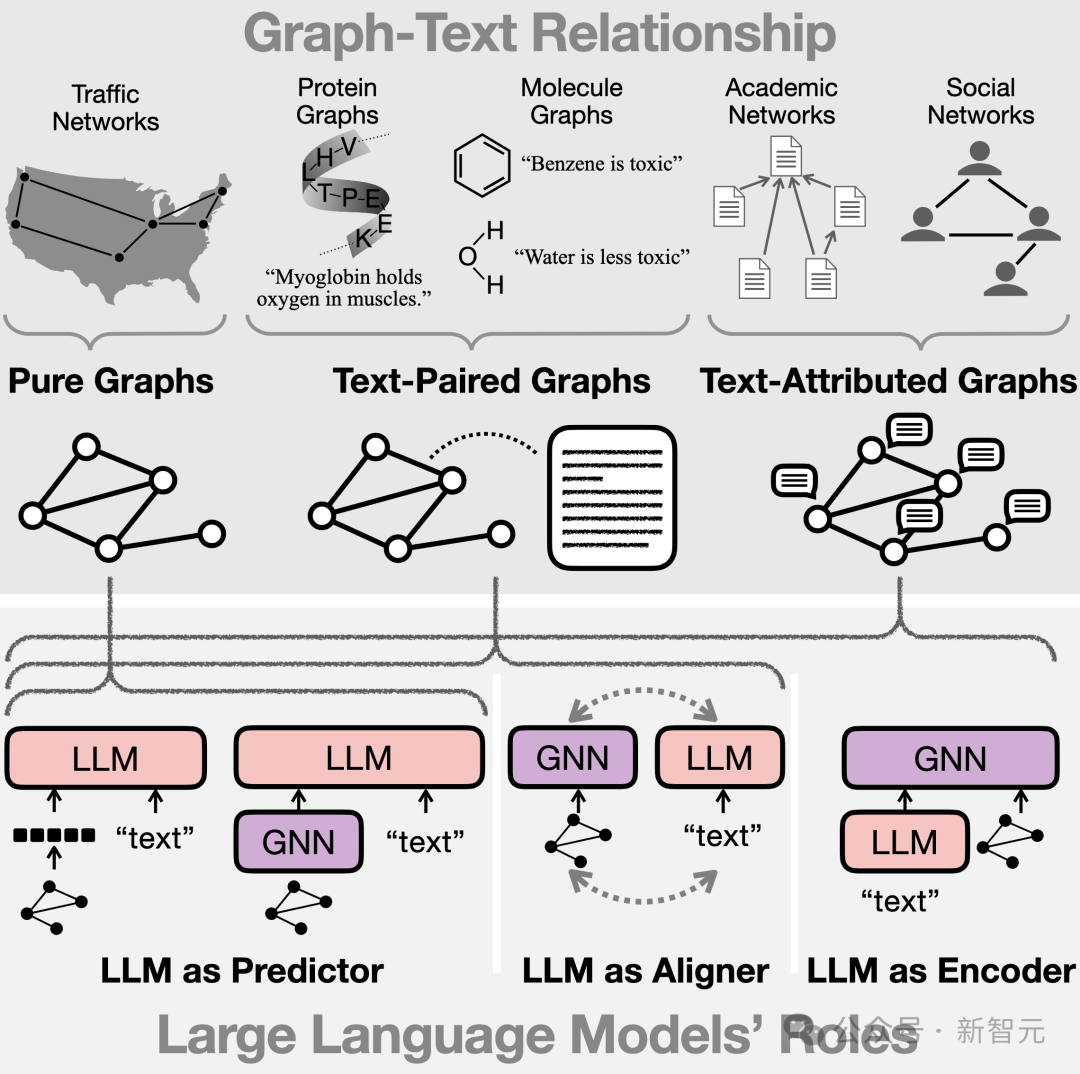

His research areas are large language models, information networks, and text/data mining. He is particularly interested in how language models can integrate text, network, and multimodal data to solve real-world problems, including information retrieval and knowledge discovery.

He received his bachelor's degree in electrical engineering and statistics from Tsinghua University in 2021, under the supervision of Yong Li.

Bowen Jin currently maintains a GitHub repository on large language models on graphs and is working on a survey paper on the topic.

Daogao Liu

University of Washington, Privacy Preserving Machine Learning

He received his bachelor's degree in mathematics and physics from Tsinghua University in 2020.

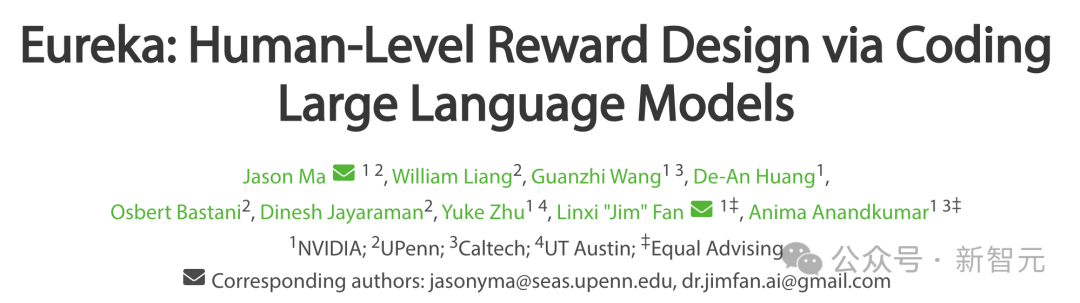

University of Pennsylvania, Embodied Machine Learning

He received a double bachelor's degree in computer science and mathematics from Harvard University.

HKUST, Data-Centric AI

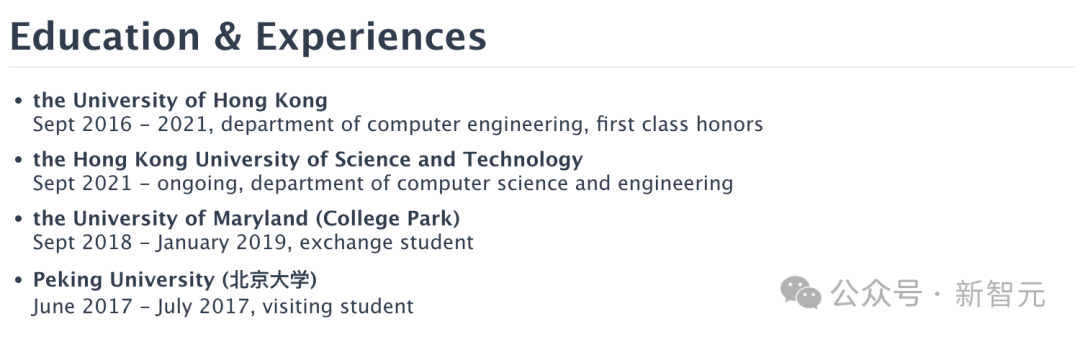

He was a visiting scholar at Peking University in 2017 and an exchange student at the University of Maryland between 2018 and 2019.

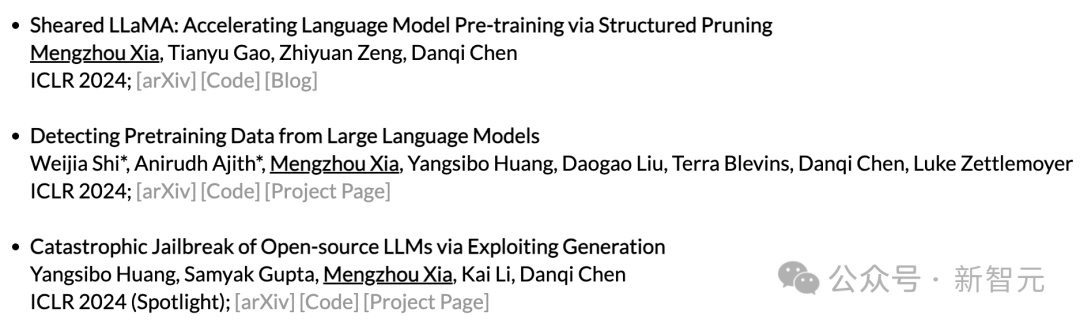

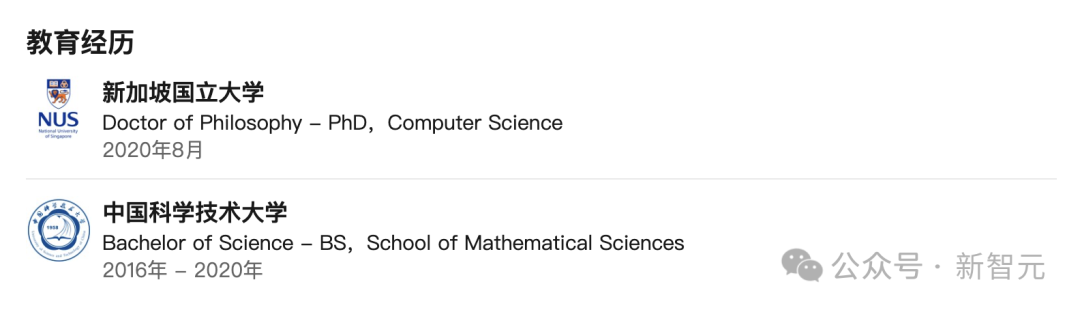

Mengzhou Xia

Princeton University, Speech and Natural Language

Mengzhou Xia's research focuses on developing capable small-scale foundation models that are affordable within academic budgets, including model compression methods and efficient data selection strategies. According to her personal homepage, three of her papers were accepted at ICLR 2024.

Before this, she was a master's student at Carnegie Mellon University, advised by Professor Graham Neubig. She received her bachelor's degree from the School of Data Science at Fudan University.

Yiming Xie

Northeastern University, Computer Vision

Yiming Xie is a third-year doctoral student in computer science at Northeastern University, advised by Professor Huaizu Jiang. His research focuses on 3D computer vision, especially 3D reconstruction, perception, and generation. His goal is to develop an intelligent system that unifies 3D perception and generation for augmented reality (AR).

He received his bachelor's degree from Zhejiang University in 2019, under the guidance of Professor Xiaowei Zhou.

Jiayuan Ye

National University of Singapore, Privacy Preserving Machine Learning

Jiayuan Ye is a doctoral student at the National University of Singapore, advised by Reza Shokri. She focuses on rigorous privacy analysis of learning algorithms under various threat models and tasks. Her research aims to achieve learning that ensures good privacy while retaining other desirable properties such as practicality and efficiency.

She received her bachelor's degree from the School of Mathematical Sciences at the University of Science and Technology of China in 2020.

Jieyu Zhang

University of Washington, Data-Centric AI

Jieyu Zhang is a doctoral student in the Paul G. Allen School of Computer Science and Engineering at the University of Washington, advised by Professor Ranjay Krishna and Professor Alex Ratner. His research focuses on data-centric AI/ML, emphasizing faithful evaluation and lightweight methods. His goal is to develop efficient and effective methods for creating high-quality training datasets and comprehensive evaluation benchmarks.

Prior to this, he received a bachelor's degree in computer science from UIUC, where his mentor was Jiawei Han.

Zhuohao (Jerry) Zhang

University of Washington, Accessible Artificial Intelligence

Zhuohao (Jerry) Zhang is a third-year doctoral student at the University of Washington, advised by Professor Jacob O. Wobbrock. His research focuses on leveraging human-AI interaction to solve real-world accessibility problems. He is particularly interested in designing and evaluating intelligent assistive technologies that make creative tasks accessible.

He received a Master of Science in CS from UIUC, where he was mentored by Professor Yang Wang in the SALT Lab. Before that, he received a Bachelor of Science in CS from Zhejiang University.

Full list
