Meta has recently launched two powerful GPU clusters to support the training of next-generation generative AI models, including the upcoming Llama 3.

Each cluster reportedly contains 24,576 GPUs and is designed to support larger and more complex generative AI models than any Meta has previously released.

Meta’s Llama, a popular family of open-source large language models, is comparable to OpenAI’s GPT and Google’s Gemini.

According to Geek.com, both clusters are built with Nvidia’s most powerful GPU, the H100, and each is much larger than the large cluster Meta launched previously, which had about 16,000 Nvidia A100 GPUs.
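A back-of-the-envelope sketch in Python, using only the figures reported in this article, shows the scale of the jump: two clusters of 24,576 H100 GPUs come to 49,152 GPUs combined, and each new cluster has roughly 1.5 times as many GPUs as the earlier 16,000-GPU A100 cluster. The constant names are illustrative, and the comparison counts GPUs only; it ignores any difference in per-GPU performance between the H100 and the A100.

```python
# Figures as reported in the article; not independently verified.
GPUS_PER_CLUSTER = 24_576        # each of the two new H100 clusters
NUM_CLUSTERS = 2
PREVIOUS_A100_CLUSTER = 16_000   # approximate size of Meta's earlier A100 cluster

# Total GPUs across both new clusters.
total_new = GPUS_PER_CLUSTER * NUM_CLUSTERS

# Ratio of GPU counts only (does not account for H100 vs. A100 performance).
growth = GPUS_PER_CLUSTER / PREVIOUS_A100_CLUSTER

print(f"GPUs in the two new clusters: {total_new:,}")
print(f"Each new cluster vs. the earlier A100 cluster: {growth:.2f}x")
```

Running it prints a combined total of 49,152 GPUs and a per-cluster ratio of about 1.54x.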

Meta has reportedly purchased tens of thousands of Nvidia’s latest GPUs, and market research firm Omdia noted in its latest report that Meta has become one of Nvidia’s most important customers.

Meta engineers said they plan to use the new GPU clusters both to fine-tune existing AI systems and to train newer, more powerful ones, including Llama 3.

The engineers noted that development of Llama 3 is currently "in progress" but did not say when it will be released.

Meta’s long-term goal is to build artificial general intelligence (AGI) systems, which would be closer to humans in creativity and differ significantly from today’s generative AI models.

The new GPU clusters will help Meta work toward these goals. The company is also improving the PyTorch AI framework so that it can scale to more GPUs.

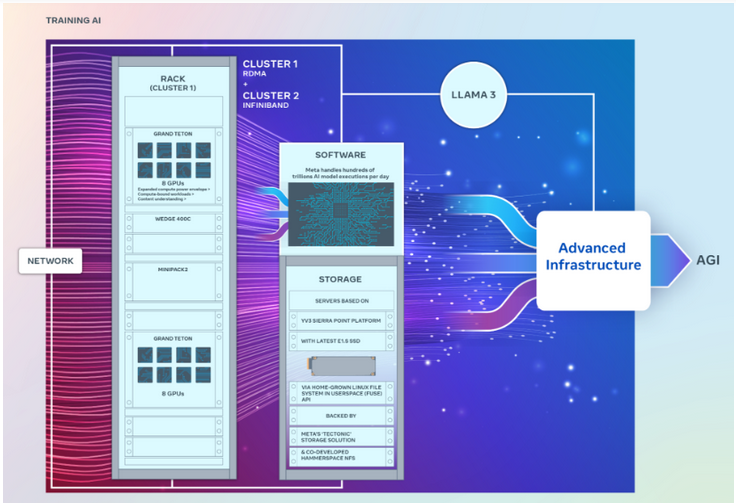

Notably, although the two clusters have exactly the same number of GPUs and both interconnect 400 Gbps endpoints, they use different network architectures.

One cluster uses a remote direct memory access (RDMA) over Converged Ethernet (RoCE) network fabric built on Arista Networks’ Arista 7800 switches together with Wedge400 and Minipack2 OCP rack switches. The other is built on Nvidia’s Quantum2 InfiniBand network fabric.

Both clusters use Grand Teton, Meta’s open GPU hardware platform designed for large-scale AI workloads. Grand Teton offers four times the host-to-GPU bandwidth of its predecessor, the Zion-EX platform, along with twice the compute and data network bandwidth and twice the power envelope.

Meta said both clusters use its latest Open Rack power and rack infrastructure, which is designed to give more flexibility in data center design. Open Rack v3 allows the power shelf to be installed anywhere in the rack instead of being bound to the busbar, enabling more flexible configurations.

The number of servers per rack is also customizable, allowing a more efficient balance of throughput capacity per server.

For storage, both clusters are based on the YV3 Sierra Point server platform and use the latest E1.S solid-state drives.

Meta engineers emphasized in their post that the company is committed to open innovation across the AI hardware stack: "As we look to the future, we recognize that what worked before or currently may not be enough to meet future needs. That's why we are constantly evaluating and improving our infrastructure."

Meta is a member of the recently founded AI Alliance, which aims to create an open ecosystem that increases transparency and trust in AI development and ensures that everyone benefits from its innovations.

Meta also said it will continue buying Nvidia H100 GPUs and plans to have more than 350,000 GPUs by the end of this year. These GPUs will go toward further building out its AI infrastructure, which means more, and more powerful, GPU clusters are likely to follow.
