Java Thread Pool Pitfalls and Solutions

1. Thread leak

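A thread leak occurs when threads are created but never terminated, so they keep holding memory and other resources for as long as the application runs. In a thread pool this typically happens when the pool is never shut down. It is one of the most common thread pool pitfalls.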

solution:

- Use the shutdown() and shutdownNow() methods of the ExecutorService interface to explicitly shut down the thread pool.

- Use a try-with-resources statement (ExecutorService implements AutoCloseable as of Java 19) or a try/finally block to ensure that the thread pool is closed on both normal exit and exceptions.

- Set a maximum number of threads for the thread pool to prevent unbounded thread creation.
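The shutdown steps above can be sketched as follows. This is a minimal sketch rather than a definitive implementation: the class name ShutdownDemo, the pool size of 4, and the 5-second timeout are assumptions chosen for illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ShutdownDemo {

    // Runs taskCount trivial tasks on a fixed-size pool, then shuts the pool down.
    // Returns the number of tasks that completed.
    static int runAndShutdown(int taskCount) {
        ExecutorService pool = Executors.newFixedThreadPool(4); // illustrative size
        AtomicInteger completed = new AtomicInteger();
        try {
            for (int i = 0; i < taskCount; i++) {
                pool.execute(completed::incrementAndGet);
            }
        } finally {
            pool.shutdown(); // stop accepting new tasks; queued tasks still run
            try {
                if (!pool.awaitTermination(5, TimeUnit.SECONDS)) {
                    pool.shutdownNow(); // interrupt workers that are still running
                }
            } catch (InterruptedException e) {
                pool.shutdownNow();
                Thread.currentThread().interrupt(); // preserve the interrupt status
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runAndShutdown(10));
    }
}
```

Placing shutdown() in a finally block means the worker threads are released even if task submission throws.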

2. Resource exhaustion

A thread pool has a limited number of threads. If tasks arrive faster than the pool can process them, they accumulate and can exhaust memory or other resources, which degrades performance or even crashes the application.

solution:

- Adjust the size of the thread pool to balance task throughput and resource utilization.

- Use a bounded queue, together with a rejection policy, so that tasks cannot accumulate without limit.

- Consider using an elastic thread pool, which can dynamically adjust the number of threads as needed.
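One way to combine these suggestions is a ThreadPoolExecutor with separate core and maximum sizes and a bounded queue. The sketch below is only an illustration: the class name BoundedPoolDemo, the sizes (2 core, 4 maximum, 8 queued), and the choice of CallerRunsPolicy are assumptions, not recommendations.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class BoundedPoolDemo {

    // All numeric values here are illustrative assumptions.
    static ThreadPoolExecutor newBoundedPool() {
        return new ThreadPoolExecutor(
                2,                            // core threads
                4,                            // upper bound on threads
                30, TimeUnit.SECONDS,         // idle time before extra threads exit
                new ArrayBlockingQueue<>(8),  // at most 8 tasks may wait
                new ThreadPoolExecutor.CallerRunsPolicy()); // overflow runs on the submitting thread
    }

    // Submits a burst of tasks and returns how many completed.
    static int submitBurst(int taskCount) {
        ThreadPoolExecutor pool = newBoundedPool();
        AtomicInteger completed = new AtomicInteger();
        try {
            for (int i = 0; i < taskCount; i++) {
                pool.execute(completed::incrementAndGet);
            }
        } finally {
            pool.shutdown();
            try {
                pool.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(submitBurst(100));
    }
}
```

With CallerRunsPolicy, a task that fits neither in a thread nor in the queue runs on the submitting thread, which slows the producer down instead of dropping work. Other built-in policies, such as AbortPolicy, reject the task with an exception instead.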

3. Deadlock

A deadlock occurs when threads wait on each other and none of them can proceed. In a thread pool, the risk of deadlock increases if tasks depend on external resources.

solution:

- Avoid circular dependencies, and use locks or other synchronization mechanisms to ensure that resources are always accessed in a consistent order.

- Use timeouts when acquiring locks, so that a thread gives up and releases the locks it already holds instead of waiting indefinitely.

- Consider using a non-blocking I/O model to reduce the possibility of deadlock.
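The timeout approach can be sketched with ReentrantLock.tryLock. The helper below is a hypothetical illustration (the class and method names are assumptions): it tries to take two locks in a fixed order and backs out if either cannot be acquired in time.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TimedLockDemo {

    // Tries to acquire both locks, waiting at most timeoutMillis for each.
    // Returns true only if the action ran while both locks were held.
    static boolean runWithBothLocks(ReentrantLock first, ReentrantLock second,
                                    long timeoutMillis, Runnable action) {
        try {
            if (!first.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                return false; // could not get the first lock in time
            }
            try {
                if (!second.tryLock(timeoutMillis, TimeUnit.MILLISECONDS)) {
                    return false; // give up; the finally block releases the first lock
                }
                try {
                    action.run();
                    return true;
                } finally {
                    second.unlock();
                }
            } finally {
                first.unlock();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return false;
        }
    }

    public static void main(String[] args) {
        ReentrantLock a = new ReentrantLock();
        ReentrantLock b = new ReentrantLock();
        System.out.println(runWithBothLocks(a, b, 100, () -> System.out.println("working")));
    }
}
```

A caller that receives false can retry later or report the failure, rather than blocking a pool thread forever.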

4. Task queuing

A thread pool holds pending tasks in a queue. The size of the queue is limited, and if tasks arrive faster than they are processed, they may wait in the queue for a long time.

solution:

- Adjust queue size to balance throughput and response time.

- Consider using a priority queue to prioritize important tasks.

- Implement task sharding: break large tasks down into smaller ones so that they can run in parallel and complete faster.
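The priority-queue suggestion might look like the sketch below, which backs a ThreadPoolExecutor with a PriorityBlockingQueue. PrioritizedTask is a hypothetical helper type introduced here for illustration, and the convention that a lower number runs first is an assumption.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PriorityPoolDemo {

    // Hypothetical task wrapper: a lower priority value is taken from the queue first.
    static final class PrioritizedTask implements Runnable, Comparable<PrioritizedTask> {
        final int priority;
        final Runnable body;

        PrioritizedTask(int priority, Runnable body) {
            this.priority = priority;
            this.body = body;
        }

        @Override public void run() { body.run(); }

        @Override public int compareTo(PrioritizedTask other) {
            return Integer.compare(priority, other.priority);
        }
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Queues three tasks behind a blocked worker and returns the order in which they ran.
    static List<String> runInPriorityOrder() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new PriorityBlockingQueue<>());
        List<String> order = new CopyOnWriteArrayList<>();
        CountDownLatch gate = new CountDownLatch(1);
        try {
            // Occupy the single worker so that the next tasks wait in the queue.
            pool.execute(new PrioritizedTask(0, () -> awaitQuietly(gate)));
            pool.execute(new PrioritizedTask(3, () -> order.add("low")));
            pool.execute(new PrioritizedTask(1, () -> order.add("high")));
            pool.execute(new PrioritizedTask(2, () -> order.add("medium")));
            gate.countDown(); // release the worker; queued tasks now run by priority
        } finally {
            pool.shutdown();
            try {
                pool.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return order;
    }

    public static void main(String[] args) {
        System.out.println(runInPriorityOrder()); // prints [high, medium, low]
    }
}
```

Note that this sketch uses execute() rather than submit(): submit() wraps each task in a FutureTask, which is not Comparable, so a PriorityBlockingQueue would fail with a ClassCastException when ordering the wrapped tasks.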

5. Memory usage

Each thread requires a certain amount of memory overhead. Too many threads in the thread pool can cause high memory usage.

solution:

- Limit the size of the thread pool and only create the necessary number of threads.

- Use a lightweight thread pool implementation, such as ForkJoinPool.

- Use local variables in tasks instead of instance variables to reduce memory usage.
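As an illustration of ForkJoinPool, the sketch below sums an array by recursively splitting it into subtasks, keeping all working state in local variables. The class names and the threshold of 1,000 elements are assumptions chosen for the example.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class ForkJoinSumDemo {

    // Sums data[from, to) by splitting the range until it is small enough to sum directly.
    static final class SumTask extends RecursiveTask<Long> {
        private static final int THRESHOLD = 1_000; // illustrative cutoff
        private final long[] data;
        private final int from;
        private final int to;

        SumTask(long[] data, int from, int to) {
            this.data = data;
            this.from = from;
            this.to = to;
        }

        @Override
        protected Long compute() {
            if (to - from <= THRESHOLD) {
                long sum = 0; // local variable, not shared between tasks
                for (int i = from; i < to; i++) {
                    sum += data[i];
                }
                return sum;
            }
            int mid = (from + to) >>> 1;
            SumTask left = new SumTask(data, from, mid);
            SumTask right = new SumTask(data, mid, to);
            left.fork();                          // run the left half asynchronously
            return right.compute() + left.join(); // compute the right half in this thread
        }
    }

    static long sum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }

    public static void main(String[] args) {
        long[] data = new long[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(sum(data)); // prints 50005000
    }
}
```

The common pool is shared across the JVM, so this sketch creates no additional threads of its own.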

6. Performance bottleneck

A thread pool is meant to improve performance, but if it is configured or used improperly, it can itself become a performance bottleneck.

solution:

- Carefully analyze your application's thread usage and adjust the thread pool size as needed.

- Avoid creating too many threads, which increases context-switching and scheduling overhead.

- Use performance analysis tools to identify and resolve performance bottlenecks.
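As a starting point for choosing a pool size, a commonly cited rule of thumb is threads = cores × target utilization × (1 + wait time / compute time). The helper below is a hypothetical sketch of that heuristic, not a rule from this article; the class and parameter names are assumptions, and the result should be validated by measurement.

```java
class PoolSizing {

    // Heuristic only: cores * targetUtilization * (1 + waitToComputeRatio), at least 1.
    // waitToComputeRatio is the time a task spends blocked divided by the time it spends computing.
    static int suggestedThreads(int cores, double targetUtilization, double waitToComputeRatio) {
        int threads = (int) Math.ceil(cores * targetUtilization * (1.0 + waitToComputeRatio));
        return Math.max(1, threads);
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        // CPU-bound work: roughly one thread per core.
        System.out.println(suggestedThreads(cores, 1.0, 0.0));
        // Tasks that block three times as long as they compute: roughly four threads per core.
        System.out.println(suggestedThreads(cores, 1.0, 3.0));
    }
}
```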

7. Concurrency issues

Although thread pools are designed to manage concurrent tasks, concurrency issues can still arise if tasks race on shared data.

solution:

- Use synchronization mechanisms, such as locks or atomic operations, to ensure data consistency.

- Consider using immutable objects to avoid data races.

- Use thread-local storage within tasks to isolate each thread's data.
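The atomic-operations option can be sketched with AtomicLong, which lets several pool tasks update a shared counter without losing increments. The class name, pool size, and timeout below are assumptions chosen for illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

class AtomicCounterDemo {

    // Runs `tasks` tasks that each increment a shared counter `incrementsPerTask` times.
    // Returns the final counter value.
    static long countWithAtomic(int tasks, int incrementsPerTask) {
        AtomicLong counter = new AtomicLong();
        ExecutorService pool = Executors.newFixedThreadPool(4); // illustrative size
        try {
            for (int t = 0; t < tasks; t++) {
                pool.execute(() -> {
                    for (int i = 0; i < incrementsPerTask; i++) {
                        counter.incrementAndGet(); // atomic read-modify-write
                    }
                });
            }
        } finally {
            pool.shutdown();
            try {
                pool.awaitTermination(10, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithAtomic(8, 10_000)); // prints 80000
    }
}
```

Replacing the AtomicLong with a plain long field incremented via ++ would allow concurrent updates to overwrite each other, so the total could come out lower than expected.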

The above is the detailed content of Pitfalls and solutions of Java thread pool. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

Local fine-tuning of DeepSeek class models faces the challenge of insufficient computing resources and expertise. To address these challenges, the following strategies can be adopted: Model quantization: convert model parameters into low-precision integers, reducing memory footprint. Use smaller models: Select a pretrained model with smaller parameters for easier local fine-tuning. Data selection and preprocessing: Select high-quality data and perform appropriate preprocessing to avoid poor data quality affecting model effectiveness. Batch training: For large data sets, load data in batches for training to avoid memory overflow. Acceleration with GPU: Use independent graphics cards to accelerate the training process and shorten the training time.

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

1. First, enter the Edge browser and click the three dots in the upper right corner. 2. Then, select [Extensions] in the taskbar. 3. Next, close or uninstall the plug-ins you do not need.

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

The familiar open source large language models such as Llama3 launched by Meta, Mistral and Mixtral models launched by MistralAI, and Jamba launched by AI21 Lab have become competitors of OpenAI. In most cases, users need to fine-tune these open source models based on their own data to fully unleash the model's potential. It is not difficult to fine-tune a large language model (such as Mistral) compared to a small one using Q-Learning on a single GPU, but efficient fine-tuning of a large model like Llama370b or Mixtral has remained a challenge until now. Therefore, Philipp Sch, technical director of HuggingFace

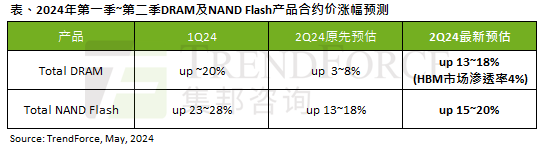

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

According to a TrendForce survey report, the AI wave has a significant impact on the DRAM memory and NAND flash memory markets. In this site’s news on May 7, TrendForce said in its latest research report today that the agency has increased the contract price increases for two types of storage products this quarter. Specifically, TrendForce originally estimated that the DRAM memory contract price in the second quarter of 2024 will increase by 3~8%, and now estimates it at 13~18%; in terms of NAND flash memory, the original estimate will increase by 13~18%, and the new estimate is 15%. ~20%, only eMMC/UFS has a lower increase of 10%. ▲Image source TrendForce TrendForce stated that the agency originally expected to continue to

C++ Concurrent Programming: How to handle inter-thread communication?

May 04, 2024 pm 12:45 PM

C++ Concurrent Programming: How to handle inter-thread communication?

May 04, 2024 pm 12:45 PM

Methods for inter-thread communication in C++ include: shared memory, synchronization mechanisms (mutex locks, condition variables), pipes, and message queues. For example, use a mutex lock to protect a shared counter: declare a mutex lock (m) and a shared variable (counter); each thread updates the counter by locking (lock_guard); ensure that only one thread updates the counter at a time to prevent race conditions.

What pitfalls should we pay attention to when designing distributed systems with Golang technology?

May 07, 2024 pm 12:39 PM

What pitfalls should we pay attention to when designing distributed systems with Golang technology?

May 07, 2024 pm 12:39 PM

Pitfalls in Go Language When Designing Distributed Systems Go is a popular language used for developing distributed systems. However, there are some pitfalls to be aware of when using Go, which can undermine the robustness, performance, and correctness of your system. This article will explore some common pitfalls and provide practical examples on how to avoid them. 1. Overuse of concurrency Go is a concurrency language that encourages developers to use goroutines to increase parallelism. However, excessive use of concurrency can lead to system instability because too many goroutines compete for resources and cause context switching overhead. Practical case: Excessive use of concurrency leads to service response delays and resource competition, which manifests as high CPU utilization and high garbage collection overhead.

What are the concurrent programming frameworks and libraries in C++? What are their respective advantages and limitations?

May 07, 2024 pm 02:06 PM

What are the concurrent programming frameworks and libraries in C++? What are their respective advantages and limitations?

May 07, 2024 pm 02:06 PM

The C++ concurrent programming framework features the following options: lightweight threads (std::thread); thread-safe Boost concurrency containers and algorithms; OpenMP for shared memory multiprocessors; high-performance ThreadBuildingBlocks (TBB); cross-platform C++ concurrency interaction Operation library (cpp-Concur).

What warnings or caveats should be included in Golang function documentation?

May 04, 2024 am 11:39 AM

What warnings or caveats should be included in Golang function documentation?

May 04, 2024 am 11:39 AM

Go function documentation contains warnings and caveats that are essential for understanding potential problems and avoiding errors. These include: Parameter validation warning: Check parameter validity. Concurrency safety considerations: Indicate the thread safety of a function. Performance considerations: Highlight the high computational cost or memory footprint of a function. Return type annotation: Describes the error type returned by the function. Dependency Note: Lists external libraries or packages required by the function. Deprecation warning: Indicates that a function is deprecated and suggests an alternative.