To protect customer privacy, run open source AI models locally using Ruby

Translator | Chen Jun

Reviewer | Chonglou

Recently, we implemented a customized artificial intelligence (AI) project. Because our client holds highly sensitive customer information, security requirements meant we could not send it to OpenAI or other proprietary models. Instead, we downloaded an open source AI model and ran it on an AWS virtual machine, keeping it entirely under our control. The Rails application can then make API calls to the model within this secure environment. Of course, if security were not a concern, we would prefer to work with OpenAI directly.

Next, I will show you how to download an open source AI model, run it locally, and run a Ruby script against it.

Why customize?

The motivation for this project is simple: data security. When handling sensitive customer information, the most reliable approach is usually to keep the processing inside the company. That is why we needed a custom AI setup: it gives us a higher level of security control and privacy protection.

Open source model

Over the past six months, Mistral, Mixtral, Llama and many other open source AI models have appeared on the market. Although they are not as powerful as GPT-4, many of them already outperform GPT-3.5, and they are likely to keep getting stronger over time. Which model you choose depends on the processing power you have and what you need to achieve.

Since we are running the AI model locally, we chose Mistral, which is about 4GB in size. It outperforms GPT-3.5 on most metrics. Mixtral performs better than Mistral, but it is a bulky model that needs at least 48GB of memory to run.

Parameters

When talking about large language models (LLMs), we often mention their parameter counts. The Mistral model we will run locally is a 7B model, with about 7 billion parameters (by comparison, Mixtral 8x7B has roughly 47 billion parameters in total, and GPT-3.5 is commonly cited as having around 175 billion).
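Parameter counts also give a rough idea of how much disk space and memory a model needs. A minimal sketch, assuming the weights take about parameters × bits per weight ÷ 8 bytes and that the local Mistral build (about 7 billion parameters) uses 4-bit quantized weights; the quantization level is our assumption, and file metadata and runtime overhead are ignored.

```ruby
# Rough estimate of the space a model's weights occupy.
# Assumption: size ~= parameters * bits_per_weight / 8 bytes; file metadata and
# runtime overhead (context cache, activations) are ignored.
def estimated_weights_gb(parameters, bits_per_weight)
  bytes = parameters * bits_per_weight / 8.0
  bytes / 1_000_000_000 # decimal gigabytes
end

# ~7 billion parameters, assuming 4-bit quantized weights (assumption)
puts format("7B model at 4 bits:  ~%.1f GB", estimated_weights_gb(7_000_000_000, 4))
# The same model stored as 16-bit floating point weights
puts format("7B model at 16 bits: ~%.1f GB", estimated_weights_gb(7_000_000_000, 16))
```

The 4-bit estimate of about 3.5GB is in the same range as the roughly 4GB download; the gap would plausibly come from quantization scale factors and file metadata, which this estimate leaves out.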

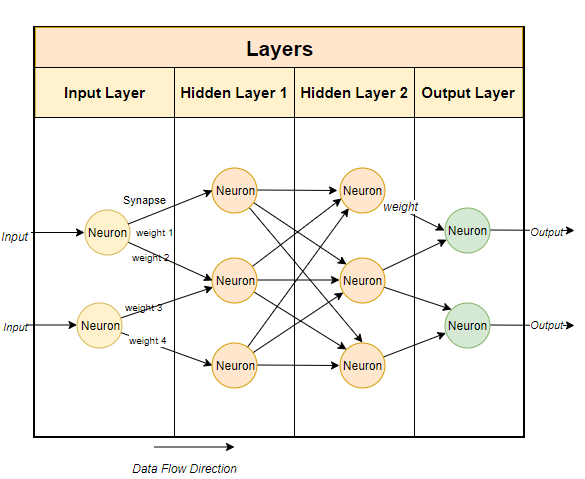

Large language models are typically built on neural networks. A neural network is made up of layers of neurons; in a fully connected layer, each neuron is connected to every neuron in the next layer.

The purpose of a neural network is to "learn" an advanced algorithm: a pattern-matching algorithm. By training on large amounts of text, it gradually learns to predict text patterns and to respond meaningfully to the prompts we give it. Simply put, the parameters are the weights and biases in the model, so the parameter count gives a rough idea of how large the network is. For example, a model with 70 billion parameters has on the order of 100 layers, each with thousands of neurons.
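To make "weights and biases" concrete: a fully connected layer with n inputs and m outputs has one weight per connection and one bias per output neuron, so n × m + m parameters. The sketch below sums this over a stack of dense layers. The layer sizes are made up for illustration; real LLMs are transformers whose layers also contain attention blocks, so this shows only the simplest case.

```ruby
# Count the weights and biases in a stack of fully connected layers.
# layer_sizes lists the number of neurons per layer, input layer first.
def dense_parameter_count(layer_sizes)
  layer_sizes.each_cons(2).sum do |inputs, outputs|
    weights = inputs * outputs # one weight per connection
    biases  = outputs          # one bias per receiving neuron
    weights + biases
  end
end

# Hypothetical toy network: 4 inputs, a hidden layer of 8, and 2 outputs
puts dense_parameter_count([4, 8, 2])       # => 58
# A single 4096 x 4096 layer already has over 16 million parameters
puts dense_parameter_count([4096, 4096])    # => 16781312
```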

Run the model locally

To run an open source model locally, you first need to download an application that can host it. There are many options, but the one I found easiest to set up and run on an Intel Mac is Ollama.

At the time of writing, Ollama runs only on Mac and Linux, with Windows support planned. In the meantime, you can use WSL (Windows Subsystem for Linux) to run a Linux shell on Windows.

Ollama not only lets you download and run a variety of open source models, but also exposes the model on a local port so that your Ruby code can make API calls to it. This makes it easy for Ruby developers to write applications that integrate with local models.

Get Ollama

Because Ollama is mainly command-line based, installing it on Mac and Linux is very simple. Download Ollama from https://www.php.cn/link/04c7f37f2420f0532d7f0e062ff2d5b5; installing the package takes about five minutes, after which you can run a model.

Once Ollama is set up and running, you will see the Ollama icon in your taskbar (the menu bar on a Mac). This means it is running in the background and can serve your models. To download a model, open a terminal and run the following command:

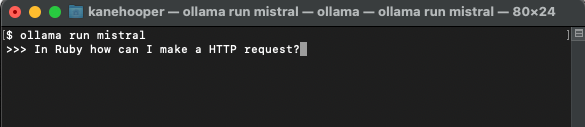

ollama run mistral

Since Mistral is about 4GB, the download will take a while. Once it finishes, Ollama automatically opens a prompt where you can chat with Mistral.

The next time you run ollama run mistral, the model starts directly, without downloading it again.
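Before wiring Ruby code to the model, it can help to confirm from Ruby that Ollama is running and the model has been downloaded. Ollama's REST API has a GET /api/tags endpoint that lists local models. A minimal sketch, assuming Ollama's default address http://localhost:11434 and names returned with a tag suffix such as mistral:latest; the helper method names are hypothetical.

```ruby
require "net/http"
require "uri"
require "json"

# Assumption: Ollama is listening on its default local address.
OLLAMA_TAGS_URI = URI("http://localhost:11434/api/tags")

# Extract model names (e.g. "mistral:latest") from an /api/tags JSON body.
def local_model_names(json_body)
  JSON.parse(json_body).fetch("models", []).map { |m| m["name"] }
end

# True if a model with this base name (ignoring the ":tag" part) is installed.
def model_installed?(names, base_name)
  names.any? { |name| name.split(":").first == base_name }
end

# Ask the running Ollama server which models it has.
# Returns [] on a non-success status or if the connection is refused.
def fetch_local_models(uri = OLLAMA_TAGS_URI)
  response = Net::HTTP.get_response(uri)
  response.is_a?(Net::HTTPSuccess) ? local_model_names(response.body) : []
rescue Errno::ECONNREFUSED
  []
end

# Usage (requires Ollama to be running):
#   names = fetch_local_models
#   puts model_installed?(names, "mistral") ? "mistral is ready" : "run: ollama run mistral"
```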

Customized model

Much as you can create a custom GPT in OpenAI, Ollama lets you customize a base model. Here we will create a simple custom model; for more detailed examples, refer to Ollama's online documentation. First, create a Modelfile (model file) and add the following text to it:

FROM mistral
# Set the temperature to set the randomness or creativity of the response
PARAMETER temperature 0.3
# Set the system message
SYSTEM """
You are an expert Ruby developer. You will be asked questions about the Ruby programming language. You will provide an explanation along with code examples.
"""
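If you prefer to keep the model definition alongside your Ruby code, the Modelfile can also be generated by a short script. This is a hypothetical helper of our own, not an Ollama feature; it emits only the FROM, PARAMETER temperature and SYSTEM directives used in the Modelfile above.

```ruby
# Build the text of an Ollama Modelfile from a few settings (hypothetical helper).
# Only the FROM, PARAMETER temperature and SYSTEM directives are produced.
def build_modelfile(base:, temperature:, system_prompt:)
  <<~MODELFILE
    FROM #{base}
    # Set the temperature to set the randomness or creativity of the response
    PARAMETER temperature #{temperature}
    # Set the system message
    SYSTEM """
    #{system_prompt}
    """
  MODELFILE
end

modelfile = build_modelfile(
  base: "mistral",
  temperature: 0.3,
  system_prompt: "You are an expert Ruby developer. You will be asked questions " \
                 "about the Ruby programming language. You will provide an " \
                 "explanation along with code examples."
)

# To use it, write the file and then run `ollama create` from a terminal:
#   File.write("Modelfile", modelfile)
puts modelfile
```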


Next, you can run the following command on the terminal to create a new model:

ollama create

1 |

|

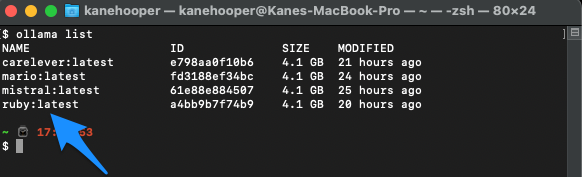

Ruby. ollama create ruby -f './Modelfile'

1 |

|

ollama list

1 |

|

At this point you can use Run the custom model with the following command:

Integrating with Ruby

Although there is no dedicated Ollama gem yet, Ruby developers can interact with the model using plain HTTP requests. Ollama runs in the background and serves the model on port 11434, so you can reach it at "https://www.php.cn/link/dcd3f83c96576c0fd437286a1ff6f1f0". The Ollama API documentation also lists endpoints for basic operations such as chat conversations and creating embeddings.
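Because the API is plain HTTP plus JSON, Ruby's standard library is enough to call any endpoint. A minimal sketch of a reusable helper, assuming Ollama is listening on its default local address, http://localhost:11434; the helper names are hypothetical and do not come from any gem.

```ruby
require "net/http"
require "uri"
require "json"

# Assumption: Ollama's default local address and port.
OLLAMA_BASE = URI("http://localhost:11434")

# Build the full URI for an API path such as "/api/chat".
def ollama_uri(path)
  URI.join(OLLAMA_BASE.to_s, path)
end

# POST a Ruby hash as JSON to an Ollama endpoint and parse the JSON reply.
def post_json(path, payload, read_timeout: 120)
  uri = ollama_uri(path)
  request = Net::HTTP::Post.new(uri, "Content-Type" => "application/json")
  request.body = payload.to_json
  response = Net::HTTP.start(uri.hostname, uri.port, read_timeout: read_timeout) do |http|
    http.request(request)
  end
  raise "Ollama returned HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end

# Usage (requires Ollama to be running):
#   reply = post_json("/api/chat", { model: "ruby", messages: [...], stream: false })
```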

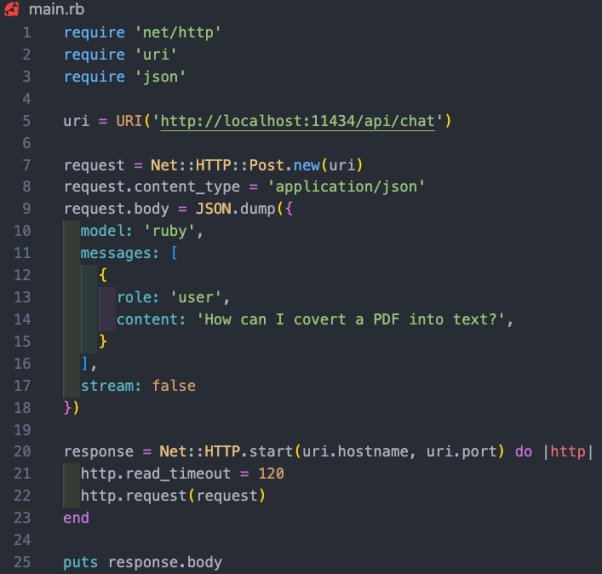

In this project, we use the /api/chat endpoint to send prompts to the AI model. The image below shows some basic Ruby code for interacting with the model:

The Ruby code snippet above does the following:

- It requires the net/http, uri and json libraries, which it uses to make HTTP requests, parse URIs and handle JSON data, respectively.

- It creates a URI object for the API endpoint (https://www.php.cn/link/dcd3f83c96576c0fd437286a1ff6f1f0/api/chat).

- It creates a new HTTP POST request with Net::HTTP::Post.new, passing the URI as the argument.

- It sets the request body to a JSON string representing a hash with three keys, "model", "messages" and "stream":

  - the model key is set to "ruby", our custom model;

  - the messages key is set to an array containing a single hash that represents the user message;

  - the stream key is set to false, so the reply arrives as one complete response rather than a stream.

- Each message hash follows the usual pattern for talking to chat models: it has a role and content. The role can be system, user or assistant:

  - system messages guide how the model should respond. We have already set this in the Modelfile.

  - user messages are our standard prompts.

  - assistant messages are the model's replies.

- It sends the HTTP request with the Net::HTTP.start method, which opens a network connection to the specified host name and port and then sends the request. The read timeout is set to 120 seconds because I'm running a 2019 Intel Mac, where responses can be slow. This should not be a problem on an appropriately sized AWS server.

- It stores the server's response in the response variable.
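The steps above can be sketched as follows. This is a reconstruction from the description, not the author's exact code: it assumes Ollama's default address http://localhost:11434, the custom ruby model created earlier, and the documented shape of a non-streaming /api/chat reply, where the answer sits under message.content. The method names are hypothetical.

```ruby
require "net/http"
require "uri"
require "json"

# Assumption: Ollama's default local address; "ruby" is the custom model
# created from the Modelfile earlier.
CHAT_URI = URI("http://localhost:11434/api/chat")

# Build the POST request described above: model, messages and stream keys.
def build_chat_request(prompt, model: "ruby", uri: CHAT_URI)
  request = Net::HTTP::Post.new(uri, "Content-Type" => "application/json")
  request.body = {
    model: model,
    # The system message lives in the Modelfile, so we only send the user turn.
    messages: [{ role: "user", content: prompt }],
    stream: false # ask for one complete JSON reply instead of a stream
  }.to_json
  request
end

# Pull the assistant's text out of a non-streaming /api/chat response body.
def assistant_reply(json_body)
  JSON.parse(json_body).dig("message", "content")
end

# Send the prompt and return the model's answer.
def ask(prompt, uri: CHAT_URI)
  request = build_chat_request(prompt, uri: uri)
  # 120-second read timeout, since local inference on older hardware can be slow.
  response = Net::HTTP.start(uri.hostname, uri.port, read_timeout: 120) do |http|
    http.request(request)
  end
  raise "Ollama returned HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  assistant_reply(response.body)
end

# Usage (requires Ollama to be running):
#   puts ask("How do I reverse an array in Ruby?")
```

Splitting request building and reply parsing out of ask keeps the network call in one place, so the other two methods can be exercised without a running server.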

Case Summary

As mentioned above, the real value of running a local AI model lies in helping companies that hold sensitive data process unstructured data such as emails or documents and extract valuable structured information from it. In the project we worked on, we trained the model on all the customer information in the customer relationship management (CRM) system. Users can now ask any question about their customers without having to sift through hundreds of records.
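One common way to turn an email into structured data with a local model is to ask for JSON and parse the reply. A minimal sketch of that pattern, assuming Ollama's /api/chat endpoint and its format: "json" option, which constrains the reply to valid JSON. The field names and helper methods are hypothetical, and since the model may still omit fields, the parser fills in nil for anything missing.

```ruby
require "json"

# Hypothetical fields we want to pull out of a customer email.
FIELDS = %w[customer_name company request_type follow_up_date].freeze

# Build a /api/chat payload that asks the model for JSON only.
# format: "json" asks Ollama to constrain the reply to valid JSON.
def extraction_payload(email_text, model: "mistral")
  instructions = "Extract the following fields from the email and reply with " \
                 "a JSON object using exactly these keys: #{FIELDS.join(', ')}. " \
                 "Use null for anything that is not mentioned."
  {
    model: model,
    messages: [
      { role: "system", content: instructions },
      { role: "user", content: email_text }
    ],
    format: "json",
    stream: false
  }
end

# Parse the model's reply into a hash that keeps only the expected keys.
def parse_extraction(content)
  data = JSON.parse(content)
  FIELDS.to_h { |key| [key, data[key]] }
rescue JSON::ParserError
  FIELDS.to_h { |key| [key, nil] } # fall back to empty fields on bad output
end
```

To use it, POST extraction_payload(email) as JSON to /api/chat and pass the reply's message content to parse_extraction.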

Translator introduction

Julian Chen (Chen Jun), 51CTO community editor, has more than ten years of experience in IT project implementation. He is skilled at managing internal and external resources and risks, and focuses on sharing knowledge and experience in network and information security.

Original title: How To Run Open-Source AI Models Locally With Ruby, by Kane Hooper
