Python and Jython: Unlocking the potential of cross-platform development

Cross-platform development is essential for building applications that run seamlessly on multiple operating systems. Python and its Java-based implementation, Jython, provide powerful tools for cross-platform development.

Python’s cross-platform compatibility

Python's reference implementation, CPython, compiles source code to bytecode and executes it on an interpreter virtual machine. Because this interpreter is available for Windows, Linux, and macOS, and can also be packaged for mobile devices, the same Python code usually runs unchanged on all of these platforms. This broad platform support makes Python a strong choice for cross-platform applications.
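As a minimal sketch of what portable Python code looks like, the function below builds a per-user configuration directory path using only the standard library. The name `user_config_dir` and the directory conventions it follows are illustrative assumptions, not a standard API; the point is that `platform.system()` and `os.path.join` let a single code base adapt to each operating system.

```python
import os
import platform
import sys


def user_config_dir(app_name):
    """Return a per-user configuration directory for app_name (illustrative conventions)."""
    system = platform.system()  # e.g. "Windows", "Linux", or "Darwin" (macOS)
    home = os.path.expanduser("~")
    if system == "Windows":
        # APPDATA is normally set on Windows; fall back to the home directory.
        base = os.environ.get("APPDATA", home)
    elif system == "Darwin":
        base = os.path.join(home, "Library", "Application Support")
    else:
        # Linux and other Unix-like systems: honour XDG_CONFIG_HOME if it is set.
        base = os.environ.get("XDG_CONFIG_HOME", os.path.join(home, ".config"))
    # os.path.join uses the correct path separator for the current OS.
    return os.path.join(base, app_name)


if __name__ == "__main__":
    print(sys.platform, platform.system())
    print(user_config_dir("myapp"))
```

On Windows the result lies under `APPDATA`, on macOS under `~/Library/Application Support`, and on Linux under `$XDG_CONFIG_HOME` or `~/.config`, with no changes to the calling code.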

In addition, Python has a rich ecosystem of third-party libraries covering everything from data processing and web development to machine learning and data science. Many of these libraries are themselves cross-platform, so applications built on them can run reliably on several operating systems, although libraries with compiled C extensions must be built for each target platform.

Jython’s Java virtual machine integration

Jython is an implementation of Python written in Java. It compiles Python source code into Java bytecode, which runs on the Java Virtual Machine (JVM). Jython therefore inherits Java's cross-platform reach: applications run on any operating system with a JVM installed. Note that the current stable Jython release line (2.7) implements the Python 2.7 language.

Because the JVM is so widely deployed, Jython fits easily into the existing Java ecosystem: Python code can import and use Java classes directly, combining the power of Java's libraries with the simplicity and flexibility of Python.
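The sketch below illustrates this integration under a stated assumption: when `sys.platform` starts with `"java"` (as it does under Jython), the code uses Java's `java.util.ArrayList` and `Collections.sort` directly from Python; on CPython, where no `java` package exists, it falls back to the built-in `sorted()`. The function name `to_sorted_list` is hypothetical, and the code sticks to syntax accepted by both Python 2.7 (Jython) and Python 3.

```python
import sys

# Under Jython, sys.platform starts with "java"; under CPython it does not.
RUNNING_ON_JVM = sys.platform.startswith("java")


def to_sorted_list(items):
    """Return the items sorted in ascending order as a Python list."""
    if RUNNING_ON_JVM:
        # Jython exposes Java packages as importable modules.
        from java.util import ArrayList, Collections
        java_list = ArrayList()
        for item in items:
            java_list.add(item)
        # Call a static method of a Java class directly from Python.
        Collections.sort(java_list)
        # Java collections are iterable in Jython, so list() converts back.
        return list(java_list)
    # CPython has no java package: use the built-in equivalent.
    return sorted(items)


print(to_sorted_list([3, 1, 2]))  # [1, 2, 3]
```

Guarding the Java-specific branch this way keeps one module importable under both interpreters.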

Comparison between Python and Jython

Performance:

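- Neither implementation is uniformly faster. CPython compiles source code to its own bytecode and interprets it, while Jython compiles to Java bytecode that the JVM's just-in-time compiler can optimize at run time. This can benefit long-running programs, but JVM startup adds overhead, so relative speed depends on the workload and is best measured.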
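One way to compare the two implementations is to run the same micro-benchmark under each interpreter. The sketch below uses the standard `timeit` module; `workload` is a hypothetical stand-in for your own code, and the repeat counts are arbitrary choices.

```python
import platform
import timeit


def workload():
    # Hypothetical CPU-bound task: sum of the squares of 0..9999.
    return sum(i * i for i in range(10000))


def best_time(func, repeat=5, number=100):
    """Return the best per-call time in seconds over `repeat` runs of `number` calls."""
    timings = timeit.repeat(func, repeat=repeat, number=number)
    return min(timings) / number


if __name__ == "__main__":
    print("%s: %.6f s per call" % (platform.python_implementation(), best_time(workload)))
```

Run the script once under CPython and once under Jython. Taking the minimum of several repeats reduces noise from other processes, but a short benchmark may not give the JVM's JIT compiler time to warm up.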

Memory usage:

- Jython typically uses more memory than CPython because of the overhead of the JVM itself.

Portability:

- Both Python and Jython have excellent portability, but Jython's JVM dependency may limit its use in some embedded systems.

Integration:

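- Jython can import and call Java classes directly, whereas CPython needs a bridge library (such as JPype or Py4J) to work with Java code. Conversely, Jython cannot load CPython C extension modules, so libraries such as NumPy are unavailable to it.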
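Since Jython cannot load C extension modules, code meant to run on both implementations can treat such libraries as optional. This minimal sketch uses NumPy as the example dependency and a hypothetical `mean` helper: it takes a fast path when NumPy imports successfully and otherwise falls back to pure Python.

```python
# NumPy is a C extension: importable on CPython (if installed) but not on Jython.
try:
    import numpy as np
except ImportError:
    np = None


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    if np is not None:
        # Fast path: delegate to NumPy when it is available.
        return float(np.mean(values))
    # Portable pure-Python fallback that works on CPython and Jython alike.
    return sum(values) / float(len(values))


print(mean([1, 2, 3, 4]))  # 2.5
```

Both branches return the same result, so callers do not need to know which implementation they are running on.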

Advantages of cross-platform development

- Code reuse: The same code can run on multiple platforms, saving time and effort.

- Uniform user experience: Applications can offer a consistent experience across all supported platforms.

- Market expansion: Cross-platform apps can reach a wider audience.

- Easier maintenance: A single code base serves multiple operating systems, which simplifies maintenance.

- Development efficiency: One development environment and toolchain for all targets improves productivity.

Use cases

Python and Jython have a wide range of use cases in cross-platform development, including:

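- Web applications

- Desktop applications

- Data science and machine learning applications (mainly with CPython, since key libraries such as NumPy rely on C extensions that Jython cannot load)

- Scripting and automation

- Game development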

In conclusion

Python and Jython open up substantial possibilities for cross-platform development: Python runs on all major operating systems, and Jython brings Python to any platform with a JVM while giving direct access to Java libraries. Both let developers build reliable, maintainable, user-friendly applications from a single code base, helping them reach more users with a consistent experience. As cross-platform development continues to grow, Python and Jython are likely to remain valuable tools in this space.

The above is the detailed content of Python and Jython: Unlocking the potential of cross-platform development. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Detailed steps for cleaning memory in Xiaohongshu

Apr 26, 2024 am 10:43 AM

Detailed steps for cleaning memory in Xiaohongshu

Apr 26, 2024 am 10:43 AM

1. Open Xiaohongshu, click Me in the lower right corner 2. Click the settings icon, click General 3. Click Clear Cache

What to do if your Huawei phone has insufficient memory (Practical methods to solve the problem of insufficient memory)

Apr 29, 2024 pm 06:34 PM

What to do if your Huawei phone has insufficient memory (Practical methods to solve the problem of insufficient memory)

Apr 29, 2024 pm 06:34 PM

Insufficient memory on Huawei mobile phones has become a common problem faced by many users, with the increase in mobile applications and media files. To help users make full use of the storage space of their mobile phones, this article will introduce some practical methods to solve the problem of insufficient memory on Huawei mobile phones. 1. Clean cache: history records and invalid data to free up memory space and clear temporary files generated by applications. Find "Storage" in the settings of your Huawei phone, click "Clear Cache" and select the "Clear Cache" button to delete the application's cache files. 2. Uninstall infrequently used applications: To free up memory space, delete some infrequently used applications. Drag it to the top of the phone screen, long press the "Uninstall" icon of the application you want to delete, and then click the confirmation button to complete the uninstallation. 3.Mobile application to

What are the c++ open source libraries?

Apr 22, 2024 pm 05:48 PM

What are the c++ open source libraries?

Apr 22, 2024 pm 05:48 PM

C++ provides a rich set of open source libraries covering the following functions: data structures and algorithms (Standard Template Library) multi-threading, regular expressions (Boost) linear algebra (Eigen) graphical user interface (Qt) computer vision (OpenCV) machine learning (TensorFlow) Encryption (OpenSSL) Data compression (zlib) Network programming (libcurl) Database management (sqlite3)

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

Local fine-tuning of DeepSeek class models faces the challenge of insufficient computing resources and expertise. To address these challenges, the following strategies can be adopted: Model quantization: convert model parameters into low-precision integers, reducing memory footprint. Use smaller models: Select a pretrained model with smaller parameters for easier local fine-tuning. Data selection and preprocessing: Select high-quality data and perform appropriate preprocessing to avoid poor data quality affecting model effectiveness. Batch training: For large data sets, load data in batches for training to avoid memory overflow. Acceleration with GPU: Use independent graphics cards to accelerate the training process and shorten the training time.

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

Written in front & starting point The end-to-end paradigm uses a unified framework to achieve multi-tasking in autonomous driving systems. Despite the simplicity and clarity of this paradigm, the performance of end-to-end autonomous driving methods on subtasks still lags far behind single-task methods. At the same time, the dense bird's-eye view (BEV) features widely used in previous end-to-end methods make it difficult to scale to more modalities or tasks. A sparse search-centric end-to-end autonomous driving paradigm (SparseAD) is proposed here, in which sparse search fully represents the entire driving scenario, including space, time, and tasks, without any dense BEV representation. Specifically, a unified sparse architecture is designed for task awareness including detection, tracking, and online mapping. In addition, heavy

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

1. First, enter the Edge browser and click the three dots in the upper right corner. 2. Then, select [Extensions] in the taskbar. 3. Next, close or uninstall the plug-ins you do not need.

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

The familiar open source large language models such as Llama3 launched by Meta, Mistral and Mixtral models launched by MistralAI, and Jamba launched by AI21 Lab have become competitors of OpenAI. In most cases, users need to fine-tune these open source models based on their own data to fully unleash the model's potential. It is not difficult to fine-tune a large language model (such as Mistral) compared to a small one using Q-Learning on a single GPU, but efficient fine-tuning of a large model like Llama370b or Mixtral has remained a challenge until now. Therefore, Philipp Sch, technical director of HuggingFace

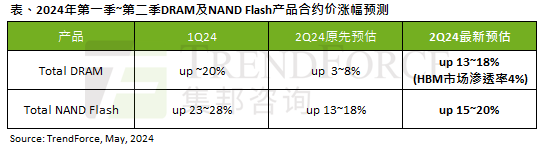

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

According to a TrendForce survey report, the AI wave has a significant impact on the DRAM memory and NAND flash memory markets. In this site’s news on May 7, TrendForce said in its latest research report today that the agency has increased the contract price increases for two types of storage products this quarter. Specifically, TrendForce originally estimated that the DRAM memory contract price in the second quarter of 2024 will increase by 3~8%, and now estimates it at 13~18%; in terms of NAND flash memory, the original estimate will increase by 13~18%, and the new estimate is 15%. ~20%, only eMMC/UFS has a lower increase of 10%. ▲Image source TrendForce TrendForce stated that the agency originally expected to continue to