Don't wait for OpenAI: the world's first Sora-like model is open source! All training details and model weights are fully disclosed, at a cost of only $10,000


Not long ago, OpenAI's Sora quickly rose to fame with its striking video generation results, standing out from other text-to-video models and drawing worldwide attention.

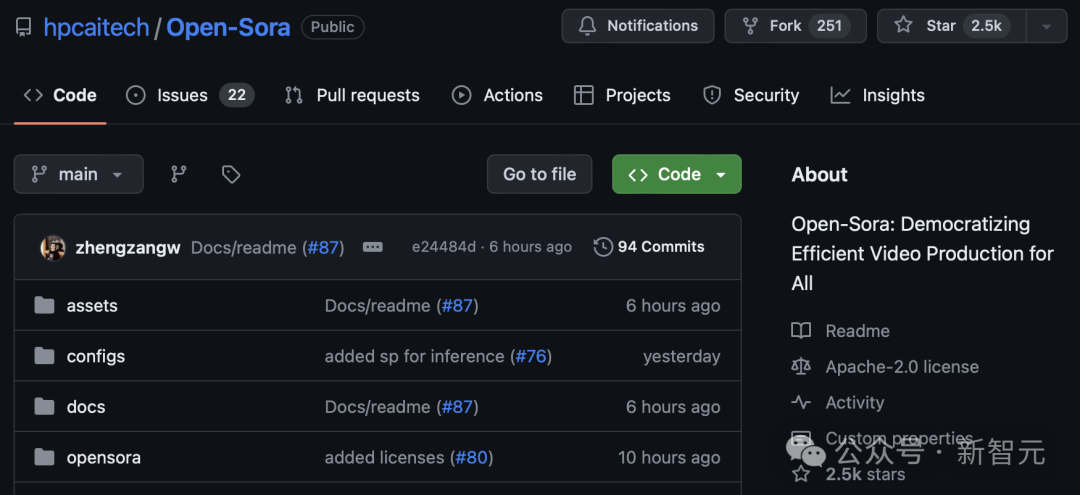

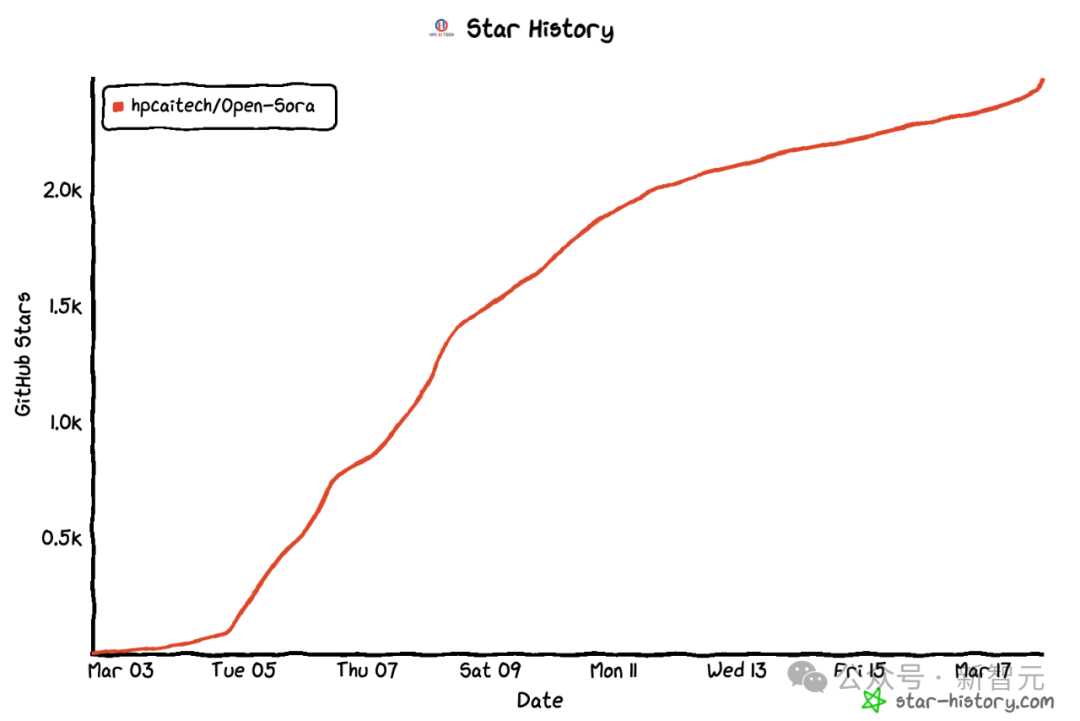

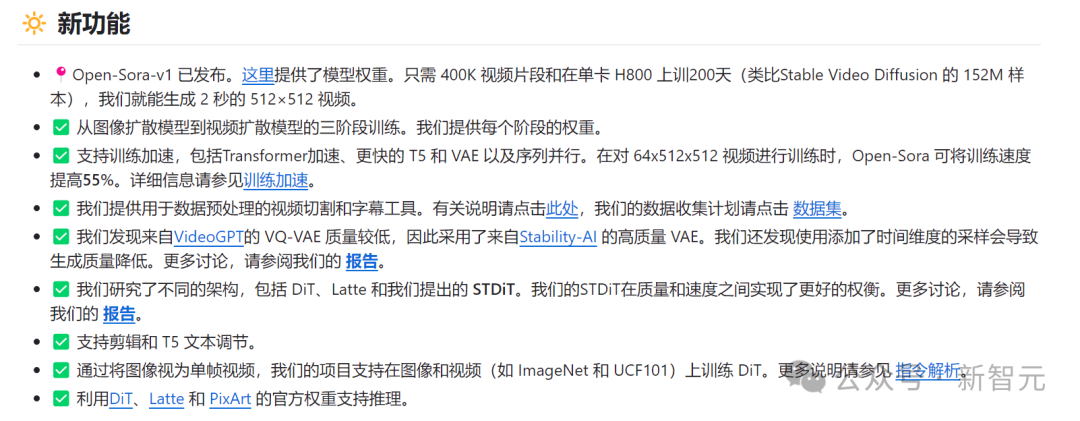

Two weeks after releasing a Sora training and inference reproduction pipeline with a 46% cost reduction, the Colossal-AI team has fully open-sourced "Open-Sora 1.0", the world's first video generation model with a Sora-like architecture. The release covers the entire training process, including data processing, all training details, and model weights, and invites AI enthusiasts around the world to help advance a new era of video creation.

Open-Sora open source address: https://github.com/hpcaitech/Open-Sora

For a sneak peek, here is a video of a bustling city generated by the "Open-Sora 1.0" model released by the Colossal-AI team.

A snapshot of the bustling city generated by Open-Sora 1.0

This is just the tip of the iceberg of the Sora reproduction effort. The Colossal-AI team has open-sourced on GitHub, free of charge, the model architecture, the trained model weights, all training details of the reproduction, the data preprocessing pipeline, demos, and a detailed hands-on tutorial for text-to-video generation.

Xinzhiyuan contacted the team and learned that they will keep updating Open-Sora and its related solutions. Interested readers can follow the Open-Sora open source community for the latest developments.

Next, we look in depth at the key aspects of the Sora reproduction plan: model architecture design, the training reproduction plan, data preprocessing, generation results, and efficient training optimization strategies.

Model architecture design

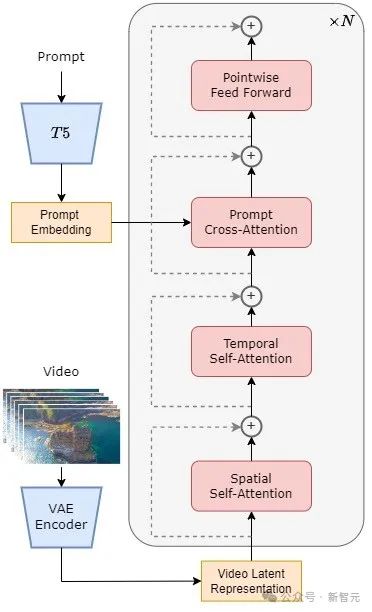

The model adopts the currently popular Diffusion Transformer (DiT) [1] architecture.

The author team builds on PixArt-α [2], a high-quality open source text-to-image model that also uses the DiT architecture, adds temporal attention layers to it, and extends it to video data.

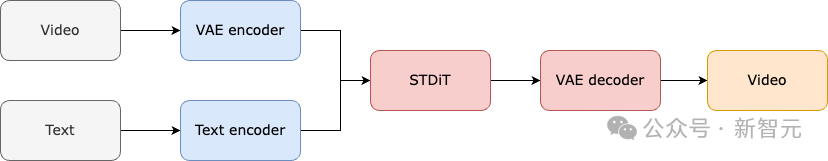

Specifically, the entire architecture includes a pre-trained VAE, a text encoder, and an STDiT (Spatial Temporal Diffusion Transformer) model that uses a spatial-temporal attention mechanism.

The structure of each STDiT layer is shown in the figure below. It stacks a one-dimensional temporal attention module serially after a two-dimensional spatial attention module to model temporal relationships.

After the temporal attention module, the cross-attention module is used to align the semantics of the text. Compared with the full attention mechanism, such a structure greatly reduces training and inference overhead.
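The serial spatial-then-temporal layout described above can be illustrated with a small sketch. It only shows which tokens attend to each other in each module; it does not implement attention itself, and the function names are hypothetical rather than taken from the Open-Sora code. A token is identified by its frame index and its spatial position within the frame.

```python
# Toy illustration of token grouping in spatial and temporal attention.
# A token is a pair (t, s): frame index t, spatial position s.
# Function names are hypothetical, chosen for this sketch only.

def spatial_groups(num_frames, tokens_per_frame):
    """Spatial attention: tokens inside the same frame attend to each other."""
    return [[(t, s) for s in range(tokens_per_frame)] for t in range(num_frames)]

def temporal_groups(num_frames, tokens_per_frame):
    """Temporal attention: tokens at the same spatial position attend across frames."""
    return [[(t, s) for t in range(num_frames)] for s in range(tokens_per_frame)]

T, S = 2, 3  # 2 frames, 3 spatial tokens per frame
print(spatial_groups(T, S))
print(temporal_groups(T, S))
```

Each spatial group mixes information within one frame, and each temporal group then mixes information along the time axis, so no single attention call ever has to cover all frames and all positions at once.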

Compared with the Latte [3] model, which also uses a spatial-temporal attention mechanism, STDiT can make better use of the weights of a pre-trained image DiT when training continues on video data.

STDiT structure diagram

The training and inference process of the entire model is as follows. It is understood that in the training phase, the pre-trained Variational Autoencoder (VAE) encoder is first used to compress the video data, and then the STDiT diffusion model is trained together with text embedding in the compressed latent space.

In the inference stage, Gaussian noise is randomly sampled in the latent space of the VAE and fed into STDiT together with the prompt embedding to obtain the denoised features. Finally, these features are passed to the VAE decoder and decoded into a video.
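The inference flow just described (sample noise, denoise with the prompt, decode) can be sketched as follows. This is a toy sketch of the data flow only: `toy_denoiser` and `toy_vae_decode` are hypothetical stand-ins for STDiT and the VAE decoder, and the update rule is a placeholder, not a real diffusion sampler.

```python
import random

def sample_latent_noise(shape, rng):
    """Draw Gaussian noise shaped like a (frames x values) video latent."""
    frames, size = shape
    return [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(frames)]

def toy_denoiser(latent, prompt_embedding, step):
    """Hypothetical stand-in for STDiT: returns a 'predicted noise'.
    Here it simply treats half of the current latent as noise."""
    return [[0.5 * v for v in frame] for frame in latent]

def toy_vae_decode(latent):
    """Hypothetical stand-in for the VAE decoder: maps latents into [0, 1]."""
    return [[max(0.0, min(1.0, 0.5 + v)) for v in frame] for frame in latent]

def generate(prompt_embedding, shape=(4, 8), num_steps=10, seed=0):
    rng = random.Random(seed)
    latent = sample_latent_noise(shape, rng)      # 1. Gaussian noise in latent space
    for step in reversed(range(num_steps)):       # 2. iterative denoising with the prompt
        noise = toy_denoiser(latent, prompt_embedding, step)
        latent = [[v - n for v, n in zip(f, nf)] for f, nf in zip(latent, noise)]
    return toy_vae_decode(latent)                 # 3. decode latents into frames

video = generate(prompt_embedding=[0.1, 0.2, 0.3])
print(len(video), "frames of", len(video[0]), "values")
```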

Model training process

Training reproduction plan

We learned from the team that Open-Sora's reproduction plan follows the Stable Video Diffusion (SVD) [4] work and includes three stages:

1. Large-scale image pre-training;

2. Large-scale video pre-training;

3. Fine-tuning on high-quality video data.

Each stage continues training from the weights of the previous stage. Compared with single-stage training from scratch, multi-stage training reaches high-quality video generation more efficiently by gradually expanding the data.

Three stages of training plan

First stage: large-scale image pre-training

The first stage uses large-scale image pre-training and a mature text-to-image model to effectively reduce the cost of video pre-training.

The author team told us that, with the abundant large-scale image data on the Internet and advanced text-to-image techniques, a high-quality text-to-image model can be trained and then used as the initialization weights for the next stage, video pre-training.

At the same time, since there is currently no high-quality spatio-temporal VAE, they used the image VAE pre-trained by the Stable Diffusion [5] model. This strategy not only ensures the superior performance of the initial model, but also significantly reduces the overall cost of video pre-training.

Second stage: large-scale video pre-training

The second stage performs large-scale video pre-training to improve the model's generalization and to capture the temporal correlations in video effectively.

We understand that this stage needs a large amount of video data to ensure diverse video subjects, which improves the model's generalization ability. The second-stage model adds a temporal attention module to the first-stage text-to-image model to learn temporal relationships in videos.

The remaining modules are the same as in the first stage and load the first-stage weights as initialization, while the output of the temporal attention module is initialized to zero for faster and more efficient convergence.
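Why zero initialization helps can be seen in a small sketch. It assumes, as is common in transformer blocks but not stated in the article, that the temporal attention module sits on a residual branch with an output projection. If that projection starts at zero, the new branch contributes nothing at first, so the video model initially behaves exactly like the pre-trained image model. All names below are hypothetical.

```python
def linear(x, weight, bias):
    """y = W x + b for one vector x; weight is a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def temporal_branch(x, proj_weight, proj_bias):
    """Hypothetical temporal branch: a placeholder mixing step, then an output projection."""
    mixed = [2.0 * xi + 1.0 for xi in x]  # placeholder for temporal attention
    return linear(mixed, proj_weight, proj_bias)

def block(x, proj_weight, proj_bias):
    """Assumed residual connection: output = input + temporal branch."""
    branch = temporal_branch(x, proj_weight, proj_bias)
    return [xi + yi for xi, yi in zip(x, branch)]

dim = 3
zero_weight = [[0.0] * dim for _ in range(dim)]  # zero-initialized output projection
zero_bias = [0.0] * dim

x = [0.5, -1.0, 2.0]
out = block(x, zero_weight, zero_bias)
print(out)  # identical to x: the new branch is a no-op at initialization
```

Training then moves the projection away from zero gradually, so temporal modeling is learned on top of the image model's behavior rather than disturbing it from the first step.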

The Colossal-AI team used the open source weights of PixArt-α [2] as the initialization of the second-stage STDiT model, and the T5 [6] model as the text encoder. At the same time, they used a small resolution of 256x256 for pre-training, which further increased the convergence speed and reduced training costs.

Third stage: fine-tuning on high-quality video data

The third stage fine-tunes on high-quality video data to significantly improve the quality of video generation.

The author team mentioned that the size of the video data used in the third stage is one order of magnitude less than that in the second stage, but the length, resolution and quality of the video are higher. By fine-tuning in this way, they achieved efficient scaling of video generation from short to long, from low to high resolution, and from low to high fidelity.

The author team stated that they used 64 H800 GPUs for training in the Open-Sora reproduction.

The second stage takes 2,808 GPU hours in total, roughly US$7,000. The third stage takes 1,920 GPU hours, roughly US$4,500. By a preliminary estimate, the whole training plan keeps the cost of the Open-Sora reproduction at about US$10,000.
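The quoted figures can be cross-checked with a little arithmetic. The sketch below derives the implied price per GPU hour and a rough wall-clock time per stage. The wall-clock estimate assumes all 64 GPUs are busy for the whole stage, which the article does not state.

```python
# Figures quoted in the article; the 64-GPU wall-clock estimate is an assumption.
stages = {
    "stage 2 (video pre-training)": {"gpu_hours": 2808, "cost_usd": 7000},
    "stage 3 (fine-tuning)": {"gpu_hours": 1920, "cost_usd": 4500},
}
NUM_GPUS = 64

for name, s in stages.items():
    rate = s["cost_usd"] / s["gpu_hours"]      # implied USD per GPU hour
    wall_clock = s["gpu_hours"] / NUM_GPUS     # hours if all GPUs run in parallel
    print(f"{name}: ${rate:.2f}/GPU-hour, ~{wall_clock:.1f} h on {NUM_GPUS} GPUs")

total_hours = sum(s["gpu_hours"] for s in stages.values())
total_cost = sum(s["cost_usd"] for s in stages.values())
print(f"total: {total_hours} GPU hours, ${total_cost}")
```

The two stage figures add up to 4,728 GPU hours and US$11,500, which is on the order of the roughly US$10,000 quoted for the whole plan.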

Data preprocessing

To further lower the threshold and complexity of reproducing Sora, the Colossal-AI team also provides convenient video data preprocessing scripts, so that you can easily start pre-training for a Sora reproduction. The scripts cover downloading public video datasets, segmenting long videos into short clips based on shot continuity, and generating detailed prompts with the open source large language model LLaVA [7].
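The shot-based segmentation step mentioned above can be sketched in a few lines. This is a minimal illustration, not the team's script: it assumes a dissimilarity score between consecutive frames is already available (how it is computed is left open), and it cuts wherever that score exceeds a threshold. The function name, threshold, and minimum clip length are all hypothetical.

```python
def split_into_clips(frame_diffs, threshold, min_len=2):
    """Split a video into clips at shot boundaries.

    frame_diffs[i] is a dissimilarity score between frame i and frame i + 1.
    A cut is placed wherever the score exceeds `threshold`.
    Returns (start, end) frame index pairs, with `end` exclusive.
    Clips shorter than `min_len` frames are dropped.
    """
    num_frames = len(frame_diffs) + 1
    clips, start = [], 0
    for i, diff in enumerate(frame_diffs):
        if diff > threshold:
            end = i + 1
            if end - start >= min_len:
                clips.append((start, end))
            start = end
    if num_frames - start >= min_len:
        clips.append((start, num_frames))
    return clips

diffs = [0.1, 0.2, 0.9, 0.1, 0.1, 0.8, 0.2]  # 8 frames, two sharp changes
print(split_into_clips(diffs, threshold=0.5))
```

Each resulting clip stays within one shot, which keeps the later captions consistent with what is actually on screen.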

The author team mentioned that the batch video captioning code they provide can annotate a video in 3 seconds using two GPUs, with quality close to GPT-4V. The resulting video/text pairs can be used directly for training.

With the open source code they provide on GitHub, we can easily and quickly generate the video/text pairs required for training on our own dataset, significantly lowering the technical threshold and upfront preparation needed to start a Sora reproduction project.

Video/text pair automatically generated based on data preprocessing script

Model generation results

Let's take a look at Open-Sora's actual video generation results. For example, Open-Sora can generate aerial footage of sea water lapping against rocks on a cliff coast.

It can also capture a magnificent bird's-eye view of a waterfall surging down from mountain cliffs and finally flowing into a lake.

Besides taking to the sky, it can also dive into the sea. Simply enter a prompt and let Open-Sora generate a shot of the underwater world, in which a turtle cruises leisurely over a coral reef.

Open-Sora can also show us the Milky Way with twinkling stars through time-lapse photography.

If you have more interesting ideas for video generation, you can visit the Open-Sora open source community to get the model weights and try them for free.

Link: https://github.com/hpcaitech/Open-Sora

It is worth noting that the author team mentioned on GitHub that the current version uses only 400K training data, and that the model's generation quality and ability to follow text still need improvement. For example, in the turtle video above, the generated turtle has an extra leg. Open-Sora 1.0 is also not good at generating portraits and complex images.

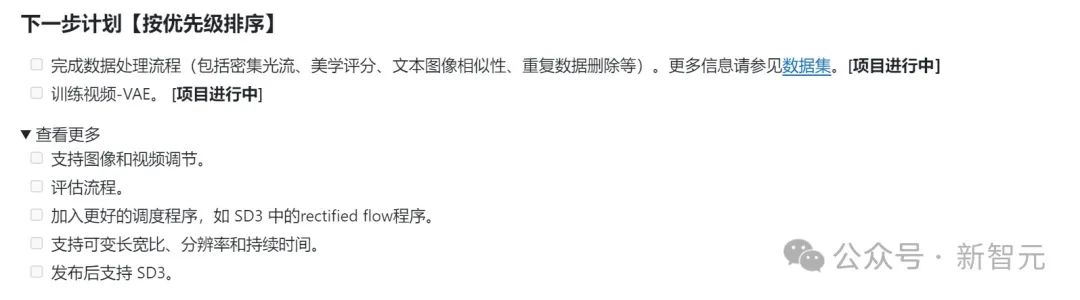

The author team listed a series of to-do items on GitHub, aiming to keep fixing existing defects and improving generation quality.

Efficient training support

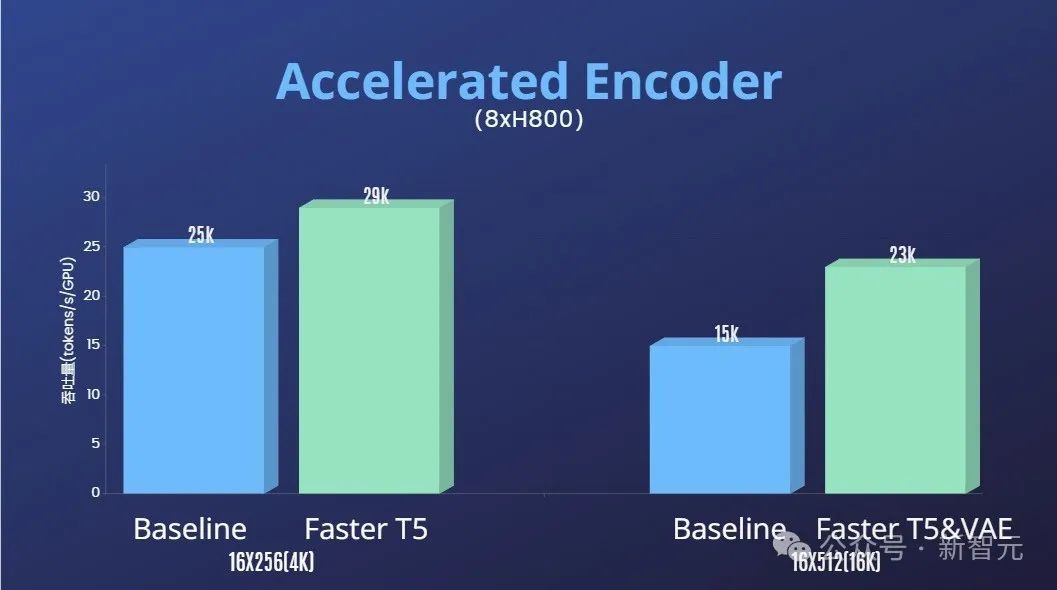

In addition to greatly lowering the technical threshold for Sora reproduction and improving the quality of video generation along multiple dimensions such as duration, resolution, and content, the author team also provides the Colossal-AI acceleration system for efficient training support.

Through efficient training strategies such as operator optimization and hybrid parallelism, a 1.55x speedup was achieved when training on 64-frame videos at 512x512 resolution.

At the same time, thanks to Colossal-AI's heterogeneous memory management system, a training task on 1-minute 1080p high-definition video can run smoothly on a single server (8 x H800).

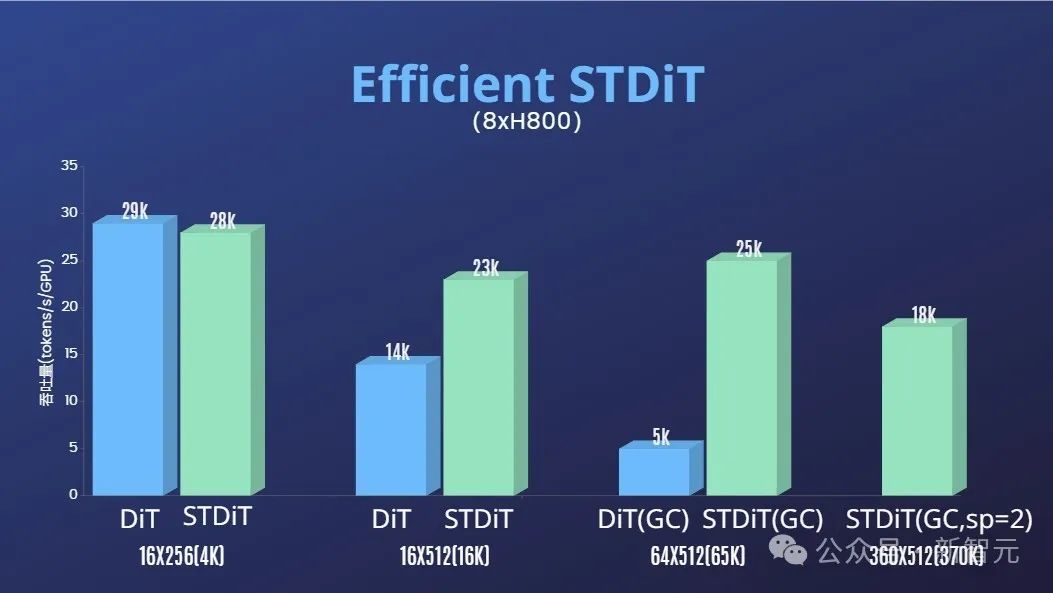

In addition, the author team's report shows that the STDiT architecture itself is also highly efficient during training.

Compared with DiT, which uses a full attention mechanism, STDiT achieves a speedup of up to 5x as the number of frames increases, which is particularly important for real-world tasks such as processing long video sequences.
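A rough count of attention score pairs shows why the gap widens with more frames. With T frames and S spatial tokens per frame, full attention scores (T·S)² query-key pairs, while spatial attention followed by temporal attention scores T·S² + S·T². The sketch below compares the two. The value S = 256 is an illustrative assumption, and the count ignores cross-attention, feed-forward layers, and everything else in the model, so the ratios are not directly comparable to the measured end-to-end speedup.

```python
def attention_pairs_full(num_frames, tokens_per_frame):
    """Query-key pairs when every token attends to every other token."""
    n = num_frames * tokens_per_frame
    return n * n

def attention_pairs_st(num_frames, tokens_per_frame):
    """Query-key pairs for spatial attention followed by temporal attention."""
    spatial = num_frames * tokens_per_frame ** 2    # per frame: S x S
    temporal = tokens_per_frame * num_frames ** 2   # per position: T x T
    return spatial + temporal

S = 256  # assumed tokens per frame, for illustration only
for T in (16, 32, 64):
    ratio = attention_pairs_full(T, S) / attention_pairs_st(T, S)
    print(f"{T} frames: full attention scores {ratio:.1f}x more query-key pairs")
```

The ratio simplifies to T·S / (S + T), so it keeps growing as frames are added, which matches the direction of the reported trend.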

A glance at the video generation results of the Open-Sora model

You are welcome to keep following the Open-Sora open source project: https://github.com/hpcaitech/Open-Sora

The author team mentioned that they will continue to maintain and optimize the Open-Sora project. They expect to use more video training data to generate higher-quality, longer video content and to support multi-resolution features, helping bring AI technology into practical use in movies, games, advertising, and other fields.
