Technology peripherals

Technology peripherals

AI

AI

The latest review of controllable image generation! Beijing University of Posts and Telecommunications has opened up 20 pages of 249 documents, covering various "conditions" in the field of Text-to-Image Diffusion.

The latest review of controllable image generation! Beijing University of Posts and Telecommunications has opened up 20 pages of 249 documents, covering various "conditions" in the field of Text-to-Image Diffusion.

The latest review of controllable image generation! Beijing University of Posts and Telecommunications has opened up 20 pages of 249 documents, covering various "conditions" in the field of Text-to-Image Diffusion.

In the process of rapid development in the field of visual generation, the diffusion model has completely changed the development trend of this field, and its introduction of text-guided generation function marks a profound change in capabilities.

However, relying solely on text to regulate these models cannot fully meet the diverse and complex needs of different applications and scenarios.

Given this shortcoming, many studies aim to control pre-trained text-to-image (T2I) models to support new conditions.

Researchers from Beijing University of Posts and Telecommunications conducted an in-depth review of the controllable generation of T2I diffusion models, outlining the theoretical basis and practical progress in this field. This review covers the latest research results and provides an important reference for the development and application of this field.

Paper: https://arxiv.org/abs/2403.04279 Code: https://github.com/PRIV-Creation/Awesome-Controllable-T2I -Diffusion-Models

Our review begins with a brief introduction to denoised diffusion probabilistic models (DDPMs) and the basics of widely used T2I diffusion models.

We further explored the control mechanism of the diffusion model and determined the effectiveness of introducing new conditions in the denoising process through theoretical analysis.

In addition, we summarized the research in this field in detail and divided it into different categories from the perspective of conditions, such as specific condition generation, multi-condition generation, and general controllability generation.

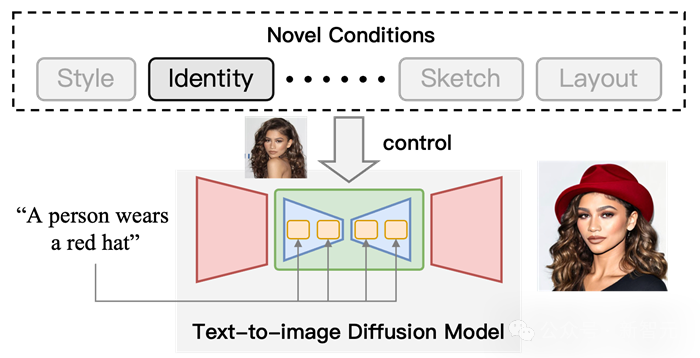

Figure 1 Schematic diagram of controllable generation using T2I diffusion model. On the basis of text conditions, add "identity" conditions to control the output results.

Classification System

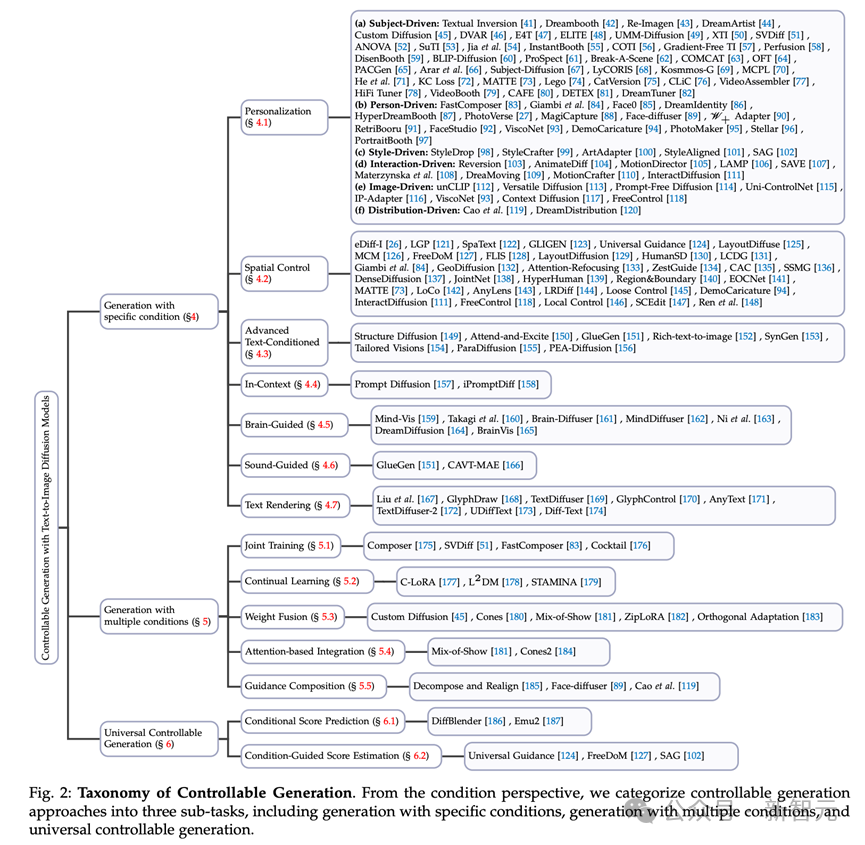

The task of conditional generation using text diffusion models represents a multifaceted and complex field. From a conditional perspective, we divide this task into three subtasks (see Figure 2).

# Figure 2 Classification of controllable generation. From a condition perspective, we divide the controllable generation method into three subtasks, including generation with specific conditions, generation with multiple conditions, and general controllable generation.

Most research is devoted to how to generate images under specific conditions, such as image-guided generation and sketch-to-image generation.

To reveal the theory and characteristics of these methods, we further classify them according to their condition types.

1. Generate using specific conditions: points out the method of introducing specific types of conditions, including customized conditions (Personalization, e.g., DreamBooth, Textual Inversion), It also includes more direct conditions, such as ControlNet series, physiological signal-to-Image

##2. Multi-condition generation: Use multiple Conditions are generated, and we subdivide this task from a technical perspective.

3. Unified controllable generation: This task is designed to be able to generate using any conditions (even any number).

How to introduce new conditions into the T2I diffusion modelFor details, please refer to the original text of the paper. The mechanisms of these methods are briefly introduced below.

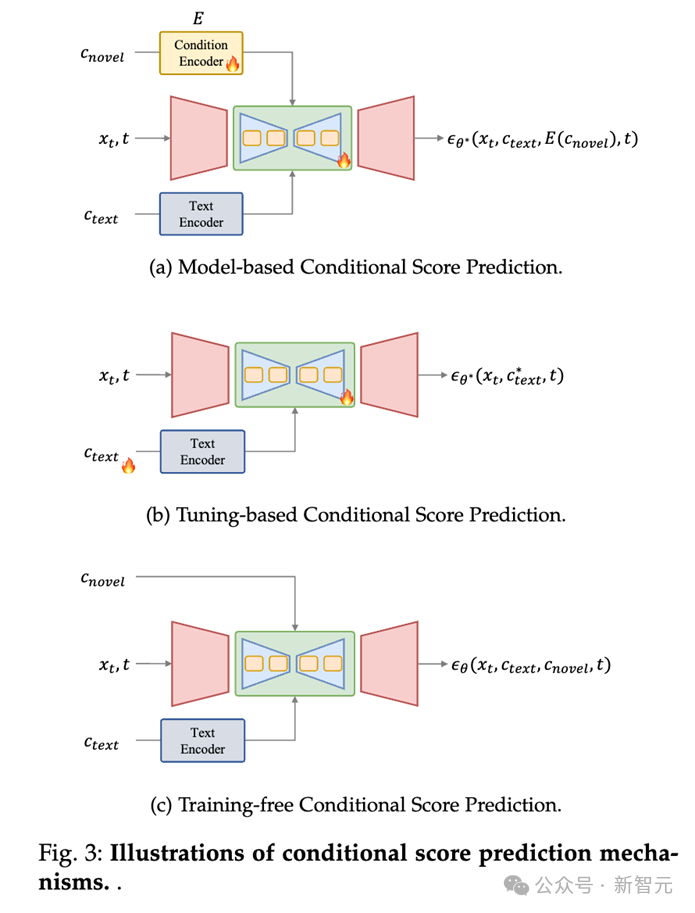

Conditional Score Prediction

In the T2I diffusion model, Utilizing a trainable model (such as UNet) to predict the probability score (i.e., noise) in the denoising process is a basic and effective method.

In the condition-based score prediction method, novel conditions are used as inputs to the prediction model to directly predict new scores.

It can be divided into three methods of introducing new conditions:

1. Based on Conditional score prediction of the model: This type of method will introduce a model used to encode novel conditions, and use the encoding features as the input of UNet (such as acting on the cross-attention layer) to predict novelty Score results under conditions;

2. Conditional score prediction based on fine-tuning: This type of method does not use an explicit condition. Instead, the parameters of the text embedding and denoising network are fine-tuned to learn information about novel conditions, and the fine-tuned weights are used to achieve controllable generation. For example, DreamBooth and Textual Inversion are such practices.

3. Conditional score prediction without training: This type of method does not require training the model, and can directly apply conditions to the model. In the prediction process, for example, in the Layout-to-Image (layout image generation) task, the attention map of the cross-attention layer can be directly modified to set the layout of the object.

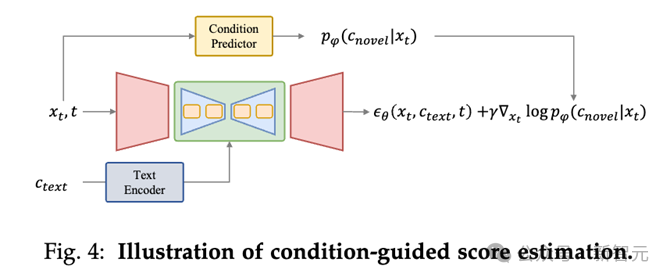

Score evaluation of conditional guidance

Score estimation of conditional guidance evaluation The method is to back-transmit the gradient through the conditional prediction model (such as the Condition Predictor above) to add conditional guidance during the denoising process.

Use specific conditions to generate

1. Personalization: Customized tasks are designed to capture and utilize concepts as generating conditions that are controllable Generating,these conditions are not easily described via text and,need to be extracted from example images. Such as DreamBooth, Texutal Inversion and LoRA.

2. Spatial Control: Because text is difficult to express structural information, that is, position and dense labels, space is used Signal-controlled text-to-image diffusion methods are an important research area in areas such as layout, human pose, and human body parsing. Methods such as ControlNet.

3. Advanced Text-Conditioned Generation: Although text plays a role in the text-to-image diffusion model The role of basic conditions, but there are still some challenges in this area.

First of all, when doing text-guided synthesis in complex texts involving multiple topics or rich descriptions, you often encounter the problem of text misalignment. In addition, these models are mainly trained on English data sets, resulting in a significant lack of multi-language generation capabilities. To address this limitation, many works have proposed innovative approaches aimed at extending the scope of these model languages.

4. In-Context Generation: In the context generation task, based on a pair of task-specific example images and text Guidance,understanding and performing specific tasks on new query,images.

5. Brain-Guided Generation: The brain-guided generation task focuses on controlling images directly from brain activity Create, for example, electroencephalogram (EEG) recordings and functional magnetic resonance imaging (fMRI).

6. Sound-Guided Generation: Generate matching images based on sound.

7. Text Rendering: Generate text in images, which can be widely used in posters, data covers, and expressions package and other application scenarios.

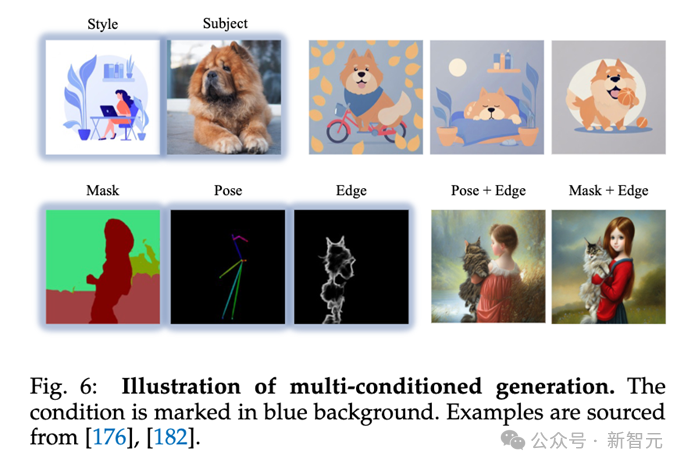

Multi-condition generation

Multi-condition generation tasks are designed to generate Generate images under various conditions, such as generating a specific person in a user-defined pose or generating a person in three personalized identities.

In this section, we provide a comprehensive overview of these methods from a technical perspective and classify them into the following categories:

1. Joint Training: Introduce multiple conditions for joint training during the training phase.

2. Continual Learning: Learn multiple conditions in sequence, and learn new conditions without forgetting the old conditions to achieve multiple Conditional generation.

3. Weight Fusion: Use parameters obtained by fine-tuning under different conditions for weight fusion, so that the model has multiple generated under conditions.

4. Attention-based Integration: Set multiple conditions through attention map (usually object) in the image to achieve multi-condition generation.

Generic condition generation

In addition to methods tailored for specific types of conditions, there are also methods designed to adapt to arbitrary conditions in image generation general method.

These methods are broadly classified into two groups based on their theoretical foundations: general conditional score prediction frameworks and general conditional guided score estimation.

1. Universal condition score prediction framework: The universal condition score prediction framework works by creating a framework that can encode any given conditions and exploit them. A framework for predicting noise at each time step during image synthesis.

This approach provides a universal solution that can be flexibly adapted to a variety of conditions. By directly integrating conditional information into the generative model, this approach allows the image generation process to be dynamically adjusted according to various conditions, making it versatile and applicable to various image synthesis scenarios.

2. General Conditional Guided Score Estimation: Other methods utilize conditionally guided score estimation to incorporate various conditions into text-to-image diffusion models middle. The main challenge lies in obtaining condition-specific guidance from latent variables during denoising.

Applications

Introducing novel conditions can be useful in multiple tasks, including image editing, image completion, image combination, text /Illustration generates 3D.

For example, in image editing, you can use a customized method to edit the cat in the picture into a cat with a specific identity. For other information, please refer to the paper.

Summary

This review delves into the field of conditional generation of text-to-image diffusion models, revealing the incorporation into the text-guided generation process. Novel conditions.

First, the author provides readers with basic knowledge, introducing the denoising diffusion probabilistic model, the famous text-to-image diffusion model, and a well-structured taxonomy. Subsequently, the authors revealed the mechanism for introducing novel conditions into the T2I diffusion model.

Then, the author summarizes the previous conditional generation methods and analyzes them from the aspects of theoretical foundation, technical progress and solution strategies.

In addition, the author explores the practical applications of controllable generation, emphasizing its important role and huge potential in the era of AI content generation.

This survey aims to comprehensively understand the current status of the field of controllable T2I generation, thereby promoting the continued evolution and expansion of this dynamic research field.

The above is the detailed content of The latest review of controllable image generation! Beijing University of Posts and Telecommunications has opened up 20 pages of 249 documents, covering various "conditions" in the field of Text-to-Image Diffusion.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Is there a free XML to PDF tool for mobile phones?

Apr 02, 2025 pm 09:12 PM

Is there a free XML to PDF tool for mobile phones?

Apr 02, 2025 pm 09:12 PM

There is no simple and direct free XML to PDF tool on mobile. The required data visualization process involves complex data understanding and rendering, and most of the so-called "free" tools on the market have poor experience. It is recommended to use computer-side tools or use cloud services, or develop apps yourself to obtain more reliable conversion effects.

How to beautify the XML format

Apr 02, 2025 pm 09:57 PM

How to beautify the XML format

Apr 02, 2025 pm 09:57 PM

XML beautification is essentially improving its readability, including reasonable indentation, line breaks and tag organization. The principle is to traverse the XML tree, add indentation according to the level, and handle empty tags and tags containing text. Python's xml.etree.ElementTree library provides a convenient pretty_xml() function that can implement the above beautification process.

How to convert XML to image using Java?

Apr 02, 2025 pm 08:36 PM

How to convert XML to image using Java?

Apr 02, 2025 pm 08:36 PM

There is no "universal" method: XML to image conversion requires selecting the appropriate strategy based on XML data and target image style. Parsing XML: Use libraries such as DOM, SAX, StAX or JAXB. Image processing: Use java.awt.image package or more advanced libraries such as ImageIO and JavaFX. Data to image mapping: Defines the mapping rules of XML nodes to image parts. Consider complex scenarios: dealing with XML errors, image scaling, and text rendering. Performance optimization: Use SAX parser or multithreading technologies.

How to verify the xml format

Apr 02, 2025 pm 10:00 PM

How to verify the xml format

Apr 02, 2025 pm 10:00 PM

XML format validation involves checking its structure and compliance with DTD or Schema. An XML parser is required, such as ElementTree (basic syntax checking) or lxml (more powerful verification, XSD support). The verification process involves parsing the XML file, loading the XSD Schema, and executing the assertValid method to throw an exception when an error is detected. Verifying the XML format also requires handling various exceptions and gaining insight into the XSD Schema language.

How to use char array in C language

Apr 03, 2025 pm 03:24 PM

How to use char array in C language

Apr 03, 2025 pm 03:24 PM

The char array stores character sequences in C language and is declared as char array_name[size]. The access element is passed through the subscript operator, and the element ends with the null terminator '\0', which represents the end point of the string. The C language provides a variety of string manipulation functions, such as strlen(), strcpy(), strcat() and strcmp().

How to set the fonts for XML conversion to images?

Apr 02, 2025 pm 08:00 PM

How to set the fonts for XML conversion to images?

Apr 02, 2025 pm 08:00 PM

Converting XML to images involves the following steps: Selecting the appropriate image processing library, such as Pillow. Use the parser to parse XML and extract font style attributes (font, font size, color). Use an image library such as Pillow to style the font and render the text. Calculate text size, create canvas, and draw text using the image library. Save the generated image file. Note that font file paths, error handling and performance optimization need further consideration.

Avoid errors caused by default in C switch statements

Apr 03, 2025 pm 03:45 PM

Avoid errors caused by default in C switch statements

Apr 03, 2025 pm 03:45 PM

A strategy to avoid errors caused by default in C switch statements: use enums instead of constants, limiting the value of the case statement to a valid member of the enum. Use fallthrough in the last case statement to let the program continue to execute the following code. For switch statements without fallthrough, always add a default statement for error handling or provide default behavior.

How to convert XML to image using C#?

Apr 02, 2025 pm 08:30 PM

How to convert XML to image using C#?

Apr 02, 2025 pm 08:30 PM

C# converts XML into images feasible, but requires designing a way to visualize data. For a simple example, for product information XML, data can be parsed and the name and price can be drawn into images using the GDI library. The steps include: parsing XML data. Create images using drawing libraries such as GDI. Set the image size according to the XML structure. Use the text drawing function to draw data onto an image. Save the image.